Submitted by:

- Amarendra Sabat

- Claudinei Daitx

- Suwei (Stream) Qi

- The NASA data set comprises NACA 0012 airfoils of different sizes, tested at various wind tunnel speeds and angles of attack.

- The span of the airfoil and the observer position were the same in all of the experiments.

- The NASA data set was obtained from a series of aerodynamic and acoustic tests of two- and three-dimensional airfoil blade sections conducted in an anechoic wind tunnel.

Relevant Papers:

- T.F. Brooks, D.S. Pope, and A.M. Marcolini. Airfoil self-noise and prediction. Technical report, NASA RP-1218, July 1989.

- K. Lau. A neural networks approach for aerofoil noise prediction. Master’s thesis, Department of Aeronautics, Imperial College of Science, Technology and Medicine (London, United Kingdom), 2006.

- R. Lopez. Neural Networks for Variational Problems in Engineering. PhD thesis, Technical University of Catalonia, 2008.

Citation:

- Dua, D. and Graff, C. (2019). UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.

Link: Airfoil Self-Noise Data Set

import os

import warnings

import pandas as pd

import seaborn as sns

import numpy as np

# To plot pretty figures

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

from IPython.display import HTML, display

# Machine learning packages

import tensorflow as tf

from sklearn.pipeline import Pipeline

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import LinearRegression, Ridge, Lasso, ElasticNet, BayesianRidge

from sklearn.svm import SVR

from sklearn.preprocessing import StandardScaler, QuantileTransformer, MaxAbsScaler

from sklearn.decomposition import PCA

from sklearn.metrics import r2_score, mean_squared_error, mean_absolute_error

from mpl_toolkits.mplot3d import Axes3D

from tensorflow import keras

# Remove all warnings in this notebook

warnings.filterwarnings('ignore')

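# tf.logging exists only in TensorFlow 1.x; the tf.compat.v1 alias also works on 2.x

tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)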

# Fix the random seed for reproducibility

np.random.seed(42)

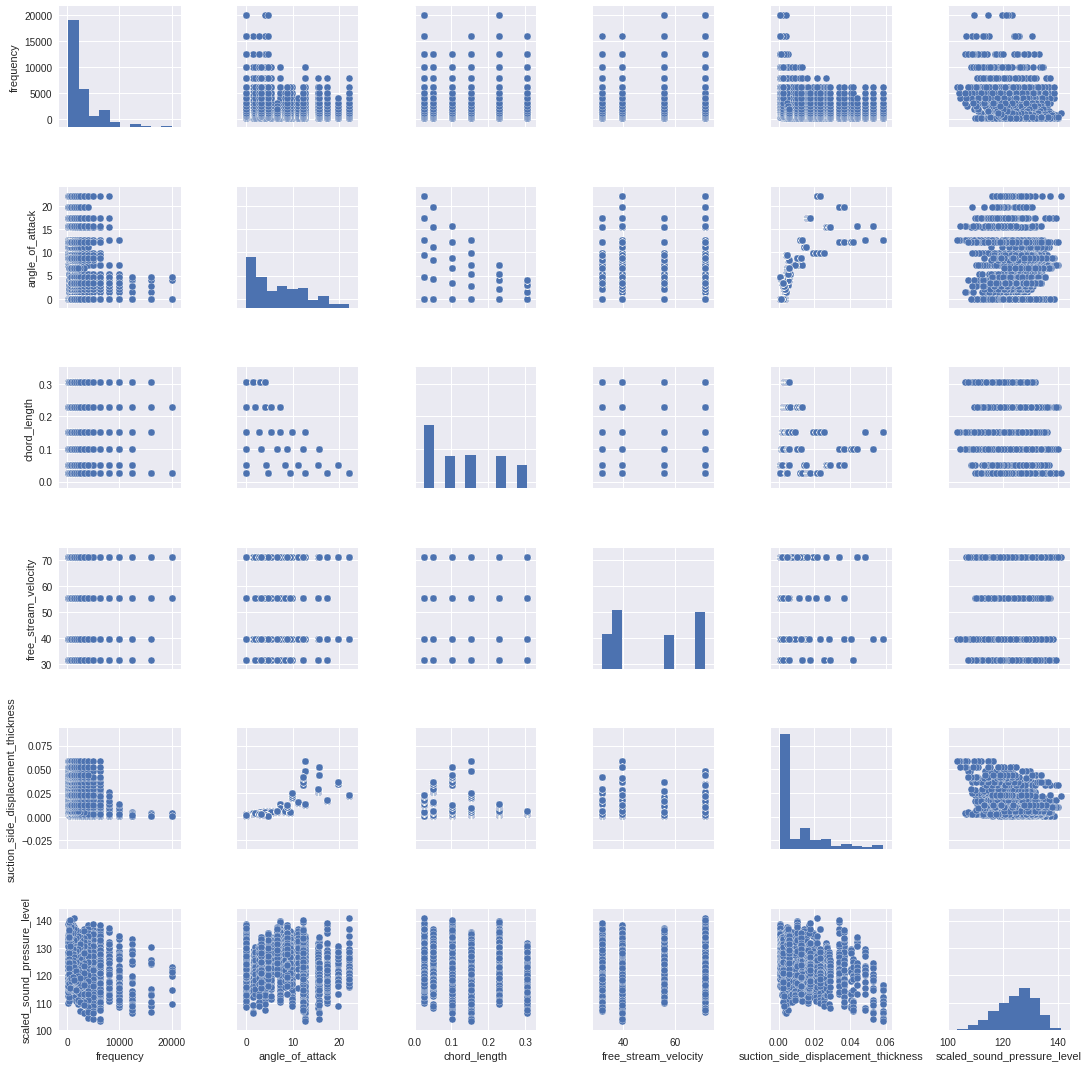

random_state = 42

The inputs are:

1. Frequency (hertz)

2. Angle of attack (degrees)

3. Chord length (meters)

4. Free-stream velocity (meters per second)

5. Suction side displacement thickness (meters)

The only output is:

6. Scaled sound pressure level (decibels)
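The cell that loads `airfoil_dataset` is not shown in this notebook. As a minimal sketch, the loader below assumes the UCI file is tab-separated with no header row (an assumption) and takes its column names from the tables in this notebook. To stay self-contained it reads a few sample rows from an in-memory string; with the real file you would pass its path to the hypothetical `load_airfoil` helper instead.

```python
import io

import pandas as pd

COLUMNS = [
    "frequency",
    "angle_of_attack",
    "chord_length",
    "free_stream_velocity",
    "suction_side_displacement_thickness",
    "scaled_sound_pressure_level",
]

# Three sample rows copied from the head() and tail() outputs in this notebook
SAMPLE = (
    "800\t0\t0.3048\t71.3\t0.002663\t126.201\n"
    "1000\t0\t0.3048\t71.3\t0.002663\t125.201\n"
    "6300\t15.6\t0.1016\t39.6\t0.052849\t104.204\n"
)


def load_airfoil(source):
    """Read tab-separated, header-less data into a DataFrame (hypothetical helper)."""
    return pd.read_csv(source, sep="\t", header=None, names=COLUMNS)


airfoil_dataset = load_airfoil(io.StringIO(SAMPLE))
print(airfoil_dataset.dtypes)
```

Naming the columns at load time keeps the later `head()`, `describe()` and feature/target split readable.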

airfoil_dataset.head()

| | frequency | angle_of_attack | chord_length | free_stream_velocity | suction_side_displacement_thickness | scaled_sound_pressure_level |
|---|---|---|---|---|---|---|
| 0 | 800 | 0.0 | 0.3048 | 71.3 | 0.002663 | 126.201 |
| 1 | 1000 | 0.0 | 0.3048 | 71.3 | 0.002663 | 125.201 |
| 2 | 1250 | 0.0 | 0.3048 | 71.3 | 0.002663 | 125.951 |
| 3 | 1600 | 0.0 | 0.3048 | 71.3 | 0.002663 | 127.591 |
| 4 | 2000 | 0.0 | 0.3048 | 71.3 | 0.002663 | 127.461 |

airfoil_dataset.tail()

| | frequency | angle_of_attack | chord_length | free_stream_velocity | suction_side_displacement_thickness | scaled_sound_pressure_level |
|---|---|---|---|---|---|---|
| 1498 | 2500 | 15.6 | 0.1016 | 39.6 | 0.052849 | 110.264 |
| 1499 | 3150 | 15.6 | 0.1016 | 39.6 | 0.052849 | 109.254 |
| 1500 | 4000 | 15.6 | 0.1016 | 39.6 | 0.052849 | 106.604 |
| 1501 | 5000 | 15.6 | 0.1016 | 39.6 | 0.052849 | 106.224 |
| 1502 | 6300 | 15.6 | 0.1016 | 39.6 | 0.052849 | 104.204 |

airfoil_dataset.describe()

| | frequency | angle_of_attack | chord_length | free_stream_velocity | suction_side_displacement_thickness | scaled_sound_pressure_level |
|---|---|---|---|---|---|---|
| count | 1503.000000 | 1503.000000 | 1503.000000 | 1503.000000 | 1503.000000 | 1503.000000 |
| mean | 2886.380572 | 6.782302 | 0.136548 | 50.860745 | 0.011140 | 124.835943 |
| std | 3152.573137 | 5.918128 | 0.093541 | 15.572784 | 0.013150 | 6.898657 |
| min | 200.000000 | 0.000000 | 0.025400 | 31.700000 | 0.000401 | 103.380000 |
| 25% | 800.000000 | 2.000000 | 0.050800 | 39.600000 | 0.002535 | 120.191000 |
| 50% | 1600.000000 | 5.400000 | 0.101600 | 39.600000 | 0.004957 | 125.721000 |
| 75% | 4000.000000 | 9.900000 | 0.228600 | 71.300000 | 0.015576 | 129.995500 |
| max | 20000.000000 | 22.200000 | 0.304800 | 71.300000 | 0.058411 | 140.987000 |

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1503 entries, 0 to 1502

Data columns (total 6 columns):

frequency 1503 non-null int64

angle_of_attack 1503 non-null float64

chord_length 1503 non-null float64

free_stream_velocity 1503 non-null float64

suction_side_displacement_thickness 1503 non-null float64

scaled_sound_pressure_level 1503 non-null float64

dtypes: float64(5), int64(1)

memory usage: 70.5 KB

- There are only four free-stream velocities:

  - 31.7 m/s

  - 39.6 m/s

  - 55.5 m/s

  - 71.3 m/s

- There are six chord lengths:

  - 2.54 cm

  - 5.08 cm

  - 10.16 cm

  - 15.24 cm

  - 22.86 cm

  - 30.48 cm
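The distinct values listed above can be read straight from the columns. The sketch below uses a small hypothetical sample whose values are taken from the tables in this notebook, so it shows only some of the velocities and chord lengths; `distinct_values` is an illustrative helper, not part of the notebook.

```python
import pandas as pd

# Hypothetical mini-sample; values taken from the tables in this notebook
sample = pd.DataFrame({
    "free_stream_velocity": [71.3, 71.3, 39.6, 39.6, 31.7],
    "chord_length": [0.3048, 0.3048, 0.1016, 0.1016, 0.0254],
})


def distinct_values(df, column):
    """Return the sorted distinct values of one column."""
    return sorted(df[column].unique().tolist())


velocities = distinct_values(sample, "free_stream_velocity")
# Chord length is stored in meters; convert to centimeters for readability
chords_cm = [round(c * 100, 2) for c in distinct_values(sample, "chord_length")]

print(velocities)
print(chords_cm)
```

Run against the full dataset, the same helper would return every velocity and chord length rather than this subset.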

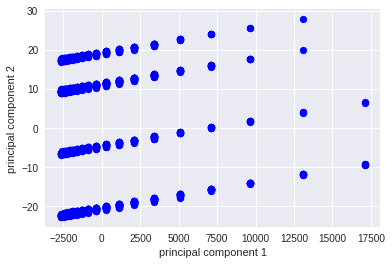

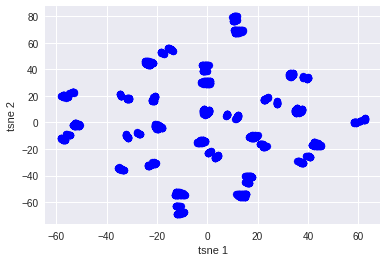

Dimensionality reduction lets you reduce the number of features in your dataset without losing much information, while keeping or improving the model’s performance. Here you can see two different dimensionality reductions, PCA and t-SNE, and both suggest that this dataset is non-linear.

In this section, I will split the original dataset into two new datasets:

- Features: all columns except the target column

- Target: only the target column
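A minimal sketch of this split, followed by a train/test split with `train_test_split`. The rows are copied from the `head()` output above, and `test_size=0.2` is an assumption, since the notebook does not state its split ratio.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

TARGET = "scaled_sound_pressure_level"

# Rows copied from the head() output; the notebook itself uses all 1503 rows
airfoil_sample = pd.DataFrame({
    "frequency": [800, 1000, 1250, 1600, 2000],
    "angle_of_attack": [0.0] * 5,
    "chord_length": [0.3048] * 5,
    "free_stream_velocity": [71.3] * 5,
    "suction_side_displacement_thickness": [0.002663] * 5,
    TARGET: [126.201, 125.201, 125.951, 127.591, 127.461],
})

# Features: every column except the target; Target: only the target column
features = airfoil_sample.drop(columns=[TARGET])
target = airfoil_sample[TARGET]

# test_size=0.2 is an assumed ratio, not taken from the notebook
X_train, X_test, y_train, y_test = train_test_split(
    features, target, test_size=0.2, random_state=42
)
print(X_train.shape, X_test.shape)
```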

The PCA projection suggests that this dataset is non-linear, so a linear model such as Linear Regression is unlikely to give good results.

The t-SNE projection shows the clusters in this dataset and likewise indicates that a non-linear model is needed to get the best predictions.
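The two projections can be produced along these lines. This is a sketch under stated assumptions: random synthetic data stands in for the five feature columns, and `perplexity=30` is an assumed setting, not one taken from the notebook.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
# Synthetic stand-in for the five feature columns (150 rows)
features = rng.normal(size=(150, 5))

# Both methods are sensitive to feature scale, so standardize first
scaled = StandardScaler().fit_transform(features)

# Linear projection onto the two directions of largest variance
pca = PCA(n_components=2, random_state=42)
pca_2d = pca.fit_transform(scaled)

# Non-linear embedding that tries to preserve local neighborhoods
tsne_2d = TSNE(n_components=2, perplexity=30, random_state=42).fit_transform(scaled)

print(pca.explained_variance_ratio_)
print(pca_2d.shape, tsne_2d.shape)
```

Plotting either 2-D array as a scatter plot colored by the target value is one way to judge by eye whether the target varies smoothly along the projected axes.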

For this scenario I'm using three feature transformers:

- Quantile transformer: Transforms the features to follow a uniform or a normal distribution. It works well with non-linear datasets.

- Max Abs scaler: Scale each feature by its maximum absolute value.

- Standard scaler: Standardize features by removing the mean and scaling to unit variance.
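As a sketch of how these three transformers can be chained, the example below wraps them in a scikit-learn `Pipeline` so that they are fitted on the training data only and then reused unchanged on the test data. The random arrays and the `n_quantiles=80` setting are assumptions made to keep the example small.

```python
import numpy as np
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MaxAbsScaler, QuantileTransformer, StandardScaler

rng = np.random.default_rng(0)
# Synthetic stand-ins for the training and test feature matrices
train_set = rng.uniform(0.0, 100.0, size=(80, 5))
test_set = rng.uniform(0.0, 100.0, size=(20, 5))

preprocess = Pipeline([
    # n_quantiles must not exceed the number of training rows
    ("quantile", QuantileTransformer(n_quantiles=80, random_state=0)),
    ("max_abs", MaxAbsScaler()),
    ("standard", StandardScaler()),
])

# Fit on the training data only, then apply the same transform to the test data
train_scaled = preprocess.fit_transform(train_set)
test_scaled = preprocess.transform(test_set)

print(train_scaled.mean(axis=0).round(6))
print(train_scaled.std(axis=0).round(6))
```

Because `StandardScaler` is the last step, each training column ends up with zero mean and unit variance; the test columns will be close to that but not exact.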

train_set = QuantileTransformer(random_state=0).fit_transform(train_set)

train_set = MaxAbsScaler().fit_transform(train_set)

train_set = StandardScaler().fit_transform(train_set)

The ModelEstimator class trains every model and makes its predictions. I use both linear and non-linear models to see the difference between them.

Machine learning models:

- Lasso

- Linear regression

- Neural network

- SVR

- Bayesian Ridge

- Ridge

- ElasticNet

Next, I choose a few models and candidate parameters to test, to get the best result for each model. I do not need to pick specific parameter values by hand, because the ModelEstimator class uses GridSearchCV to select the best estimator for each model.
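The ModelEstimator class itself is not shown here, so the following is only a sketch of the idea, not its implementation: loop over candidate models, run `GridSearchCV` on each, and keep the best parameters and score. The synthetic data and the parameter grids are hypothetical.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(42)
# Synthetic non-linear regression problem standing in for the airfoil data
X = rng.uniform(-3, 3, size=(200, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] ** 2 + rng.normal(scale=0.1, size=200)

# Hypothetical parameter grids; the "model__" prefix targets the pipeline step
candidates = {
    "Ridge": (Ridge(), {"model__alpha": [0.1, 1.0, 10.0]}),
    "SVR": (SVR(), {"model__C": [1.0, 10.0], "model__gamma": ["scale", 0.1]}),
}

best = {}
for name, (estimator, grid) in candidates.items():
    pipe = Pipeline([("scale", StandardScaler()), ("model", estimator)])
    # 5-fold cross-validation, scored by R2
    search = GridSearchCV(pipe, grid, cv=5, scoring="r2")
    search.fit(X, y)
    best[name] = (search.best_params_, search.best_score_)
    print(name, search.best_params_, round(search.best_score_, 3))
```

Putting the scaler inside the pipeline means it is refitted on each cross-validation fold, so the validation folds do not leak into the scaling.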

Another approach is to use a neural network to find the best result. In this case, I am using five hidden layers with Ridge (L2) regularization.
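The notebook builds its network with TensorFlow/Keras, and that code is not shown here. As a lightweight stand-in, the sketch below uses scikit-learn's `MLPRegressor` instead, which is a swapped-in technique rather than the author's model. It has five hidden layers, and its `alpha` parameter sets the strength of the L2 penalty. The layer widths, the `alpha` value and the synthetic data are all assumptions.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
# Synthetic non-linear regression problem standing in for the airfoil data
X = rng.uniform(-3, 3, size=(400, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] ** 2

network = make_pipeline(
    StandardScaler(),
    MLPRegressor(
        hidden_layer_sizes=(32, 32, 32, 32, 32),  # five hidden layers; widths are assumed
        alpha=1e-3,  # L2 penalty strength (assumed value)
        max_iter=2000,
        random_state=42,
    ),
)

# Train on the first 300 rows and score (R2) on the remaining 100
network.fit(X[:300], y[:300])
test_r2 = network.score(X[300:], y[300:])
print(round(test_r2, 3))
```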

- With linear models such as LinearRegression, the best R² score is about 0.46.

- With a non-linear model such as SVR, the best R² score is about 0.80, roughly 0.34 higher than the linear models.

- With a neural network, the R² score is about 0.89, roughly 0.09 higher than SVR.

On this dataset the neural network gives the best result, but that does not mean the SVR fits poorly. It means that, for this dataset and this amount of data (1503 rows), a neural network fits better than an SVR model.

| Model | R2 score | MSE score |
|---|---|---|
| LinearRegression | 0.4568062110663442 | 26.531048242586873 |
| Ridge | 0.45686912517307376 | 26.52797534809731 |
| Lasso | 0.45551532899816394 | 26.594098400972552 |
| ElasticNet | 0.45551532899816394 | 26.594098400972552 |
| BayesianRidge | 0.4568668381953528 | 26.52808705025128 |
| SVR | 0.7981376926095791 | 9.859498994362172 |
| Neural network | 0.8892580886540213 | 5.4089333351256155 |
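The two columns in this table are the coefficient of determination (R2) and the mean squared error (MSE), both available in `sklearn.metrics`. The sketch below computes them on hypothetical values and checks them against their definitions; note that R2 is a regression score, not a classification accuracy.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Hypothetical true and predicted sound pressure levels (decibels)
y_true = np.array([126.2, 125.2, 126.0, 127.6, 127.5])
y_pred = np.array([125.9, 125.6, 126.3, 127.0, 127.9])

mse = mean_squared_error(y_true, y_pred)
r2 = r2_score(y_true, y_pred)

# The same quantities computed from their definitions
residual_ss = np.sum((y_true - y_pred) ** 2)      # sum of squared errors
total_ss = np.sum((y_true - y_true.mean()) ** 2)  # spread of the true values
manual_mse = residual_ss / len(y_true)
manual_r2 = 1.0 - residual_ss / total_ss

print(mse, manual_mse)
print(r2, manual_r2)
```

A lower MSE and a higher R2 both indicate a better fit, which is why the two columns rank the models in the same order.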