Welcome to my highly opinionated repo for my GitOps home-lab. In this repo I install a basic vanilla Kubernetes cluster and use Flux to manage its state.

- Introduction

- Features

- Pre-start Checklist

- Machine Preparation

- Kubernetes and Cilium

- Flux Installation

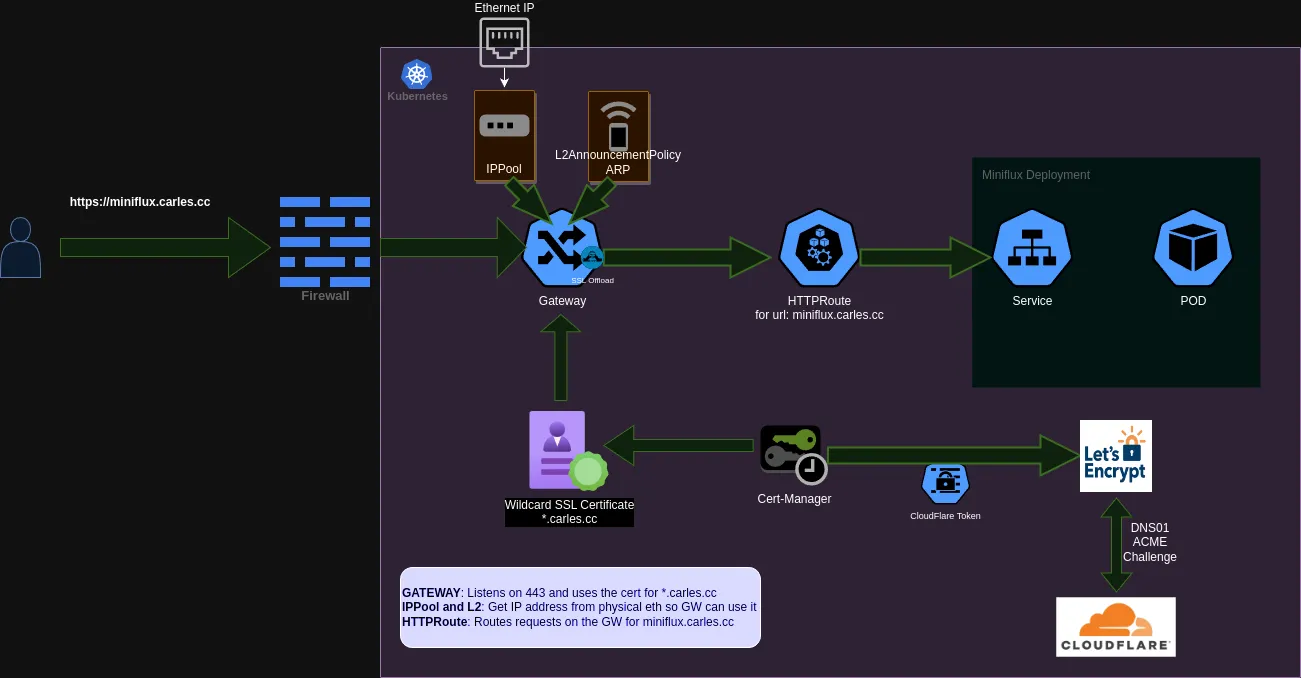

- Gateway API and SSL

- Storage: NFS

- Monitoring and SRE: Robusta, Loki and Grafana

My personal goal with this project is to have an easy and elegant way to manage the applications I want to run in my Kubernetes home-lab, while using it to keep learning the intricacies of Kubernetes and GitOps. That is why I made choices such as installing vanilla Kubernetes by hand. You won't find Ansible playbooks or other automation here to install Kubernetes, Flux and its tools. For now I choose to install everything by hand, learning and internalizing each step along the way. My goal is to strip away any abstraction on top of the basic Kubernetes components while still enjoying a useful GitOps installation.

From a technical perspective I am interested in getting as much as possible out of Cilium and eBPF. I will replace Kube-proxy, the ingress controller and MetalLB with Cilium and Gateway API.

- Documented manual installation of a Kubernetes v1.29 single-node cluster on Debian 12 Bookworm

- Opinionated implementation of Flux with strong community support

- Encrypted secrets thanks to SOPS

- SSL certificates thanks to Cloudflare, cert-manager and Let's Encrypt

- Next-gen networking thanks to Cilium and Gateway API

- A Renovate-ready repository

... and more!

Before getting started, take everything below into consideration. You must...

- run the cluster on bare metal machines or VMs within your home network — this is NOT designed for cloud environments.

- have Debian 12 freshly installed on 1 or more AMD64/ARM64 bare metal machines or VMs. Each machine will be either a control node or a worker node in your cluster.

- give your nodes unrestricted internet access — air-gapped environments won't work.

- have a domain you can manage on Cloudflare.

- be willing to commit encrypted secrets to a public GitHub repository.

- have a DNS server that supports split DNS (e.g. AdGuardHome or Pi-Hole) deployed somewhere outside your cluster ON your home network.

According to the official documentation, Kubernetes needs just 2 CPUs and 2 GB of RAM for a control-plane node, but with only that we would leave little room for our apps.
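As a rough illustration of the requirement above, the following sketch compares a machine against those minimums. The function name `meets_minimum` is made up for this example, and the memory threshold is an assumption: `MemTotal` in `/proc/meminfo` is usually a bit below the installed RAM, so the sketch uses 1700 MiB rather than a full 2 GiB.

```shell
#!/bin/sh
# Minimal sketch (not part of the original setup): check a machine against the
# minimums quoted above. Name and threshold are illustrative assumptions.

# meets_minimum CPUS MEM_KB -> exit status 0 when both values reach the minimum
meets_minimum() {
  cpus="$1"
  mem_kb="$2"
  # 1700 MiB expressed in kB: 1700 * 1024 = 1740800
  [ "$cpus" -ge 2 ] && [ "$mem_kb" -ge 1740800 ]
}

# On a Linux host, read the real values from nproc and /proc/meminfo.
if meets_minimum "$(nproc)" "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"; then
  echo "meets the minimum for a control-plane node"
else
  echo "below the minimum for a control-plane node"
fi
```

The check only tells you whether kubeadm is likely to accept the machine; how much headroom your apps need on top of that is a separate question.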

📍 For my home-lab I will only install one control-plane node, so to run apps on it I will remove the Kubernetes taint that blocks control-plane nodes from running normal Pods.

📍 I chose Debian Stable because, IMO, it is the best hassle-free, community-driven distribution focused on stability and security. Talos would be another great option.

Perform a basic server installation without a swap partition or swap file. Kubernetes and swap aren't friends yet.
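If you are unsure whether the installer created swap anyway, a small check like the one below can help. It is only a sketch: the helper name `swap_entries` is invented here, and it assumes the usual `/proc/swaps` layout of one header line followed by one line per active swap area.

```shell
#!/bin/sh
# Minimal sketch: count active swap areas. swap_entries is a hypothetical helper.

# swap_entries [FILE] -> prints the number of swap areas listed in FILE
# (defaults to /proc/swaps; the first line is a header and is skipped)
swap_entries() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

if [ -r /proc/swaps ]; then
  if [ "$(swap_entries)" -eq 0 ]; then
    echo "swap is off"
  else
    # 'sudo swapoff -a' disables swap until the next reboot; also remove
    # any swap entries from /etc/fstab to make the change permanent.
    echo "swap is still active"
  fi
fi
```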

After Debian is installed:

- [Post install] Enable sudo for your non-root user

su -
apt update
apt install -y sudo
usermod -aG sudo ${username}
echo "${username} ALL=(ALL) NOPASSWD:ALL" | tee /etc/sudoers.d/${username}
exit
newgrp sudo
sudo apt update

- [Post install] Add SSH keys (or use ssh-copy-id on the client that is connecting)

📍 First make sure your SSH keys are up to date and added to your GitHub account as instructed.

mkdir -m 700 ~/.ssh
sudo apt install -y curl
curl https://github.com/${github_username}.keys > ~/.ssh/authorized_keys
chmod 600 ~/.ssh/authorized_keys

- [Containerd] Configure Kernel modules and pre-requisites for containerd

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

sudo sysctl --system

- [Containerd] Install Containerd

sudo apt-get update
sudo apt-get install containerd

- [Containerd] Set the configuration file for Containerd:

sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml

- [Containerd] Set cgroup driver to systemd:

sudo vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
    runtime_type = "io.containerd.runc.v2"
    runtime_engine = ""
    runtime_root = ""
    privileged_without_host_devices = false
    base_runtime_spec = ""
    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
      SystemdCgroup = true

Edit the containerd configuration file and ensure that the option SystemdCgroup is set to true (a TOML boolean, without quotes).

After that restart containerd and ensure everything is up and running properly.

sudo systemctl restart containerd.service

sudo systemctl status containerd.service
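To double-check the edit without opening the file again, something like the following sketch can be used. The function name `systemd_cgroup_enabled` is hypothetical, and the pattern assumes the option sits on its own line in the form `SystemdCgroup = true`, as in the default generated config.

```shell
#!/bin/sh
# Minimal sketch: confirm that SystemdCgroup is enabled in a containerd config.
# systemd_cgroup_enabled is an illustrative helper, not a containerd tool.

# systemd_cgroup_enabled FILE -> exit status 0 if FILE has "SystemdCgroup = true"
systemd_cgroup_enabled() {
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true[[:space:]]*$' "$1"
}

config=/etc/containerd/config.toml
if [ -r "$config" ]; then
  if systemd_cgroup_enabled "$config"; then
    echo "containerd is configured for the systemd cgroup driver"
  else
    echo "SystemdCgroup is not set to true in $config"
  fi
fi
```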

Once a CRI (containerd) is installed and starting properly under systemd, it is time to install K8s. For this guide we want to provision a Kubernetes cluster without kube-proxy and use Cilium to fully replace it. For simplicity, we will use kubeadm to bootstrap the cluster. For help with installing kubeadm and for more provisioning options, please refer to the official kubeadm documentation.

After installing kubeadm, kubectl and kubelet, we run kubeadm init and check that almost everything is up and ready in our cluster:

sudo kubeadm init --skip-phases=addon/kube-proxy

Notice that we are skipping the installation of kube-proxy, which isn't the default behaviour. We do this because we want Cilium to take over the role of kube-proxy. This is a requirement for using Cilium's implementation of the Gateway API.

~ on main [!?]

❯ k get nodes

NAME STATUS ROLES AGE VERSION

bacterio NotReady control-plane 5d v1.29.3

In order to get a Ready state, we still have to install our Pod Network Add-on or CNI.

Before installing the Container Network Interface we will install the necessary CRDs for the Gateway API to work:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.0.0/config/crd/standard/gateway.networking.k8s.io_gatewayclasses.yaml

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.0.0/config/crd/standard/gateway.networking.k8s.io_gateways.yaml

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.0.0/config/crd/standard/gateway.networking.k8s.io_httproutes.yaml

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.0.0/config/crd/standard/gateway.networking.k8s.io_referencegrants.yaml

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.0.0/config/crd/experimental/gateway.networking.k8s.io_grpcroutes.yaml

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.0.0/config/crd/experimental/gateway.networking.k8s.io_tlsroutes.yaml

In this guide we will install Cilium using Helm, as described in the Cilium documentation for kube-proxy-free Kubernetes.

- First we add the Cilium Helm repository:

helm repo add cilium https://helm.cilium.io/

- Then we install Cilium, setting the right environment variables:

API_SERVER_IP=<your_api_server_ip>

# Kubeadm default is 6443

API_SERVER_PORT=<your_api_server_port>

# We are installing on a single node, so a single operator replica is necessary
helm install cilium cilium/cilium --version 1.15.1 \
  --namespace kube-system \
  --set operator.replicas=1 \
  --set kubeProxyReplacement=strict \
  --set gatewayAPI.enabled=true \
  --set k8sServiceHost=${API_SERVER_IP} \
  --set k8sServicePort=${API_SERVER_PORT} \
  --set l2announcements.enabled=true \
  --set externalIPs.enabled=true \
  --set hubble.ui.enabled=true \
  --set hubble.relay.enabled=true \
  --set k8s.requireIPv4PodCIDR=true \
  --set annotateK8sNode=true

This will install Cilium as a CNI plugin with the eBPF kube-proxy replacement, which handles Kubernetes services of type ClusterIP, NodePort and LoadBalancer, as well as services with externalIPs. The eBPF kube-proxy replacement also supports hostPort for containers, so using portmap is no longer necessary.

Finally, as a last step, verify that Cilium has come up correctly on all nodes and is ready to operate:

$ kubectl -n kube-system get pods -l k8s-app=cilium

NAME READY STATUS RESTARTS AGE

cilium-fmh8d 1/1 Running 0 10m

Note that in the above Helm configuration, kubeProxyReplacement has been set to strict mode. This means that the Cilium agent will bail out if the underlying Linux kernel support is missing.

By default, Helm sets kubeProxyReplacement=false, which only enables per-packet in-cluster load-balancing of ClusterIP services.

Cilium’s eBPF kube-proxy replacement is supported in direct routing as well as in tunneling mode.

And after that the Cluster will become Ready.

~ on main [!?]

❯ k get nodes

NAME STATUS ROLES AGE VERSION

bacterio Ready control-plane 5d v1.29.3

After deploying Cilium with the above quick-start guide, we can first validate that the Cilium agent is running in the desired mode:

$ kubectl -n kube-system exec ds/cilium -- cilium-dbg status | grep KubeProxyReplacement

KubeProxyReplacement: True [eth0 (Direct Routing), eth1]

Cilium provides Hubble for observability. It can be used through either the Hubble CLI or the UI. To access the Hubble UI, you just need to execute:

cilium hubble ui

Finally, we untaint the control-plane node to allow it to run workloads and test that it can in fact run a Pod.

kubectl taint nodes bacterio node-role.kubernetes.io/control-plane:NoSchedule-

kubectl run testpod --image=nginx

kubectl get pods

Flux is a set of continuous and progressive delivery solutions for Kubernetes that are open and extensible. In plainer language, Flux is a tool for keeping Kubernetes clusters in sync with sources of configuration (like Git repositories). That way we can use Git as the source of truth and interact with our cluster through it.

The Flux command-line interface (CLI) is used to bootstrap and interact with Flux.

curl -s https://fluxcd.io/install.sh | sudo bash

And here you can find instructions to add bash autocompletion features to the shell.

Export your GitHub personal access token and username:

export GITHUB_TOKEN=<your-token>

export GITHUB_USER=<your-username>

The kind of GITHUB_TOKEN in use here is a PAT (Personal Access Token).

We can use the Flux CLI to run a pre-flight check on our Cluster and see if we fulfill all the basic requirements to install Flux.

flux check --pre

Which should produce an output like:

► checking prerequisites

✔ kubernetes 1.29.2 >=1.25.0

✔ prerequisites checks passed

For information on how to bootstrap using a GitHub org, GitLab and other Git providers, see Bootstrapping.

flux bootstrap github \
  --owner=$GITHUB_USER \
  --repository=home-cluster \
  --branch=main \
  --path=./clusters/home-cluster \
  --personal

The output is similar to:

► connecting to github.com

✔ repository created

✔ repository cloned

✚ generating manifests

✔ components manifests pushed

► installing components in flux-system namespace

deployment "source-controller" successfully rolled out

deployment "kustomize-controller" successfully rolled out

deployment "helm-controller" successfully rolled out

deployment "notification-controller" successfully rolled out

✔ install completed

► configuring deploy key

✔ deploy key configured

► generating sync manifests

✔ sync manifests pushed

► applying sync manifests

◎ waiting for cluster sync

✔ bootstrap finished

The bootstrap command above does the following:

- Creates a git repository home-cluster on your GitHub account.

- Adds Flux component manifests to the repository.

- Deploys Flux Components to your Kubernetes Cluster.

- Configures Flux components to track the path /clusters/home-cluster/ in the repository

By default Flux installs 4 components: source-controller, kustomize-controller, helm-controller and notification-controller. You can check that all of them are running properly by looking in the flux-system namespace.

❯ k get all -n flux-system

NAME READY STATUS RESTARTS AGE

pod/helm-controller-5f964c6579-z44r9 1/1 Running 0 6d18h

pod/kustomize-controller-9c588946c-6h9fd 1/1 Running 0 6d18h

pod/notification-controller-76dc5d768-z47jw 1/1 Running 0 6d18h

pod/source-controller-6c49485888-gl6dz 1/1 Running 0 6d18h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/notification-controller ClusterIP 10.97.135.57 <none> 80/TCP 6d18h

service/source-controller ClusterIP 10.97.126.173 <none> 80/TCP 6d18h

service/webhook-receiver ClusterIP 10.107.72.214 <none> 80/TCP 6d18h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/helm-controller 1/1 1 1 6d18h

deployment.apps/kustomize-controller 1/1 1 1 6d18h

deployment.apps/notification-controller 1/1 1 1 6d18h

deployment.apps/source-controller 1/1 1 1 6d18h

NAME DESIRED CURRENT READY AGE

replicaset.apps/helm-controller-5f964c6579 1 1 1 6d18h

replicaset.apps/kustomize-controller-9c588946c 1 1 1 6d18h

replicaset.apps/notification-controller-76dc5d768 1 1 1 6d18h

replicaset.apps/source-controller-6c49485888 1 1 1 6d18h

And now, to move on to the next steps, we can clone the Git repository we just created and start deploying apps and configuration onto our cluster.

git clone git@github.com:cc250080/home-cluster.git

cd home-cluster

To structure the repository we use a mono-repo approach. The Flux documentation page Ways of structuring your repositories is very helpful here, especially the following example, which I took as a baseline.

├── apps # The applications are installed in this folder
│   └── miniflux
│       ├── kustomization.yaml
│       ├── miniflux-httproute.yaml
│       └── miniflux.yaml
├── flux-system # Flux components
│   ├── gotk-components.yaml
│   ├── gotk-sync.yaml
│   └── kustomization.yaml
└── infrastructure # Applied before apps; these YAMLs are for controllers, infrastructure and sources like Helm repositories
    ├── cert-manager
    │   ├── certificate-carlescc.yaml
    │   ├── cert-manager.crds.yaml
    │   ├── cert-manager.yaml
    │   ├── cloudflare-api-token-secret-encrypted.yaml
    │   ├── clusterIssuer.yaml
    │   ├── kustomization.yaml
    │   └── ns-cert-manager.yaml
    ├── gateway-api
    │   ├── bacterio-gw.yaml
    │   ├── ippool-l2.yaml
    │   └── kustomization.yaml
    └── sources

In the past, I loaded Kubernetes secrets by hand with kubectl apply and kept them out of any shared storage, including Git repositories. However, in my quest to follow the GitOps way, I wanted a better option with much less manual work. My goal is to build a Kubernetes deployment that can be redeployed from the Git repository at a moment's notice with the least amount of work required.

In order to store secrets safely in a public or private Git repository, we will use Mozilla’s SOPS CLI to encrypt Kubernetes secrets with Age, OpenPGP, AWS KMS, GCP KMS or Azure Key Vault.

In my case I will use age:

sudo apt install age

curl -LO https://github.com/getsops/sops/releases/download/v3.8.1/sops-v3.8.1.linux.amd64

sudo mv sops-v3.8.1.linux.amd64 /usr/local/bin/sops

sudo chmod +x /usr/local/bin/sops

# Download the checksums file, certificate and signature

curl -LO https://github.com/getsops/sops/releases/download/v3.8.1/sops-v3.8.1.checksums.txt

curl -LO https://github.com/getsops/sops/releases/download/v3.8.1/sops-v3.8.1.checksums.pem

curl -LO https://github.com/getsops/sops/releases/download/v3.8.1/sops-v3.8.1.checksums.sig

# Verify the checksums file

cosign verify-blob sops-v3.8.1.checksums.txt \
  --certificate sops-v3.8.1.checksums.pem \
  --signature sops-v3.8.1.checksums.sig \
  --certificate-identity-regexp=https://github.com/getsops \
  --certificate-oidc-issuer=https://token.actions.githubusercontent.com

age-keygen -o age.agekey

Public key: age1wnvnq64tpze4zjdmq2n44eh7jzkxf5ra7mxjvjld6cjwtaddffqqc54w23

cat age.agekey

# created: 2022-04-19T14:41:19-05:00

# public key: age1wnvnq64tpze4zjdmq2n44eh7jzkxf5ra7mxjvjld6cjwtaddffqqc54w23

AGE-SECRET-KEY-13T0N7N0W9NZKDXEFYYPWU7GN65W3UPV6LRERXUZ3ZGED8SUAAQ4SK6SMDL

As you can see, age creates both a public and a private key. I recommend that you store this secret now in your go-to key management software.
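The public key is the only part that later goes into the SOPS configuration, and it can be read back from the comment header of the key file at any time. The sketch below assumes the file layout shown above (a `# public key:` comment line); the helper name `age_public_key` is made up for illustration.

```shell
#!/bin/sh
# Minimal sketch: print the public key (recipient) stored in an age key file.
# age_public_key is a hypothetical helper; it relies on the "# public key:"
# comment line that age-keygen writes at the top of the file.

# age_public_key FILE -> prints the value after "# public key: "
age_public_key() {
  sed -n 's/^# public key: //p' "$1"
}

if [ -r age.agekey ]; then
  age_public_key age.agekey
fi
```

If the comment header has been stripped from the file, `age-keygen -y age.agekey` derives the public key from the secret key instead.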

Create a secret with the age private key. The key name must end with .agekey to be detected as an age key:

cat age.agekey |
kubectl create secret generic sops-age \
  --namespace=flux-system \
  --from-file=age.agekey=/dev/stdin

Next, make encryption easier by creating a small configuration file for SOPS. This allows you to encrypt quickly without telling SOPS which key you want to use. Create a .sops.yaml file like this one in the root directory of your Flux repository:

creation_rules:
  - encrypted_regex: '^(data|stringData)$'
    age: age1wnvnq64tpze4zjdmq2n44eh7jzkxf5ra7mxjvjld6cjwtaddffqqc54w23

Finally, we must tell Flux that it needs to decrypt secrets and we must provide the location of the decryption key. Flux is built heavily on Kustomize manifests and that's where our key configuration belongs.

Edit the file gotk-sync.yaml from the flux-system folder in the base of your Git cluster configuration:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: flux-system
  namespace: flux-system
spec:
  interval: 10m0s
  path: ./clusters/home-cluster
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
  decryption:
    provider: sops
    secretRef:
      name: sops-age

Now we are ready to encrypt a secret and push it into our GitOps repository:

sops -e cloudflare-api-token-secret.yaml | tee cloudflare-api-token-secret-encrypted.yaml

Thanks to Cilium and Gateway API we can expose Services without Ingress Controllers or MetalLB. Note that from now on all actions in the cluster will be performed using GitOps, by organizing and pushing YAMLs into the GitOps repository.

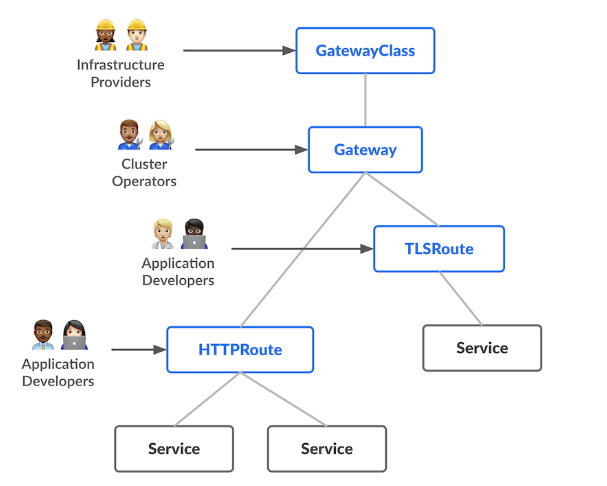

I won't go into much detail here about the role-oriented nature of Gateway API. In short, we will build some generic, more static components that can be used by all services, and then each application that we install will only need a small and simple HTTPRoute object to function.

The GatewayClass was already created when we installed Cilium; in this case our GatewayClass is cilium.

These Cilium objects reserve a pool of external IPs on our home server. They work by assigning an IP from the pool and then announcing it with ARP over the physical network interfaces. Note that I have used a regex to select the interfaces for the ARP announcements. BGP is also supported by Cilium, but that would be overkill for my small home setup.
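As a side note on the interface regex used in the policy that follows: `^enx+` matches any interface whose name starts with `enx` (the `+` applies only to the final `x`). The sketch below previews which local interfaces such a pattern would select. It is an approximation under stated assumptions: it uses `grep -E`, whose syntax agrees with Cilium's regex handling for a simple pattern like this one, and `matching_interfaces` is a made-up helper name.

```shell
#!/bin/sh
# Minimal sketch: preview which interface names a regex would select.
# matching_interfaces is a hypothetical helper, not a Cilium command.

# matching_interfaces REGEX -> reads names on stdin, prints the ones that match
matching_interfaces() {
  grep -E -- "$1" || true
}

# On a Linux host, interface names are the entries of /sys/class/net.
if [ -d /sys/class/net ]; then
  ls /sys/class/net | matching_interfaces '^enx+'
fi
```

If nothing is printed, no local interface matches and the regex in the policy should be adjusted to your own interface names (for example `^eth[0-9]+`).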

apiVersion: "cilium.io/v2alpha1"
kind: CiliumLoadBalancerIPPool
metadata:
  name: bacterio-ip-pool
  namespace: kube-system
spec:
  cidrs:
    - cidr: "10.9.8.13/32"
---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumL2AnnouncementPolicy
metadata:
  name: bacterio-l2advertisement-policy
  namespace: kube-system
spec:
  interfaces:
    - ^enx+ # host interface regex
  externalIPs: true
  loadBalancerIPs: true

The Gateway object listens on the external IP address that we reserved in the previous step, on ports 80 and 443, and does TLS offloading. For TLS it consumes a wildcard certificate managed by cert-manager. This Gateway will be used for all the workloads on my server that need HTTP or HTTPS access.

apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: bacterio-gw
  namespace: default
spec:
  gatewayClassName: cilium
  addresses:
    - value: "10.9.8.13"
  listeners:
    - name: bacterio-http
      port: 80
      protocol: HTTP
    - name: bacterio-https
      port: 443
      protocol: HTTPS
      hostname: "*.carles.cc"
      tls:
        mode: Terminate
        certificateRefs:
          - kind: Secret
            name: wildcard-carlescc
      allowedRoutes:
        namespaces:
          from: All

The HTTPRoute object is just the link between the Service of our application and the Gateway, and it is a very simple object. Here is an example of the HTTPRoute I configured for my RSS server (miniflux). Basically we specify the Gateway we want to use as the parent, the Service as the backendRef and the hostname we want to use for our application.

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: miniflux-route
  namespace: default
spec:
  parentRefs:
    - name: bacterio-gw
  hostnames:
    - "miniflux.carles.cc"
  rules:
    - backendRefs:
        - name: miniflux
          port: 8080

The NFS CSI Driver allows a Kubernetes cluster to access NFS servers on Linux. The driver is installed in the Kubernetes cluster and requires existing and configured NFS servers.

The status of the project is GA, meaning it is in General Availability and should be considered to be stable for production use.

The installation is also pretty straightforward. First we install the NFS CSI Driver:

apiVersion: helm.toolkit.fluxcd.io/v2beta2
kind: HelmRelease
metadata:
  name: csi-driver-nfs
  namespace: kube-system
spec:
  interval: 10m
  chart:
    spec:
      chart: csi-driver-nfs
      version: '4.6.*'
      sourceRef:
        kind: HelmRepository
        name: csi-driver-nfs
        namespace: default
      interval: 60m
  values:
    replicaCount: 1

And then we create a StorageClass that can be used by our applications:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
provisioner: nfs.csi.k8s.io
parameters:
  server: ofelia.carles.cc
  share: /volume1/k8snfs
reclaimPolicy: Retain
volumeBindingMode: Immediate

Here the parameters server and share point to my personal NAS server, which should be ready before performing this task.
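To show how an application might consume this StorageClass, here is a minimal sketch that prints a PersistentVolumeClaim manifest. Everything except the nfs-csi class name is an assumption made for illustration: the helper `pvc_manifest`, the claim name `example-data`, the `default` namespace, the size and the ReadWriteMany access mode.

```shell
#!/bin/sh
# Minimal sketch: generate a PersistentVolumeClaim bound to the nfs-csi class.
# pvc_manifest and its arguments are hypothetical; adapt them to your app.

# pvc_manifest NAME SIZE -> prints a PVC manifest on stdout
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-csi
  resources:
    requests:
      storage: $2
EOF
}

pvc_manifest example-data 1Gi
```

In keeping with the GitOps flow, the output would be saved next to the application's other YAMLs in the repository and committed, rather than applied by hand.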

This section is still a work in progress. I set up minimal monitoring, including an SRE application I want to explore: Robusta.

I isolated the components to keep things KISS. Grafana automatically sets up the datasources and imports a Kubernetes dashboard from the official dashboards page.

In the future I plan to improve this by using object storage for Loki, which would also allow me to use Mimir.

├── monitoring

│ ├── grafana.yaml

│ ├── kustomization.yaml

│ ├── loki.yaml

│ ├── ns-monitoring.yaml

│ ├── prometheus.yaml

│ ├── promtail.yaml

│ └── robusta.yaml