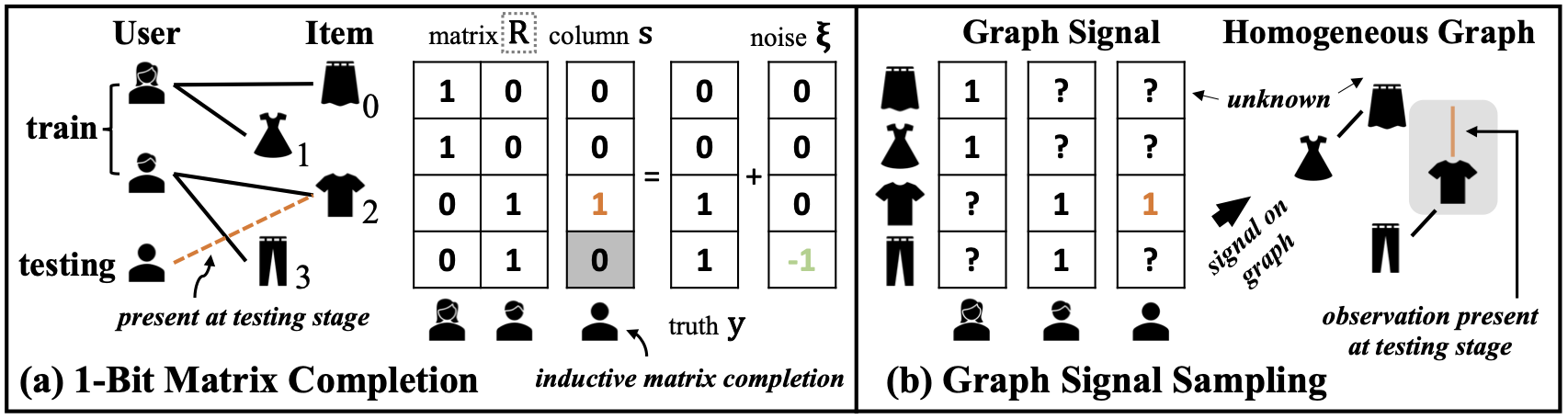

The official implementation for "Graph Signal Sampling for Inductive One-Bit Matrix Completion: a Closed-form Solution".

[2023.04.26] We release the PySpark version of our code for collaborative filtering.

We use the Netflix benchmark to evaluate model performance. The DataFrame schema is as follows:

| Feature Name | Content |
|---|---|
| uid | user identity |
| mid | movie identity |
| time | feedback time |

Below we report the HR@50, HR@100 and NDCG@100 results on the dataset described above.

| Graph Regularization | Laplacian | HR@50 | HR@100 | NDCG@100 |
|---|---|---|---|---|
| Bandlimited Norm | Hypergraph | 0.19623 | 0.29322 | 0.08761 |
| Diffusion Process | Hypergraph | 0.18990 | 0.28547 | 0.08682 |
| Random Walk | Hypergraph | 0.19512 | 0.29250 | 0.08766 |
| Inverse Cosine | Hypergraph | 0.19353 | 0.29130 | 0.08757 |
| Bandlimited Norm | Covariance | 0.20030 | 0.29517 | 0.08814 |
| Diffusion Process | Covariance | 0.19228 | 0.28798 | 0.08675 |
| Random Walk | Covariance | 0.19922 | 0.29500 | 0.08817 |
| Inverse Cosine | Covariance | 0.19830 | 0.29540 | 0.08831 |
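For readers unfamiliar with the metrics in the table above, the following is a minimal sketch of how HR@K and NDCG@K are commonly computed for a single user with binary relevance. It is not taken from this repository: the function names `hit_rate_at_k` and `ndcg_at_k` are hypothetical, and the exact evaluation protocol used to produce the reported numbers (for example, how hits are averaged across users) may differ.

```python
import math
from typing import Sequence, Set


def hit_rate_at_k(ranked: Sequence[int], relevant: Set[int], k: int) -> float:
    """Fraction of a user's held-out items that appear in the top-k list.

    Assumption: `ranked` contains no duplicate items. Some protocols instead
    use a 0/1 indicator per user; this sketch uses the recall-style variant.
    """
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked: Sequence[int], relevant: Set[int], k: int) -> float:
    """Normalized discounted cumulative gain with binary relevance."""
    # DCG: each hit at 0-based position `pos` contributes 1 / log2(pos + 2).
    dcg = sum(
        1.0 / math.log2(pos + 2)
        for pos, item in enumerate(ranked[:k])
        if item in relevant
    )
    # Ideal DCG: all relevant items (up to k) ranked at the very top.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


# Hypothetical example: movie ids ranked by predicted score for one user,
# and the set of movies that user actually interacted with in the test split.
ranked_mids = [10, 20, 30, 40]
held_out_mids = {20, 50}
hr = hit_rate_at_k(ranked_mids, held_out_mids, k=3)
ndcg = ndcg_at_k(ranked_mids, held_out_mids, k=3)
```

In this example one of the two held-out movies appears in the top 3, so `hr` is 0.5. Dataset-level scores are typically obtained by averaging the per-user values.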

- conf/: configurations for logging
- src/: code for model definition
- runme.sh: train or evaluate EasyDGL and baseline models

Download our data to the `$DATA_HOME` directory, then reproduce the above results on the Netflix benchmark:

bash runme.sh $DATA_HOME

If you find our code useful, please consider citing our work:

    @inproceedings{chen2023graph,
      title={Graph Signal Sampling for Inductive One-Bit Matrix Completion: a Closed-form Solution},
      author={Chen, Chao and Geng, Haoyu and Zeng, Gang and Han, Zhaobing and Chai, Hua and Yang, Xiaokang and Yan, Junchi},
      booktitle={Proceedings of the International Conference on Learning Representations (ICLR'23)},
      year={2023},
    }