TransReID: Transformer-based Object Re-Identification [arxiv]

The official repository for TransReID: Transformer-based Object Re-Identification, which achieves state-of-the-art performance on object re-ID, including person re-ID and vehicle re-ID.

pip install -r requirements.txt

(We use torch 1.6.0, torchvision 0.7.0, timm 0.3.2, CUDA 10.1, and a 16G or 32G V100 for training and evaluation. Note that we use torch.cuda.amp to speed up training, which requires PyTorch >= 1.6.)

mkdir data

Download the person datasets Market-1501, MSMT17, DukeMTMC-reID, and Occluded-Duke, and the vehicle datasets VehicleID and VeRi-776. Then unzip them and rename them so that the directory looks like this:

data
├── market1501
│   └── images ..
├── MSMT17
│   └── images ..
├── dukemtmcreid
│   └── images ..
├── Occluded_Duke
│   └── images ..
├── VehicleID_V1.0
│   └── images ..
└── VeRi
    └── images ..
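Before training, it can help to confirm that the folders are in place. The following is a minimal sketch, not part of the repository: the helper name `missing_dataset_dirs` is hypothetical, and it only checks that each top-level folder from the tree above exists under `data`, not what is inside it.

```python
from pathlib import Path

# Top-level folder names copied from the directory tree above.
EXPECTED_DIRS = [
    "market1501",
    "MSMT17",
    "dukemtmcreid",
    "Occluded_Duke",
    "VehicleID_V1.0",
    "VeRi",
]


def missing_dataset_dirs(root="data", expected=EXPECTED_DIRS):
    """Return the expected dataset folders that are absent under `root`.

    Hypothetical helper for illustration; the order of `expected` is kept.
    """
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]
```

Calling `missing_dataset_dirs()` from the repository root returns an empty list when every folder is present. If you only plan to train on one dataset, pass a shorter `expected` list.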

You need to download the ImageNet pretrained transformer models: ViT-Base, ViT-Small, DeiT-Small, DeiT-Base.

We utilize 1 GPU for training.

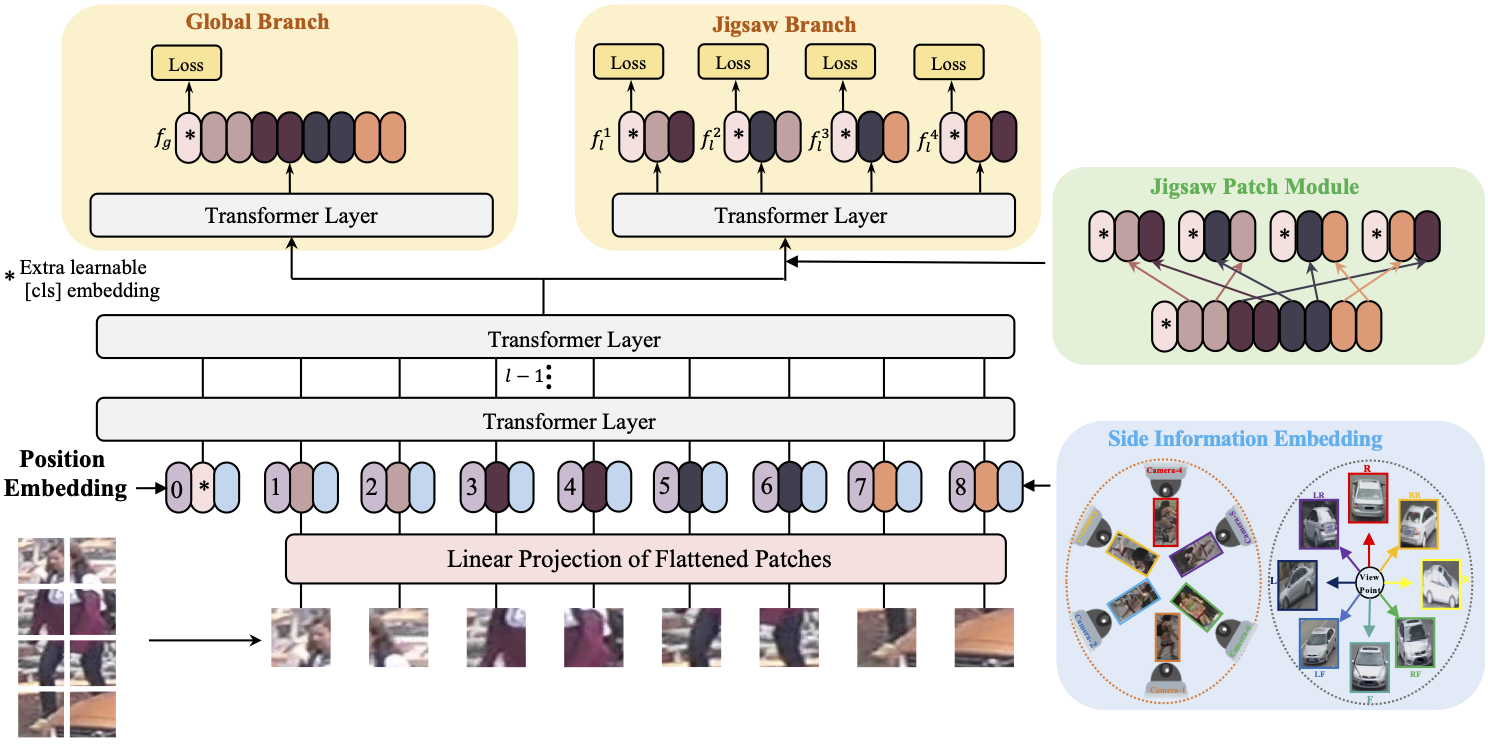

python train.py --config_file configs/transformer_base.yml MODEL.DEVICE_ID "('your device id')" MODEL.STRIDE_SIZE ${1} MODEL.SIE_CAMERA ${2} MODEL.SIE_VIEW ${3} MODEL.JPM ${4} MODEL.TRANSFORMER_TYPE ${5} OUTPUT_DIR ${OUTPUT_DIR} DATASETS.NAMES "('your dataset name')"

${1}: stride size for the pure transformer, e.g. [16, 16], [14, 14], [12, 12].

${2}: whether to use SIE with camera information, True or False.

${3}: whether to use SIE with view information, True or False.

${4}: whether to use JPM, True or False.

${5}: the transformer type, chosen from 'vit_base_patch16_224_TransReID', 'vit_small_patch16_224_TransReID', 'deit_small_patch16_224_TransReID'. (The structure of DeiT is the same as that of ViT, so you only need to change the ImageNet pretrained model.)

${OUTPUT_DIR}: folder for saving logs and checkpoints, e.g. ../logs/market1501
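If you launch several variants, assembling the command programmatically avoids quoting mistakes. Below is a minimal sketch under stated assumptions: `build_train_command` is a hypothetical helper that is not part of this repository, and it simply maps the options described above onto the same key/value pairs that appear in the command line.

```python
def build_train_command(stride, sie_camera, sie_view, jpm, transformer_type,
                        output_dir, dataset, device_id="0",
                        config_file="configs/transformer_base.yml"):
    """Assemble the train.py argument list from the options described above.

    Hypothetical helper: it returns a list suitable for subprocess.run,
    so no shell quoting is needed.
    """
    return [
        "python", "train.py",
        "--config_file", config_file,
        "MODEL.DEVICE_ID", f"('{device_id}')",
        "MODEL.STRIDE_SIZE", str(list(stride)),       # ${1}, e.g. [12, 12]
        "MODEL.SIE_CAMERA", str(bool(sie_camera)),    # ${2}
        "MODEL.SIE_VIEW", str(bool(sie_view)),        # ${3}
        "MODEL.JPM", str(bool(jpm)),                  # ${4}
        "MODEL.TRANSFORMER_TYPE", transformer_type,   # ${5}
        "OUTPUT_DIR", output_dir,
        "DATASETS.NAMES", f"('{dataset}')",
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root. The dataset name is whatever your config expects; it is left as a plain argument here rather than guessed.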

Or you can train directly with the following yml files and commands:

# DukeMTMC transformer-based baseline

python train.py --config_file configs/DukeMTMC/vit_base.yml MODEL.DEVICE_ID "('0')"

# DukeMTMC baseline + JPM

python train.py --config_file configs/DukeMTMC/vit_jpm.yml MODEL.DEVICE_ID "('0')"

# DukeMTMC baseline + SIE

python train.py --config_file configs/DukeMTMC/vit_sie.yml MODEL.DEVICE_ID "('0')"

# DukeMTMC TransReID (baseline + SIE + JPM)

python train.py --config_file configs/DukeMTMC/vit_transreid.yml MODEL.DEVICE_ID "('0')"

# DukeMTMC TransReID with stride size [12, 12]

python train.py --config_file configs/DukeMTMC/vit_transreid_stride.yml MODEL.DEVICE_ID "('0')"

# MSMT17

python train.py --config_file configs/MSMT17/vit_transreid.yml MODEL.DEVICE_ID "('0')"

# OCC_Duke

python train.py --config_file configs/OCC_Duke/vit_transreid.yml MODEL.DEVICE_ID "('0')"

# Market

python train.py --config_file configs/Market/vit_transreid.yml MODEL.DEVICE_ID "('0')"
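The stride-size variant uses a stride smaller than the patch size, so neighbouring patches overlap and the transformer processes more tokens. The arithmetic can be sketched as follows, assuming a patch size of 16 (suggested by `patch16` in the model names) and a 256x128 input, which is an assumption not stated in this README. `num_patches` is a hypothetical helper, not a function from the repository.

```python
def num_patches(height, width, patch=16, stride=(16, 16)):
    """Count sliding-window patches: floor((size - patch) / stride) + 1 per axis."""
    rows = (height - patch) // stride[0] + 1
    cols = (width - patch) // stride[1] + 1
    return rows * cols


# Assumed 256x128 input; compare the stride sizes listed for MODEL.STRIDE_SIZE.
for stride in ([16, 16], [14, 14], [12, 12]):
    print(stride, num_patches(256, 128, stride=stride))
# [16, 16] 128
# [14, 14] 162
# [12, 12] 210
```

Under these assumptions, a smaller stride gives more patches, which is why the stride variants need more memory and time per iteration.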

python test.py --config_file 'choose which config to test' MODEL.DEVICE_ID "('your device id')" TEST.WEIGHT "('your path of trained checkpoints')"

Some examples:

# DukeMTMC

python test.py --config_file configs/DukeMTMC/vit_transreid.yml MODEL.DEVICE_ID "('0')" TEST.WEIGHT '../logs/duke_vit_transreid/transformer_120.pth'

# MSMT17

python test.py --config_file configs/MSMT17/vit_transreid.yml MODEL.DEVICE_ID "('0')" TEST.WEIGHT '../logs/msmt17_vit_transreid/transformer_120.pth'

# OCC_Duke

python test.py --config_file configs/OCC_Duke/vit_transreid.yml MODEL.DEVICE_ID "('0')" TEST.WEIGHT '../logs/occ_duke_vit_transreid/transformer_120.pth'

# Market

python test.py --config_file configs/Market/vit_transreid.yml MODEL.DEVICE_ID "('0')" TEST.WEIGHT '../logs/market_vit_transreid/transformer_120.pth'
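The examples above all point TEST.WEIGHT at a file named like `transformer_120.pth`. If a run was stopped early or used a different number of epochs, a small helper can pick the most recent checkpoint. This is a minimal sketch: `latest_checkpoint` is hypothetical, and the `transformer_<epoch>.pth` naming pattern is inferred from the examples rather than confirmed from the training code.

```python
import re
from pathlib import Path

# Naming pattern inferred from the example paths above (an assumption).
_CKPT_PATTERN = re.compile(r"^transformer_(\d+)\.pth$")


def latest_checkpoint(output_dir):
    """Return the transformer_<epoch>.pth path with the highest epoch, or None."""
    best = None
    for path in Path(output_dir).glob("transformer_*.pth"):
        match = _CKPT_PATTERN.match(path.name)
        if match is None:
            continue  # skip files that do not follow the assumed pattern
        epoch = int(match.group(1))
        if best is None or epoch > best[0]:
            best = (epoch, path)
    return best[1] if best else None
```

For example, `latest_checkpoint("../logs/market_vit_transreid")` returns a `Path` that can be passed as the TEST.WEIGHT value. Epochs are compared numerically, so `transformer_120.pth` ranks above `transformer_80.pth`.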

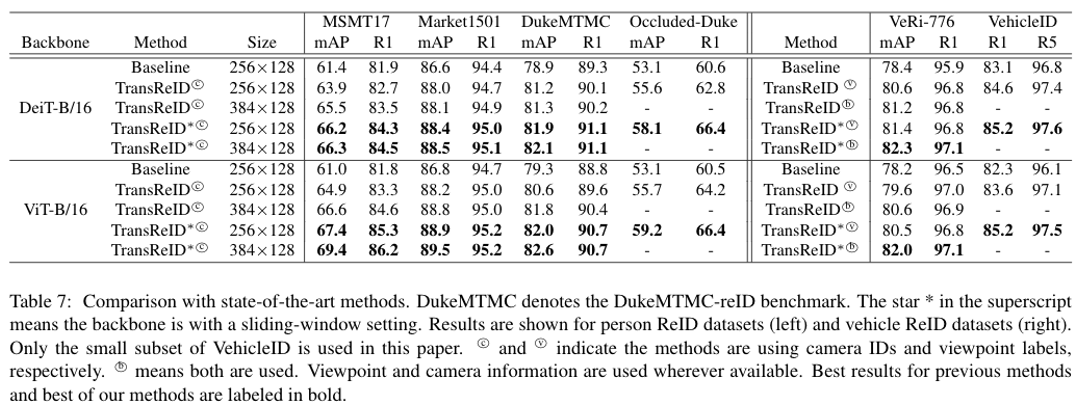

| Model | MSMT17 | Market | Duke | OCC_Duke | VeRi | VehicleID |
|---|---|---|---|---|---|---|
| baseline(ViT) | model / log | model / log | model / log | model / log | TBD | TBD |
| TransReID^*(ViT) | model / log | model / log | model / log | model / log | TBD | TBD |
| TransReID^*(DeiT) | model / log | model / log | model / log | model / log | TBD | TBD |

(We have reorganized the code and are now running each experiment one by one to make sure that you can reproduce the results in our paper. We will gradually upload the logs and models after training in the following weeks. Thanks for your attention.)

Our codebase builds on reid-strong-baseline and pytorch-image-models.

If you find this code useful for your research, please cite our paper:

@article{he2021transreid,
  title={TransReID: Transformer-based Object Re-Identification},
  author={He, Shuting and Luo, Hao and Wang, Pichao and Wang, Fan and Li, Hao and Jiang, Wei},
  journal={arXiv preprint arXiv:2102.04378},
  year={2021}
}

If you have any questions, please feel free to contact us. E-mail: shuting_he@zju.edu.cn, haoluocsc@zju.edu.cn