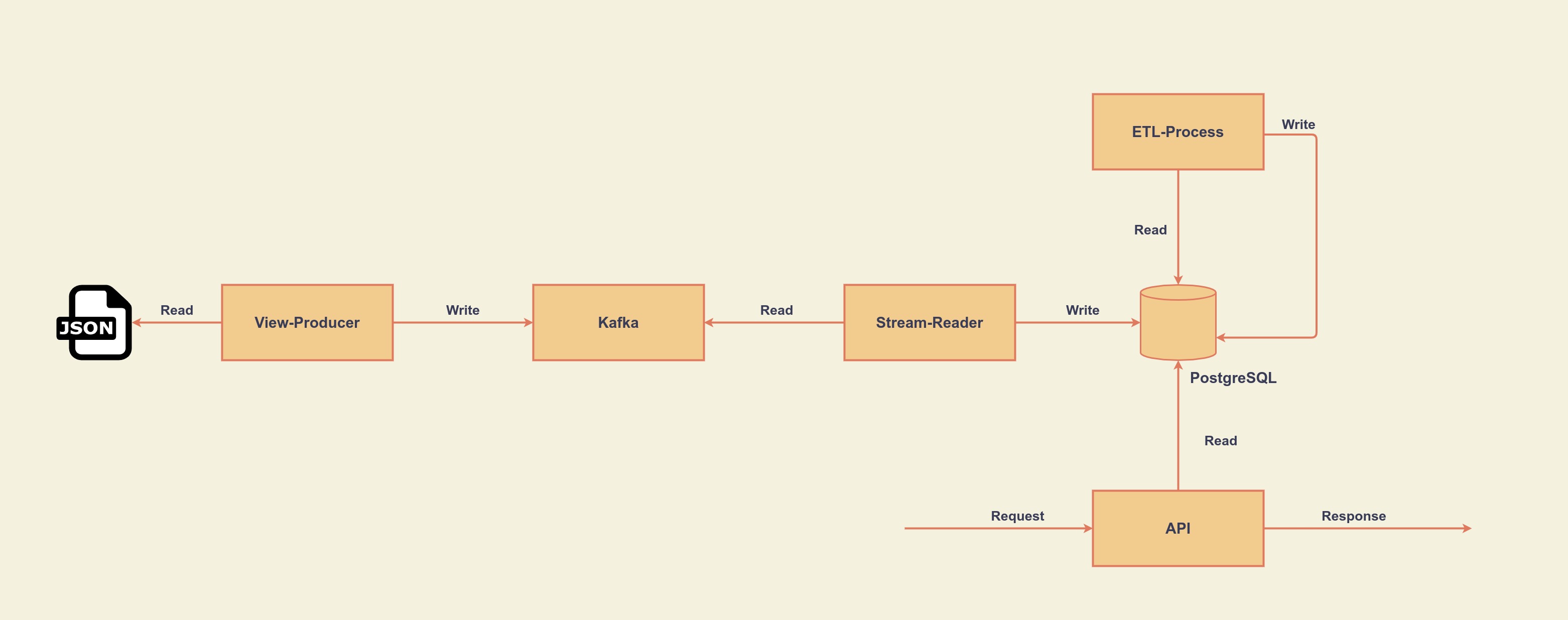

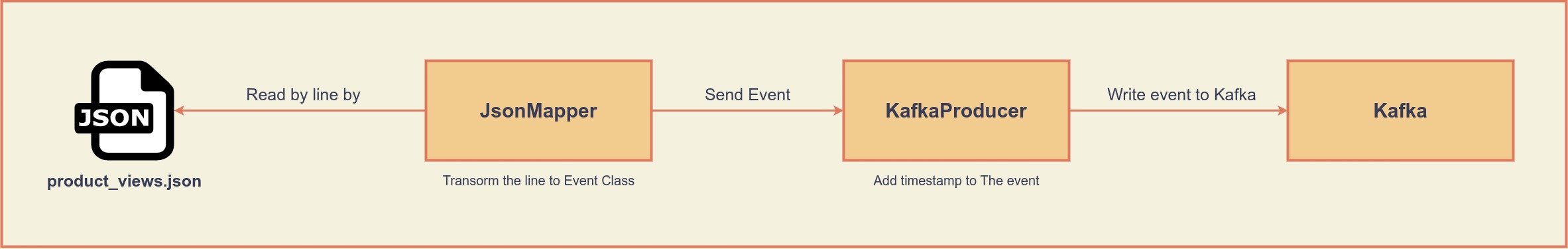

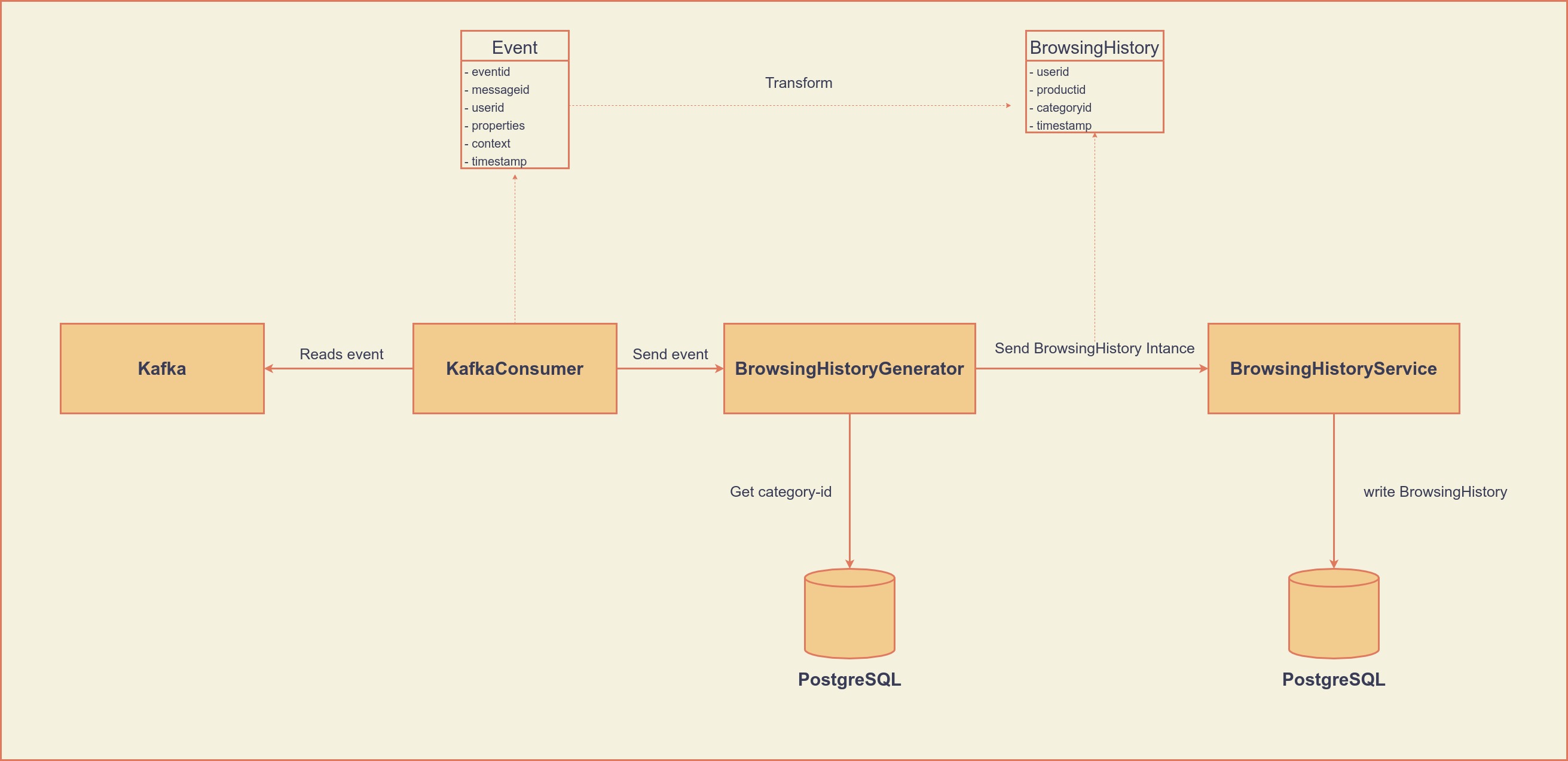

This project consists of four applications. It reads the NDJSON file named product-views.json line by line and writes each line to Kafka. It then reads those lines back from Kafka and writes them to PostgreSQL.
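The line-by-line flow described above can be sketched in a few lines of Python. This is only an illustration, not the project's code: the `send` callback stands in for a Kafka producer, the list stands in for a topic, and the field names `userid` and `productid` in the sample records are assumptions.

```python
import json
from typing import Callable, Iterable, Iterator, List


def read_ndjson(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one parsed JSON object per non-empty line."""
    for line in lines:
        line = line.strip()
        if line:  # skip blank lines between records
            yield json.loads(line)


def publish_all(lines: Iterable[str], send: Callable[[str], None]) -> int:
    """Forward every record to `send` (a stand-in for a Kafka producer).

    Returns the number of records forwarded.
    """
    count = 0
    for record in read_ndjson(lines):
        send(json.dumps(record))
        count += 1
    return count


# A plain list plays the role of the Kafka topic in this sketch.
topic: List[str] = []

# Hypothetical sample records; the real file's fields may differ.
sample = [
    '{"userid": "user-1", "productid": "product-1"}',
    "",
    '{"userid": "user-2", "productid": "product-2"}',
]
sent = publish_all(sample, topic.append)
```

With a real file, `publish_all(open("product-views.json"), send)` would stream the records without loading the whole file into memory, since file objects iterate line by line.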

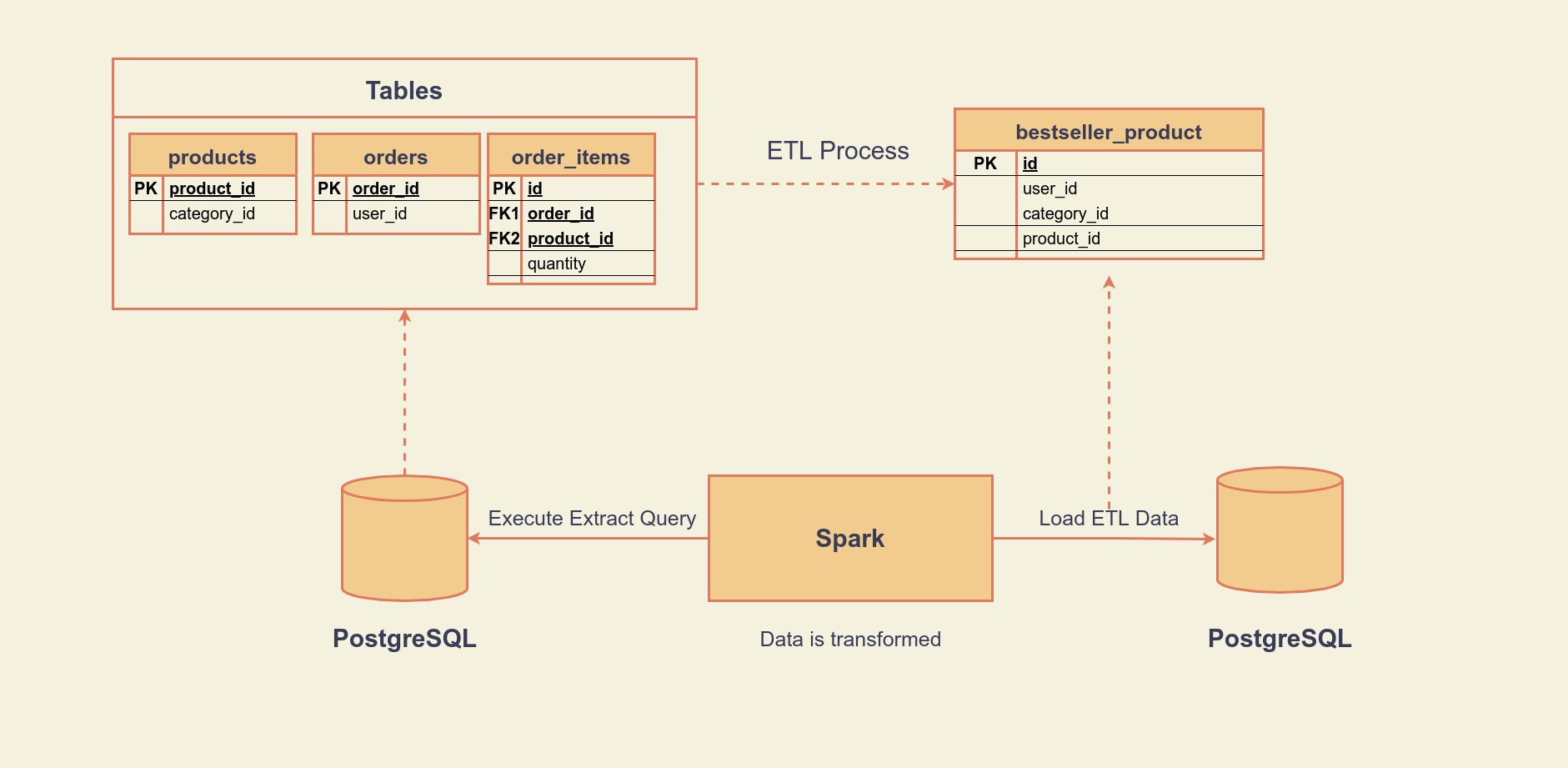

In addition, it runs an ETL process on the products, orders and order_items tables in PostgreSQL.

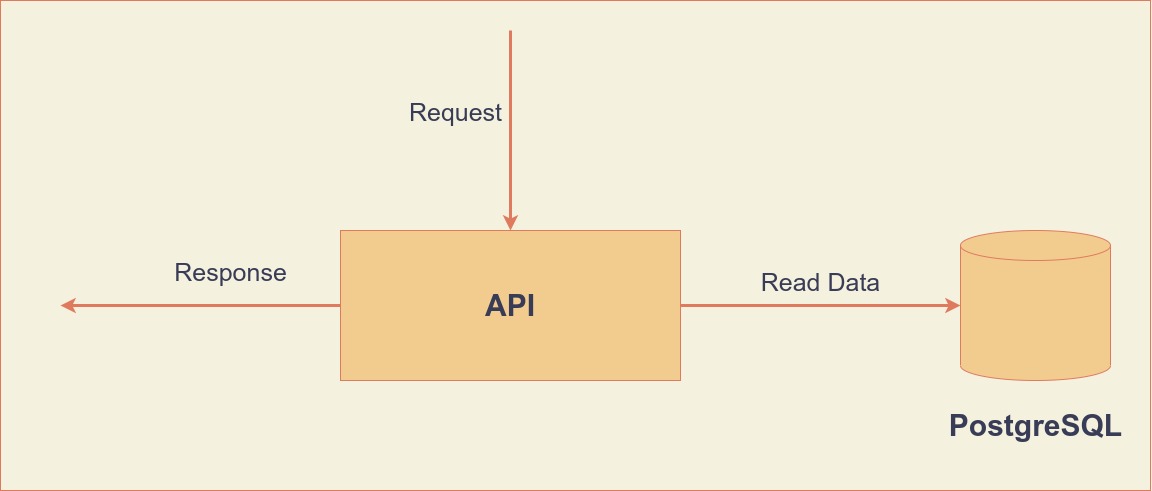

Finally, an API returns ten viewed products for a given userId and recommends products for a given userId.
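As a rough sketch of the first kind of request, the snippet below picks ten viewed products for one user from a list of browsing-history rows. It assumes that "ten viewed products" means the ten most recently viewed distinct products, and that each row is a hypothetical `(user_id, product_id, timestamp)` tuple; the actual API may define both differently.

```python
from typing import Iterable, List, Tuple

# Hypothetical row shape: (user_id, product_id, timestamp)
HistoryRow = Tuple[str, str, int]


def last_viewed(history: Iterable[HistoryRow], user_id: str, limit: int = 10) -> List[str]:
    """Return up to `limit` distinct product ids for `user_id`, newest view first."""
    seen = set()
    result: List[str] = []
    # Walk the rows from the newest timestamp to the oldest.
    for uid, product_id, _ts in sorted(history, key=lambda row: row[2], reverse=True):
        if uid != user_id or product_id in seen:
            continue  # another user's row, or a product already listed
        seen.add(product_id)
        result.append(product_id)
        if len(result) == limit:
            break
    return result


# Twelve views by u1, one view by another user, and a later repeat view of p1.
history = [("u1", f"p{i}", i) for i in range(1, 13)]
history += [("u2", "p99", 100), ("u1", "p1", 50)]
recent = last_viewed(history, "u1")
```

In a deployed service the same selection would more likely be pushed down to PostgreSQL as a query; the in-memory version only shows the intended ordering and de-duplication.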

| Module | Technology | Description |
|---|---|---|
| view-producer | Java Spring | Reads the product-views.json file line by line, transforms each line into an Event object, and writes it to Kafka. |
| stream-reader | Java Spring | Reads each event from Kafka, transforms it into a BrowsingHistory object, and writes it to the PostgreSQL database. |
| etl-process | PySpark | Runs the ETL on the orders, products and order_items tables. These tables are joined with the bestseller_product table. |
| api | Java Spring | Returns responses for specific requests. |

Click a module name to read its README.

| Module | Code | README | Unit tests | Containerization |
|---|---|---|---|---|
| viewed-producer | ✔️ | ✔️ | ❌ | ✔️ |
| stream-reader | ✔️ | ✔️ | ❌ | ✔️ |
| etl_process | ✔️ | ✔️ | ✔️ | ✔️ |
| api | ✔️ | ✔️ | ✔️ | ✔️ |

If you don't want to install anything, run the command below:

docker-compose -f project-docker-compose.yml up -d

If you would rather install the applications one by one instead of using the command above, run the command below and then click the name of the module you want to install.

docker-compose up -d