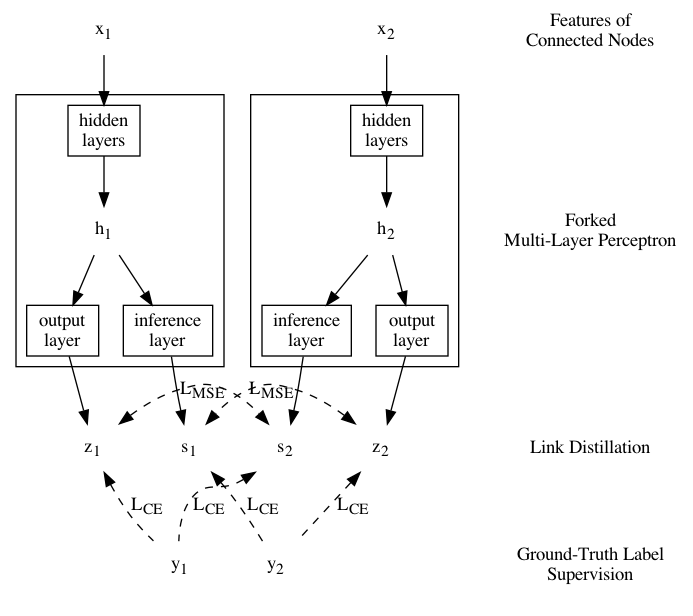

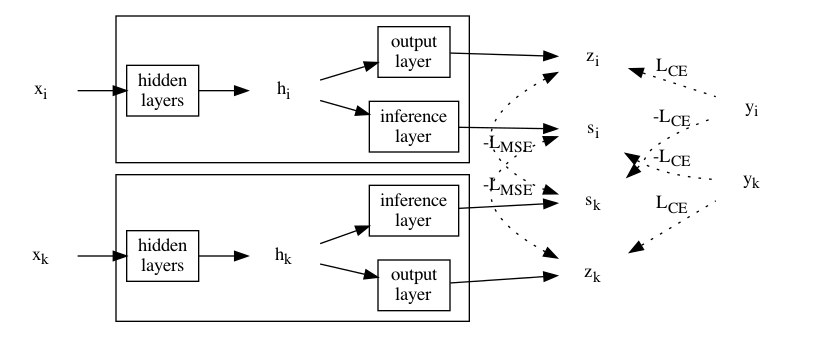

LinkDist distils self-knowledge from links into a Multi-Layer Perceptron (MLP) without the need to aggregate messages.

Experiments on 8 real-world datasets show that the learnt MLP (named LinkDistMLP) can predict the label of a node without knowing its adjacencies, yet achieves accuracy comparable to GNNs in both semi-supervised and fully supervised node classification tasks.

We also introduce contrastive learning techniques to further boost the accuracy of LinkDist and LinkDistMLP (yielding CoLinkDist and CoLinkDistMLP).

| MLP-based Method | ogbn-arxiv | ogbn-mag | ogbn-products |
|---|---|---|---|
| MLP | 0.5550 ± 0.0023 | 0.2692 ± 0.0026 | 0.6106 ± 0.0008 |
| MLP + FLAG | 0.5602 ± 0.0019 | - | 0.6241 ± 0.0016 |
| CoLinkDistMLP | 0.5638 ± 0.0016 | 0.2761 ± 0.0018 | 0.6259 ± 0.0010 |

Install dependencies torch and DGL:

    pip3 install torch ogb

Run the script with arguments `algorithm_name`, `dataset_name`, and `dataset_split`.

- Available algorithms: mlp, gcn, gcn2mlp, linkdistmlp, linkdist, colinkdistmlp, colinkdist.
  - If the `algorithm_name` ends with `-trans`, the experiment is run with the transductive setting. Otherwise, it is run with the inductive setting.
- Available datasets: cora / citeseer / pubmed / corafull / amazon-photo / amazon-com / coauthor-cs / coauthor-phy
  - We divide the dataset into a training set with `dataset_split` out of ten nodes, a validation set, and a testing set. If `dataset_split` is 0, we select at most 20 nodes from every class to construct the training set.
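The argument conventions above can be illustrated with a small, self-contained Python sketch. It is not the code in `main.py`: the helper names `parse_algorithm` and `split_nodes` are hypothetical, and the even division of the remaining nodes between validation and testing is an assumption (it matches the 60% / 20% / 20% case, but the README does not state it for other values).

```python
import random
from collections import defaultdict


def parse_algorithm(algorithm_name):
    """Hypothetical helper: return (base_name, is_transductive)."""
    suffix = "-trans"
    if algorithm_name.endswith(suffix):
        return algorithm_name[: -len(suffix)], True
    return algorithm_name, False


def split_nodes(labels, dataset_split, seed=0):
    """Hypothetical helper: return (train, val, test) lists of node indices.

    labels: one class label per node.
    dataset_split: tenths of the nodes used for training; 0 means
    "at most 20 training nodes per class".
    """
    rng = random.Random(seed)
    nodes = list(range(len(labels)))
    rng.shuffle(nodes)

    if dataset_split == 0:
        # Group the shuffled nodes by class and keep up to 20 of each.
        per_class = defaultdict(list)
        for n in nodes:
            per_class[labels[n]].append(n)
        train = [n for members in per_class.values() for n in members[:20]]
        chosen = set(train)
        rest = [n for n in nodes if n not in chosen]
    else:
        n_train = len(nodes) * dataset_split // 10
        train, rest = nodes[:n_train], nodes[n_train:]

    # Assumption: the remaining nodes are halved into validation and testing.
    half = len(rest) // 2
    return train, rest[:half], rest[half:]


if __name__ == "__main__":
    toy_labels = [i % 4 for i in range(100)]  # 4 classes, 25 nodes each
    train, val, test = split_nodes(toy_labels, 6)
    print(parse_algorithm("colinkdistmlp-trans"), len(train), len(val), len(test))
```

On the toy labels, `dataset_split=6` gives 60 training nodes and 20 each for validation and testing, while `dataset_split=0` caps the training set at 20 nodes per class.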

The following command runs GCN on the Cora dataset with the inductive setting:

    python3 main.py gcn cora 0

The following command runs CoLinkDistMLP on the Amazon Photo dataset with the transductive setting; the dataset is divided into a training set of 60% of the nodes, a validation set of 20%, and a testing set of 20%:

    python3 main.py colinkdistmlp-trans amazon-photo 6

To reproduce all experiments in our paper, run

    bash run

The output logs will be saved into trans.log, induc.log, and full.log.

    @article{2106.08541v1,
      author = {Yi Luo and Aiguo Chen and Ke Yan and Ling Tian},
      eprint = {2106.08541v1},
      month = {Jun},
      title = {Distilling Self-Knowledge From Contrastive Links to Classify Graph Nodes Without Passing Messages},
      type = {article},
      url = {http://arxiv.org/abs/2106.08541v1},
      year = {2021},
    }