This repository contains the original PyTorch implementation of the paper 'Unsupervised Learning of Category-Specific Symmetric 3D Keypoints from Point Sets' by Clara Fernandez-Labrador, Ajad Chhatkuli, Danda Pani Paudel, José J. Guerrero, Cédric Demonceaux and Luc Van Gool.

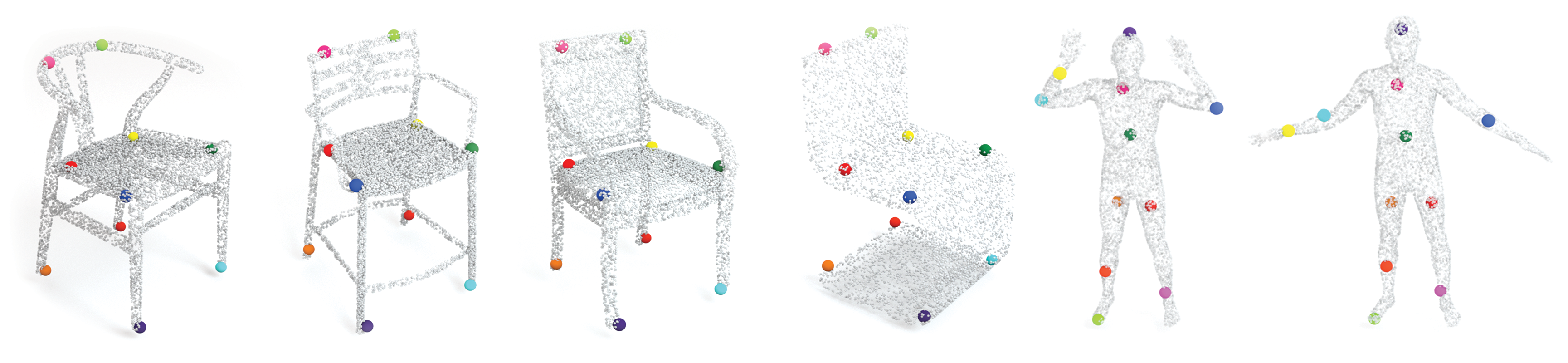

Automatic discovery of category-specific 3D keypoints from a collection of objects of some category is a challenging problem. One reason is that not all objects in a category necessarily have the same semantic parts. The level of difficulty adds up further when objects are represented by 3D point clouds, with variations in shape and unknown coordinate frames. We define keypoints to be category-specific, if they meaningfully represent objects’ shape and their correspondences can be simply established order-wise across all objects. This paper aims at learning category-specific 3D keypoints, in an unsupervised manner, using a collection of misaligned 3D point clouds of objects from an unknown category. In order to do so, we model shapes defined by the keypoints, within a category, using the symmetric linear basis shapes without assuming the plane of symmetry to be known. The usage of symmetry prior leads us to learn stable keypoints suitable for higher misalignments. To the best of our knowledge, this is the first work on learning such keypoints directly from 3D point clouds. Using categories from four benchmark datasets, we demonstrate the quality of our learned keypoints by quantitative and qualitative evaluations. Our experiments also show that the keypoints discovered by our method are geometrically and semantically consistent.

@article{fernandez2020unsupervised,
  title={Unsupervised Learning of Category-Specific Symmetric 3D Keypoints from Point Sets},
  author={Fernandez-Labrador, Clara and Chhatkuli, Ajad and Paudel, Danda Pani and Guerrero, Jose J and Demonceaux, C{\'e}dric and Van Gool, Luc},
  journal={European Conference on Computer Vision (ECCV)},
  year={2020}
}

We use the file data/create_datasets.py to prepare the input data.

You can access our data here.

We also refer to the official websites to download the original datasets:

environment.yml contains a copy of the virtual environment with all the dependencies and packages needed.

In order to reproduce it, simply run:

conda env create -f environment.yml --name fernandez2020unsupervised

Start by activating your environment:

conda activate fernandez2020unsupervised

Then, compile the CUDA module index_max:

cd models/index_max_ext

python setup.py install

Now you are ready to go!
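
Before training, it can help to confirm that the environment is usable. The following is a minimal sketch and not part of the repository: it checks whether PyTorch can be found and imported, whether a CUDA device is visible, and whether the compiled extension can be located. The import name `index_max` is an assumption based on the directory name; adjust it if the installed module is named differently.

```python
import importlib.util


def check_environment(extension_name="index_max"):
    """Report whether PyTorch, CUDA and the compiled extension are available.

    `extension_name` is an assumed import name, not confirmed by this README.
    """
    report = {"torch": False, "cuda": False, "extension": False}

    # find_spec returns None when a top-level module cannot be located.
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["torch"] = True
        report["cuda"] = torch.cuda.is_available()

    # Only checks that the module can be located; it is not imported here.
    report["extension"] = importlib.util.find_spec(extension_name) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

If `extension` is reported as missing, re-running the compilation step inside the activated environment is the first thing to try.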

python train.py --dataset 'ShapeNet' --category 'airplane' --ckpt_model 'airplane_10b' --batch_size 32 --node_num 14 --node_knn_k_1 3 --basis_num 10 --input_pc_num 1600 --surface_normal_len 0

Check models/options_detector.py for more options.

python test.py --dataset 'ShapeNet' --category 'airplane' --ckpt_model 'airplane_10b' --node_num 14 --node_knn_k_1 3 --basis_num 10 --input_pc_num 1600 --surface_normal_len 0

To visualize the results, use viz.py.
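
The training and testing commands share most of their flags, so one way to keep them consistent is to build both from a single dictionary. This is a hypothetical helper, not something the repository provides; the flag names and values are copied from the airplane example in this README, and `build_command` and `AIRPLANE_FLAGS` are names introduced here for illustration.

```python
import shlex
import sys

# Flags copied from the airplane example in this README; the values are
# that example's settings, not recommended defaults.
AIRPLANE_FLAGS = {
    "dataset": "ShapeNet",
    "category": "airplane",
    "ckpt_model": "airplane_10b",
    "node_num": 14,
    "node_knn_k_1": 3,
    "basis_num": 10,
    "input_pc_num": 1600,
    "surface_normal_len": 0,
}


def build_command(script, flags, extra=None):
    """Return an argument list: [python, script, '--name', 'value', ...]."""
    merged = dict(flags)
    merged.update(extra or {})
    command = [sys.executable, script]
    for name, value in merged.items():
        command += [f"--{name}", str(value)]
    return command


# batch_size appears only in the training example, so it is passed as an extra.
train_cmd = build_command("train.py", AIRPLANE_FLAGS, extra={"batch_size": 32})
test_cmd = build_command("test.py", AIRPLANE_FLAGS)

# Print the commands for inspection without running them.
print(shlex.join(train_cmd))
print(shlex.join(test_cmd))
```

To execute a command instead of printing it, the list can be passed to `subprocess.run(train_cmd, check=True)` from the repository root.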

You can directly try out some of our pretrained models. The checkpoints can be found here.

python test.py --dataset 'ModelNet10' --category 'chair' --ckpt_model 'chair_10b' --node_num 14 --node_knn_k_1 3 --basis_num 10

python test.py --dataset 'ShapeNet' --category 'airplane' --ckpt_model 'airplane_10b' --node_num 14 --node_knn_k_1 3 --basis_num 10 --input_pc_num 1600 --surface_normal_len 0

Data
│
└───dataset_1_name
│   └───checkpoints
│       └─model_1_name
│       |   checkpoint.tar
│       |   ...
│       └─model_2_name
│       └─...
│   └───results
│       └─model_1_name
│       |   name_file1.mat
│       |   ...
│       └─model_2_name
│       └─...
│   └───train_data_npy
│       └─category1
│       |   name_file1.npy
│       |   name_file2.npy
│       |   ...
│       └─category2
│       └─...
│   └───test_data_npy
│       └─category1
│       └─category2
│       └─...
│
└───dataset_2_name
│   ...
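
The layout above can be navigated with a couple of small path helpers. This is a sketch under stated assumptions, not code from the repository: the function names are hypothetical, and it assumes the dataset folder name matches the value passed to `--dataset` and that the model folder name matches `--ckpt_model`.

```python
from pathlib import Path


def list_point_clouds(data_root, dataset, category, split="train"):
    """Return the sorted .npy files of one category in the given split.

    Follows the tree above: <root>/<dataset>/<split>_data_npy/<category>/*.npy
    """
    folder = Path(data_root) / dataset / f"{split}_data_npy" / category
    return sorted(folder.glob("*.npy"))


def checkpoint_path(data_root, dataset, model_name):
    """Return the expected checkpoint location for a trained model."""
    return Path(data_root) / dataset / "checkpoints" / model_name / "checkpoint.tar"


if __name__ == "__main__":
    # Example values taken from the commands in this README.
    files = list_point_clouds("Data", "ShapeNet", "airplane", split="train")
    print(f"{len(files)} training point clouds found")
    print(checkpoint_path("Data", "ShapeNet", "airplane_10b"))
```

An empty list from `list_point_clouds` usually means the folder does not exist yet or the data has not been prepared.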

This repository is released under the GPL-3.0 License (see the LICENSE file for details).