Interact with smart home devices via hand gestures.

- Clone the repository

  ```shell
  git clone git@github.com:chaichana-t/hand-gestures-smart-home.git
  cd hand-gestures-smart-home
  ```

- Install dependencies (Python >= 3.9 recommended)

  ```shell
  pip install -r requirements.txt
  ```

- Configure constants such as the time interval (`time_interval`) or the bulb IPs (`bulb_ips`) in `config.py`

  ```python
  time_interval = 2
  bulb_ips = ['192.168.1.103', '192.168.1.102']
  ```

- Run the app

  ```shell
  python main.py
  ```

- Supported smart home interactions
  - Turn on / off
  - Increase brightness
  - Decrease brightness
  - Coloring
  - Temperature
  - Randomly pick
  - Disco

Voice is commonly used as the input for interacting with smart home devices. However, some people, such as Deaf people, aren't able to use their voice the way others do. We recognize this difficulty and want to ensure that everyone has access to smart home technology, so we use computer vision to address this issue.

In real-world settings, we can't guarantee that users will only make the gestures the system supports. We therefore used certain strategies to handle this, which we discuss in the upcoming section.

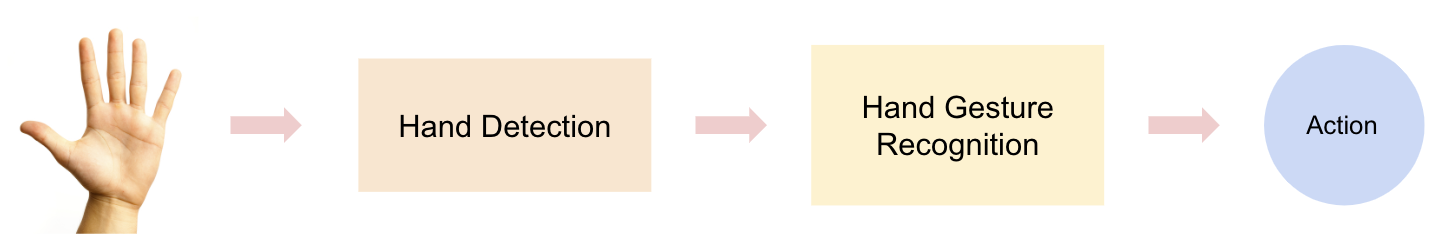

From our research, we found two possible approaches:

- Real-time Hand Gesture Recognition: link
  - Detect the hand using Google MediaPipe.
  - Recognize the hand gesture from the hand landmarks using a neural network.
- Hand Detection using Object Detection
  - Detect the hand together with its action using an object detection approach.

- AI
  - Hand Detection
  - Hand Gesture Recognition
- APP
  - Motion Interval
  - Yeelight connection

We use a two-stage model:

- Stage 1, hand detection: Google MediaPipe (ref. GitHub)

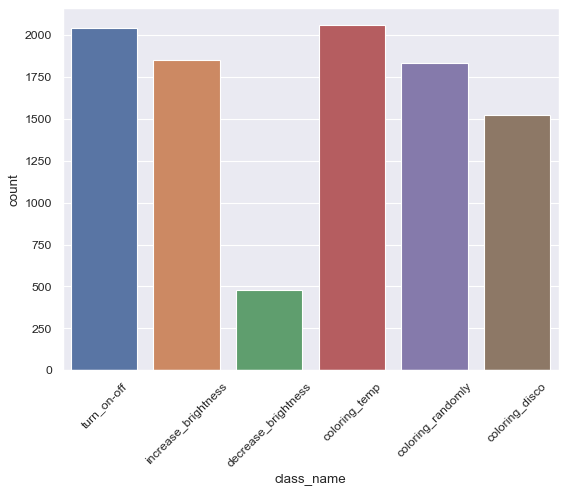
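A minimal sketch of the first stage, assuming the legacy `mediapipe.solutions.hands` API and OpenCV (`cv2`) for color conversion; this is not the repository's code, and the helper names `landmarks_to_xy` and `detect_hand_landmarks` are hypothetical. It turns the 21 landmarks MediaPipe detects on one hand into the `[[x0, y0], ..., [x20, y20]]` structure the model takes as input.

```python
def landmarks_to_xy(hand_landmarks):
    """Convert one MediaPipe hand (21 landmarks) into [[x, y], ...].

    MediaPipe reports x and y normalized to [0, 1] by image width and height;
    z (relative depth) is dropped because the model only uses x and y.
    """
    return [[lm.x, lm.y] for lm in hand_landmarks.landmark]


def detect_hand_landmarks(bgr_frame, hands):
    """Run a mediapipe Hands detector on a BGR frame; return [[x, y], ...] or None."""
    import cv2  # imported here so landmarks_to_xy can be used without OpenCV

    rgb = cv2.cvtColor(bgr_frame, cv2.COLOR_BGR2RGB)  # MediaPipe expects RGB input
    results = hands.process(rgb)
    if not results.multi_hand_landmarks:
        return None  # no hand detected in this frame
    return landmarks_to_xy(results.multi_hand_landmarks[0])  # first hand only
```

In a capture loop, `hands` would typically be created once, for example with `mp.solutions.hands.Hands(max_num_hands=1)` used as a context manager, and reused for every webcam frame read via `cv2.VideoCapture`.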

Data

Model

Fully Connected Neural Network

- Input: the x, y coordinates of each of the 21 hand key points
  - Structure: `[[x0, y0], [x1, y1], ..., [x20, y20]]`
  - Normalized by position and size

    ```python
    def normalize(landmarks):
        # Translate so that the first key point becomes the origin (0, 0).
        base_x, base_y = landmarks[0][0], landmarks[0][1]
        landmarks = [[landmark[0] - base_x, landmark[1] - base_y] for landmark in landmarks]
        # Scale by the largest absolute coordinate so every value lies in [-1, 1].
        max_value = max([max([abs(x) for x in landmark]) for landmark in landmarks])
        landmarks = [[landmark[0] / max_value, landmark[1] / max_value] for landmark in landmarks]
        return landmarks
    ```

- Output: the action (one of six classes)

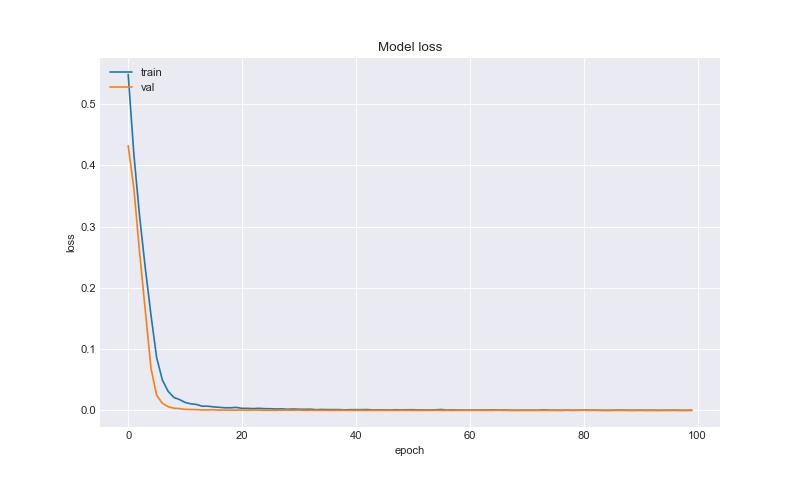

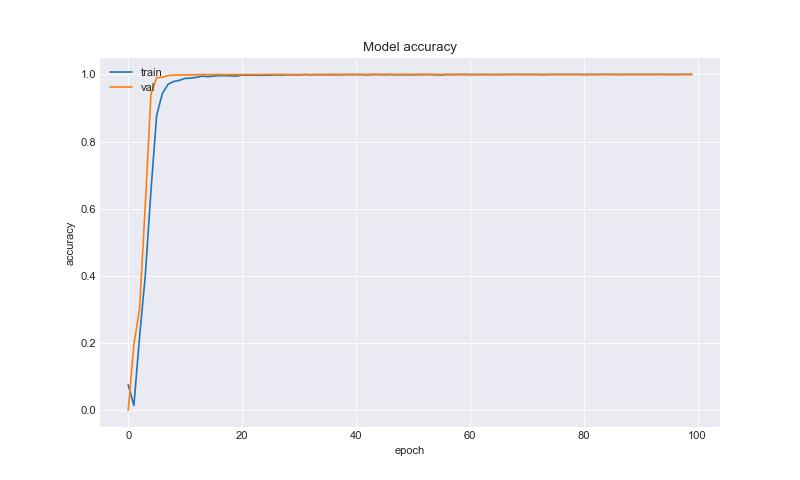
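Subtracting the first key point (the wrist, landmark 0 in MediaPipe) and dividing by the largest absolute offset makes the input independent of where the hand sits in the frame and how large it appears. The sketch below restates the `normalize` function above more compactly and checks that property on a toy three-point "hand" (real input has 21 points); the toy values are illustrative only.

```python
def normalize(landmarks):
    """Translate to the first key point, then scale by the largest absolute offset."""
    base_x, base_y = landmarks[0]
    shifted = [[x - base_x, y - base_y] for x, y in landmarks]
    max_value = max(abs(v) for point in shifted for v in point)
    return [[x / max_value, y / max_value] for x, y in shifted]


# Toy 3-point "hand"; values chosen to be exact in binary floating point.
hand = [[0.5, 0.5], [0.75, 0.5], [0.5, 0.0]]
print(normalize(hand))  # → [[0.0, 0.0], [0.5, 0.0], [0.0, -1.0]]

# The same hand moved across the frame and drawn twice as large.
moved = [[2 * x + 1, 2 * y + 3] for x, y in hand]
print(normalize(moved) == normalize(hand))  # → True
```

Note that a degenerate input in which every point coincides with the first one would make `max_value` zero and raise `ZeroDivisionError`.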

Model Architecture

```python
import torch
import torch.nn as nn


class HandGestureModel(nn.Module):
    def __init__(self):
        super(HandGestureModel, self).__init__()
        # 42 inputs (21 key points x 2 coordinates) -> 6 action scores
        self.classifier = nn.Sequential(
            nn.Linear(in_features=42, out_features=64),
            nn.ReLU(),
            nn.Dropout(p=0.2),
            nn.Linear(in_features=64, out_features=128),
            nn.ReLU(),
            nn.Dropout(p=0.2),
            nn.Linear(in_features=128, out_features=512),
            nn.ReLU(),
            nn.Dropout(p=0.2),
            nn.Linear(in_features=512, out_features=64),
            nn.ReLU(),
            nn.Dropout(p=0.2),
            nn.Linear(in_features=64, out_features=32),
            nn.ReLU(),
            nn.Linear(in_features=32, out_features=6),
        )
        # Independent per-action probabilities (paired with BCELoss in training)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # (batch, 21, 2) -> (batch, 42)
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return self.sigmoid(x)
```


Our Contribution (from Technical Challenges)

- This problem is a Multiclass Single-label Classification problem.
  - Typically, Softmax and CrossEntropyLoss are applied.
  - The model can then recognize every action with high confidence.
  - But what about edge cases that aren't in the dataset? Because Softmax outputs always sum to 1, such inputs are still forced onto one of the actions.
- We applied Sigmoid and BCELoss, ideally to push the probabilities of all actions toward zero for edge cases.
  - Train it like a Multiclass Multi-label Classification problem (but each label has a single 1 in its one-hot encoding).
  - At inference, take the action with the maximum confidence and apply a threshold of 0.999.
  - However, this does not work perfectly.

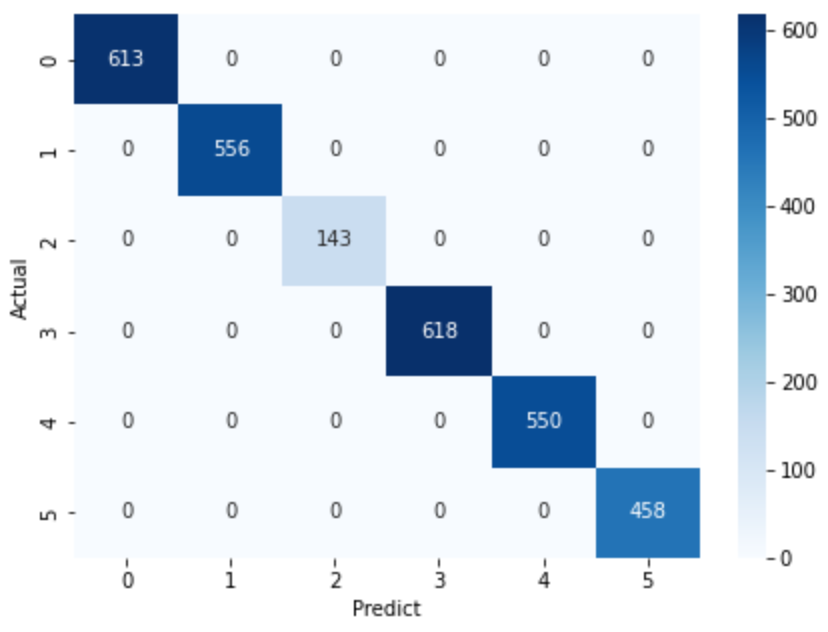
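The difference between the two output layers can be illustrated in plain Python. This is a sketch, not the training code: the logits below are made up for a hypothetical gesture outside the dataset, while the 0.999 threshold comes from the text above. Softmax normalizes the scores against each other, so a slightly-less-low score still wins with high confidence; Sigmoid scores each action independently, so all of them can stay low.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(logits):
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def predict(probs, threshold=0.999):
    """Index of the most confident action, or None if it is below the threshold."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return best if probs[best] >= threshold else None


# Hypothetical logits for an unseen gesture: every action scores low,
# but action 0 is less low than the others.
unknown = [-4.0, -13.0, -13.0, -13.0, -13.0, -13.0]

print(max(softmax(unknown)))      # about 0.9994: Softmax still picks action 0
print(max(sigmoid(unknown)))      # about 0.018: each Sigmoid output is low on its own
print(predict(softmax(unknown)))  # 0: passes the threshold despite being unseen
print(predict(sigmoid(unknown)))  # None: rejected as "no action"
```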

Model Evaluation


Classification Report

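| Class | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| 0 | 1.0000 | 1.0000 | 1.0000 | 613 |
| 1 | 1.0000 | 1.0000 | 1.0000 | 556 |
| 2 | 1.0000 | 1.0000 | 1.0000 | 143 |
| 3 | 1.0000 | 1.0000 | 1.0000 | 618 |
| 4 | 1.0000 | 1.0000 | 1.0000 | 550 |
| 5 | 1.0000 | 1.0000 | 1.0000 | 458 |
| accuracy | | | 1.0000 | 2938 |
| macro avg | 1.0000 | 1.0000 | 1.0000 | 2938 |
| weighted avg | 1.0000 | 1.0000 | 1.0000 | 2938 |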
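With four decimal places over 2,938 samples, an accuracy of 1.0000 means no test sample was misclassified (a single error would show as 0.9997). The report's layout resembles scikit-learn's `classification_report` with `digits=4`; the pure-Python sketch below is not the authors' evaluation code, and the function name `classification_metrics` is hypothetical. It only shows how each column is computed from true and predicted labels.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Per-class (class, precision, recall, f1, support) rows plus overall accuracy."""
    rows = []
    for c in range(num_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        predicted = sum(1 for p in y_pred if p == c)  # TP + FP
        support = sum(1 for t in y_true if t == c)    # TP + FN
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        rows.append((c, precision, recall, f1, support))
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    return rows, accuracy


# Toy labels: one class-0 sample is mistaken for class 1.
rows, accuracy = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
for c, p, r, f1, support in rows:
    print(f"{c} {p:.4f} {r:.4f} {f1:.4f} {support}")
print(f"accuracy {accuracy:.4f}")
```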


Motion Interval

- We use a time interval between requests to the Yeelight API; it is currently 2 seconds (`time_interval` in `config.py`).
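One way to enforce such an interval is a cooldown that lets a recognized action through only if at least `time_interval` seconds have passed since the last request that was sent. This is a sketch under that assumption (the README does not say exactly how the interval is applied), and the class name `ActionThrottle` is hypothetical. The clock is injectable so the behaviour can be checked without waiting.

```python
import time


class ActionThrottle:
    """Allow at most one bulb request per `interval` seconds (hypothetical helper)."""

    def __init__(self, interval=2.0, clock=time.monotonic):
        self.interval = interval
        self.clock = clock        # injectable time source, monotonic by default
        self.last_sent = None     # time of the last request that was allowed

    def ready(self):
        """Return True (and start a new cooldown) if a request may be sent now."""
        now = self.clock()
        if self.last_sent is None or now - self.last_sent >= self.interval:
            self.last_sent = now
            return True
        return False
```

In the main loop, the recognized action would be sent to the bulbs only when `throttle.ready()` returns True; gestures recognized during the cooldown are simply ignored. A monotonic clock avoids surprises if the system time is changed.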


Yeelight connection

- We use the `yeelight` Python library to control our smart light bulbs.
- Actions that the model can trigger:
  - Turn on / off (toggle)
  - Increase brightness
  - Decrease brightness
  - Coloring
  - Temp (toggle)
  - Randomly pick
  - Disco
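A sketch of how recognized actions could be mapped onto `yeelight` calls (`Bulb.toggle`, `set_brightness`, `set_color_temp`, `set_rgb`, `start_flow`, `get_properties`) and applied to every bulb in `bulb_ips`. The action keys, the brightness step, the warm/cool temperatures, and reading "Randomly pick" as "pick a random color" are all assumptions for illustration, not the repository's implementation; Coloring is left out because its parameters are not described. The bulbs must have LAN Control enabled in the Yeelight app.

```python
import random

BRIGHTNESS_STEP = 20         # hypothetical step, in percent
WARM_K, COOL_K = 2700, 6500  # hypothetical color temperatures for the Temp toggle


def change_brightness(bulb, delta):
    # get_properties() returns values as strings, e.g. {"bright": "50", ...}
    current = int(bulb.get_properties()["bright"])
    bulb.set_brightness(max(1, min(100, current + delta)))  # Yeelight range is 1-100


def toggle_temperature(bulb):
    current = int(bulb.get_properties()["ct"])
    bulb.set_color_temp(COOL_K if current < 4000 else WARM_K)


def random_color(bulb):
    bulb.set_rgb(random.randint(0, 255), random.randint(0, 255), random.randint(0, 255))


def disco(bulb):
    from yeelight import Flow
    from yeelight.transitions import disco as disco_transitions
    bulb.start_flow(Flow(count=0, transitions=disco_transitions()))  # count=0 loops forever


ACTIONS = {  # hypothetical action names
    "toggle": lambda bulb: bulb.toggle(),
    "brightness_up": lambda bulb: change_brightness(bulb, +BRIGHTNESS_STEP),
    "brightness_down": lambda bulb: change_brightness(bulb, -BRIGHTNESS_STEP),
    "temperature": toggle_temperature,
    "random": random_color,
    "disco": disco,
}


def apply_action(name, bulbs):
    """Apply one action to every bulb, e.g. [Bulb(ip) for ip in bulb_ips]."""
    for bulb in bulbs:
        ACTIONS[name](bulb)
```

For example, `apply_action("toggle", [Bulb(ip) for ip in bulb_ips])` with `from yeelight import Bulb` would toggle both bulbs listed in `config.py`.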


Gesture to action mapping: Turn on / off (toggle), Increase brightness, Decrease brightness, Temp (toggle), Randomly pick, Disco

- Sufficient lighting is required.

- Improve model performance so that gestures for unsupported actions are not recognized.

- Make the model generalize better so that it can recognize all possible hand gestures.

- Improve the UX/UI.

- Port it to a smaller device.

- Expand it to more smart devices (not only Yeelight bulbs).