An iOS application, inspired by HBO's Silicon Valley, that classifies food and provides its nutritional information. The app contains a Core ML model, a fine-tuned InceptionV3 built with Keras 1.2.2, that recognizes over 150 dishes from multiple cuisines. For each dish I gathered around 800 to 900 images from Google, Instagram and Flickr by searching for the category title. The model achieves 86.97% Top-1 accuracy and 97.42% Top-5 accuracy.

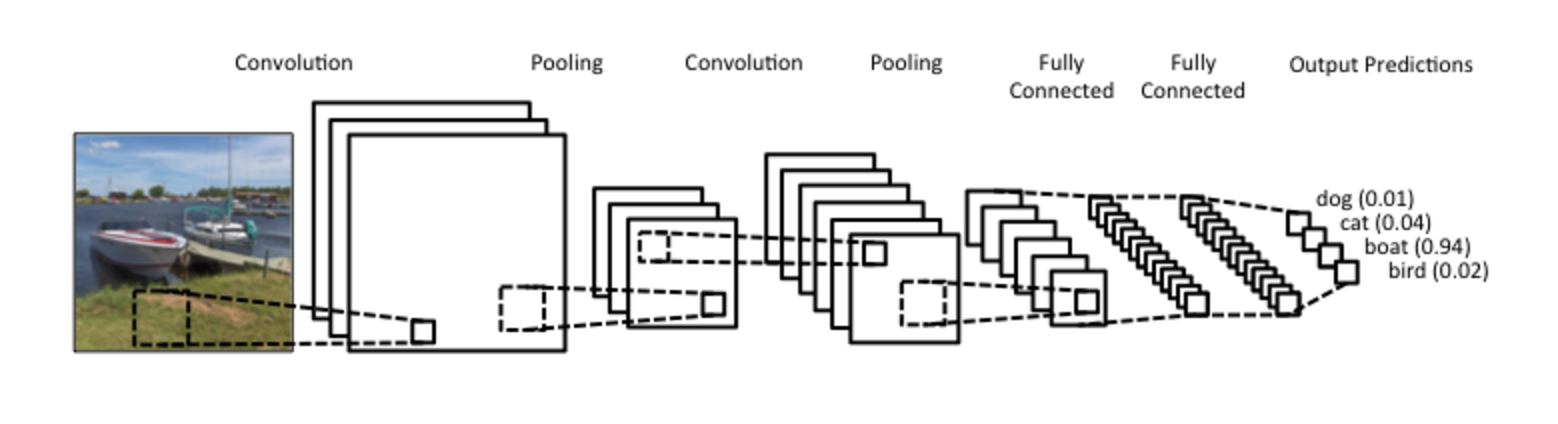

Convolutional Neural Networks (CNNs), a technique within the broader field of Deep Learning, have been a revolutionary force in Computer Vision applications, especially over the past half-decade or so. One main use case is image classification, e.g. determining whether a picture shows a dog or a cat.

You don't have to limit yourself to a binary classifier of course; CNNs can easily scale to thousands of different classes, as seen in the well-known ImageNet dataset of 1000 classes, used to benchmark computer vision algorithm performance.

As an introductory project for myself, and along the lines of what I learned in CS229 at Stanford, I chose to use a pre-trained image classifier that comes with Keras. I love HBO's Silicon Valley and am especially fond of the character Jian Yang, which is why I chose this project.

There are 150 different classes of food with 800 to 900 labeled images per class for supervised training.

I found the keras-finetuning script on GitHub and used it to fine-tune the model on my dataset.
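To give a rough idea of what fine-tuning InceptionV3 involves, here is a minimal sketch. It is not the keras-finetuning script itself. It assumes the common recipe of freezing the ImageNet-trained base and training a new softmax head, and the function name `build_finetune_model` and the `rmsprop` optimizer are illustrative choices rather than details from this project.

```python
NUM_CLASSES = 150  # number of food categories described above


def build_finetune_model(num_classes=NUM_CLASSES):
    """Return InceptionV3 with a new softmax head (assumed recipe, see text)."""
    # Imported lazily so the file can be loaded without Keras installed.
    from keras.applications.inception_v3 import InceptionV3
    from keras.layers import Dense, GlobalAveragePooling2D
    from keras.models import Model

    # Load the convolutional base with ImageNet weights, without the
    # original 1000-class classifier on top.
    base = InceptionV3(weights="imagenet", include_top=False)

    # New head: average-pool the feature maps, then classify into our classes.
    x = GlobalAveragePooling2D()(base.output)
    predictions = Dense(num_classes, activation="softmax")(x)
    model = Model(base.input, predictions)

    # Freeze the pre-trained layers so only the new head is trained at first.
    for layer in base.layers:
        layer.trainable = False

    model.compile(optimizer="rmsprop",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

Once the new head has converged, a usual next step is to unfreeze some of the top Inception blocks and continue training with a small learning rate.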

I built this project over the last two months, exploring various machine learning models along the way. This is the best result I have achieved as of October 7th, 2017.
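For reference, Top-1 and Top-5 accuracy, the two metrics quoted in the introduction, measure how often the true label is the highest-scoring class or among the five highest-scoring classes. The sketch below shows the general idea in plain Python. The scores and class names are made up for illustration, and `top_k_accuracy` is a hypothetical helper, not code from this project.

```python
def top_k_accuracy(scores, true_labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for sample_scores, truth in zip(scores, true_labels):
        # Rank class names by score, highest first, and keep the first k.
        ranked = sorted(sample_scores, key=sample_scores.get, reverse=True)[:k]
        if truth in ranked:
            hits += 1
    return hits / len(true_labels)


# Made-up scores for three images over four hypothetical classes.
scores = [
    {"pizza": 0.70, "hot dog": 0.20, "ramen": 0.06, "sushi": 0.04},
    {"pizza": 0.10, "hot dog": 0.50, "ramen": 0.30, "sushi": 0.10},
    {"pizza": 0.40, "hot dog": 0.35, "ramen": 0.15, "sushi": 0.10},
]
true_labels = ["pizza", "ramen", "sushi"]

top1 = top_k_accuracy(scores, true_labels, k=1)  # only the first image is a hit
top2 = top_k_accuracy(scores, true_labels, k=2)  # the second image now counts too
```

With 150 classes, Top-5 is the more forgiving metric: a prediction counts as correct as long as the right dish appears anywhere in the five best guesses.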

Once I built my model, I had to convert it into a .mlmodel file for use inside Xcode.

I used Core ML Tools to convert it to the Core ML model format.

Core ML Tools is a Python package (coremltools), hosted at the Python Package Index (PyPI). For information about Python packages, see the Python Packaging User Guide.

This is how you can convert a Keras-built model to Core ML using the tools:

```python
import coremltools

# Convert the saved Keras model and write it out in Core ML format.
coreml_model = coremltools.converters.keras.convert('SeeFood.h5')
coreml_model.save('SeeFood.mlmodel')
```

Once the Core ML model was created, I imported it into my Xcode workspace and let Xcode automatically generate the class for it. I simply used these classes in my Swift code to detect food.
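The Swift code in this README calls `prediction(image:)` and reads `classLabel` and `foodConfidence`, which suggests the model was converted as an image classifier with named inputs and outputs. A hedged sketch of such a conversion follows, for older coremltools releases that still ship the Keras converter. The labels file name `labels.txt` and the pixel scaling to [-1, 1] (the usual InceptionV3 preprocessing) are assumptions, as are the helper names `conversion_kwargs` and `convert_to_coreml`.

```python
def conversion_kwargs(labels_path="labels.txt"):
    """Keyword arguments for coremltools.converters.keras.convert (assumed values)."""
    return {
        "input_names": "image",
        "image_input_names": "image",       # treat the input as an image, not an array
        "output_names": "foodConfidence",   # dictionary of label -> probability
        "class_labels": labels_path,        # text file with one label per line
        "predicted_feature_name": "classLabel",
        # Assumed preprocessing: map pixel values from [0, 255] to [-1, 1].
        "image_scale": 2.0 / 255.0,
        "red_bias": -1.0,
        "green_bias": -1.0,
        "blue_bias": -1.0,
    }


def convert_to_coreml(h5_path="SeeFood.h5", out_path="SeeFood.mlmodel",
                      labels_path="labels.txt"):
    """Convert a saved Keras classifier and write the .mlmodel file."""
    # Imported lazily so the helper above can be used without coremltools.
    import coremltools

    coreml_model = coremltools.converters.keras.convert(
        h5_path, **conversion_kwargs(labels_path))
    coreml_model.save(out_path)
    return coreml_model
```

The scale and bias values must match whatever preprocessing was used during training; otherwise the converted model may load fine but give poor predictions on device.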

- Download the .mlmodel file, drag it into your Xcode project and wait for a moment.

- You will see that Xcode has generated input and output classes, and the main class SeeFood, which has a model property and two prediction methods.

- Open ViewController.swift and import CoreML:

  ```swift
  import UIKit
  import CoreML
  ```

- Implement a method to either capture a photo using AVFoundation or pick a photo from your library using the Photos framework.

- Implement the required methods for image classification:

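```swift
let model = SeeFood()

// Decode the captured photo data into a UIImage.
let previewImage = UIImage(data: imageData)

// Convert the image into the input format the model expects.
guard let input = model.preprocess(image: previewImage!) else {
    print("preprocessing failed")
    return
}

// Run the classifier on the preprocessed image.
guard let result = try? model.prediction(image: input) else {
    print("prediction failed")
    return
}

// Look up the probability of the predicted label and express it as a percentage.
let confidence = result.foodConfidence["\(result.classLabel)"]! * 100.0
print("\(result.classLabel) - \(confidence) %")
```

Planned improvements:

- Cuisine Categorization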

- Fetch Recipe for image

- Increase dataset to include over 300 titles

- Reduce latency while identifying the food item

- Port app to Android/Web