This set of scripts is a proof of concept (PoC) showing how to implement API CI/CD with WSO2 API Manager using the WSO2 API Manager Publisher REST API.

This PoC assumes a deployment that meets the following criteria:

- There are one or more API Manager deployments that manage APIs belonging to different departments.

- Environments are either separate deployments or differently named APIs (with different contexts) in the same deployment (e.g. lower-level dev* environments could share one API Manager deployment).

- API management is done by a team separate from the teams that implement and consume the APIs.

The scripts are designed around the following user stories.

When a new API is needed, the implementation party submits a request (e.g. in writing as an email or as an entry in the ticketing system). This request is ultimately converted to a standard, machine-readable JSON format.

For example, the JSON format the script recognizes is as follows. It contains only the basic information needed to create an API.

```json
{
  "username": "Username of the user",
  "department": "Department of the user",
  "apis": [
    {
      "name": "SampleAPI_dev1",
      "description": "Sample API description",
      "context": "/api/dev1/sample",
      "version": "v1"
    }
  ]
}
```

The JSON Schema for this format is as follows:

{

"$schema": "http://json-schema.org/draft-04/schema#",

"type": "object",

"properties": {

"username": {

"type": "string"

},

"department": {

"type": "string"

},

"apis": {

"type": "array",

"items": [

{

"type": "object",

"properties": {

"name": {

"type": "string"

},

"description": {

"type": "string"

},

"context": {

"type": "string"

},

"version": {

"type": "string"

}

},

"required": [

"name",

"description",

"context",

"version"

]

}

]

}

},

"required": [

"username",

"department",

"apis"

]

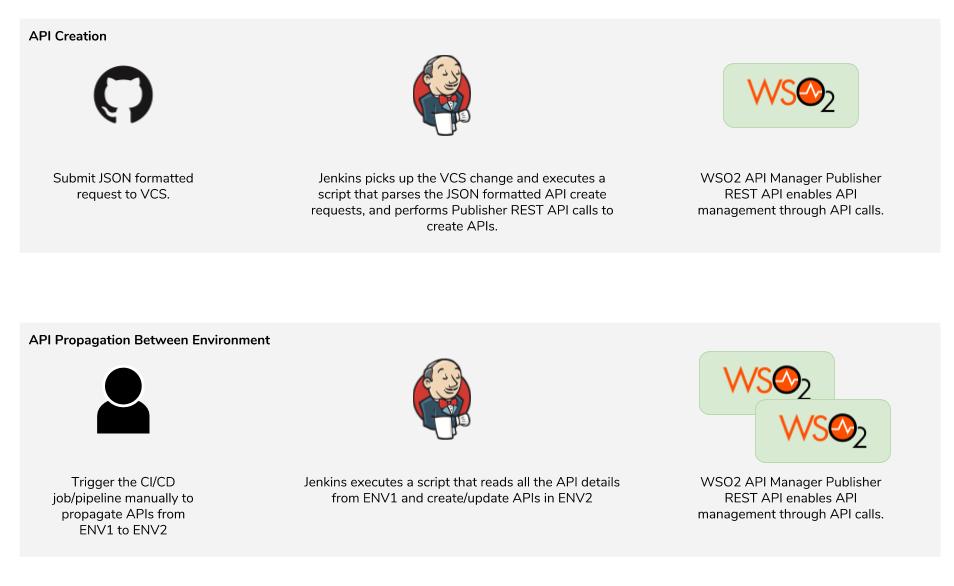

}This JSON content will be submitted to a VCS (e.g. Git) that will trigger an Automation Tool job (e.g. Jenkins). The automation tool job can execute the Python script api_create.py which does the following.

- Read and parse the JSON-based API create requests

- Submit API create REST calls to the provided API Manager Publisher REST API

This way, the API create requests can be used as the trigger point to automate the API creation flow.
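The parsing step can be sketched with the standard library alone. This is a minimal sketch, not the code of `api_create.py`: it assumes the request files are `*.json` files in the `api_definitions` folder and only checks the `required` fields from the JSON Schema above instead of running a full schema validator. The names `validate_request` and `load_requests` are hypothetical, and the code runs on both Python 2.7 and 3.

```python
import glob
import json
import os

# Required keys, mirroring the "required" lists in the JSON Schema.
REQUIRED_TOP_LEVEL = ("username", "department", "apis")
REQUIRED_API_FIELDS = ("name", "description", "context", "version")


def validate_request(request):
    """Return a list of problems found in one parsed API create request."""
    problems = []
    for key in REQUIRED_TOP_LEVEL:
        if key not in request:
            problems.append("missing top-level field: %s" % key)
    apis = request.get("apis", [])
    if not isinstance(apis, list):
        problems.append("'apis' must be a list")
        return problems
    for index, api in enumerate(apis):
        if not isinstance(api, dict):
            problems.append("apis[%d] must be an object" % index)
            continue
        for field in REQUIRED_API_FIELDS:
            if field not in api:
                problems.append("apis[%d] is missing field: %s" % (index, field))
    return problems


def load_requests(definitions_dir="api_definitions"):
    """Parse every *.json file in the folder; raise on the first invalid one."""
    loaded = []
    for path in sorted(glob.glob(os.path.join(definitions_dir, "*.json"))):
        with open(path) as handle:
            request = json.load(handle)
        problems = validate_request(request)
        if problems:
            raise ValueError("%s: %s" % (path, "; ".join(problems)))
        loaded.append(request)
    return loaded
```

Failing on the first invalid file keeps a broken request from being half-applied; a real job might prefer to collect all problems before aborting.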

Once the APIs are created, the implementation party can log in to the Publisher UI, implement the APIs, and publish them.

After the APIs are published and tested in a particular environment, they have to be promoted to the next environment. This promotion can be triggered manually as an automation tool job (e.g. a Jenkins job or pipeline).

The script executed in this step, `api_propagate.py`, pulls all API details from one environment and creates or updates APIs based on those details in the next environment. During this process, additional changes such as renaming APIs, renaming contexts, and switching backends can also be made, provided these changes follow a predictable pattern.
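Pattern-based renaming of this kind could look like the following sketch. It assumes the naming convention of the request example above (`SampleAPI_dev1`, `/api/dev1/sample`), i.e. names end in `_<env>` and contexts contain `/<env>` as a whole path segment, and that backend URLs differ per environment only in a known base URL. Both functions are hypothetical illustrations, not code taken from `api_propagate.py`.

```python
import re


def promote_api(api, from_env, to_env):
    """Return a copy of an API definition renamed for the target environment.

    Assumes names end in "_<env>" and contexts contain "/<env>" as a whole
    path segment, as in the request example (SampleAPI_dev1, /api/dev1/sample).
    """
    promoted = dict(api)
    # SampleAPI_dev1 -> SampleAPI_dev2: only a trailing "_<env>" suffix changes.
    promoted["name"] = re.sub(r"_%s$" % re.escape(from_env),
                              "_" + to_env, api["name"])
    # /api/dev1/sample -> /api/dev2/sample, while "/dev10" is left untouched.
    promoted["context"] = re.sub(r"/%s(?=/|$)" % re.escape(from_env),
                                 "/" + to_env, api["context"])
    return promoted


def switch_backend(url, backend_map):
    """Replace a known source backend base URL with its target counterpart."""
    for old_base, new_base in backend_map.items():
        if url.startswith(old_base):
            return new_base + url[len(old_base):]
    return url  # unknown backends are left as they are
```

Anchoring the patterns (`$`, the lookahead for `/`) avoids accidental matches such as `dev1` inside `dev10`, which is the main risk of naive string replacement.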

In codifying these stories, the scripts make the following assumptions for ease of implementation. Scenarios that fall outside these criteria can still use the scripts, provided certain changes are made.

- Backend URLs: The backend URLs of the APIs are largely uniform and follow a pattern, which makes it easy to change them when APIs are promoted between environments.

- Email addresses are not used as usernames in WSO2 API Manager. If they are, WSO2 API Manager has to be configured to support email usernames properly.

- The API versioning feature is not used.

The `api_propagate.py` script does not account for the existence of different versions of the same API, so it makes no API version creation requests. If versioning is used, the script has to be modified to account for it.

The scripts require Python 2.7 and use the `requests` Python library to make REST API calls.
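Calls to the Publisher REST API need an OAuth2 access token, which is one reason both an API Manager URL and a Gateway URL are configured. The sketch below only builds the two preliminary requests (client registration, then a password-grant token request) as `(url, headers, body)` tuples without sending them. It assumes the endpoints documented for API Manager 2.6.0 (`/client-registration/v0.14/register` on the management port and `/token` on the gateway) and the `apim:api_view`, `apim:api_create`, and `apim:api_publish` scopes; verify these against your version. The function names, client name, and callback URL are hypothetical placeholders.

```python
import base64
import json

try:  # Python 3
    from urllib.parse import urlencode
except ImportError:  # Python 2.7
    from urllib import urlencode


def basic_auth(user, secret):
    """Build an HTTP Basic Authorization header value."""
    token = base64.b64encode(("%s:%s" % (user, secret)).encode("utf-8"))
    return "Basic " + token.decode("ascii")


def build_dcr_request(apimgt_url, username, password):
    """Dynamic Client Registration request (APIM 2.6.0 path assumed)."""
    url = apimgt_url.rstrip("/") + "/client-registration/v0.14/register"
    body = json.dumps({
        "callbackUrl": "www.example.com",  # placeholder, unused by password grant
        "clientName": "apim_cicd_client",  # hypothetical client name
        "owner": username,
        "grantType": "password refresh_token",
        "saasApp": True,
    })
    headers = {"Authorization": basic_auth(username, password),
               "Content-Type": "application/json"}
    return url, headers, body


def build_token_request(gw_url, client_id, client_secret, username, password,
                        scopes=("apim:api_view", "apim:api_create",
                                "apim:api_publish")):
    """Password-grant token request against the gateway's /token endpoint."""
    url = gw_url.rstrip("/") + "/token"
    body = urlencode([("grant_type", "password"), ("username", username),
                      ("password", password), ("scope", " ".join(scopes))])
    headers = {"Authorization": basic_auth(client_id, client_secret),
               "Content-Type": "application/x-www-form-urlencoded"}
    return url, headers, body
```

Each tuple can then be sent with `requests.post(url, headers=headers, data=body, verify=verify_ssl)`; the `clientId` and `clientSecret` from the registration response feed the token request, and the returned `access_token` goes into a `Bearer` header on Publisher API calls.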

To set up the environment with its dependencies, use the `setup.sh` script. It creates a Python 2.7 virtual environment and installs the dependencies listed in the `requirements.txt` file.

If you have already set up an environment (e.g. an existing virtual environment), activate it and check whether the dependencies are met using the `check_requirements.sh` script.

If you set up a virtual environment manually, name it `venv`, as the helper scripts for testing expect that name.

The Python scripts read their parameters from environment variables. Therefore, any automation tool that executes these scripts must inject the environment variables with the proper values at execution time. For example, Jenkins can use the EnvInject plugin to load environment variables from a pre-populated properties file for a build job.

The environment variables required for each script are as follows.

For `api_create.py`:

- API Manager URL: `WSO2_APIM_APIMGT_URL`
- Gateway URL: `WSO2_APIM_GW_URL`
- API Manager Username: `WSO2_APIM_APIMGT_USERNAME`
- API Manager Password: `WSO2_APIM_APIMGT_PASSWD`
- Verify SSL flag: `WSO2_APIM_VERIFY_SSL`
- Production Backend URL: `WSO2_APIM_BE_PROD`
- Sandbox Backend URL: `WSO2_APIM_BE_SNDBX`
- API Status: `WSO2_APIM_API_STATUS`

For `api_propagate.py`:

- ENV1 API Manager URL: `WSO2_APIM_ENV1_APIMGT_URL`
- ENV1 Gateway URL: `WSO2_APIM_ENV1_GW_URL`
- ENV1 API Manager Username: `WSO2_APIM_ENV1_APIMGT_USERNAME`
- ENV1 API Manager Password: `WSO2_APIM_ENV1_APIMGT_PASSWD`
- ENV1 Identifier: `WSO2_APIM_ENV1_ID`
- ENV2 API Manager URL: `WSO2_APIM_ENV2_APIMGT_URL`
- ENV2 Gateway URL: `WSO2_APIM_ENV2_GW_URL`
- ENV2 API Manager Username: `WSO2_APIM_ENV2_APIMGT_USERNAME`
- ENV2 API Manager Password: `WSO2_APIM_ENV2_APIMGT_PASSWD`
- ENV2 Identifier: `WSO2_APIM_ENV2_ID`
- Verify SSL flag: `WSO2_APIM_VERIFY_SSL`
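A script can fail fast when the automation tool forgot to inject one of these variables. The sketch below is a hypothetical helper, not code from the repository: `load_config` and `CREATE_VARS` are illustrative names, the variable names are the ones listed for the create script, and treating `true`, `1`, and `yes` (case-insensitive) as a true SSL flag is an assumption.

```python
import os

# Variables listed for the create script; the propagate list works the same way.
CREATE_VARS = (
    "WSO2_APIM_APIMGT_URL", "WSO2_APIM_GW_URL",
    "WSO2_APIM_APIMGT_USERNAME", "WSO2_APIM_APIMGT_PASSWD",
    "WSO2_APIM_VERIFY_SSL", "WSO2_APIM_BE_PROD",
    "WSO2_APIM_BE_SNDBX", "WSO2_APIM_API_STATUS",
)


def load_config(names, environ=None):
    """Read the given variables, raising KeyError if any are missing or empty."""
    environ = os.environ if environ is None else environ
    missing = [name for name in names if not environ.get(name)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    config = dict((name, environ[name]) for name in names)
    # Interpret the SSL flag as a boolean; the accepted spellings are an assumption.
    if "WSO2_APIM_VERIFY_SSL" in config:
        flag = config["WSO2_APIM_VERIFY_SSL"].strip().lower()
        config["WSO2_APIM_VERIFY_SSL"] = flag in ("true", "1", "yes")
    return config
```

Passing an explicit `environ` mapping keeps the function easy to test; in a Jenkins job it would simply read `os.environ` as populated by EnvInject.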

The input JSON files for the `api_create.py` script are placed in the `api_definitions` folder. Their format is described in the API creation section above.

The information read from these JSON files is then merged into the API create request template loaded from `api_template.json`: the relevant fields of the template are replaced in Python with the values from the request files. The request object built from this template is then submitted to the API create/update REST endpoint.
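The template-filling step might look like the following minimal sketch. It assumes `api_template.json` is a JSON object whose `name`, `description`, `context`, and `version` keys are the ones overwritten; the actual template may contain more fields or fill them differently. `build_create_request` and `load_template` are hypothetical names.

```python
import copy
import json


def load_template(path="api_template.json"):
    """Load the create-request template once; it is reused for every API."""
    with open(path) as handle:
        return json.load(handle)


def build_create_request(template, api):
    """Return a filled copy of the template for one API from a request file.

    Assumes the template uses the same key names as the request JSON.
    """
    # Deep copy so nested template values (lists, dicts) are never shared
    # between the requests built for different APIs.
    request = copy.deepcopy(template)
    for field in ("name", "description", "context", "version"):
        request[field] = api[field]
    return request
```

Copying the template before filling it matters when one run creates several APIs: without the deep copy, a change to a nested field for one API would leak into the next request.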

During the API propagation flow, there can be scenarios where the default behavior has to be modified to suit deployment-specific details. To address this, a separate Python script, `extensions.py`, is provided. It contains the functions that are called at various points of the API propagation flow.

Currently, there are two such extension points: one controls how APIs are queried from the first environment, and the other controls how API definitions are changed before they are applied to the second environment. They are handled by the functions `propagate_filter_api_env1_by()` and `propagate_change_apidef()` respectively.

To make deployment-specific modifications, refer to the default implementations in `extensions.py` and modify them as needed.
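The way the two hooks fit into the propagation flow can be sketched as follows. The hook names come from the text above, but their signatures and bodies here are hypothetical: the filter assumes ENV1 APIs carry a `_<ENV1 id>` name suffix, the change hook simply copies the definition, and `plan_propagation` is an illustrative driver rather than part of `api_propagate.py`.

```python
def propagate_filter_api_env1_by(apis, env1_id):
    """Hypothetical filter hook: keep only the APIs that belong to ENV1.

    Assumes ENV1 APIs follow the "<Name>_<env id>" naming convention.
    """
    suffix = "_" + env1_id
    return [api for api in apis if api.get("name", "").endswith(suffix)]


def propagate_change_apidef(api, env1_id, env2_id):
    """Hypothetical change hook: by default, copy the definition unchanged."""
    return dict(api)


def plan_propagation(env1_apis, env1_id, env2_id,
                     filter_hook=propagate_filter_api_env1_by,
                     change_hook=propagate_change_apidef):
    """Apply the filter hook, then the change hook, and return ENV2 definitions."""
    selected = filter_hook(env1_apis, env1_id)
    return [change_hook(api, env1_id, env2_id) for api in selected]
```

Passing the hooks as parameters mirrors the intent of `extensions.py`: the propagation logic stays fixed while a deployment swaps in its own filtering and rewriting rules.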

When an API is created in a WSO2 API Manager deployment where an API with the same name and version already exists, the API Manager returns an error response. Therefore, before creating an API, the script has to check whether the request should actually be an update of an existing API or the addition of a new version to it.
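The check described above can be expressed as a small decision function. This sketch assumes the existing APIs have already been listed from the target deployment as dicts with `id`, `name`, and `version` keys; that shape, the function name `plan_action`, and the action labels are hypothetical. Since the PoC does not use versioning, the `add_version` branch only flags the case.

```python
def plan_action(existing_apis, name, version):
    """Decide how to apply one API definition to a deployment.

    existing_apis: list of dicts with "id", "name" and "version" keys
    (shape assumed). Returns ("update", id), ("add_version", id)
    or ("create", None).
    """
    same_name = [api for api in existing_apis if api["name"] == name]
    for api in same_name:
        if api["version"] == version:
            # Same name and version: creating it again would fail.
            return "update", api["id"]
    if same_name:
        # Another version exists; the PoC scripts do not handle this case.
        return "add_version", same_name[0]["id"]
    return "create", None
```

Keeping the decision free of HTTP calls makes it easy to test and lets the caller map each action to the corresponding Publisher REST call.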

The traditional approach to migrating APIs between deployments is the WSO2 API Manager Import/Export Tool. However, this tool does not handle the create-or-update decision on a per-API basis, as it assumes that the APIs do not yet exist in the target environment. Therefore, the standard WSO2 API Manager Publisher REST APIs are used in this CI/CD process.

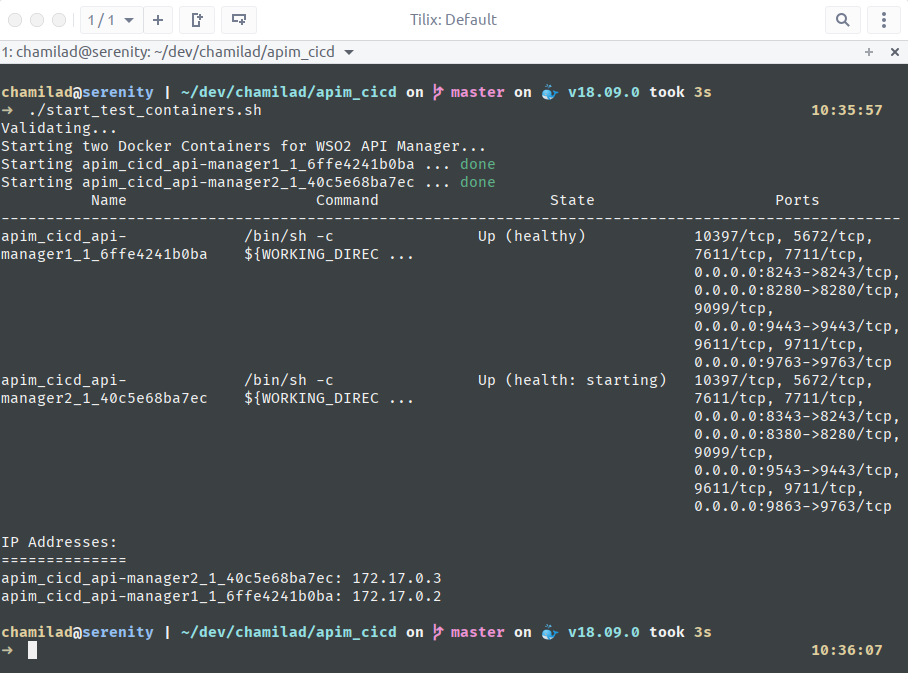

To make it easy to try out the scripts, a Docker Compose setup is provided. This `docker-compose.yml` setup contains two WSO2 API Manager 2.6.0 containers, mapped to ports 9443/8243 (`api-manager1`) and 9543/8343 (`api-manager2`).

To pull the Docker images used in the Docker Compose configuration, you need access to the WSO2 Docker Registry, `docker.wso2.com`. Instructions for obtaining access can be found on the WSO2 Docker download page.

To start this deployment, run the `start_test_containers.sh` script. It essentially runs `docker-compose up` and prints the IP addresses of the containers.
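API Manager takes a while to start inside the containers, so a test run may fail if it begins too early. A simple readiness check is to poll the mapped ports until they accept TCP connections. This is a hypothetical helper, not one of the provided scripts, and an open port only shows that a listener is up, not that every API Manager component has finished starting.

```python
import socket
import time


def wait_for_port(host, port, timeout=300.0, interval=5.0):
    """Poll until host:port accepts a TCP connection or the timeout expires.

    Returns True once a connection succeeds, False if the deadline passes.
    """
    deadline = time.time() + timeout
    while True:
        try:
            conn = socket.create_connection((host, port), timeout=interval)
            conn.close()
            return True
        except (socket.error, OSError):
            if time.time() >= deadline:
                return False
            time.sleep(interval)
```

For the Docker Compose setup, one would wait on both `wait_for_port("localhost", 9443)` and `wait_for_port("localhost", 9543)` before running the test scripts.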

The containers are attached to the default Docker bridge network on the host and therefore get IP addresses from its `172.17.0.0/16` range (the host itself is reachable at `172.17.0.1`).

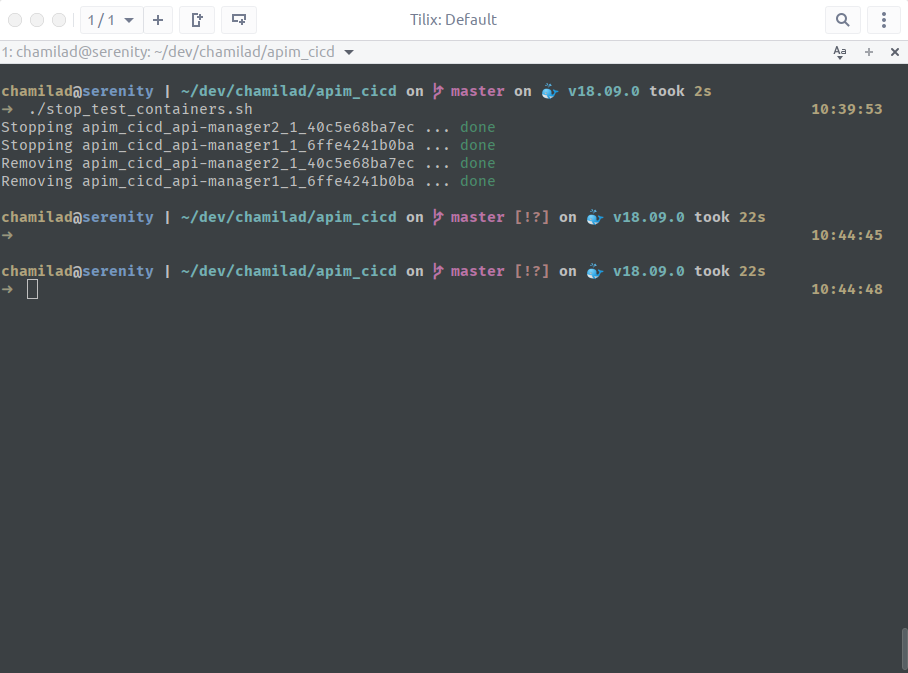

Once testing is done, tear down the deployment with the `stop_test_containers.sh` script.

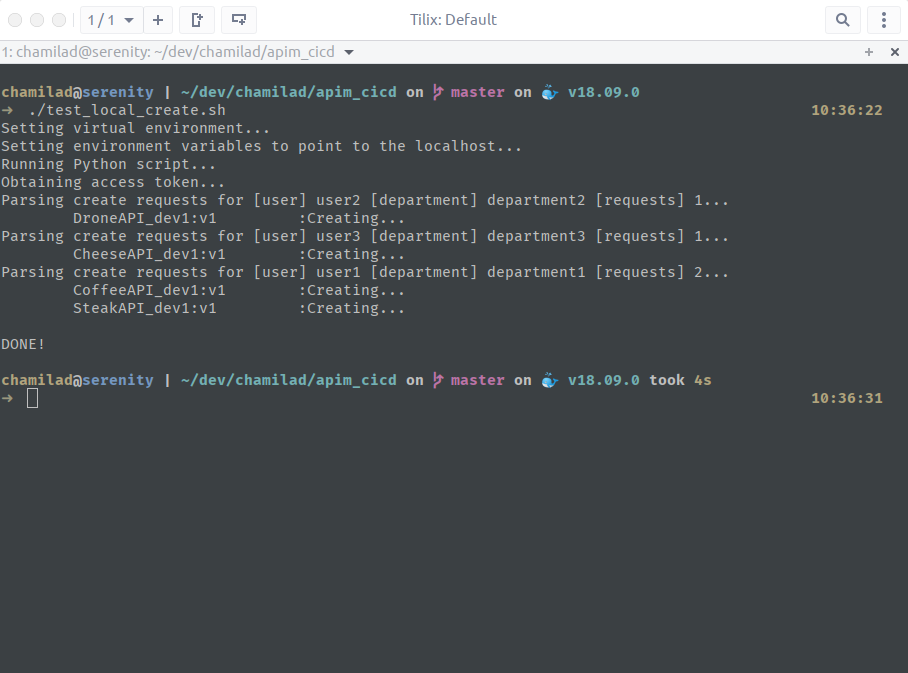

The `test_local_*.sh` scripts set the required environment variables and run the Python scripts.

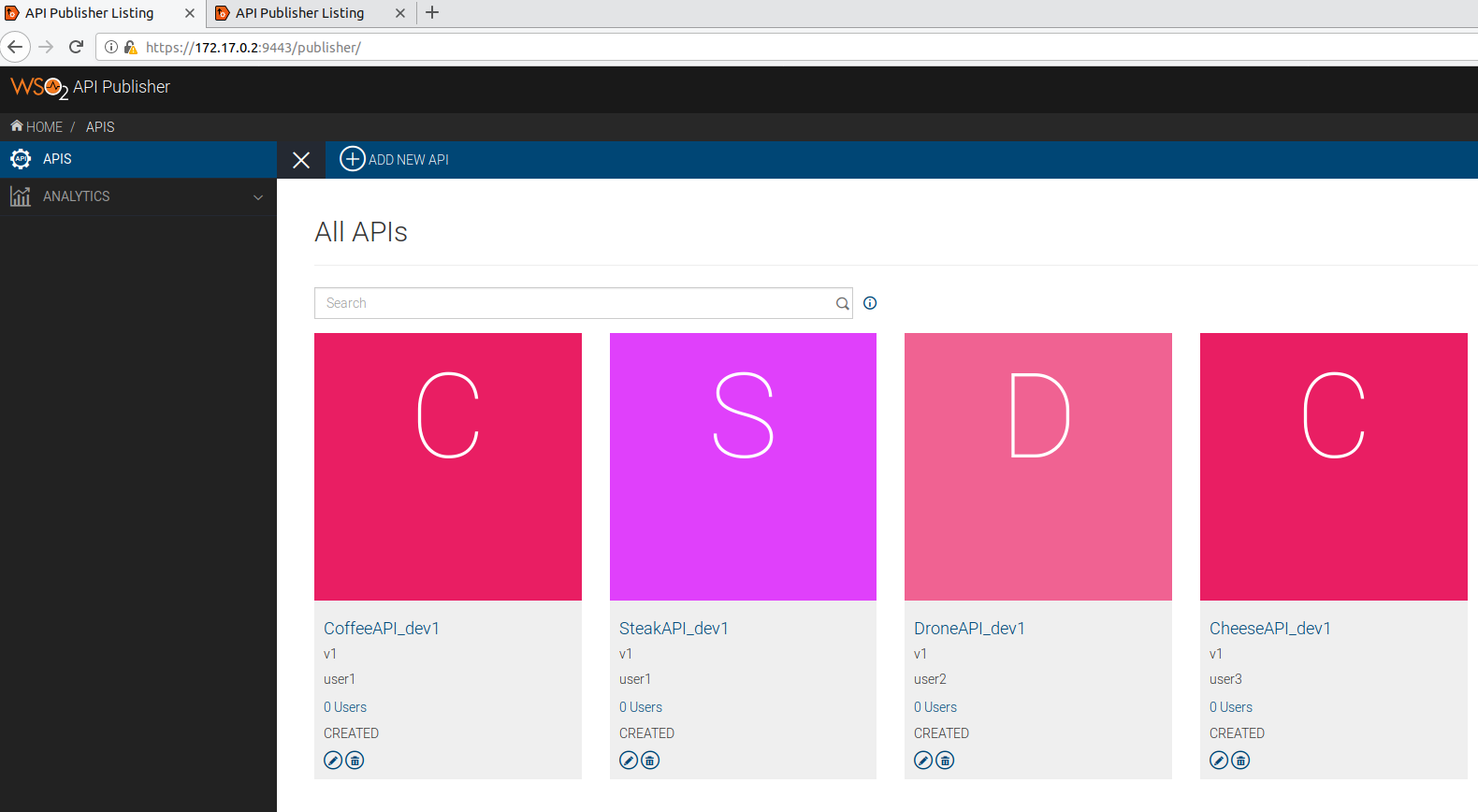

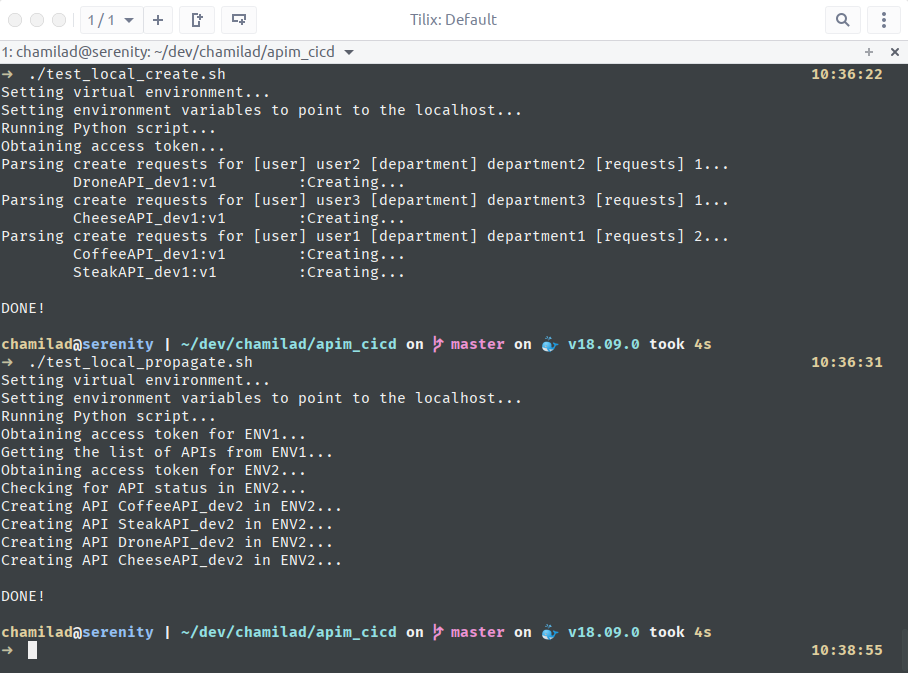

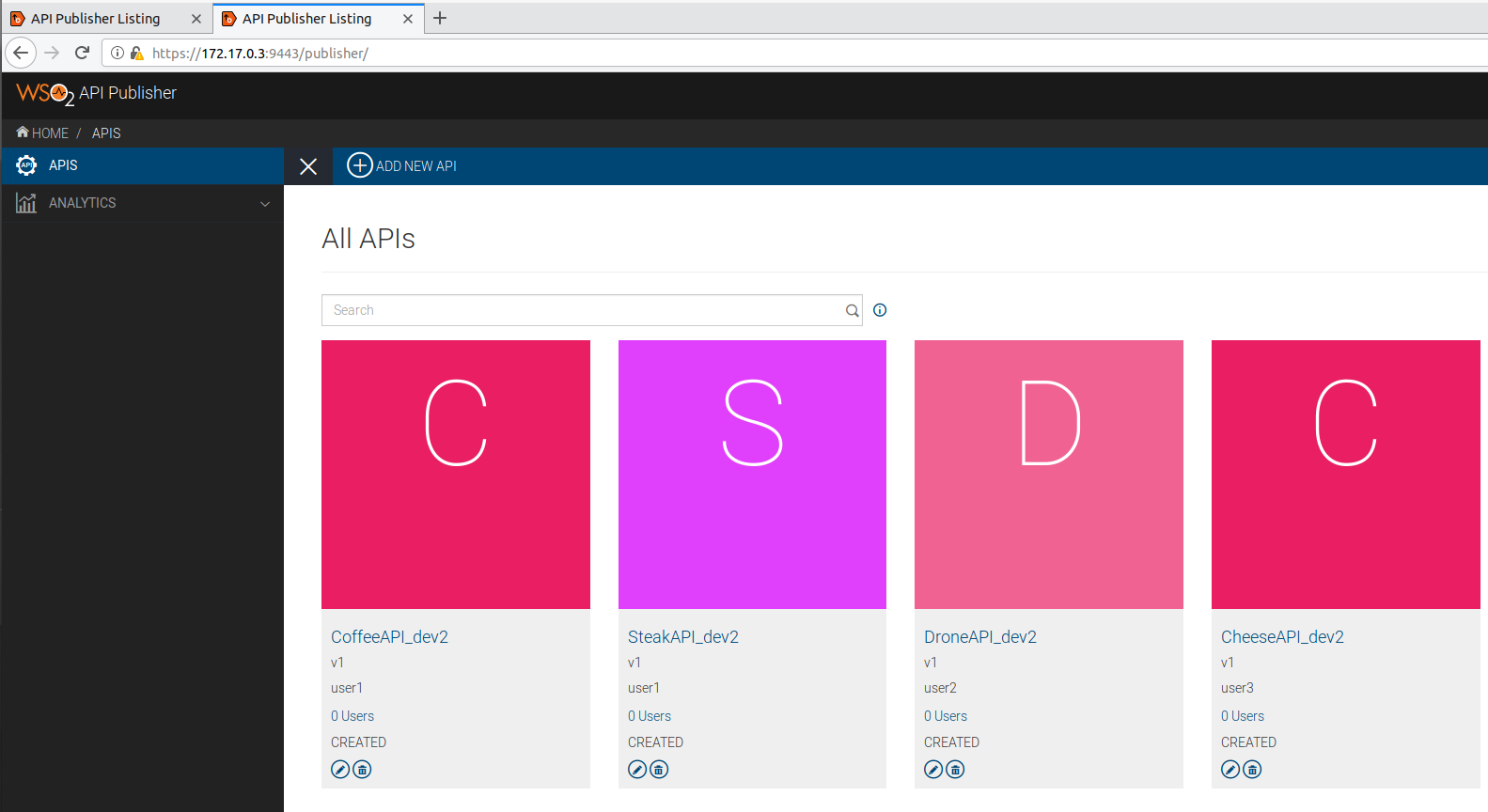

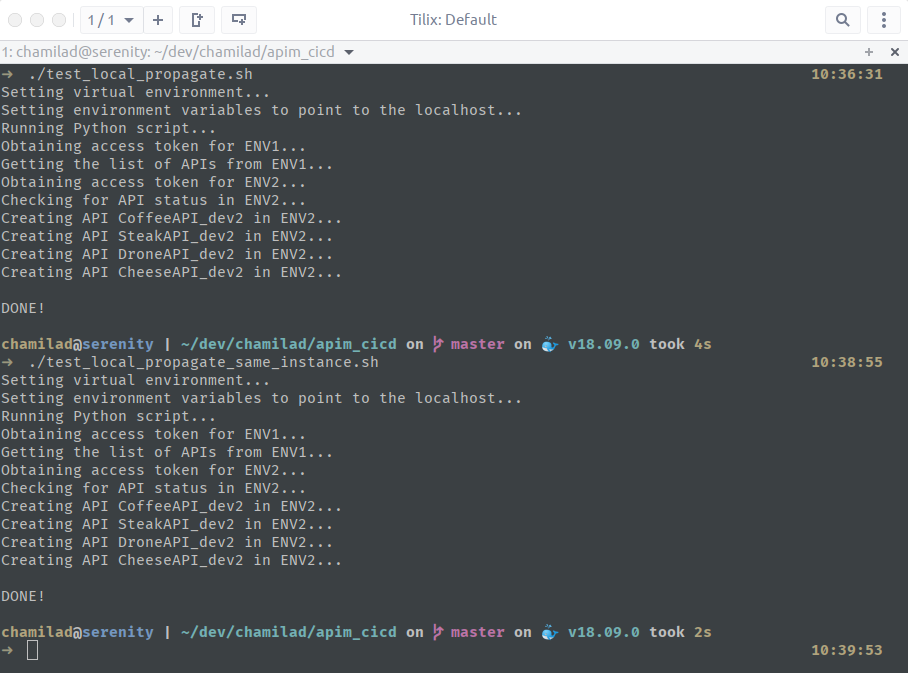

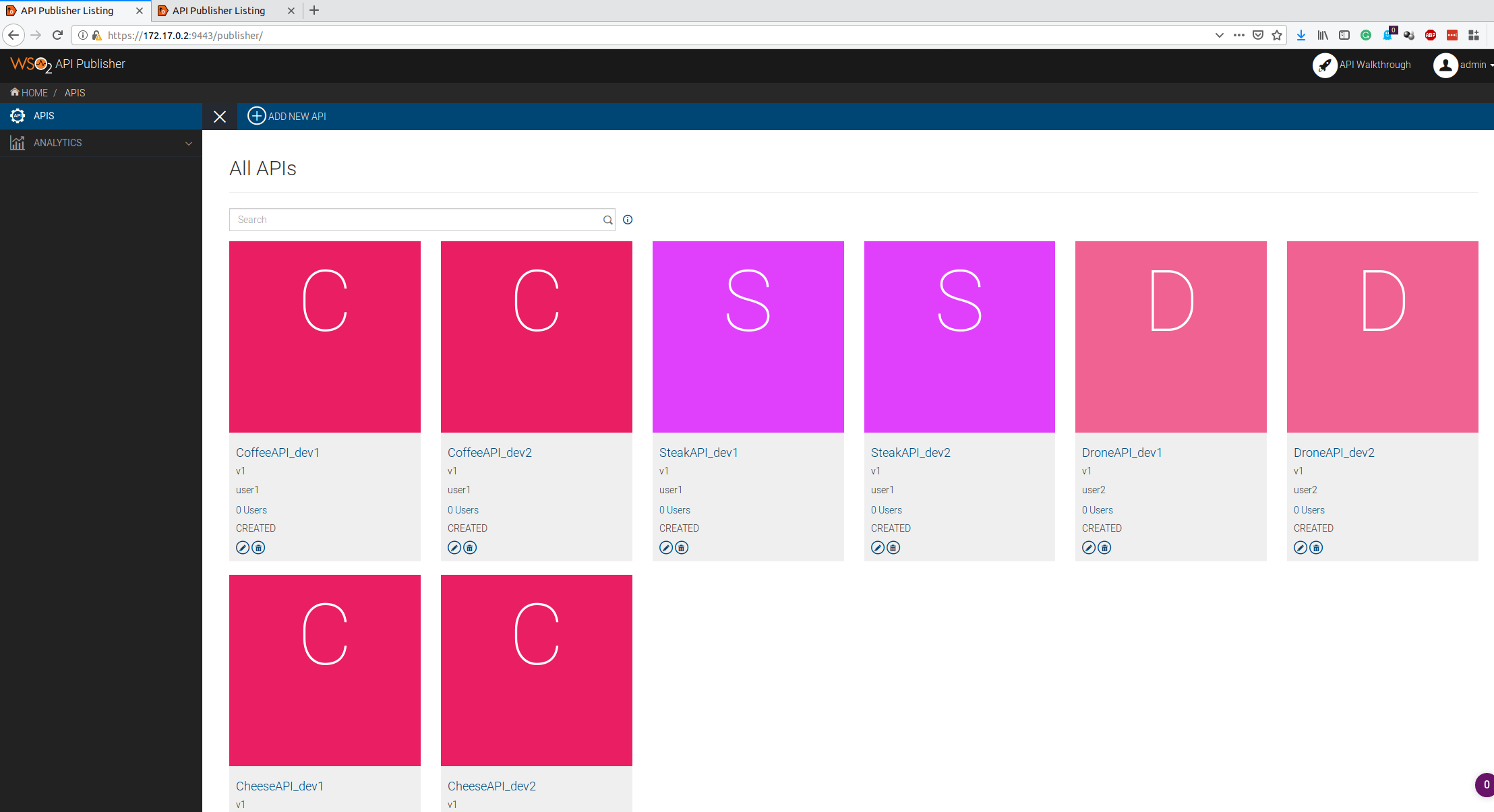

1. `test_local_create.sh`: runs the `api_create.py` script against the local setup at `localhost:9443` (`172.17.0.1` is the host's address on the default Docker bridge network, so it reaches the same ports as `localhost`).
2. `test_local_propagate.sh`: runs the `api_propagate.py` script against the two API Manager deployments on the host, the second with a port offset of 100, i.e. `172.17.0.1:9443` and `172.17.0.1:9543`.
3. `test_local_propagate_same_instance.sh`: does the same as `test_local_propagate.sh`, but with both environments pointing to the same API Manager deployment.

Start the Docker containers as described above, then execute `test_local_create.sh`, `test_local_propagate.sh`, and `test_local_propagate_same_instance.sh` in that order. You can visit the ENV1 Publisher and ENV2 Publisher pages to watch the APIs being created as the scripts run.