- 1. CVPR Paper

- 2. Video collection

- 3. Detection

- 4. Segmentation

- 5. Lip Language

- 6. Some Interesting Work

- 7. Tracking

- 8. Action Recognition

- 9. Reconstruction

- 10. Detection

- 11. Segmentation

- 12. Action Recognition

- 13. Point Cloud Representation

- 14. Summary

- 15. Reference

All the papers are available on the official website.

The offline list of papers is available at this link.

| Paper ID | Type | Title |
|---|---|---|
| 122 | Poster | Detect-and-Track: Efficient Pose Estimation in Videos |
| 255 | Poster | Multi-Cue Correlation Filters for Robust Visual Tracking |
| 281 | Spotlight | Tracking Multiple Objects Outside the Line of Sight using Speckle Imaging |
| 281 | Poster | Tracking Multiple Objects Outside the Line of Sight using Speckle Imaging |
| 369 | Oral | Total Capture: A 3D Deformation Model for Tracking Faces, Hands, and Bodies |
| 369 | Poster | Total Capture: A 3D Deformation Model for Tracking Faces, Hands, and Bodies |
| 423 | Spotlight | Fast and Accurate Online Video Object Segmentation via Tracking Parts |
| 423 | Poster | Fast and Accurate Online Video Object Segmentation via Tracking Parts |
| 678 | Poster | Learning Attentions: Residual Attentional Siamese Network for High Performance Online Visual Tracking |
| 736 | Spotlight | GANerated Hands for Real-Time 3D Hand Tracking from Monocular RGB |
| 736 | Poster | GANerated Hands for Real-Time 3D Hand Tracking from Monocular RGB |
| 890 | Poster | CarFusion: Combining Point Tracking and Part Detection for Dynamic 3D Reconstruction of Vehicles |
| 892 | Poster | Context-aware Deep Feature Compression for High-speed Visual Tracking |
| 1022 | Poster | A Benchmark for Articulated Human Pose Estimation and Tracking |
| 1194 | Poster | Hyperparameter Optimization for Tracking with Continuous Deep Q-Learning |
| 1264 | Poster | End-to-end Flow Correlation Tracking with Spatial-temporal Attention |
| 1280 | Spotlight | VITAL: VIsual Tracking via Adversarial Learning |
| 1280 | Poster | VITAL: VIsual Tracking via Adversarial Learning |
| 1304 | Poster | SINT++: Robust Visual Tracking via Adversarial Hard Positive Generation |
| 1353 | Poster | Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking |
| 1439 | Poster | Efficient Diverse Ensemble for Discriminative Co-Tracking |
| 1494 | Poster | Correlation Tracking via Joint Discrimination and Reliability Learning |
| 1676 | Spotlight | Learning Spatial-Aware Regressions for Visual Tracking |
| 1676 | Poster | Learning Spatial-Aware Regressions for Visual Tracking |
| 1679 | Poster | Fusing Crowd Density Maps and Visual Object Trackers for People Tracking in Crowd Scenes |
| 1949 | Poster | Rolling Shutter and Radial Distortion are Features for High Frame Rate Multi-camera Tracking |
| 2129 | Poster | High-speed Tracking with Multi-kernel Correlation Filters |
| 2628 | Poster | A Causal And-Or Graph Model for Visibility Fluent Reasoning in Tracking Interacting Objects |
| 2951 | Spotlight | High Performance Visual Tracking with Siamese Region Proposal Network |
| 2951 | Poster | High Performance Visual Tracking with Siamese Region Proposal Network |
| 3013 | Oral | Fast and Furious: Real Time End-to-End 3D Detection, Tracking and Motion Forecasting with a Single Convolutional Net |
| 3013 | Poster | Fast and Furious: Real Time End-to-End 3D Detection, Tracking and Motion Forecasting with a Single Convolutional Net |
| 3292 | Spotlight | MX-LSTM: mixing tracklets and vislets to jointly forecast trajectories and head poses |
| 3292 | Poster | MX-LSTM: mixing tracklets and vislets to jointly forecast trajectories and head poses |
| 3502 | Poster | A Prior-Less Method for Multi-Face Tracking in Unconstrained Videos |
| 3583 | Poster | Towards dense object tracking in a 2D honeybee hive |
| 3817 | Spotlight | Good Appearance Features for Multi-Target Multi-Camera Tracking |
| 3817 | Poster | Good Appearance Features for Multi-Target Multi-Camera Tracking |
| 3980 | Poster | A Twofold Siamese Network for Real-Time Object Tracking |

https://pan.baidu.com/s/1eSIVG90

- holoportation_ virtual 3D teleportation in real-time (Microsoft Research).mp4

- Realtime Multi-Person 2D Human Pose Estimation using Part Affinity Fields, CVPR 2017 Oral

- Full-Resolution Residual Networks (FRRNs) for Semantic Image Segmentation in Street Scenes

- YOLO v2

- DeepGlint CVPR2016

Mask R-CNN was proposed by Kaiming He et al. and is implemented in a GitHub repository.

- Mask R-CNN extends Faster R-CNN by adding a branch for predicting an object mask in parallel with the existing branch for bounding-box recognition.

- Output:

  - a class label

  - a bounding-box offset

  - an object mask

- It runs at about 5 fps, and training on COCO takes one to two days on a single 8-GPU machine.

- It applies to several tasks: instance segmentation, bounding-box object detection, and person keypoint detection (human pose estimation).

  - By treating each keypoint as a one-hot binary mask, it can estimate human pose.

- It belongs to the instance segmentation field.
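
To make the box-offset output and the one-hot keypoint idea concrete, here is a small pure-Python sketch. It is not taken from the Mask R-CNN code: the box offsets follow the usual Faster R-CNN (dx, dy, dw, dh) parameterization, while the function names and the default mask size `m = 56` are my own illustrative choices (treat `m` as a tunable parameter).

```python
import math

# Hypothetical helpers for illustration only; not the official Mask R-CNN code.


def decode_box(anchor, deltas):
    """Apply Faster R-CNN-style offsets (dx, dy, dw, dh) to a reference box.

    Boxes are (x_center, y_center, width, height).
    """
    xa, ya, wa, ha = anchor
    dx, dy, dw, dh = deltas
    return (xa + dx * wa, ya + dy * ha, wa * math.exp(dw), ha * math.exp(dh))


def keypoint_to_onehot(kx, ky, box, m=56):
    """Encode one keypoint as an m x m one-hot mask inside its RoI box.

    box = (x0, y0, x1, y1) with x1 > x0 and y1 > y0; only the cell that
    contains the keypoint is set to 1, every other cell stays 0.
    """
    x0, y0, x1, y1 = box
    col = min(m - 1, max(0, int((kx - x0) / (x1 - x0) * m)))
    row = min(m - 1, max(0, int((ky - y0) / (y1 - y0) * m)))
    mask = [[0] * m for _ in range(m)]
    mask[row][col] = 1
    return mask


def onehot_to_keypoint(heatmap, box):
    """Decode an m x m heatmap: take the argmax cell and map its centre back to image coordinates."""
    m = len(heatmap)
    x0, y0, x1, y1 = box
    row, col = max(((r, c) for r in range(m) for c in range(m)),
                   key=lambda rc: heatmap[rc[0]][rc[1]])
    return (x0 + (col + 0.5) / m * (x1 - x0), y0 + (row + 0.5) / m * (y1 - y0))


if __name__ == "__main__":
    box = (0, 0, 112, 112)
    mask = keypoint_to_onehot(41, 71, box)
    print(onehot_to_keypoint(mask, box))  # approximately (41.0, 71.0)
```

Decoding recovers the keypoint only up to the size of one mask cell, which is why a reasonably fine mask resolution matters for pose accuracy.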

I have run Mask R-CNN on a bus scene.

It performs well. These are all the results.

It is amazing that lip-language recognition can be trained.

- Given the audio of President Barack Obama, we synthesize a high-quality video of him speaking with accurate lip sync. See video.

- Takes a single photograph of a child as input and automatically produces a series of age-progressed outputs between 1 and 80 years of age, accounting for pose, expression, and illumination. See video.

6.3. PanoCatcher

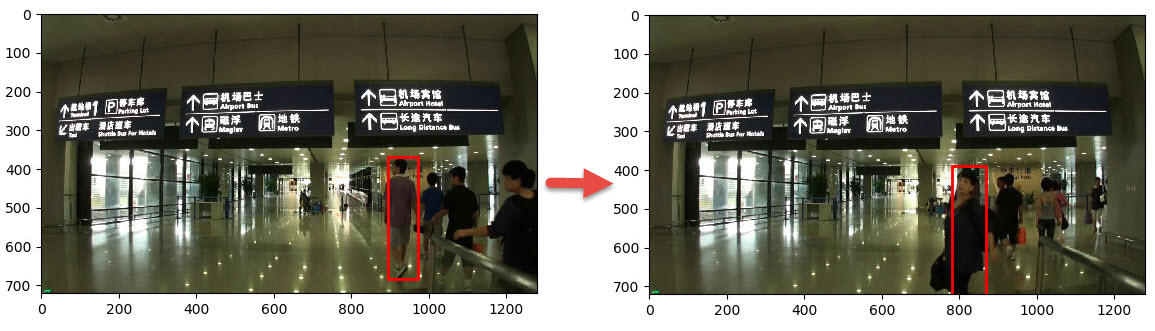

It is a deep-learning-based tracking method. The authors designed a network that contains a correlation filter layer and solved the problem of backpropagating through it.

- I have tried this method, but it does not work well and fails on some of my test cases, as follows.

- Abstract: "We present a framework that allows the explicit incorporation of global context within CF trackers. We reformulate the original optimization problem and provide a closed form solution for single and multidimensional features in the primal and dual domain."

- Resources: video, paper, MATLAB code, Python code

- Advantages:

  - It is an end-to-end tracking method that can be trained directly.

  - It runs in real time.

- Disadvantages:

  - It drifts when the object is occluded.

  - It estimates the scale incorrectly when the object becomes larger or smaller.
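
The closed-form correlation-filter solution mentioned in the abstract can be illustrated with a toy example. This is a minimal 1D, single-channel sketch in pure Python, assuming the standard ridge-regression (MOSSE-style) filter solved per frequency in the Fourier domain; the optional `context` patches add their spectral energy to the denominator, in the spirit of the global-context idea from the abstract. It is not the authors' code: the function names, the naive DFT, and the toy signal are my own illustrative choices, and real trackers work on 2D multi-channel features with an FFT, cosine windows, and online updates.

```python
import cmath
import math

# Hypothetical toy implementation for illustration; not the authors' tracker.


def dft(x):
    """Naive O(N^2) discrete Fourier transform; fine for short toy signals."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft(); returns complex samples."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def gaussian_label(n, sigma=1.0):
    """Desired response: a circular Gaussian peaked at index 0."""
    return [math.exp(-0.5 * (min(t, n - t) / sigma) ** 2) for t in range(n)]


def train_filter(target, label, lam1=1e-4, context=(), lam2=0.0):
    """Closed-form ridge-regression correlation filter, solved per frequency.

    Returns the conjugated filter
        H*[k] = Y[k] conj(A0[k]) / (|A0[k]|^2 + lam1 + lam2 * sum_i |Ai[k]|^2),
    where A0 is the target patch and Ai are optional context patches whose
    response the filter is pushed toward zero.
    """
    a0, y = dft(target), dft(label)
    ctx = [dft(c) for c in context]
    h_conj = []
    for k in range(len(target)):
        denom = abs(a0[k]) ** 2 + lam1 + lam2 * sum(abs(ai[k]) ** 2 for ai in ctx)
        h_conj.append(y[k] * a0[k].conjugate() / denom)
    return h_conj


def detect(h_conj, patch):
    """Correlate the filter with a new patch; the response argmax is the estimated shift."""
    z = dft(patch)
    response = [r.real for r in idft([h * zk for h, zk in zip(h_conj, z)])]
    peak = max(range(len(response)), key=response.__getitem__)
    return peak, response


if __name__ == "__main__":
    n = 32
    target = [0.0] * n
    target[0], target[3] = 1.0, 0.5  # toy 1D "appearance" with no spectral zeros
    shift = 7
    moved = [target[(m - shift) % n] for m in range(n)]  # target moved right by 7
    h = train_filter(target, gaussian_label(n))
    peak, _ = detect(h, moved)
    print("estimated shift:", peak)  # prints "estimated shift: 7"
```

Because the toy target is only shifted circularly, the response peak lands at the shift. Setting `lam2 > 0` with context patches shrinks the filter at frequencies where the surroundings have energy, which lowers the filter's response to background patterns.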

My opinion:

- Tracking should combine features of the object itself with context features.

- Bas

- Mask R-CNN is amazing, but it is not fast enough for real-time detection.

- There are lots of computer vision tasks that still need to be done, and only a few have been finished. Object recognition is the simplest task; it is largely solved, and on some benchmarks recognition accuracy exceeds that of humans. But the majority of tasks still need to be done, such as action recognition, action prediction, 3D object recognition, 3D object representation, 3D action recognition, and the representation of speech, smell, touch, and vision. Machine vision is the core task for robot intelligence, so don't worry about running out of things to do in this field.