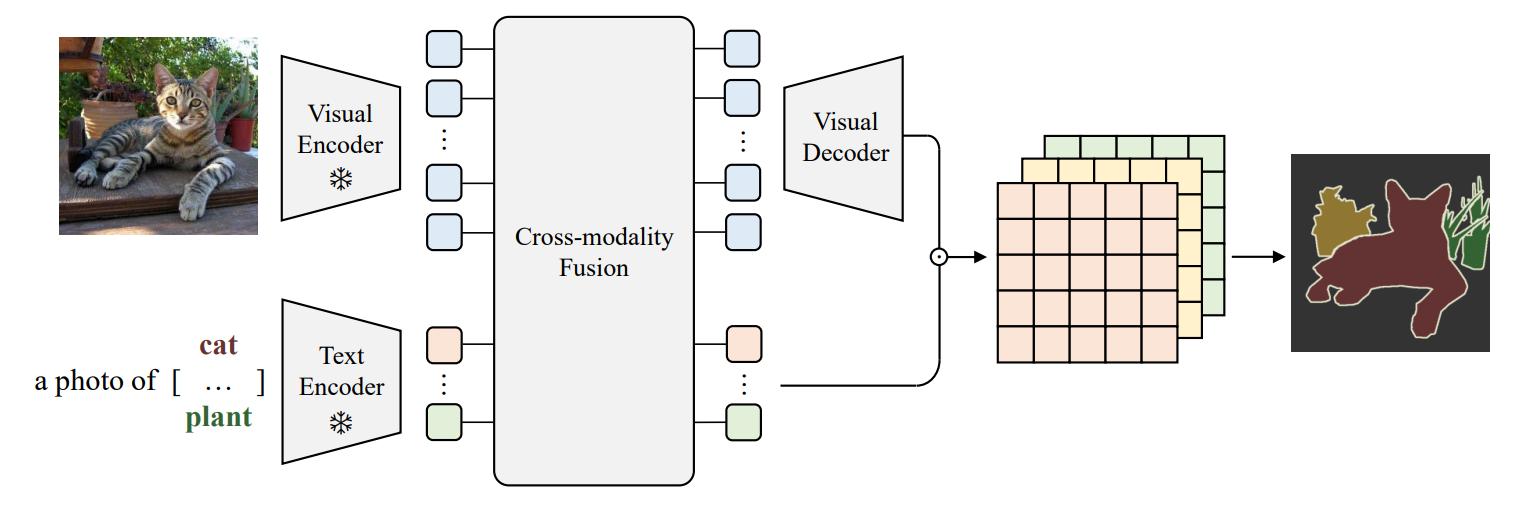

This repository contains the official PyTorch implementation of the BMVC 2022 oral paper Open-vocabulary Semantic Segmentation with Frozen Vision-Language Models by Chaofan Ma*, Yuhuan Yang*, Yanfeng Wang, Ya Zhang, and Weidi Xie.

For more information, check out the project page and the paper on arXiv.

- python==3.7.11

- torch==1.9.0

- torchvision==0.10.0

- clip (from https://github.com/openai/CLIP)

- einops==0.3.2

- timm==0.4.12

- albumentations==1.1.0

- opencv-python==4.5.5.64

As in LSeg, we follow HSNet for data preparation. The datasets should be placed in the following directory structure:

For the PASCAL-$5^i$ dataset:

    dataset_root
    ├── SegmentationClassAug
    └── VOCdevkit
        └── VOC2012
            ├── Annotations
            ├── ImageSets
            ├── JPEGImages
            ├── SegmentationClass
            └── SegmentationObject

For the COCO-$20^i$ dataset:

    dataset_root
    ├── annotation
    ├── train2014
    └── val2014

For more details, such as how to download the datasets, please refer to the HSNet dataset preparation instructions.
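Before training, it can help to confirm that `dataset_root` matches the layout described above. The following is a minimal sketch, not part of this repo: the helper name `missing_dirs` and the `EXPECTED` table are hypothetical, and the nesting under `VOCdevkit/VOC2012` is assumed from the directory tree.

```python
from pathlib import Path

# Expected sub-directories relative to dataset_root.
# Assumption: the PASCAL folders nest as VOCdevkit/VOC2012/<name>.
EXPECTED = {
    "pascal": [
        "SegmentationClassAug",
        "VOCdevkit/VOC2012/Annotations",
        "VOCdevkit/VOC2012/ImageSets",
        "VOCdevkit/VOC2012/JPEGImages",
        "VOCdevkit/VOC2012/SegmentationClass",
        "VOCdevkit/VOC2012/SegmentationObject",
    ],
    "coco": ["annotation", "train2014", "val2014"],
}


def missing_dirs(dataset_name, dataset_root):
    """Return the expected sub-directories that are absent under dataset_root."""
    root = Path(dataset_root)
    return [rel for rel in EXPECTED[dataset_name] if not (root / rel).is_dir()]
```

An empty list from `missing_dirs("pascal", "your/pascal/or/coco/dataset_root")` means every expected folder was found. The check only looks at directory names, not at the files inside them.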

    python train.py --dataset_name {pascal, coco} \
        --dataset_root your/pascal/or/coco/dataset_root \
        --fold {0, 1, 2, 3}

    python test.py --dataset_name {pascal, coco} \
        --dataset_root your/pascal/or/coco/dataset_root \
        --fold {0, 1, 2, 3} \
        --test_with_org_resolution \
        --load_ckpt_path path/to/saved/checkpoint

Currently, the training code does not save model checkpoints. Add the saving logic yourself, then pass the saved checkpoint to `test.py` via `--load_ckpt_path` for evaluation.
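Since checkpoint saving is left to the user, here is a minimal sketch of one common PyTorch pattern. The helper names `save_checkpoint` and `load_checkpoint` and the dictionary keys are hypothetical and not part of this repo. The format that `test.py` expects at `--load_ckpt_path` is not documented here, so check how it reads the file and adapt the saved keys accordingly (it may, for example, expect a bare `state_dict`).

```python
import os

import torch
import torch.nn as nn


def save_checkpoint(model, optimizer, epoch, path):
    """Write model and optimizer state to `path`, creating parent folders."""
    ckpt_dir = os.path.dirname(path)
    if ckpt_dir:
        os.makedirs(ckpt_dir, exist_ok=True)
    torch.save(
        {
            "epoch": epoch,
            "model": model.state_dict(),          # assumed key name
            "optimizer": optimizer.state_dict(),  # assumed key name
        },
        path,
    )


def load_checkpoint(model, path, device="cpu"):
    """Restore model weights saved by save_checkpoint; return the saved epoch."""
    ckpt = torch.load(path, map_location=device)
    model.load_state_dict(ckpt["model"])
    return ckpt["epoch"]


if __name__ == "__main__":
    # Stand-in model for illustration; in practice pass the model built in train.py.
    net = nn.Linear(4, 2)
    opt = torch.optim.SGD(net.parameters(), lr=0.1)
    save_checkpoint(net, opt, epoch=0, path="checkpoints/example.pth")
```

Calling `save_checkpoint` at the end of each epoch, or whenever validation mIoU improves, is a typical choice. Saving the optimizer state is only needed if you plan to resume training.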

If this code is useful for your research, please consider citing:

    @inproceedings{ma2022fusioner,
      title     = {Open-vocabulary Semantic Segmentation with Frozen Vision-Language Models},
      author    = {Chaofan Ma and Yuhuan Yang and Yanfeng Wang and Ya Zhang and Weidi Xie},
      booktitle = {British Machine Vision Conference},
      year      = {2022}
    }

Many thanks to the code bases of LSeg, CLIP, Segmenter, and HSNet.