Chao-Yuan Wu, Philipp Krähenbühl, CVPR 2021

@inproceedings{lvu2021,

Author = {Chao-Yuan Wu and Philipp Kr\"{a}henb\"{u}hl},

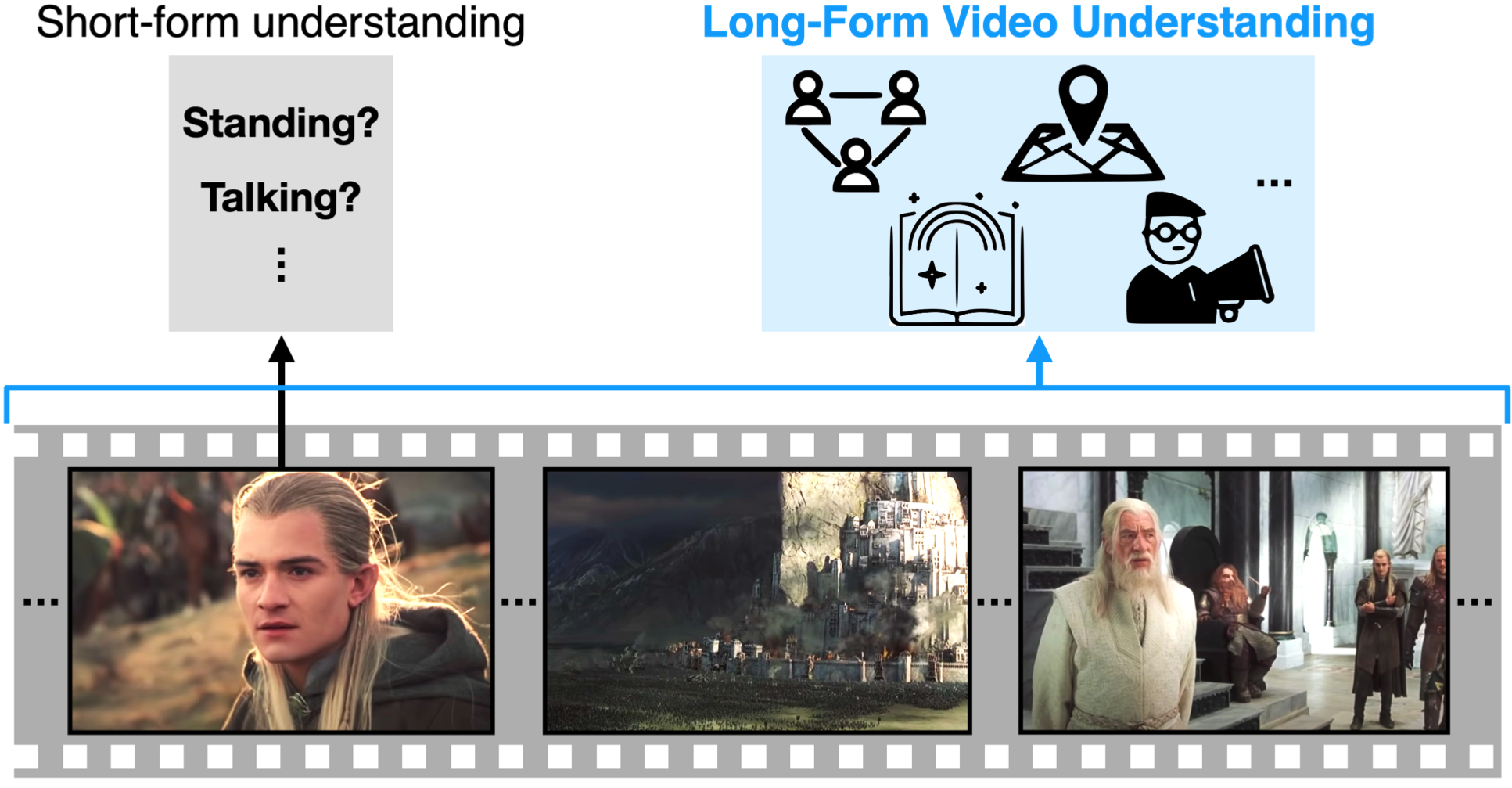

Title = {{Towards Long-Form Video Understanding}},

Booktitle = {{CVPR}},

Year = {2021}}This repo implements Object Transformers for long-form video understanding.

Please organize data/ as follows:

data
|_ ava
|_ features
|_ instance_meta
|_ lvu_1.0
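Before running any script, it can help to confirm that the layout above is in place. The following is a minimal, hypothetical helper (not part of this repo). It only checks that the four sub-directories exist under data/ and does not validate their contents.

```python
from pathlib import Path

# Sub-directories this README asks for under data/.
EXPECTED_SUBDIRS = ["ava", "features", "instance_meta", "lvu_1.0"]


def missing_data_dirs(root="data"):
    """Return the expected sub-directories that are absent under `root`, in order."""
    root_path = Path(root)
    return [name for name in EXPECTED_SUBDIRS if not (root_path / name).is_dir()]
```

Calling missing_data_dirs() from the repo root returns an empty list once everything is in place.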

ava, features, and instance_meta can be found in this Google Drive folder.

lvu_1.0 can be found here.

Please also download the pre-trained weights from this Google Drive folder and put them in pretrained_models/.

bash run_pretrain.sh

This pretrains on a small demo dataset, data/instance_meta/instance_meta_pretrain_demo.pkl, as an example. To pretrain on a larger dataset (e.g., the latest full version of MovieClips), please follow the file format of this demo file.
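To follow the demo file's format, you first need to see what it contains. Below is a minimal, hypothetical sketch (not part of this repo) that loads a pickle and summarizes its top-level structure. It assumes nothing about the file beyond it being a standard Python pickle; if the file stores NumPy or other library objects, those libraries must be installed to unpickle it. Only load pickles from sources you trust.

```python
import pickle
from pprint import pformat


def summarize_pickle(path, max_items=3):
    """Load a pickle and return a short text summary of its top-level structure."""
    with open(path, "rb") as f:
        obj = pickle.load(f)
    lines = [f"type: {type(obj).__name__}"]
    if isinstance(obj, dict):
        # For dicts, show the key count and the value type of the first few keys.
        lines.append(f"num keys: {len(obj)}")
        for key in list(obj)[:max_items]:
            lines.append(f"  {key!r}: {type(obj[key]).__name__}")
    elif isinstance(obj, (list, tuple)):
        # For sequences, show the length and a truncated preview of the first few items.
        lines.append(f"num items: {len(obj)}")
        for item in obj[:max_items]:
            lines.append("  " + pformat(item, depth=2, width=100)[:200])
    return "\n".join(lines)
```

For example, print(summarize_pickle("data/instance_meta/instance_meta_pretrain_demo.pkl")) gives a quick view of the structure a larger pretraining file would need to mirror.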

bash run_ava.sh

This should achieve 31.0 mAP.

bash run.sh [1-9]

The argument (an integer from 1 to 9) selects which task to run. Please see run.py for details.
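To sweep all tasks, a small driver can call the script once per index. The sketch below is a hypothetical convenience wrapper, not part of the repo. It assumes it is run from the repo root, where run.sh lives, and that the runs can safely execute one after another. It stops at the first command that exits with an error.

```python
import subprocess

NUM_TASKS = 9  # run.sh takes a task index from 1 to 9, per this README


def task_commands(tasks=range(1, NUM_TASKS + 1)):
    """Build the `bash run.sh <k>` command for each requested task index."""
    cmds = []
    for k in tasks:
        if not 1 <= k <= NUM_TASKS:
            raise ValueError(f"task index must be in 1..{NUM_TASKS}, got {k}")
        cmds.append(["bash", "run.sh", str(k)])
    return cmds


def run_all(tasks=range(1, NUM_TASKS + 1), dry_run=False):
    """Run the tasks sequentially; check=True raises on the first non-zero exit."""
    for cmd in task_commands(tasks):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

run_all(dry_run=True) only prints the nine commands, while run_all([1, 2]) actually runs the first two tasks.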

This implementation largely borrows from Huggingface Transformers. Please consider citing it if you use this repo.