PyTorch implementation of Variational AutoEncoders (VAEs) and conditional Variational AutoEncoders (cVAEs).

The model is implemented in PyTorch and trained on MNIST (a dataset of handwritten digits). The encoders

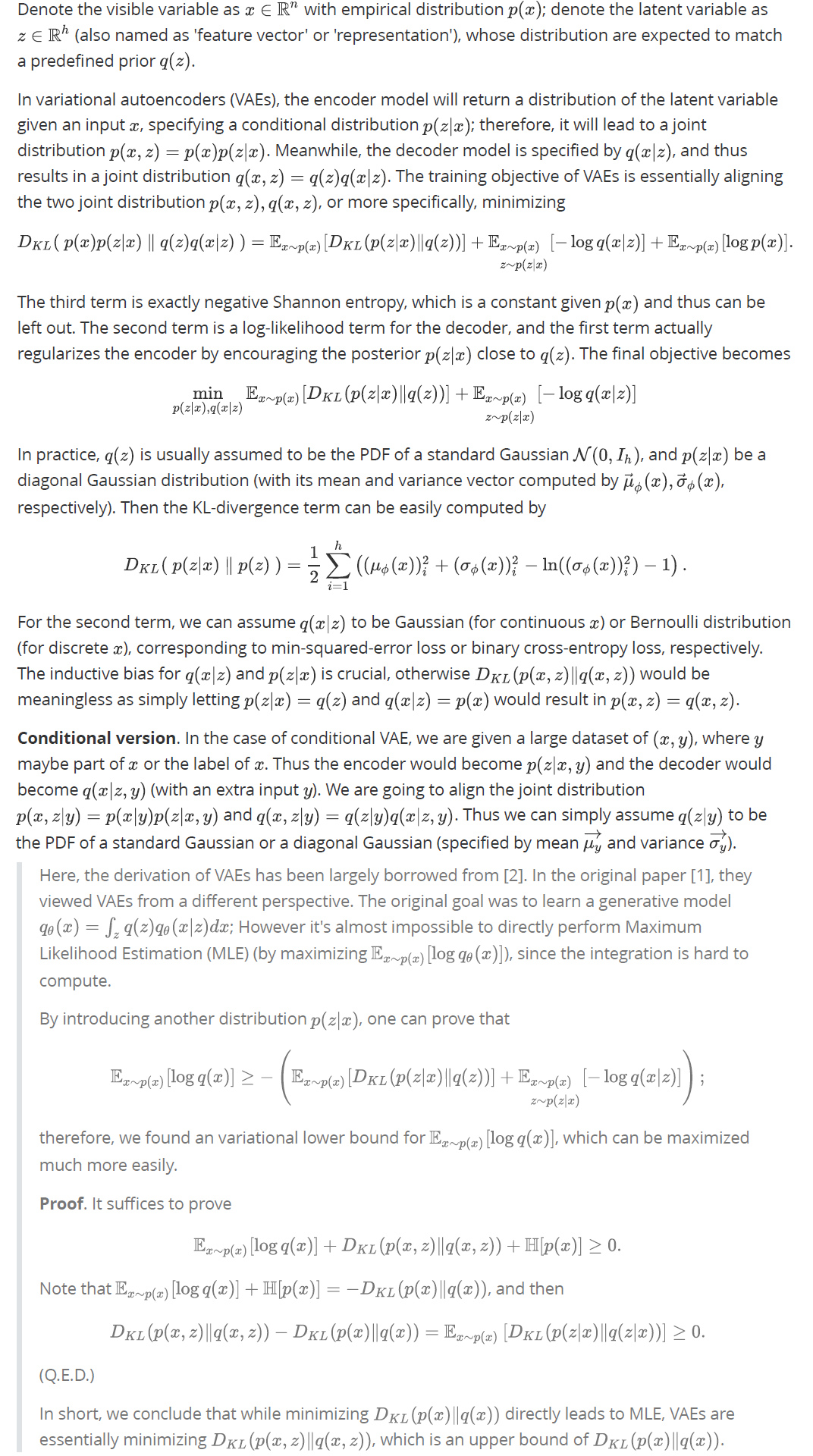

Samples generated by VAE:

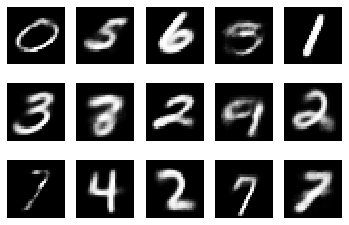

Samples generated by conditional VAE.
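As a rough illustration of how class-conditional samples like these are usually produced, the sketch below draws latent codes from a standard normal prior, concatenates a one-hot digit label, and decodes the result. It is a minimal sketch under stated assumptions, not the code in this repository: `ToyConditionalDecoder`, `sample_digits`, the latent size of 20, and the layer widths are all hypothetical, and the decoder here is untrained, so its outputs are noise rather than digits.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

LATENT_DIM = 20   # assumed latent size, not taken from the repository
NUM_CLASSES = 10  # MNIST digits 0-9


class ToyConditionalDecoder(nn.Module):
    """Hypothetical decoder: maps (z, one-hot label) to a 28x28 image."""

    def __init__(self, latent_dim=LATENT_DIM, num_classes=NUM_CLASSES, hidden=256):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(latent_dim + num_classes, hidden),
            nn.ReLU(),
            nn.Linear(hidden, 28 * 28),
            nn.Sigmoid(),  # keep pixel intensities in [0, 1]
        )

    def forward(self, z, y_onehot):
        # Condition on the label by concatenating it to the latent code.
        x = self.net(torch.cat([z, y_onehot], dim=1))
        return x.view(-1, 1, 28, 28)


@torch.no_grad()
def sample_digits(decoder, digit, n_samples=8, latent_dim=LATENT_DIM):
    """Draw z from the standard normal prior and decode it for one digit class."""
    z = torch.randn(n_samples, latent_dim)
    labels = torch.full((n_samples,), digit, dtype=torch.long)
    y_onehot = F.one_hot(labels, num_classes=NUM_CLASSES).float()
    return decoder(z, y_onehot)


decoder = ToyConditionalDecoder().eval()  # untrained stand-in for a trained decoder
images = sample_digits(decoder, digit=3)
print(images.shape)  # torch.Size([8, 1, 28, 28])
```

An unconditional VAE would sample in the same way but without the label input: the decoder receives only `z`, so the digit class cannot be chosen in advance.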

To train the model, run:

    cd Models/VAE
    python train_VAE.py  # or train_cVAE.py

To use the models, run the Jupyter notebook Demo.ipynb to see a few illustrations.

[1] Kingma, D. P., & Welling, M. (2013). Auto-Encoding Variational Bayes. arXiv:1312.6114.

[2] Su, J. (2018, March 28). Variational Auto-Encoders: from a Bayesian perspective (in Chinese). Blog post. Retrieved from https://kexue.fm/archives/5343