This repository provides a model for change point learning and online detection. The algorithms are implemented with R and C++, model definitions and proves are provided in this README file.

- Introduction

- Usage

- R version

- C++ version (in development)

- Reference

- License

Keywords:

Bayesian statistics; graphical model; dynamic Bayesian network; infinite state hidden Markov model; change point; survival analysis;

Development Note: For now only exact learning method with univariate-Gaussian observation and Weibull segment duration is supported. See R Examples for demonstration.

The process of Bayesian online change point detection proposed by Adam and MacKay1 is in essence an filtering process on an infinite state hidden Markov model, in which the observed time series can be split into a set of connected segments, each segment is generated by a hidden model, called "the observation model"(there are infinitely many possible ways of segmentation thus infinitely many possible observation models). A "change point" is defined as the beginning time index of a new segment. A "duration" is defined as the length of a segment, duration is generated from a model called "the duration model".

Usually the duration model is assumed exponentially distributed, so that the hazard rate, or the probability of a change point occurring, w.r.t time is constant. From survival analysis perspective, a constant hazard rate is good for capturing random failures, but fails to capture early-life, wear-out and bathtub failures, which are more common in real applications.

This repository aims at extend the model's flexibility to make it better fit real life scenarios. The included functionalities are:

- For online change point detection, allow for both filtering and fixed-lag smoothing, so that users can trade off latency with accuracy.

- For offline duration model learning, allow distributions with non-constant hazard rates, such as Weibull, Gamma, log-Normal ...

- For offline duration model learning, allow learning with both exact method and simulation method. Exact method can provide the MAP estimate of the target random variable, but requires

memory. Simulation method can provide posterior distribution of the target random variable, and it's requires only

memory. Simulation method can provide posterior distribution of the target random variable, and it's requires only  memory.

memory. - For observation model, allow models with various structures, such as (Bayesian)linear regression, multivariate Gaussian, multinomial ...

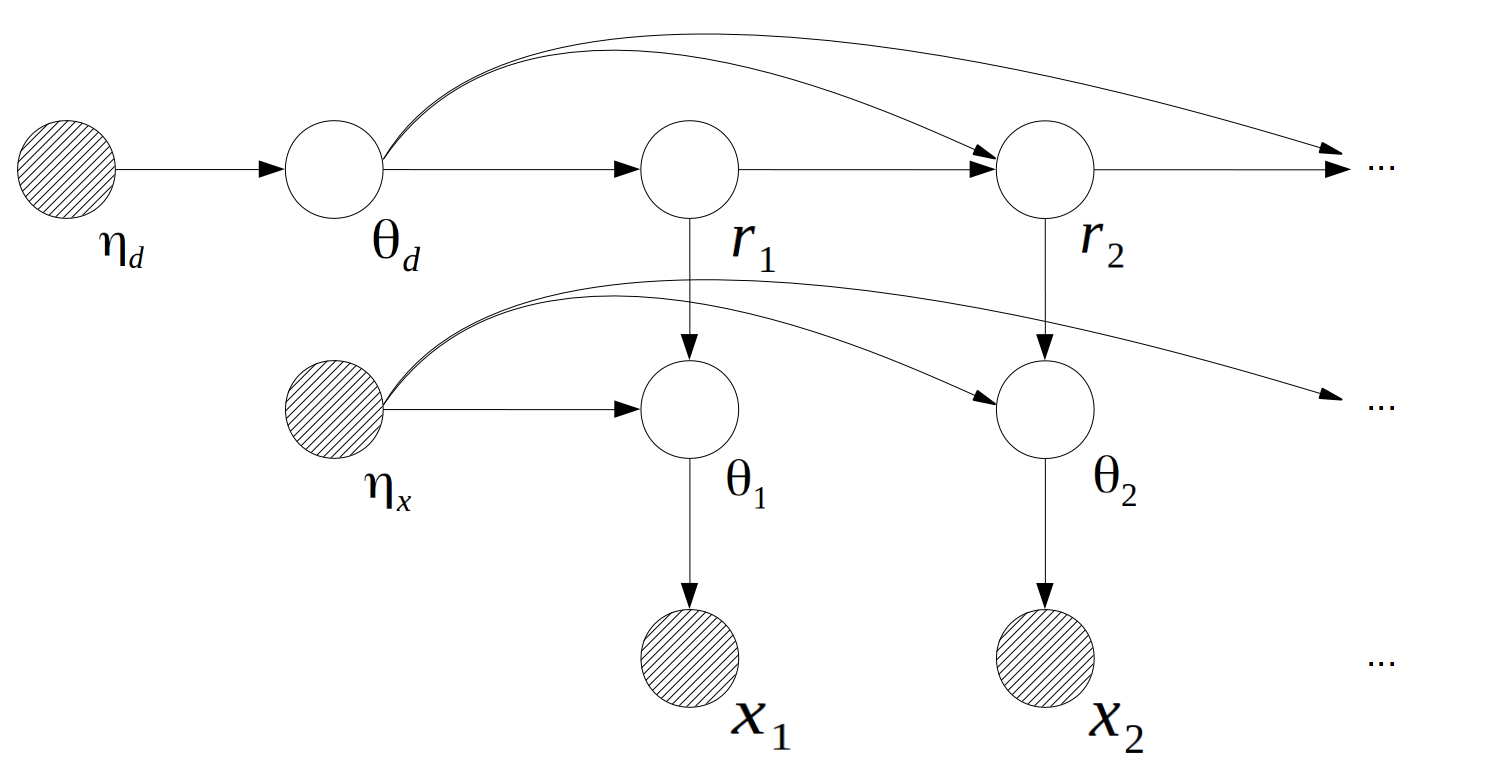

The graphical representation of the generating process of  shows as follow:

shows as follow:

Where  is the time length since the last change point at

is the time length since the last change point at  , called "the run length at time

, called "the run length at time  ".

".  is the parameter of the observation model at time

is the parameter of the observation model at time  ,

,  .

.  is the parameter of the duration model,

is the parameter of the duration model,  .

.  is the hyper-parameter of

is the hyper-parameter of  ,

,  is the hyper-parameter of

is the hyper-parameter of  .

.

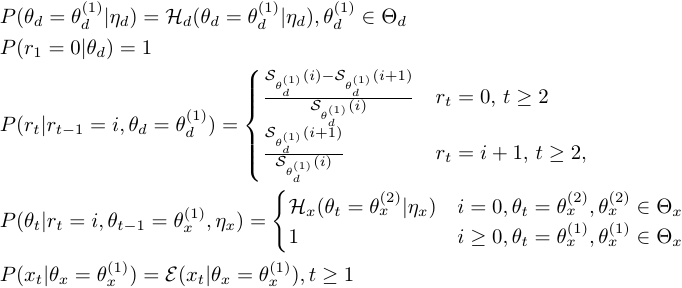

The conditional probability distributions are:

Where  is the prior for the duration distribution,

is the prior for the duration distribution,  is the prior for the observation distributions.

is the prior for the observation distributions.  is the observation distribution at time

is the observation distribution at time  ,

,  is the survival function of the duration distribution when

is the survival function of the duration distribution when  .

.

The model can fit to various scenarios by flexibly adjusting the forms of  ,

,  ,

,  and

and  .

.

For online detection problem, the goal is to estimate:

Where  is the time lag between most recent observation and the estimation horizon. The detection problem is equivalent to filtering when

is the time lag between most recent observation and the estimation horizon. The detection problem is equivalent to filtering when  , to fixed-lag smoothing when

, to fixed-lag smoothing when  . The probability of a change point occurring at time

. The probability of a change point occurring at time  is

is  , and when

, and when  , we say "at time

, we say "at time  there is a change point".

there is a change point".

For offline learning problem, the goal is then to estimate:

A typical scenario is we first need to learn  from historical data, then apply the learned

from historical data, then apply the learned  to online detection problems. See R Examples.

to online detection problems. See R Examples.

The code has 2 versions, R and C++. Both versions provide the same functionalities. R version is better for educational purpose, but runs relatively slower.

Load the script directly from source:

source("https://raw.githubusercontent.com/chenhaotian/Changepoints/master/R/changepoints.r") This section lists the only the general descriptions. See the section R Examples for implementation notes.

| Name | Description |

|---|---|

| bcp | Create a Bayesian change point object for offline learning. Usage: bcp(x = NULL, breaks = NULL, cpt_model = c("weibull", "gamma", "exponential"), obs_model = c("univariate-gaussian", "multivariate-gaussian", "multinomial", "poisson", "exponential", "gamma","linear"), obs_prior = list(), cpt_prior = list(), shape = NULL, scale = NULL,) Arguments: x: numeric matrix, or an object that can be coerced to a matrix. Observation sequence, each row of x is a TRANSPOSE of an observation vector. breaks: if 'x' is an rbind of multiple set of observations, 'breaks' will be the ending row number of each sets. For example if 'x' is an rbind of 3 days time series with 5,9 and 3 observations each day. then 'breaks' will be c(5,14,17). When 'breaks' = NULL, the model will assume all observations came from only dataset. Default NULL.cpt_model: the segment duration distribution to be used, must be one of "weibull","gamma" and "exponential" obs_model: the observation distribution to be used, must be one of "univariate-gaussian","multivariate-gaussian","multinomial","poisson","exponential","gamma" and "linear". obs_prior: hyper parameters for the observation distribution. See Examples for details. cpt_prior: hyper parameters for the segment duration distribution. See Examples for details. shape, scale: the initial shape and scale parameter for the segment duration distribution. No need to specify, default NULL. Value: returns an object of class 'bcp'. This object will be further used in the learning and offline smoothing processes. |

| bcpEM | Get MAP estimates of the segment duration with (generalized) EM algorithm. Usage: bcpEM(bcpObj, maxit = 100, deps = 1e-04, nstart = 10L) Arguments: bcpObj: and object created by bcp(). maxit: number of maximum EM iterations. deps: learning precision, the learning stops when the difference between the most recent two observed data likelihoods is smaller than 'deps'. nstart: number of random restarts, set 'nstart' bigger to avoid local optimal, but will cost more time. Value: The MAP estimates of shape and scale parameters will be stored in bcpObj$MAP. |

| bcpMCMC | Sample from the posterior distribution of the segment durations with MCMC. Usage: bcpMCMC(bcpObj, burnin = 100, nSample = 5000) Arguments: bcpObj: an object created by bcp(). burnin: number of burn-in samples. nSamples: number of samples to draw after burn-in. Value: The posterior samples of shape and scale will be stored in bcpObj$postSamples. |

| bcpo | Create a Bayesian change point object for online inference. Usage: bcpo(shape = NULL, scale = NULL, cpt_model = c("weibull", "gamma", "exponential"), obs_model = c("univariate-gaussian", "multivariate-gaussian", "multinomial", "poisson", "exponential", "gamma", "linear"), obs_prior = list(), cpt_prior = list(), l = 0) Arguments: shape, scale: the shape and scale of the segment duration distribution. shape and scale can be learned from bcpEM() or bcpMCMC(), or be specified manually. cpt_model, obs_model, obs_prior, cpt_prior: same as the ones defined in bcp(). l: inference lag, bcpOnline will perform online filtering when l=0, fixed-lag smoothing when l>0. Value: returns an object of class 'bcpo'. This object will be further used in the bcpOnline() function for online filtering and fixed-lag smoothing. |

| bcpOnline | Bayesian change point online filtering and fixed-lag smoothing. Usage: bcpOnline(bcpoObj, newObs) Arguments: bcpoObj: an object created by bcpo(). newObs: new observations matrix, or an object that can be coerced to a matrix. Value: The filtered/fixed-lag smoothed change point probability will be attached to bcpoObj$pCPT;The change point indicator of each time point will be attached to bcpoObj$isCPT |

| priorNormal | Pick an weekly informed Normal-Inverse-Wishart prior for Gaussian distributions empirically. Usage: priorNormal(x) Arguments: x: numeric matrix, or an object that can be coerced to a matrix. Value: A list of length 4, representing the NIW parameters 'm', 'k', 'v' and 'S' respectively. |

Example 1: Learn segment duration distribution with EM algorithm(exact inference), then use the learned parameters to perform online change point detection.

Say we have observed a time series data in 10 days, there are 200, 300, 220, 250, 190, 290, 310, 320, 230, 220, 220, 240, 310, 230 and 242 observations in each day. We want to learn the segment duration distribution from this 10 series.

After learning the segment duration distribution, we want to apply the learned model to some new data for online detection.

Step 1: Generate some synthetic data, for demo purpose:

## generate historical data 'x', x is normally distributed, the segment duration is Weibull with shape=2 and scale = 50

set.seed(2)

setSizes <- c(200,300,220,250,190,290,310,320,230,220,220,240,310,230,242) #10 days of data

BREAKS <- cumsum(setSizes)

x <- unlist(sapply(setSizes,function(n){

head(unlist(sapply(round(rweibull(round(n/2),shape = 2,scale = 50)),function(l){rnorm(l,mean = rnorm(1,mean = 0,sd = 40),sd = rgamma(1,shape = 10,rate = 3))})),n)

}))

## generate new data 'xnew', with the same distributions as 'x'

set.seed(3)

newx <- unlist(sapply(round(rweibull(10,shape = 2,scale = 50)),function(l){

rnorm(l,mean = rnorm(1,mean = 0,sd = 40),sd = rgamma(1,shape = 10,rate = 3))

})) Step 2: Offline learning from historical data:

## use univariate-Gaussian as the observation model, Weibull as the segment duration model.

obs_model <- "univariate-gaussian"

cpt_model <- "weibull"

## hyper parameters of univariate-Gaussian is calculated imperically using priorNormal(x),

## hyper parameters of Weibull is assumed uniform(start=1,end=10) for shape, and gamma(shape=1,scale=100) for scale. In this model we assume the Weibull parameters are marginally independent of each other.

obs_prior <- priorNormal(x)

cpt_prior = list(start=1,end=10,shape=1,scale=100)

## for learning purpose. Create an 'bcp' object named "bcpObj"

bcpObj <- bcp(x,breaks = BREAKS,cpt_model = cpt_model,obs_model = obs_model,obs_prior = obs_prior,cpt_prior = cpt_prior)

## Learning Weibull(shape,scale) with generalized EM algorithm, random restart 2 times to avoid local optimal

bcpEM(bcpObj,nstart = 2) Step 3: Use the MAP estimates we just learned to perform online filtering on new observations:

## perfom online filtering, by setting l=0 in bcpo()

bcpoObj1 <- bcpo(shape = bcpObj$MAP[1],scale = bcpObj$MAP[2],cpt_model = cpt_model,obs_model = obs_model,obs_prior = obs_prior,cpt_prior = cpt_prior,l=0)

## use a for loop to mimic the arrival of data stream

for(i in seq_along(newx)) bcpOnline(bcpoObj=bcpoObj1,newObs=newx[i]) Step 4: Use the MAP estimates we just learned to perform online fixed-lag smoothing on new observations:

## perfom online fixed-lag smoothing(by setting l>0, in this case let's use l=10 and l=40)

bcpoObj2 <- bcpo(shape = bcpObj$MAP[1],scale = bcpObj$MAP[2],cpt_model = cpt_model,obs_model = obs_model,obs_prior = obs_prior,cpt_prior = cpt_prior,l=10L)

bcpoObj3 <- bcpo(shape = bcpObj$MAP[1],scale = bcpObj$MAP[2],cpt_model = cpt_model,obs_model = obs_model,obs_prior = obs_prior,cpt_prior = cpt_prior,l=40L)

## use a for loop to mimic the arrival of data stream

for(i in seq_along(newx)){

bcpOnline(bcpoObj=bcpoObj2,newObs=newx[i])

bcpOnline(bcpoObj=bcpoObj3,newObs=newx[i])

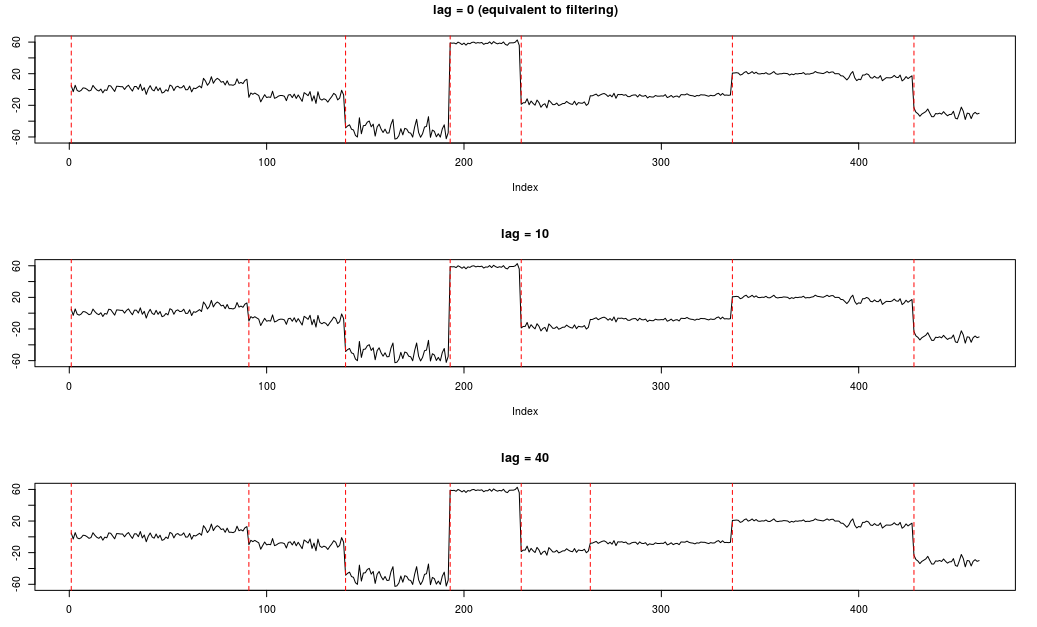

} Step 5: Compare the filtered and fixed-lag smoothed results:

par(mfcol = c(3,1))

plot(newx,type="l",main = "lag = 0 (equivalent to filtering)")

abline(v=which(bcpoObj1$isCPT),lty=2,col="red")

plot(newx,type="l",main = "lag = 10")

abline(v=which(bcpoObj2$isCPT),lty=2,col="red")

plot(newx,type="l",main = "lag = 40")

abline(v=which(bcpoObj3$isCPT),lty=2,col="red") Example 2: Learn with MCMC, then perform online inference.

in development ...

in development ...

Do whatever you want with the files in this repository, under the condition that you obey the licenses of the included packages/softwares/publications.

Footnotes

-

Adams, Ryan Prescott, and David JC MacKay. "Bayesian online changepoint detection." arXiv preprint arXiv:0710.3742 (2007). ↩