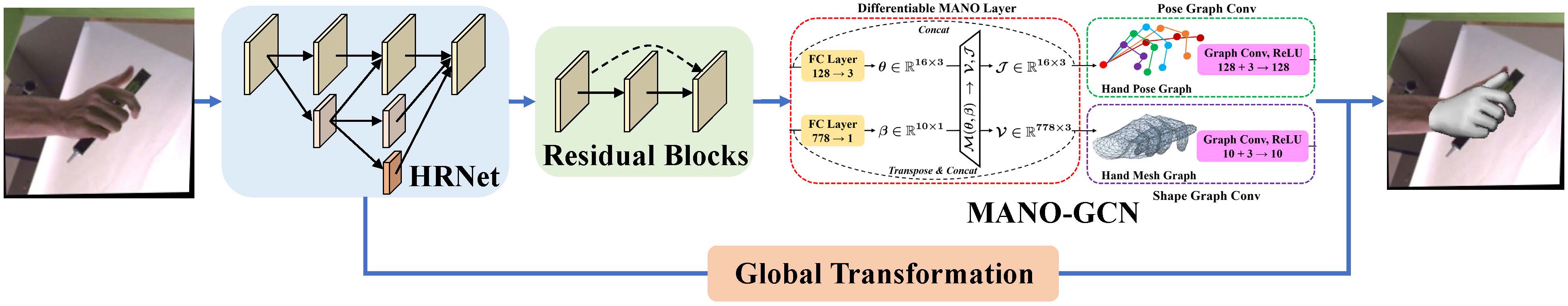

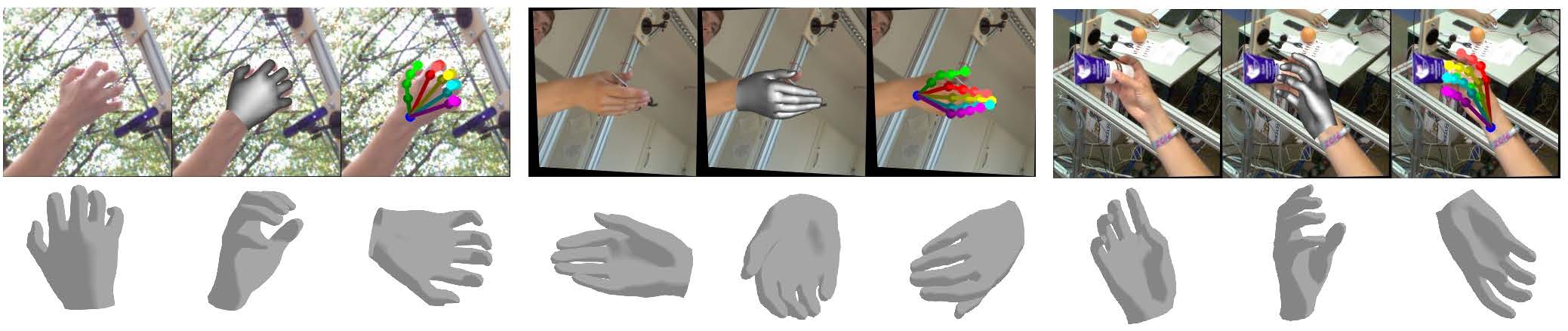

This is the implementation of our paper: Capturing Implicit Spatial Cues for Monocular 3D Hand Reconstruction (ICME 2021 Oral).

Installation is simple: install PyTorch and a few other Python libraries.

# 0. (Optional) We highly recommend that you install in a clean conda environment:

Install Anaconda3 from https://anaconda.org/

```bash
conda create -n manogcn python=3.8
conda activate manogcn
```

# 1. Install PyTorch:

Please follow the instructions on the official website: https://pytorch.org/

For example (CUDA 11.1):

```bash
pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html
```
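To confirm that the installation worked, here is a minimal sketch of a dependency check. The helper name `check_py_modules` and the `PYTHON` override variable are hypothetical and not part of this repo; the helper just tries to import each named module with the active interpreter (`python3` by default) and reports the ones that fail.

```bash
# Hypothetical helper (not part of this repo): try to import each named Python
# module with the active interpreter and report the ones that cannot be imported.
# Returns 0 if every module imports, 1 otherwise.
check_py_modules() {
    py="${PYTHON:-python3}"   # set PYTHON=python to use a different interpreter
    missing=""
    for mod in "$@"; do
        if ! "$py" -c "import $mod" >/dev/null 2>&1; then
            missing="$missing $mod"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing Python modules:$missing"
        return 1
    fi
    echo "all modules found: $*"
    return 0
}

# Example: check PyTorch and torchvision, then report whether CUDA is visible.
if check_py_modules torch torchvision; then
    "${PYTHON:-python3}" -c "import torch; print('torch', torch.__version__, '| CUDA available:', torch.cuda.is_available())"
fi
```

The same helper can check the libraries from step 2, e.g. `check_py_modules cv2 tqdm yacs scipy` (note that `opencv-python` is imported as `cv2`).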

# 2. Install some required python libraries:

```bash
pip install opencv-python tqdm yacs scipy
```

# 3. Prepare the datasets:

We support the FreiHAND and HO3D datasets. Please download them and create a softlink, for example:

```bash
ln -s ~/data/freihand/ datasets/
```

# 4. Training and inference:

We provide scripts for training and inference; please see `scripts/train.sh` and `scripts/eval.sh`. For example:

```bash
config_file="configs/manogcn_1x_freihand.yaml"
gpus=4,5
gpun=2

# ------------------------ need not change -----------------------------------
CUDA_VISIBLE_DEVICES=$gpus python -m torch.distributed.run --standalone --nnodes=1 --nproc_per_node=$gpun \
    train_net.py --config-file $config_file
```

Before training, you may download the pretrained HRNet model `pose_hrnet_w32_384x288.pth` from https://github.com/leoxiaobin/deep-high-resolution-net.pytorch and put it here.
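In the training script above, `gpun` must equal the number of GPU IDs listed in `gpus`. A minimal POSIX-shell sketch that derives one from the other, assuming `gpus` is a comma-separated list with no spaces; it is a dry run that only prints the launch command instead of executing it:

```bash
config_file="configs/manogcn_1x_freihand.yaml"
gpus=4,5

# Count the comma-separated GPU IDs so that gpun always matches gpus.
gpun=$(printf '%s\n' "$gpus" | tr ',' '\n' | grep -c .)

# Dry run: print the launch command instead of running it.
echo CUDA_VISIBLE_DEVICES="$gpus" python -m torch.distributed.run --standalone --nnodes=1 \
    --nproc_per_node="$gpun" train_net.py --config-file "$config_file"
```

Removing the leading `echo` turns the dry run into an actual launch, so only `gpus` has to be edited when switching GPUs.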

We use PyTorch3D to render the hand mesh. Please install it and see `pytorch3d_plot.py`.

# Citation

```bibtex
@INPROCEEDINGS{manogcn,
  author={Wu, Qi and Chen, Joya and Zhou, Xu and Yao, Zhiming and Yang, Xianjun},
  booktitle={ICME},
  title={Capturing Implicit Spatial Cues for Monocular 3D Hand Reconstruction},
  year={2021},
  pages={1-6}
}
```