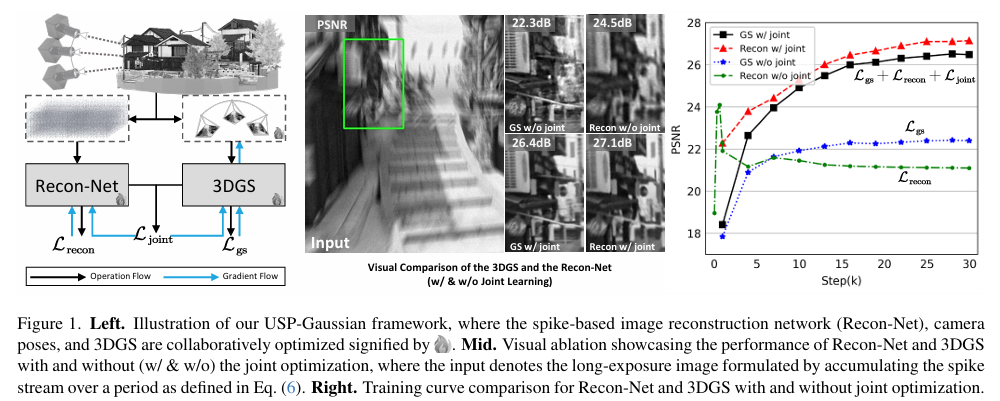

We propose USP-Gaussian, a synergistic optimization framework that unifies spike-based image reconstruction, pose correction, and Gaussian splatting in a single end-to-end pipeline. Our framework leverages the multi-view consistency afforded by 3DGS and the motion-capture capability of the spike camera. It performs joint iterative optimization that seamlessly exchanges information between the spike-to-image network and 3DGS. Experiments on synthetic datasets with accurate poses show that our method surpasses previous approaches by effectively eliminating cascading errors. We also integrate pose optimization to achieve robust 3D reconstruction in real-world scenarios with inaccurate initial poses. In this setting, our method outperforms alternatives by reducing noise and preserving fine texture details.

- Release the synthetic/real-world dataset.

- Release the training code.

- Release the scripts for processing synthetic dataset.

- Release the project page.

- Multi-GPU training script & depth-sequence rendering.

Our environment is the same as that of BAD-Gaussians, which is built on nerfstudio. To install it, run the following commands:

```shell
# (Optional) create a fresh conda env
conda create --name nerfstudio -y "python<3.11"
conda activate nerfstudio

# install dependencies
pip install --upgrade pip setuptools
pip install "torch==2.1.2+cu118" "torchvision==0.16.2+cu118" --extra-index-url https://download.pytorch.org/whl/cu118
conda install -c "nvidia/label/cuda-11.8.0" cuda-toolkit
pip install ninja git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

# install nerfstudio!
pip install nerfstudio==1.0.3
```
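After installation, you can confirm that the pinned versions ended up in the active environment. The sketch below is a hypothetical helper; `check_environment` and `EXPECTED_PINS` are not part of this repository. It reads installed versions with the standard-library `importlib.metadata` and compares them against the pins used in the commands above. It does not check CUDA availability or the tiny-cuda-nn build.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Callable, Dict, Optional, Tuple

# Version pins copied from the installation commands above.
EXPECTED_PINS: Dict[str, str] = {
    "torch": "2.1.2+cu118",
    "torchvision": "0.16.2+cu118",
    "nerfstudio": "1.0.3",
}


def check_environment(
    pins: Dict[str, str] = EXPECTED_PINS,
    lookup: Callable[[str], str] = version,
) -> Dict[str, Tuple[Optional[str], bool]]:
    """Hypothetical helper: map each package to (installed version or None, matches pin)."""
    report: Dict[str, Tuple[Optional[str], bool]] = {}
    for name, expected in pins.items():
        try:
            installed: Optional[str] = lookup(name)
        except PackageNotFoundError:
            installed = None  # package is not installed in this environment
        report[name] = (installed, installed == expected)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_environment().items():
        status = "OK" if ok else f"expected {EXPECTED_PINS[name]}"
        print(f"{name:12s} {installed or 'missing':16s} {status}")
```

The `lookup` parameter exists only so the comparison logic can be exercised without the real packages installed.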

The preprocessed synthetic and real-world datasets are available at the download link.

The project is organized as follows:

```text
<project root>
├── bad_gaussians
├── data
│   ├── real_world
│   └── synthetic
├── imgs
├── train.py
└── render.py
```
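Before training, you may want to check that the downloaded datasets were unpacked into the expected folders. The following is a minimal sketch that assumes the layout shown above. `missing_paths` and `EXPECTED_LAYOUT` are hypothetical names introduced here and are not part of the codebase.

```python
from pathlib import Path
from typing import List, Union

# Entries (relative to the project root) expected by the layout shown above.
EXPECTED_LAYOUT: List[str] = [
    "bad_gaussians",
    "data/real_world",
    "data/synthetic",
    "imgs",
    "train.py",
    "render.py",
]


def missing_paths(root: Union[str, Path], expected: List[str] = EXPECTED_LAYOUT) -> List[str]:
    """Hypothetical helper: return the expected entries that do not exist under `root`."""
    base = Path(root)
    return [entry for entry in expected if not (base / entry).exists()]


if __name__ == "__main__":
    missing = missing_paths(".")
    if missing:
        print("Missing from project root:", ", ".join(missing))
    else:
        print("Project layout looks complete.")
```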

For a step-by-step guide to synthesizing the full synthetic dataset from scratch, and for details of the pose estimation method, please refer to the Dataset file. For an explanation of the dataset inputs, see #2 (comment).

This project does not use `blur_data`. That folder is included only for convenient visualization of the input data. `sharp_data` contains the provided ground-truth images, which are used to compute image-restoration and 3D-reconstruction metrics such as PSNR, SSIM, and LPIPS. Real-world datasets have no corresponding sharp images. For those scenes, you can place TFP (texture-from-playback) reconstructed images in `sharp_data` so that the code runs. However, the resulting metric values will not be accurate.
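For real-world scenes, TFP images like those placed in `sharp_data` can be produced by averaging the binary spike stream over a short temporal window. This works because a pixel's firing rate is roughly proportional to its light intensity. The sketch below is a generic TFP illustration, not the exact preprocessing used by the authors. It assumes the spikes are already loaded as a NumPy array of shape `(T, H, W)`; loading the dataset's raw spike files and saving images are not shown. The window size is an assumption you would tune. A standard PSNR function is included to make the caveat above concrete.

```python
import numpy as np


def tfp_reconstruct(spikes: np.ndarray, center: int, window: int) -> np.ndarray:
    """Texture-from-playback: average the spike stream over a temporal window.

    spikes: binary array of shape (T, H, W); returns a uint8 image of shape (H, W).
    """
    if spikes.ndim != 3:
        raise ValueError("expected spikes with shape (T, H, W)")
    if window < 1 or not 0 <= center < spikes.shape[0]:
        raise ValueError("window must be >= 1 and center must index a frame")
    start = max(center - window // 2, 0)
    end = min(start + window, spikes.shape[0])  # window is truncated at the end of the stream
    rate = spikes[start:end].astype(np.float64).mean(axis=0)  # firing rate in [0, 1]
    return np.clip(rate * 255.0, 0, 255).round().astype(np.uint8)


def psnr(pred: np.ndarray, target: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    mse = np.mean((pred.astype(np.float64) - target.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    spikes = (rng.random((64, 4, 4)) < 0.3).astype(np.uint8)  # synthetic stand-in for real spikes
    img = tfp_reconstruct(spikes, center=32, window=32)
    print(img.shape, img.dtype, psnr(img, img))
```

A TFP image is itself an estimate derived from the same spikes. A PSNR computed against it therefore measures agreement with TFP rather than with the true scene. For this reason, real-world metrics should not be read as ground-truth accuracy.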

- To train on the spike-deblur-nerf scene `wine`, run:

  ```shell
  CUDA_VISIBLE_DEVICES=0 python train.py --seed_set 425 --net_lr 1e-3 \
    --use_3dgs --use_spike --use_flip --use_multi_net --use_multi_reblur \
    --data_name wine --exp_name joint_optimization --data_path data/synthetic/wine
  ```

- To train on the real-world scene `sheep`, run:

  ```shell
  CUDA_VISIBLE_DEVICES=0 python train.py --seed_set 425 --net_lr 1e-3 \
    --use_3dgs --use_spike --use_flip --use_multi_net --use_multi_reblur --use_real \
    --data_name sheep --exp_name joint_optimization --data_path data/real_world/sheep
  ```
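The synthetic and real-world training commands differ only in the `--use_real` flag and in the scene name and path. The hypothetical helper below makes that explicit by assembling the argument list from a scene name, which can be handy when looping over several scenes. `build_train_cmd` and `launch` are not part of the repository. The flag values are copied from the commands above, and everything else is an assumption.

```python
import os
import shlex
import subprocess
from typing import List

# Flags shared by both training commands above (values copied verbatim).
COMMON_FLAGS: List[str] = [
    "--seed_set", "425",
    "--net_lr", "1e-3",
    "--use_3dgs", "--use_spike", "--use_flip",
    "--use_multi_net", "--use_multi_reblur",
]


def build_train_cmd(scene: str, real_world: bool,
                    exp_name: str = "joint_optimization") -> List[str]:
    """Hypothetical helper: build the train.py argument list for one scene."""
    subset = "real_world" if real_world else "synthetic"
    cmd = ["python", "train.py", *COMMON_FLAGS]
    if real_world:
        cmd.append("--use_real")  # the only extra flag for real-world captures
    cmd += ["--data_name", scene, "--exp_name", exp_name,
            "--data_path", f"data/{subset}/{scene}"]
    return cmd


def launch(cmd: List[str], gpu: str = "0") -> None:
    """Run a command on one GPU, mirroring the CUDA_VISIBLE_DEVICES=0 prefix."""
    subprocess.run(cmd, env={**os.environ, "CUDA_VISIBLE_DEVICES": gpu}, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them; call launch(...) to actually train.
    for scene, real in [("wine", False), ("sheep", True)]:
        print("CUDA_VISIBLE_DEVICES=0", shlex.join(build_train_cmd(scene, real)))
```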

To render the 3D scene along the input camera trajectory, run:

```shell
CUDA_VISIBLE_DEVICES=0 python render.py interpolate \
  --load-config outputs/sheep/bad-gaussians/<exp_date_time>/config.yml \
  --pose-source train \
  --frame-rate 30 \
  --output-format video \
  --interpolation-steps 5 \
  --output-path renders/sheep.mp4
```
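The `<exp_date_time>` placeholder refers to the run directory created during training. Below is a minimal sketch for picking the most recent run. It assumes run directories follow the `outputs/<scene>/bad-gaussians/<timestamp>/config.yml` pattern shown above. It also assumes the timestamps sort chronologically as strings, as with nerfstudio's default `YYYY-MM-DD_HHMMSS` naming. `latest_config` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path
from typing import Optional, Union


def latest_config(outputs: Union[str, Path], scene: str,
                  method: str = "bad-gaussians") -> Optional[Path]:
    """Hypothetical helper: return the newest <outputs>/<scene>/<method>/*/config.yml."""
    run_root = Path(outputs) / scene / method
    if not run_root.is_dir():
        return None
    # Assumes run directories are named with a chronologically sortable timestamp.
    configs = sorted(run_root.glob("*/config.yml"), key=lambda p: p.parent.name)
    return configs[-1] if configs else None


if __name__ == "__main__":
    cfg = latest_config("outputs", "sheep")
    print(f"--load-config {cfg}" if cfg else "no training run found for 'sheep'")
```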

Our code is built on BAD-Gaussians. We thank Lingzhe Zhao for his detailed help.

If you find our work useful in your research, please cite:

```bibtex
@article{chen2024usp,
  title={USP-Gaussian: Unifying Spike-based Image Reconstruction, Pose Correction and Gaussian Splatting},
  author={Chen, Kang and Zhang, Jiyuan and Hao, Zecheng and Zheng, Yajing and Huang, Tiejun and Yu, Zhaofei},
  journal={arXiv preprint arXiv:2411.10504},
  year={2024}
}
```