This repository contains a PyTorch implementation of SalGAN: Visual Saliency Prediction with Generative Adversarial Networks by Junting Pan et al. The model learns to predict a saliency map from an input image.

The code is mainly based on batsa003/salgan. Many thanks for sharing it.

TODO: We train the model on SALICON for 240 epochs and then finetune it on CAT2000 for another 240 epochs. Example results are shown below.

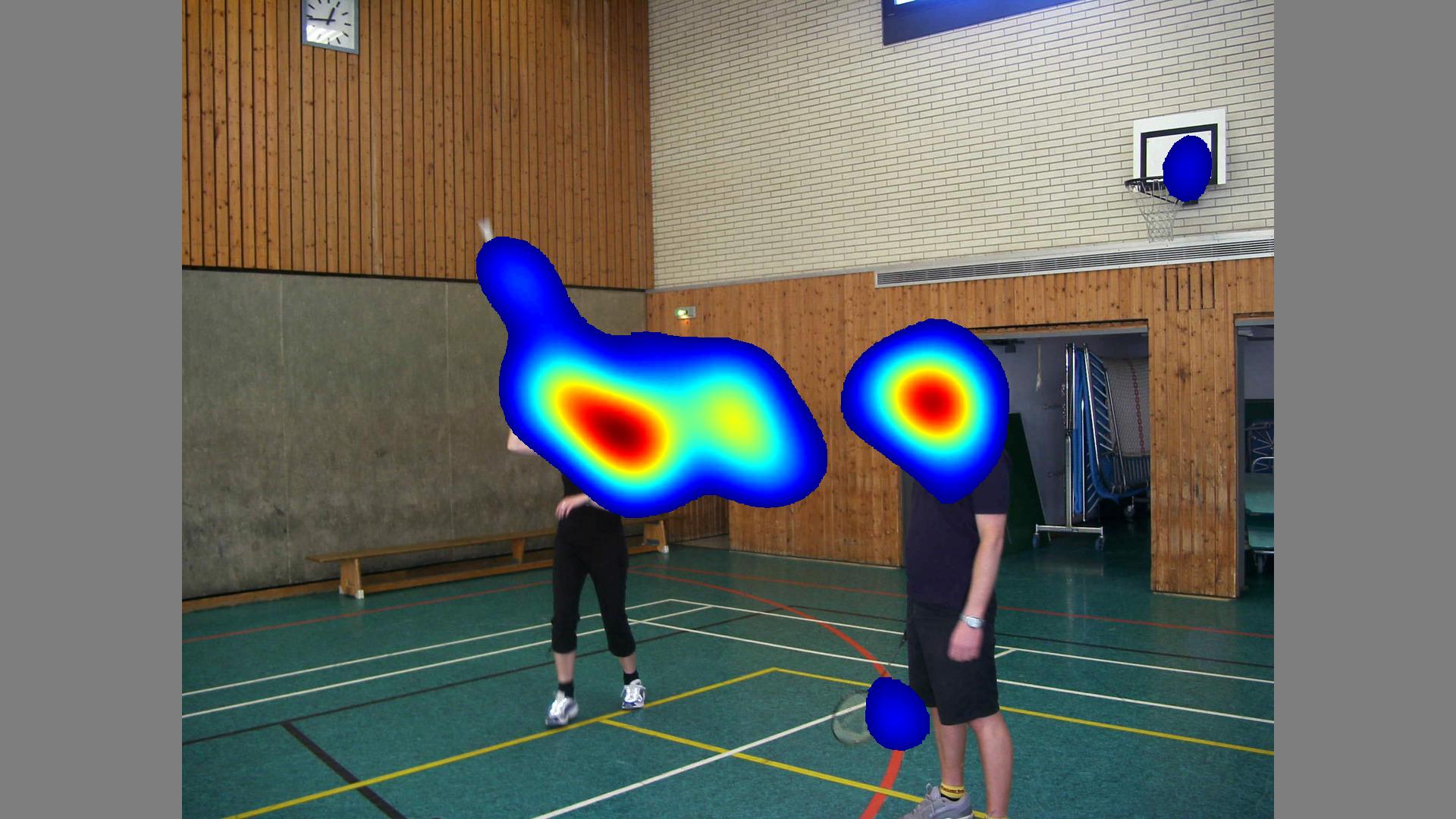

| Test image | Prediction | Ground truth |
|---|---|---|

After finetuning, the model achieves a CC of 0.82 and a KL divergence of 0.37 on CAT2000.
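For reference, here is a minimal pure-Python sketch of the two metrics, following the definitions commonly used by the MIT saliency benchmark: CC is the Pearson correlation between the predicted and ground-truth maps, and KL is KL(ground truth ‖ prediction) after normalizing both maps to sum to 1. The helper names and the `EPS` constant are our own assumptions; we have not verified that the evaluation code behind the numbers above uses exactly these formulas.

```python
import math

EPS = 1e-7  # assumption: small constant guarding against division by zero and log(0)


def _flatten(m):
    """Flatten a 2D map (list of rows) into a single list of values."""
    return [v for row in m for v in row]


def cc(pred, gt):
    """Pearson linear correlation coefficient between two saliency maps."""
    p, g = _flatten(pred), _flatten(gt)
    n = len(p)
    mp, mg = sum(p) / n, sum(g) / n
    cov = sum((a - mp) * (b - mg) for a, b in zip(p, g))
    sp = math.sqrt(sum((a - mp) ** 2 for a in p))
    sg = math.sqrt(sum((b - mg) ** 2 for b in g))
    return cov / (sp * sg + EPS)


def kl(pred, gt):
    """KL(gt || pred) after turning both maps into probability distributions."""
    p, g = _flatten(pred), _flatten(gt)
    sp, sg = sum(p), sum(g)
    p = [a / (sp + EPS) for a in p]
    g = [b / (sg + EPS) for b in g]
    # Zero-valued ground-truth pixels contribute nothing to the sum.
    return sum(b * math.log(EPS + b / (a + EPS)) for a, b in zip(p, g))
```

Higher CC is better (1 means perfectly linearly correlated maps), while lower KL is better (0 means identical distributions).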

TODO: To predict saliency maps, run the following from the src/ directory:

```
python predict.py
```

The code requires PyTorch.

Before you train the model, preprocess the dataset by running

```
python preprocess.py
```

to resize the images and their ground-truth saliency maps to 256x192.
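To make the target size concrete, below is a minimal pure-Python bilinear resize of a single-channel map. We assume 256x192 means 256 pixels wide by 192 pixels tall (an array of shape (192, 256)); we have not checked which library or interpolation method preprocess.py actually uses, and `resize_bilinear` is a hypothetical helper written only for illustration.

```python
def resize_bilinear(img, out_w=256, out_h=192):
    """Resize a 2D grayscale map (list of rows) with bilinear interpolation.

    Assumption: out_w x out_h = 256 x 192 means width x height.
    Uses the pixel-centre convention and clamps sample points to the image border.
    """
    in_h, in_w = len(img), len(img[0])
    sy, sx = in_h / out_h, in_w / out_w
    out = []
    for y in range(out_h):
        # Map the output pixel centre back into input coordinates.
        fy = min(max((y + 0.5) * sy - 0.5, 0.0), in_h - 1)
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        wy = fy - y0
        row = []
        for x in range(out_w):
            fx = min(max((x + 0.5) * sx - 0.5, 0.0), in_w - 1)
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            wx = fx - x0
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out
```

In practice you would use an image library instead, e.g. PIL's `Image.resize((256, 192))` or OpenCV's `cv2.resize(img, (256, 192))`; both take the target size as (width, height), which is easy to confuse with NumPy's (height, width) array shape.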

To train the model, run main.py:

```
python main.py
```
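As a rough illustration of the standard (non-WGAN) objective, here is a plain-Python sketch of the SalGAN losses as we understand them from the paper: the generator minimizes a pixel-wise binary cross-entropy (BCE) content term weighted by α plus an adversarial BCE term that pushes the discriminator's output on (image, predicted map) towards 1, while the discriminator labels real pairs 1 and generated pairs 0. The function names and the default `alpha=0.05` are our own assumptions, not values taken from main.py; real training computes these on tensors, e.g. with `torch.nn.BCELoss`.

```python
import math

EPS = 1e-7  # assumption: clamp probabilities away from 0 and 1 before taking logs


def bce(pred, target):
    """Mean binary cross-entropy between two flat lists of values in [0, 1]."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, EPS), 1.0 - EPS)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(pred)


def generator_loss(pred_map, gt_map, d_fake, alpha=0.05):
    """alpha * content BCE + adversarial BCE that pushes D(image, pred) towards 1.

    d_fake: discriminator's probability that (image, predicted map) is real.
    alpha=0.05 is a placeholder weight (hypothetical, not read from main.py).
    """
    content = bce(pred_map, gt_map)
    adversarial = bce([d_fake], [1.0])
    return alpha * content + adversarial


def discriminator_loss(d_real, d_fake):
    """BCE for the discriminator: real pairs labelled 1, generated pairs labelled 0."""
    return bce([d_real], [1.0]) + bce([d_fake], [0.0])
```

A small α keeps the content term from dominating while still anchoring the generator to the ground-truth maps.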

TODO: We have also added a WGAN-GP training mode to main.py. However, although the training loss looks reasonable, this mode only produces blank saliency maps, so there are still unresolved bugs in WGAN-GP mode.
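For reference when debugging, this is the WGAN-GP critic objective from Gulrajani et al. (2017), where $x$ is a real sample, $\tilde{x}$ a generated one, and $\lambda$ the penalty weight (10 in the paper). For SalGAN, $x$ and $\tilde{x}$ would presumably be the (image, ground-truth map) and (image, predicted map) pairs; we have not checked how main.py builds them.

```math
L_D = \mathbb{E}_{\tilde{x}\sim P_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x\sim P_r}\big[D(x)\big] + \lambda\,\mathbb{E}_{\hat{x}\sim P_{\hat{x}}}\Big[\big(\lVert\nabla_{\hat{x}} D(\hat{x})\rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1-\epsilon)\tilde{x},\quad \epsilon\sim U[0,1]
```

The generator minimizes $L_G = -\mathbb{E}_{\tilde{x}\sim P_g}[D(\tilde{x})]$. Two details from the paper that are easy to get wrong: the critic outputs an unbounded score (no sigmoid), and it should not use batch normalization, since the penalty is defined per sample (the paper suggests layer normalization instead).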

Refer to main.py for more information.

We used the SALICON dataset for training.

If you want to test on a new dataset, we suggest finetuning the model on that dataset first.

https://imatge-upc.github.io/saliency-salgan-2017/