Please move to https://github.com/pkunlp-icler/FastV for the latest updates.

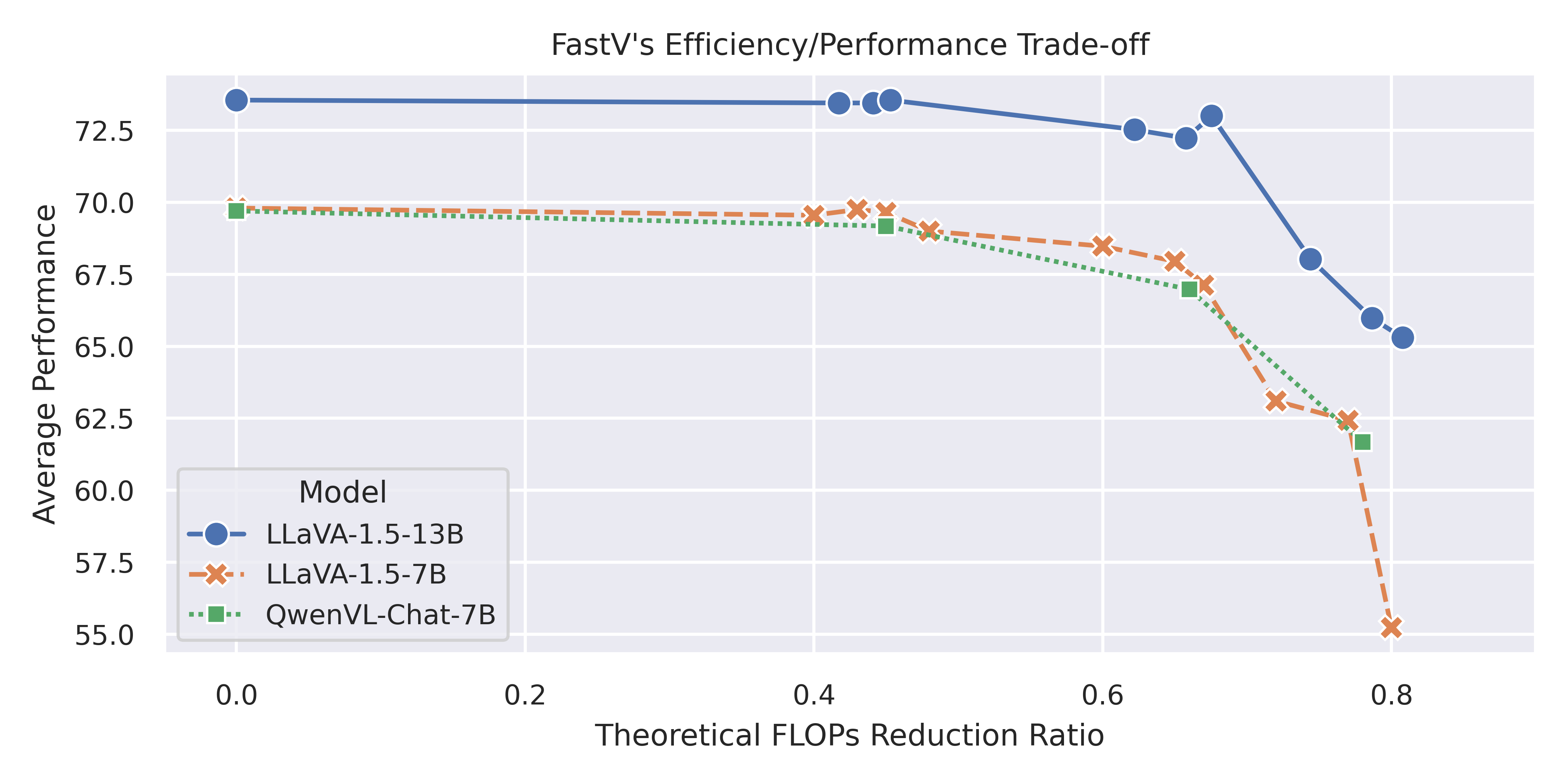

FastV is a plug-and-play inference acceleration method for large vision-language models that rely on visual tokens. It can reduce theoretical FLOPs by 45% without harming performance by pruning redundant visual tokens in deep layers.
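To make the FLOPs claim concrete, here is a rough back-of-the-envelope sketch. It assumes the common per-layer estimate `4nd² + 2n²d + 2ndm` (n tokens, hidden size d, FFN intermediate size m), and the model dimensions and token counts below are illustrative assumptions for a 7B LLaVA-1.5-style model, not values taken from this repository. `K` is the layer after which pruning starts and `R` is the fraction of image tokens dropped.

```python
def layer_flops(n: int, d: int, m: int) -> int:
    """Approximate FLOPs of one transformer layer processing n tokens."""
    return 4 * n * d**2 + 2 * n**2 * d + 2 * n * d * m


def flops_reduction(n_image, n_text, d, m, num_layers, k, r):
    """Fraction of theoretical FLOPs saved when a fraction r of the
    image tokens is dropped after the first k layers."""
    n_full = n_image + n_text
    n_pruned = n_full - int(n_image * r)  # only image tokens are pruned
    full = num_layers * layer_flops(n_full, d, m)
    pruned = (k * layer_flops(n_full, d, m)
              + (num_layers - k) * layer_flops(n_pruned, d, m))
    return 1 - pruned / full


# Assumed setup: 576 image tokens, 64 text tokens, d=4096, m=11008, 32 layers.
reduction = flops_reduction(n_image=576, n_text=64, d=4096, m=11008,
                            num_layers=32, k=2, r=0.5)
print(f"Estimated FLOPs reduction: {reduction:.1%}")
```

The exact figure depends on how many text tokens accompany the image: the larger the share of image tokens in the sequence, the larger the saving.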

Latest News 🔥

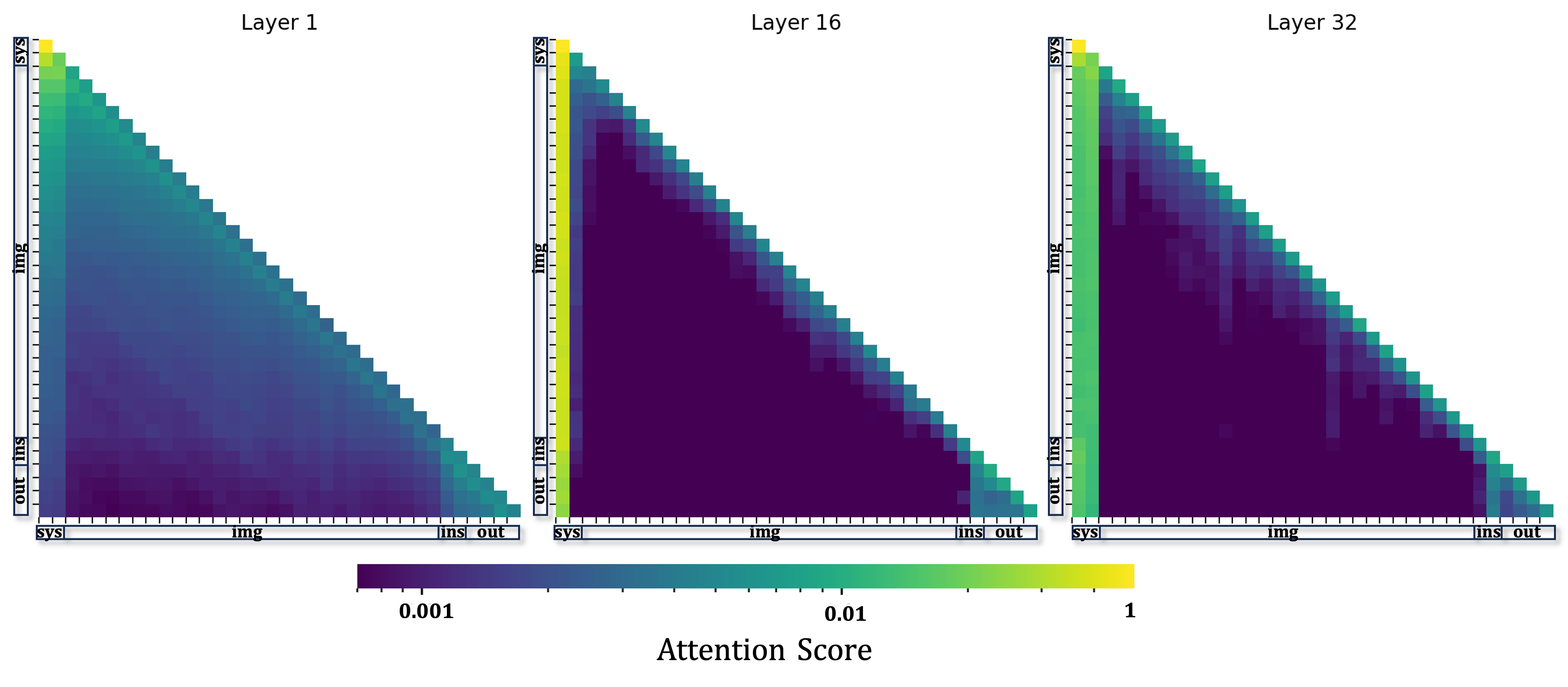

- LVLM Inefficient Visual Attention Visualization Code

- FastV demo inference code

- Gradio visualization demo

- Integrate FastV into an LLM inference framework

Stay tuned!

    conda create -n fastv python=3.10
    conda activate fastv
    cd src
    bash setup.sh

We provide a script (./src/FastV/inference/visualization.sh) to reproduce the visualization result of each LLaVA model layer for a given image and prompt.

    bash ./src/FastV/inference/visualization.sh

or

    python ./src/FastV/inference/plot_inefficient_attention.py \
        --model-path "PATH-to-HF-LLaVA1.5-Checkpoints" \
        --image-path "./src/LLaVA/images/llava_logo.png" \
        --prompt "Describe the image in details." \
        --output-path "./output_example"

The model output and the attention maps for each layer are stored in "./output_example".

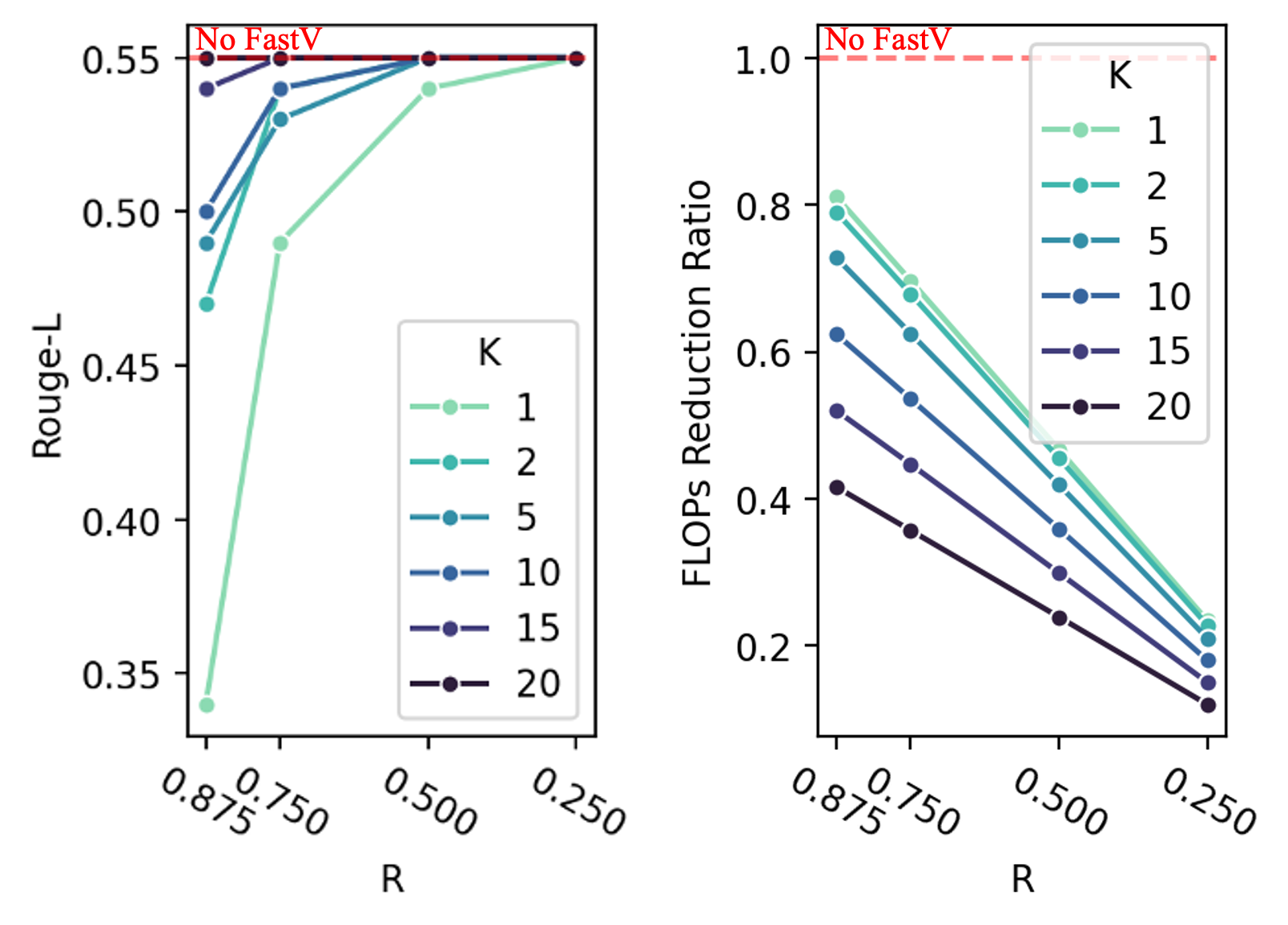

We provide code to reproduce the ablation study on the K and R values, as shown in Figure 7 of the paper. For convenience, this implementation masks out the discarded tokens in deep layers instead of removing them. An in-place token dropping feature will be added in the LLM inference framework section.
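As a minimal sketch of the masking idea (not the repository's actual implementation), the function below ranks image tokens by the attention they receive at layer K and marks the lowest-scoring fraction R as hidden for the deeper layers. The function name `fastv_keep_mask`, its arguments, and the toy scores are all hypothetical and only serve the illustration.

```python
def fastv_keep_mask(attn_received, image_start, image_end, r):
    """Return one boolean per token: True means the token stays visible
    in the layers after K, False means it is masked out.

    attn_received[i] is the average attention token i receives at layer K.
    Only image tokens in [image_start, image_end) can be discarded.
    """
    n_image = image_end - image_start
    n_drop = int(n_image * r)
    # Rank image-token positions from least to most attended.
    ranked = sorted(range(image_start, image_end),
                    key=lambda i: attn_received[i])
    dropped = set(ranked[:n_drop])
    return [i not in dropped for i in range(len(attn_received))]


# Toy sequence: 2 system tokens, 4 image tokens, 2 prompt tokens.
scores = [0.30, 0.20, 0.01, 0.15, 0.02, 0.10, 0.12, 0.10]
mask = fastv_keep_mask(scores, image_start=2, image_end=6, r=0.5)
print(mask)  # [True, True, False, True, False, True, True, True]
```

Masking keeps the sequence length unchanged, which makes it easy to sweep K and R for an ablation, but it does not deliver the speedup that physically removing the tokens would.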

ocrvqa

    bash ./src/FastV/inference/eval/eval_ocrvqa.sh

Citation

    @misc{chen2024image,
        title={An Image is Worth 1/2 Tokens After Layer 2: Plug-and-Play Inference Acceleration for Large Vision-Language Models},
        author={Liang Chen and Haozhe Zhao and Tianyu Liu and Shuai Bai and Junyang Lin and Chang Zhou and Baobao Chang},
        year={2024},
        eprint={2403.06764},
        archivePrefix={arXiv},
        primaryClass={cs.CV}
    }