Azure Spring Apps File Processing Sample

1. Scenario

1.1. Log Files Explanation

-

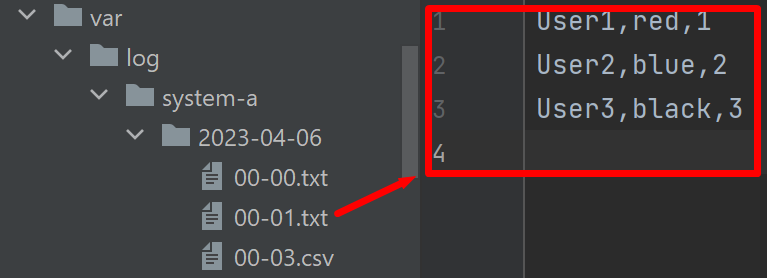

A system generates log files in folders named by date:

/var/log/system-a/${yyyy-MM-dd}. -

The log files are txt files named by hour and minute:

${hh-mm}.txt. -

Each line of the log files will have format like this:

${name,favorite_color,favorite_number}.Here is a picture about folder structure and log file:

1.2. File Processing Requirements

1.2.1. Functional Requirements

- Before the app starts, the number of files to be processed is unknown.

- All files in the specified folder should be processed.

- All files in subfolders should be processed, too.

- Each line should be converted into an Avro object. Here is the Avro schema:

      {
        "namespace": "com.azure.spring.example.file.processing.avro.generated",
        "type": "record",
        "name": "User",
        "fields": [
          { "name": "name", "type": "string" },
          { "name": "favorite_number", "type": [ "int", "null" ] },
          { "name": "favorite_color", "type": [ "string", "null" ] }
        ]
      }

- Send the Avro object to Azure Event Hubs.

-

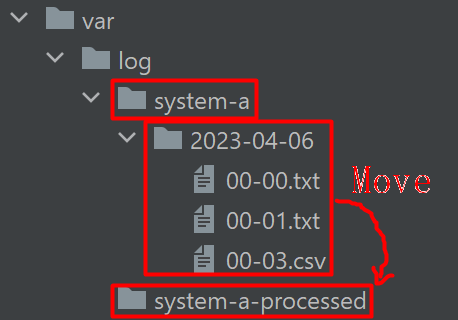

After file processed, move the file to another folder to avoid duplicate processing. Here is an example of move target folder:

/var/log/system-a-processed/${yyyy-MM-dd}Here is a picture about moving log files after processed:
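The file discovery and move requirements above could be sketched with plain JDK file APIs as follows. This is only an illustration under assumptions: the class name `LogFileMover` and its method names are hypothetical, and the sample application itself uses Spring Integration rather than this code.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper, not part of the sample application.
class LogFileMover {

    /** Recursively lists every .txt file under logsDirectory, including subfolders. */
    static List<Path> findLogFiles(Path logsDirectory) throws IOException {
        try (Stream<Path> paths = Files.walk(logsDirectory)) {
            return paths
                    .filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".txt"))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    /** Moves a processed file to the same relative path under processedDirectory. */
    static Path moveToProcessed(Path file, Path logsDirectory, Path processedDirectory)
            throws IOException {
        // Keep the date folder, e.g. 2023-01-01/00-00.txt, in the target location.
        Path relative = logsDirectory.relativize(file);
        Path target = processedDirectory.resolve(relative);
        Files.createDirectories(target.getParent());
        return Files.move(file, target, StandardCopyOption.REPLACE_EXISTING);
    }
}
```

Because the number of files is unknown before startup, a real application would call something like `findLogFiles` repeatedly (polling) rather than once.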

1.2.2. Non-Functional Requirements

-

The system must be robust.

-

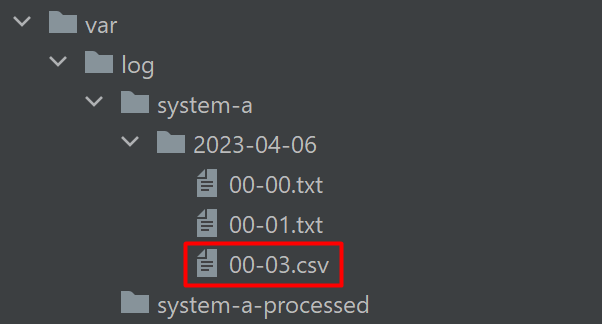

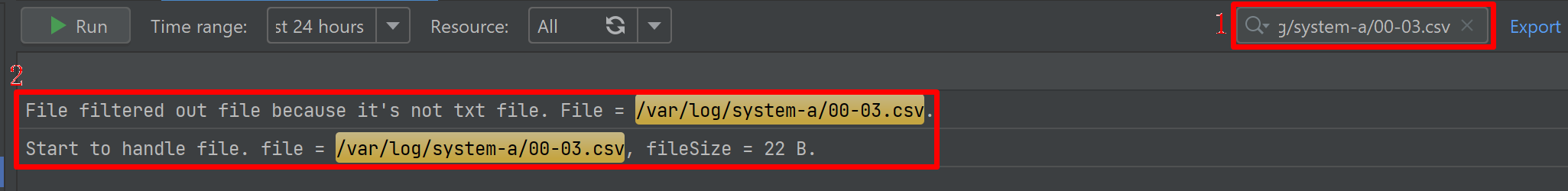

1.1. Handle invalid file. Current application only handle txt files. For other file types like csv, it will be filtered out.

-

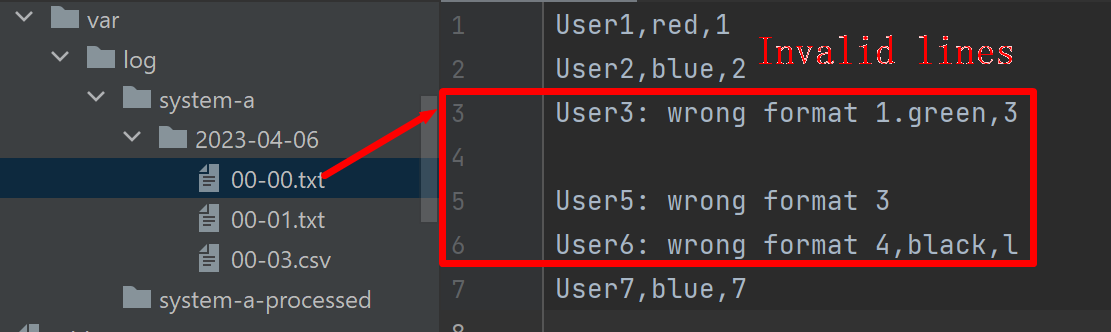

1.2. Handle invalid line. When there is a invalid data line in a file, output a warning log then continue processing.

-

-

Easy to track.

- 2.1. When there is invalid line, the log should contain these information:

- Which file?

- Which line?

- Why this line is invalid?

- What is the string value of this line?

- 2.2. Track each step of a specific file.

- Does this file be added in to processing candidate?

- If the file is filtered out, why it's filtered out?

- How many line does this file have?

- Does each line of this file been converted to avro object and send to Azure Event Hubs successfully?

- 2.1. When there is invalid line, the log should contain these information:

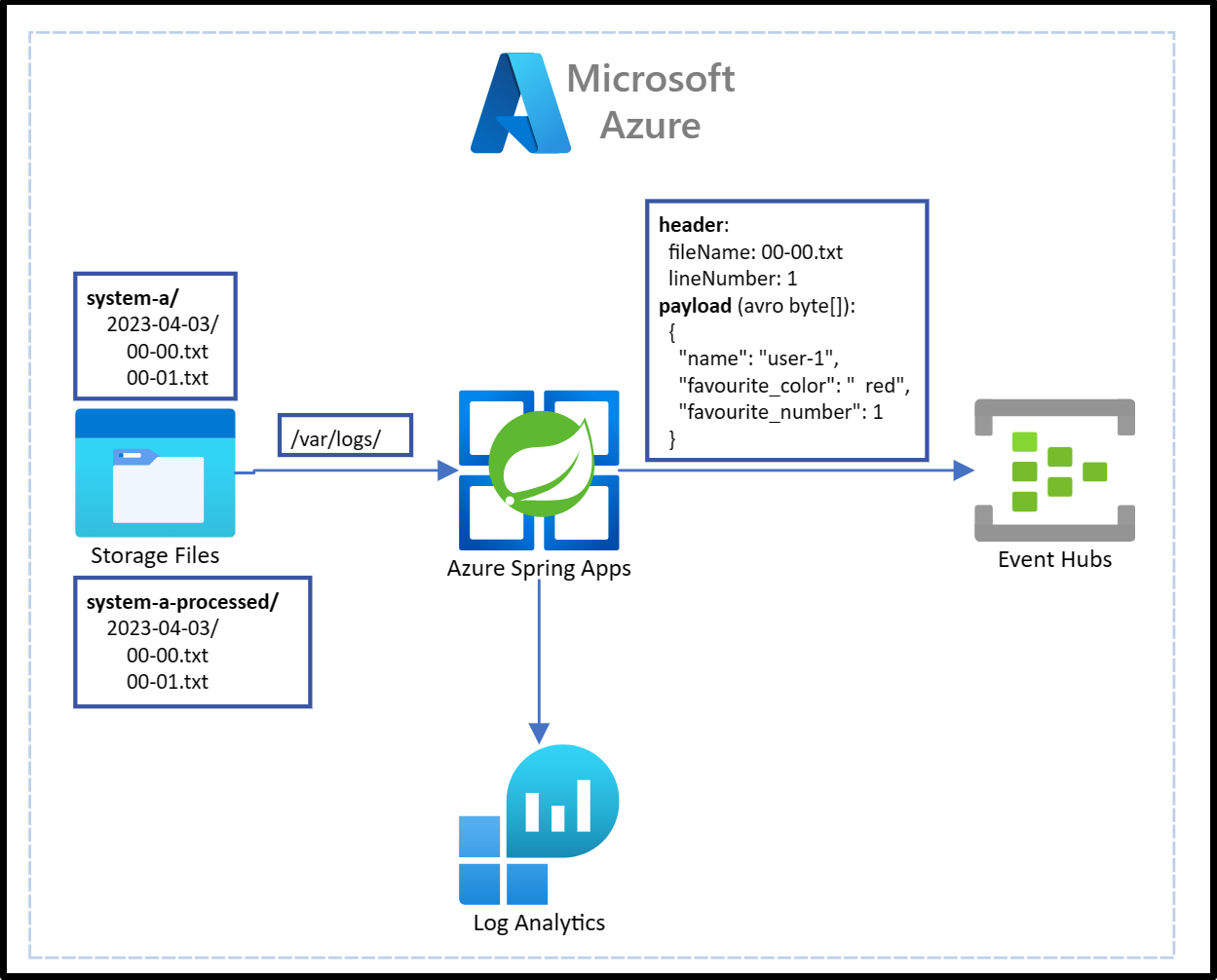
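The invalid-line handling in 1.2 and the logging fields in 2.1 could be sketched like this. It is a minimal sketch under assumptions: `LogLineParser` and the nested `User` class are hypothetical plain-Java stand-ins (the real application uses the Avro-generated `User`), and treating an empty `favorite_color` or `favorite_number` as null is an assumption based on the nullable types in the schema.

```java
import java.util.Optional;
import java.util.logging.Logger;

// Hypothetical parser, not the sample application's actual implementation.
class LogLineParser {

    private static final Logger LOGGER = Logger.getLogger(LogLineParser.class.getName());

    /** Hypothetical plain-Java stand-in for the Avro-generated User class. */
    static final class User {
        final String name;
        final String favoriteColor;   // null when the field is empty (assumption)
        final Integer favoriteNumber; // null when the field is empty (assumption)

        User(String name, String favoriteColor, Integer favoriteNumber) {
            this.name = name;
            this.favoriteColor = favoriteColor;
            this.favoriteNumber = favoriteNumber;
        }
    }

    /** Returns the reason the line is invalid, or null when the line is valid. */
    static String validate(String line) {
        if (line == null || line.trim().isEmpty()) {
            return "line is blank";
        }
        String[] fields = line.split(",", -1);
        if (fields.length != 3) {
            return "expected 3 comma-separated fields but found " + fields.length;
        }
        if (fields[0].trim().isEmpty()) {
            return "name is empty";
        }
        String number = fields[2].trim();
        if (!number.isEmpty()) {
            try {
                Integer.parseInt(number);
            } catch (NumberFormatException e) {
                return "favorite_number is not an integer";
            }
        }
        return null;
    }

    /** Parses one line; on failure logs file, line number, reason, and raw value, then continues. */
    static Optional<User> parse(String file, int lineNumber, String line) {
        String reason = validate(line);
        if (reason != null) {
            LOGGER.warning(String.format(
                    "Convert txt string to User failed. file = %s, lineNumber = %d, reason = %s, line = %s",
                    file, lineNumber, reason, line));
            return Optional.empty();
        }
        String[] fields = line.split(",", -1);
        String color = fields[1].trim();
        String number = fields[2].trim();
        return Optional.of(new User(
                fields[0].trim(),
                color.isEmpty() ? null : color,
                number.isEmpty() ? null : Integer.valueOf(number)));
    }
}
```

Returning an empty `Optional` instead of throwing lets the caller skip the bad line and keep processing the rest of the file, which is what requirement 1.2 asks for.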

1.3. System Diagram

- Azure Spring Apps: The current application runs on Azure Spring Apps.

- Azure Storage Files: Log files are stored in Azure Storage Files.

- Azure Event Hubs: Each valid line in the log files is converted into Avro format and then sent to Azure Event Hubs.

- Log Analytics: When the current application runs in Azure Spring Apps, the logs can be viewed in Log Analytics.

1.4. The Application

This scenario is a classic fit for Enterprise Integration Patterns, so the application is built with Spring Boot and Spring Integration.

2. Run Current Sample on Azure Spring Apps Consumption Plan

2.1. Provision Required Azure Resources

- Provision an Azure Spring Apps instance. Refs: Quickstart: Provision an Azure Spring Apps service instance.

- Create an app in the created Azure Spring Apps instance.

- Create an Azure Event Hub. Refs: Create an event hub using Azure portal.

- Create an Azure Storage account. Refs: Create a storage account.

- Create a file share in the created storage account.

- Mount Azure Storage into Azure Spring Apps at `/var/log/`. Refs: How to enable your own persistent storage in Azure Spring Apps.

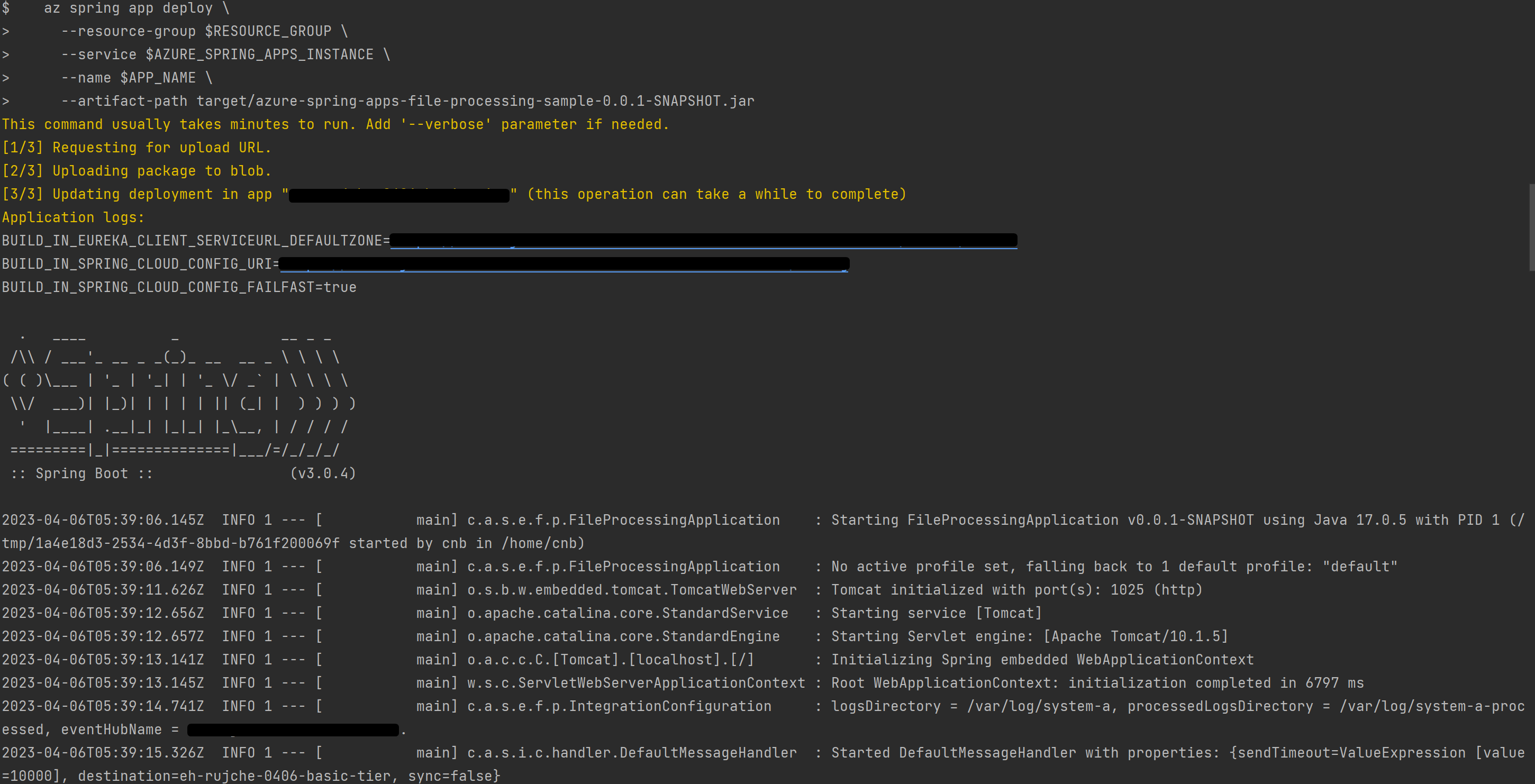

2.2. Deploy Current Sample

- Set these environment variables for the app.

      logs-directory=/var/log/system-a
      processed-logs-directory=/var/log/system-a-processed
      spring.cloud.azure.eventhubs.connection-string=
      spring.cloud.azure.eventhubs.event-hub-name=

- Upload some sample log files into Azure Storage Files. You can use the files in `./test-files/var/log/system-a`.

- Build the package.

      ./mvnw clean package

- Set the necessary environment variables according to the created resources.

      RESOURCE_GROUP=
      AZURE_SPRING_APPS_INSTANCE=
      APP_NAME=

- Deploy the app.

      az spring app deploy \
        --resource-group $RESOURCE_GROUP \
        --service $AZURE_SPRING_APPS_INSTANCE \
        --name $APP_NAME \
        --artifact-path target/azure-spring-apps-file-processing-sample-0.0.1-SNAPSHOT.jar

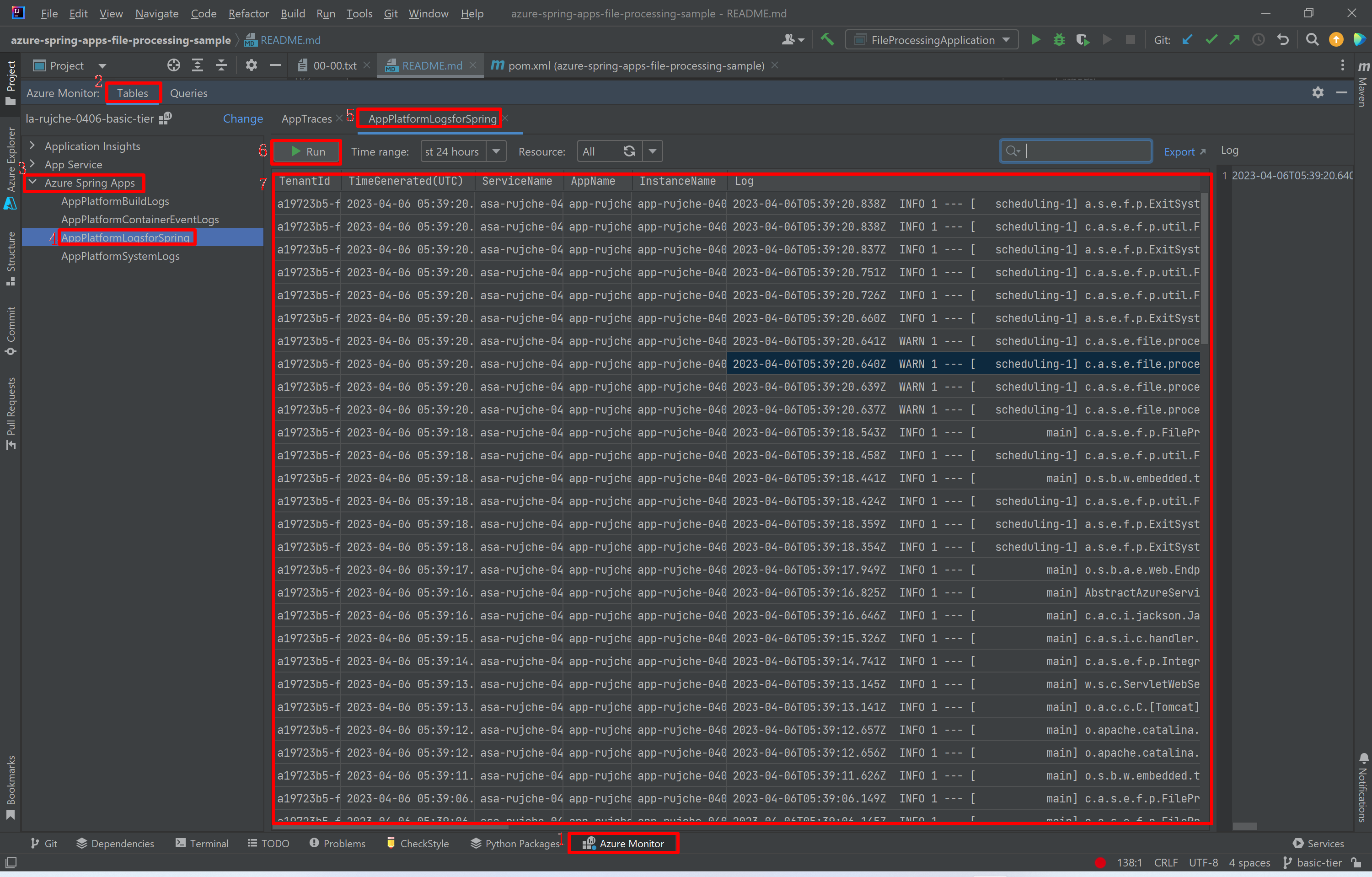

2.3. Check Details About File Processing

-

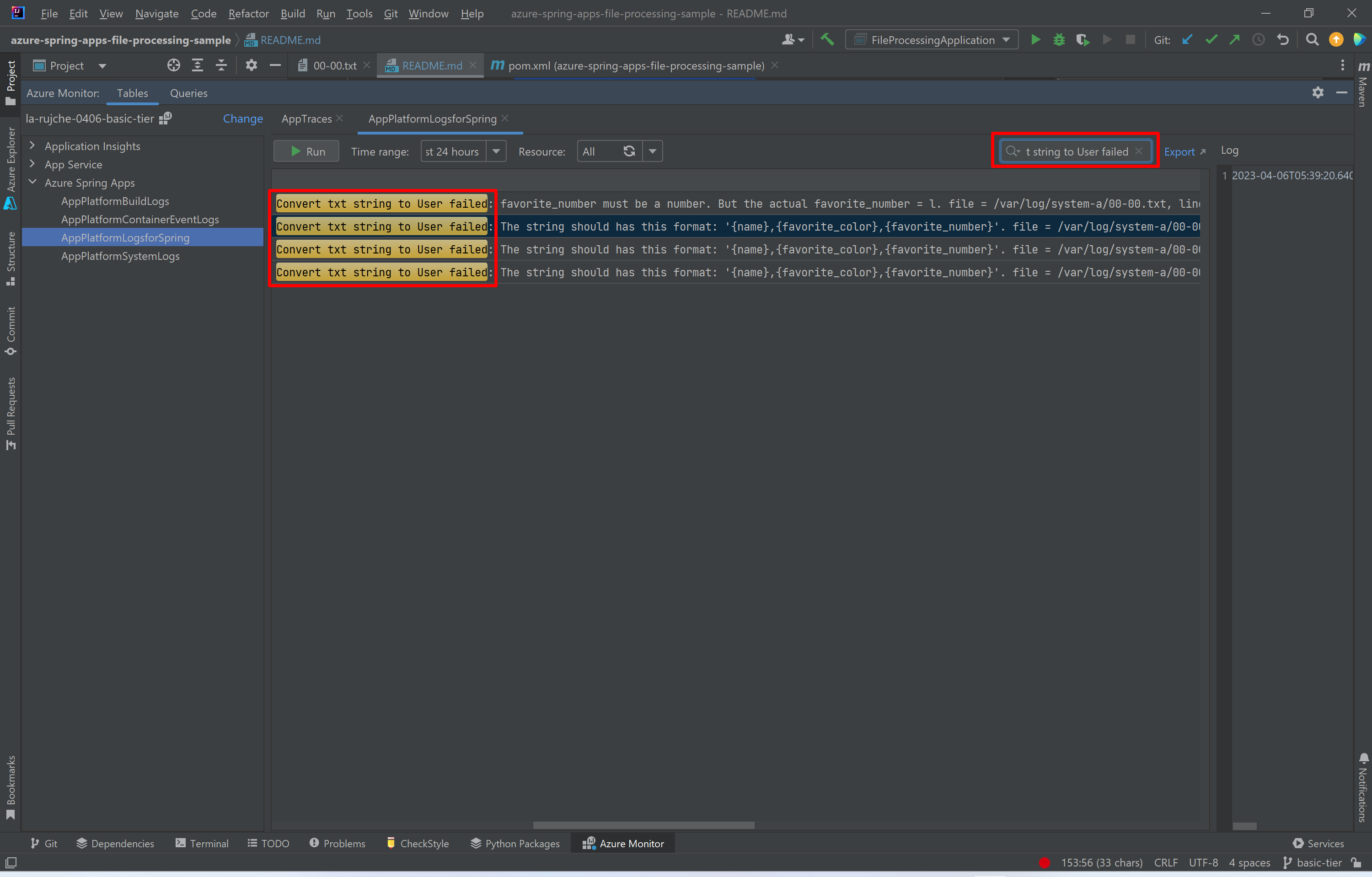

Check logs by Azure Toolkit for IntelliJ.

-

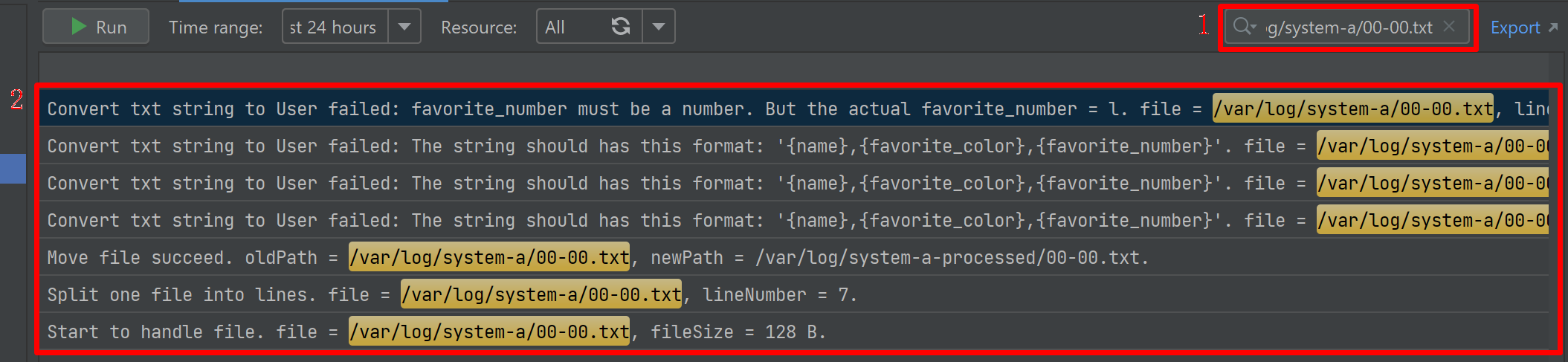

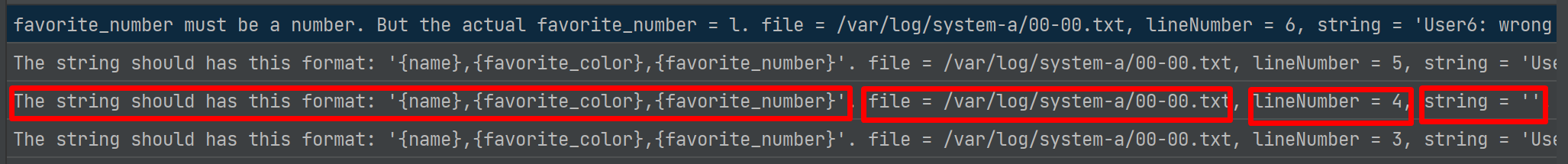

Get log of specific error.

Input

Convert txt string to User failedin search box: Error details can be found in the log:

Error details can be found in the log:

-

Get all logs about a specific file.

-

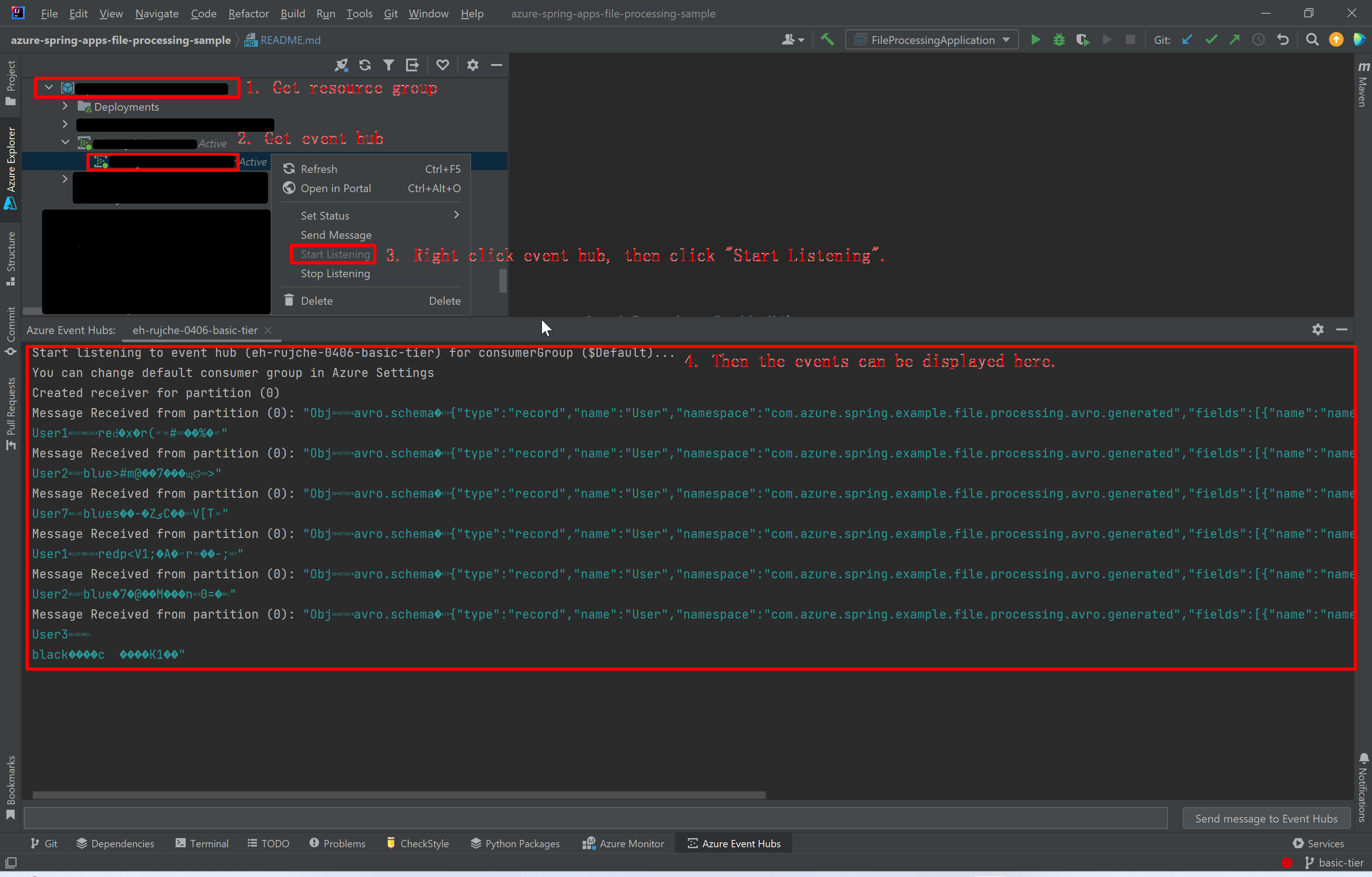

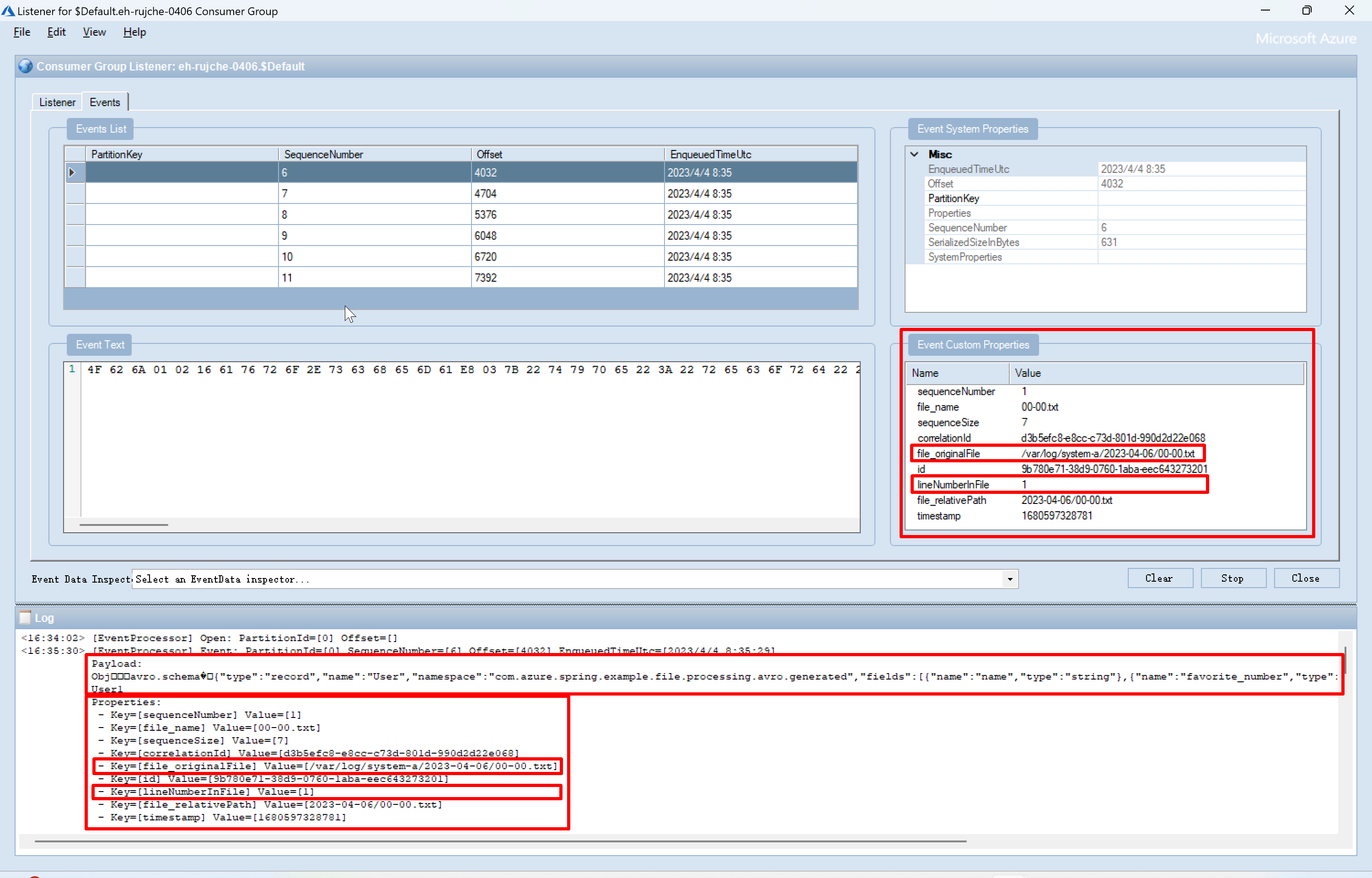

Check events in Azure Event Hubs.

Check events by Azure Toolkit for IntelliJ:

Another way to check events is using ServiceBusExplorer. It can give more information about message properties:

3. Next Steps

3.1. Store Secrets in Azure Key Vault Secrets

Secrets can be stored in Azure Key Vault and used in this application. spring-cloud-azure-starter-keyvault is a useful library for reading secrets from Azure Key Vault in Spring Boot applications. It also supports refreshing the secrets at a fixed interval.

The following are some examples of secrets that could be used in the current application:

- Azure Event Hubs connection string.

- Passwords to access files. All passwords can be stored in a key-value map. Here is an example of such a map:

      [
        {"00-00.txt": "password-0"},
        {"00-01.txt": "password-1"},
        {"00-02.txt": "password-2"}
      ]

3.2. Auto Scaling

3.2.1. Scale 0 - 1

- Design

  - Scale to 0 instances when:

    - No file has needed handling for more than 1 hour.

  - Scale to 1 instance when one of these conditions is satisfied:

    - A file has existed for more than 1 hour.

    - File count > 100.

    - Total file size > 1 GB.

- Implement: Use Azure Blob Storage instead of Azure File Share so that the related KEDA scaler can be used.
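To make the scale 0 - 1 thresholds above concrete, here is a small plain-Java sketch of the decision rules. It only illustrates the design; it is not how KEDA is configured, and the class name `ScaleDecision`, its method names, and the choice to treat 1 GB as 1,000,000,000 bytes are all assumptions.

```java
import java.time.Duration;

// Hypothetical illustration of the design rules; KEDA would evaluate its own triggers.
class ScaleDecision {

    static final Duration MAX_FILE_AGE = Duration.ofHours(1);
    static final Duration MAX_IDLE_TIME = Duration.ofHours(1);
    static final int MAX_FILE_COUNT = 100;
    static final long MAX_TOTAL_BYTES = 1_000_000_000L; // 1 GB, decimal (assumption)

    /** Scale to 1 when any pending file is too old, or there are too many or too large files. */
    static boolean shouldScaleToOne(int fileCount, long totalBytes, Duration oldestFileAge) {
        if (fileCount <= 0) {
            return false; // nothing to process
        }
        return oldestFileAge.compareTo(MAX_FILE_AGE) > 0
                || fileCount > MAX_FILE_COUNT
                || totalBytes > MAX_TOTAL_BYTES;
    }

    /** Scale to 0 when no file has needed handling for more than one hour. */
    static boolean shouldScaleToZero(int fileCount, Duration timeSinceLastFile) {
        return fileCount == 0 && timeSinceLastFile.compareTo(MAX_IDLE_TIME) > 0;
    }
}
```

For example, `shouldScaleToOne(101, 10, Duration.ofMinutes(5))` is true because the file count exceeds 100, even though the files are recent and small.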

3.2.2. Scale 1 - n

- Design: Scale instance number according to file count and total file size.

- Implement: To avoid competition between instances, use a proven approach such as the master/slave model.