Table of Contents

- Live-streaming of swap events

- Processing of event data

- Dockerizing and writing into database

- Historical recording of swap events

- API service

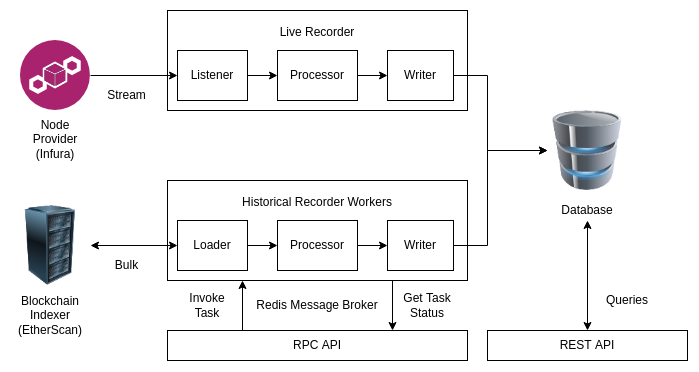

This project comprises the source code of:

1. Live Recording Streaming Service in `services/recording/src/live`
2. Historical Batch Recording RPC API Service in `services/recording/src/historical`
3. RESTful API Interface Service in `services/interface/src`

NOTE: 1. and 2. have shared dependencies -- mainly the src/events module that provides event-specific handlers for each type of event.

- Uniswap V3 Pool Contract's `Swap` event (`event_id=uniswap-v3-pool-swap`)

- `database` service - The MongoDB database
  - Exposed to host at port `27017` (accessible at `localhost:27017`)
- `live` service - Live events recording
- `redis` service - Message broker for historical batch recording tasks
- `historical-rpc-api` service - RPC endpoints to invoke the tasks
- `historical-workers` service - Celery workers to execute the tasks
- `interface` service - RESTful endpoints for reading from the database
- `proxy` service - Nginx proxy to route `RPC` and `RESTful` calls to the respective services
  - Exposed to host at port `80` (accessible at `localhost:80` or just `localhost`)

We can further categorize them as such:

- The database

- Live Recording
  - `live` service
- Historical Recording (RPC API)
  - `redis` service
  - `historical-rpc-api` service
  - `historical-workers` service
- RESTful API
  - `interface` service
- Proxy for API routing
  - `proxy` service

It is assumed that Python (version >= 3.9) and the package manager pip are already set up on your machine.

NOTE: Version should be >= 3.9 to facilitate type-hinting with native types.

$ python -m venv venv

$ source venv/bin/activate

$ pip install -r dev_requirements.txt

Use the provided shell script:

$ sh bin/run_all_tests.sh

Or individually cd into the services/interface and services/recording directories to run:

$ flake8 . --count --exit-zero

$ mypy --install-types --non-interactive .

$ pytest .

Before using, we need to specify some environment variables. The template can be found in .env.template. Simply copy the contents into a .env file in the root directory of the project and fill in the values for them.

NOTE: Do NOT change these 2 values if running on the same machine with the default docker-compose.yaml configurations.

DB_HOST=database

DB_PORT=27017

- `DB_HOST=database` refers to the name of the database service as per the `docker-compose.yaml` configuration.
- `DB_PORT=27017` refers to the default port for `MongoDB`, since we do not change it in the `docker-compose.yaml` configuration.

Examples of the NODE_PROVIDER_RPC_URI and NODE_PROVIDER_WSS_URI values are:

- `https://mainnet.infura.io/v3/<my-infura-api-key>`
- `wss://mainnet.infura.io/ws/v3/<my-infura-api-key>`
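To make the role of these variables concrete, here is a minimal sketch of how a service might read and validate them at start-up. The `Settings` class, the `load_settings` function, and the `mongo_uri` property are hypothetical names for illustration, not the project's actual code, and only the four variables named in this README are handled; the real `.env.template` may contain more.

```python
from dataclasses import dataclass
from typing import Mapping

# Only the variables mentioned in this README; the template may define others.
REQUIRED_KEYS = (
    "DB_HOST",
    "DB_PORT",
    "NODE_PROVIDER_RPC_URI",
    "NODE_PROVIDER_WSS_URI",
)


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the environment-derived settings."""

    db_host: str
    db_port: int
    node_provider_rpc_uri: str
    node_provider_wss_uri: str

    @property
    def mongo_uri(self) -> str:
        # Unauthenticated URI for illustration; credentials are out of scope here.
        return f"mongodb://{self.db_host}:{self.db_port}"


def load_settings(env: Mapping[str, str]) -> Settings:
    """Build Settings from a mapping such as os.environ, failing fast on gaps."""
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise ValueError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(
        db_host=env["DB_HOST"],
        db_port=int(env["DB_PORT"]),
        node_provider_rpc_uri=env["NODE_PROVIDER_RPC_URI"],
        node_provider_wss_uri=env["NODE_PROVIDER_WSS_URI"],
    )
```

In a running container this would typically be called as `load_settings(os.environ)`; with the default values, `mongo_uri` resolves to `mongodb://database:27017`.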

To run the services, we use docker-compose. Build it and run it with:

$ docker-compose build

$ docker-compose up

While the default run-time configurations are already provided, we can change them in the configs directory, for both live and historical recording.

The live recording configurations include:

- `gas_pricing`
  - `gas_currency` - e.g., ETH for Uniswap
  - `quote_currency` - e.g., USDT by default
- `subscriptions` (array of subscriptions)
  - `contract_address` - The address of the contract to subscribe to
    - e.g. `0x88e6A0c2dDD26FEEb64F039a2c41296FcB3f5640` for the `USDC-WETH` Uniswap V3 Pool
  - `event_id` - e.g., `uniswap-v3-pool-swap` for Uniswap V3 Pool's `Swap` events, which helps to recognize the event type and thus, the event handler.
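As an illustration of how an `event_id` can select an event handler, the sketch below represents the live recording configuration as a Python dict and resolves each subscription to a handler through a registry. The on-disk format of the config files is not shown in this README, and `LIVE_CONFIG`, `HANDLERS`, `handle_uniswap_v3_pool_swap`, and `resolve_handlers` are hypothetical names, not the project's actual `src/events` API.

```python
from typing import Any, Callable

# Hypothetical in-memory form of the live recording configuration.
LIVE_CONFIG: dict[str, Any] = {
    "gas_pricing": {"gas_currency": "ETH", "quote_currency": "USDT"},
    "subscriptions": [
        {
            "contract_address": "0x88e6A0c2dDD26FEEb64F039a2c41296FcB3f5640",
            "event_id": "uniswap-v3-pool-swap",
        }
    ],
}

EventHandler = Callable[[dict[str, Any]], dict[str, Any]]


def handle_uniswap_v3_pool_swap(raw_event: dict[str, Any]) -> dict[str, Any]:
    """Placeholder handler: a real one would decode and enrich the Swap event."""
    return {
        "event_id": "uniswap-v3-pool-swap",
        "transaction_hash": raw_event["transaction_hash"],
    }


# Registry keyed by event_id; supporting a new event type means adding an entry.
HANDLERS: dict[str, EventHandler] = {
    "uniswap-v3-pool-swap": handle_uniswap_v3_pool_swap,
}


def resolve_handlers(config: dict[str, Any]) -> dict[str, EventHandler]:
    """Map each subscribed contract address to the handler for its event_id."""
    resolved: dict[str, EventHandler] = {}
    for subscription in config["subscriptions"]:
        event_id = subscription["event_id"]
        if event_id not in HANDLERS:
            raise KeyError(f"No handler registered for event_id {event_id!r}")
        resolved[subscription["contract_address"]] = HANDLERS[event_id]
    return resolved
```

Failing on an unknown `event_id` at start-up, rather than when the first event arrives, surfaces configuration mistakes before any data is missed.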

The historical recording configurations include:

- `gas_pricing`
  - `gas_currency` - e.g., ETH for Uniswap
  - `quote_currency` - e.g., USDT by default

NOTE: These config files are currently loaded into the containers with docker volumes. Simply stop the containers and restart them to update.

Once the services are up and running, we can head over to the welcome page @ http://localhost (i.e., http://localhost:80):

From the welcome page, we can navigate to either the RESTful API Docs or RPC API Docs (recall they are two separate services, merely proxied by Nginx).

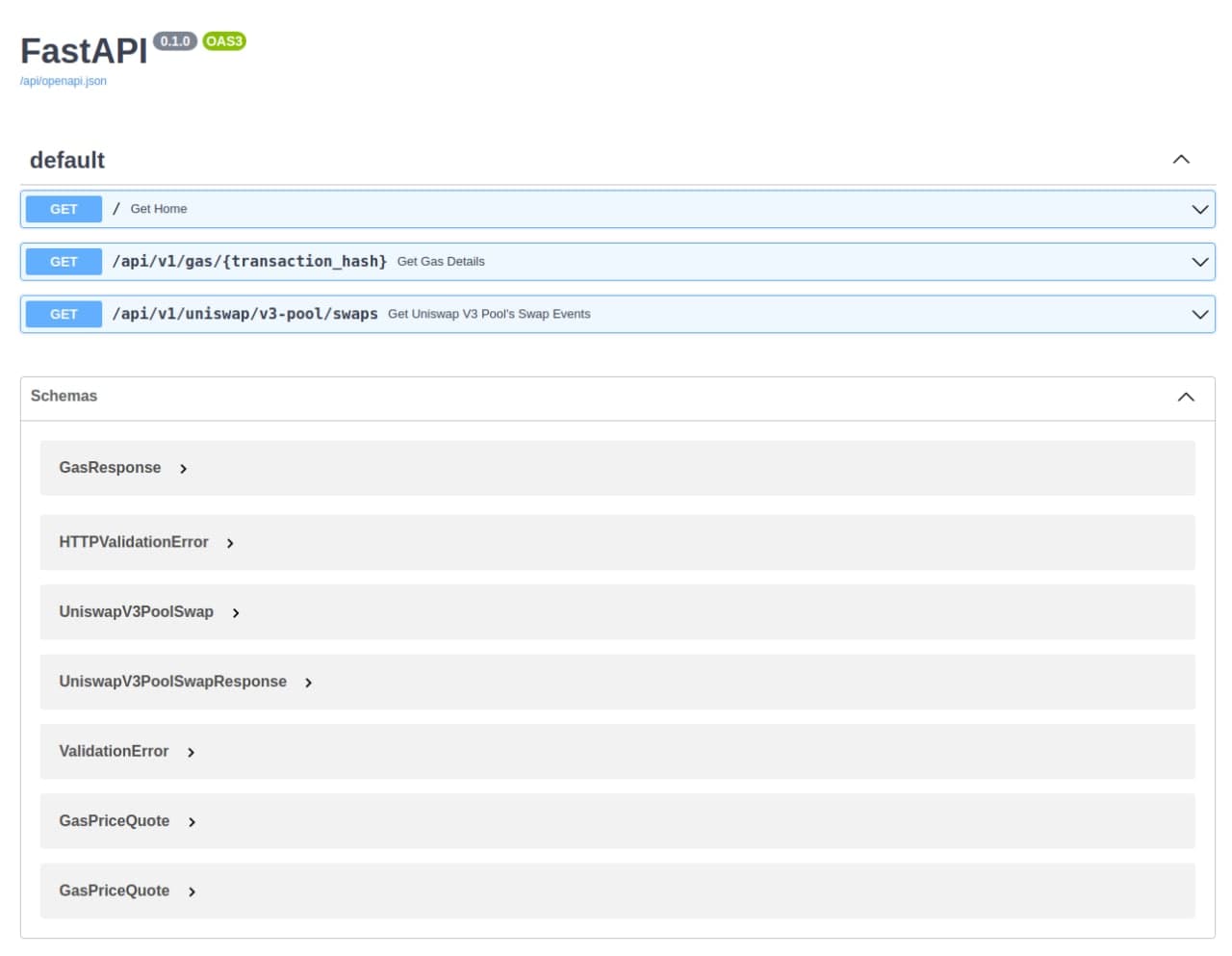

REST API Docs:

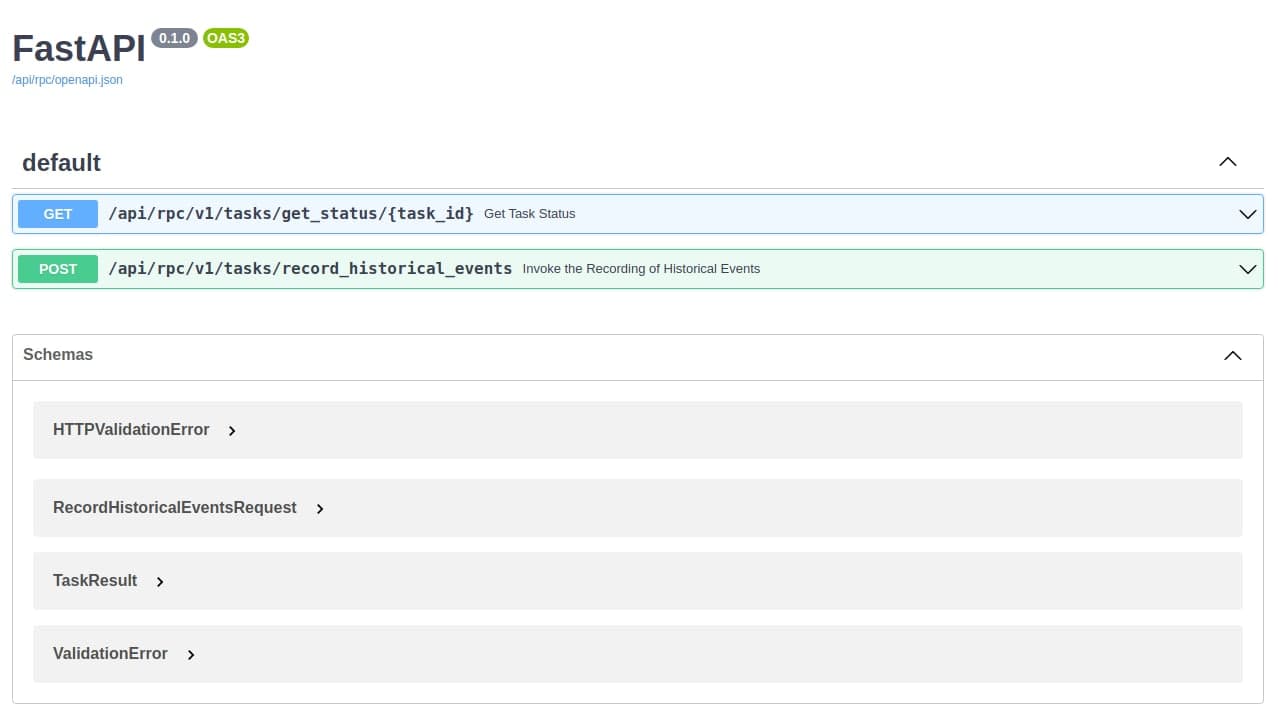

RPC API Docs:

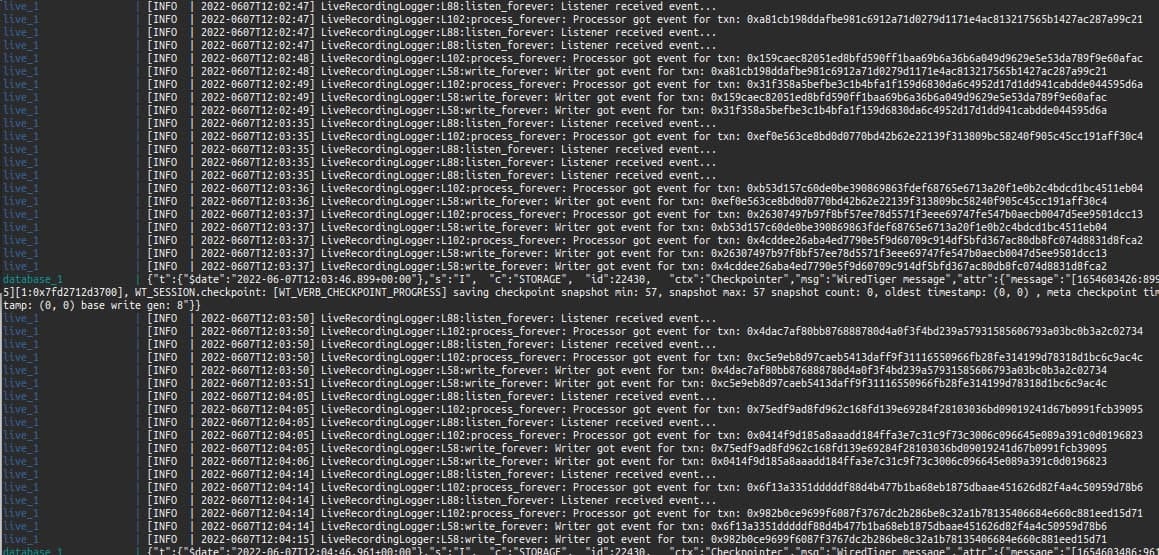

While we were busy exploring the documentation, the live recording service has already started recording live data:

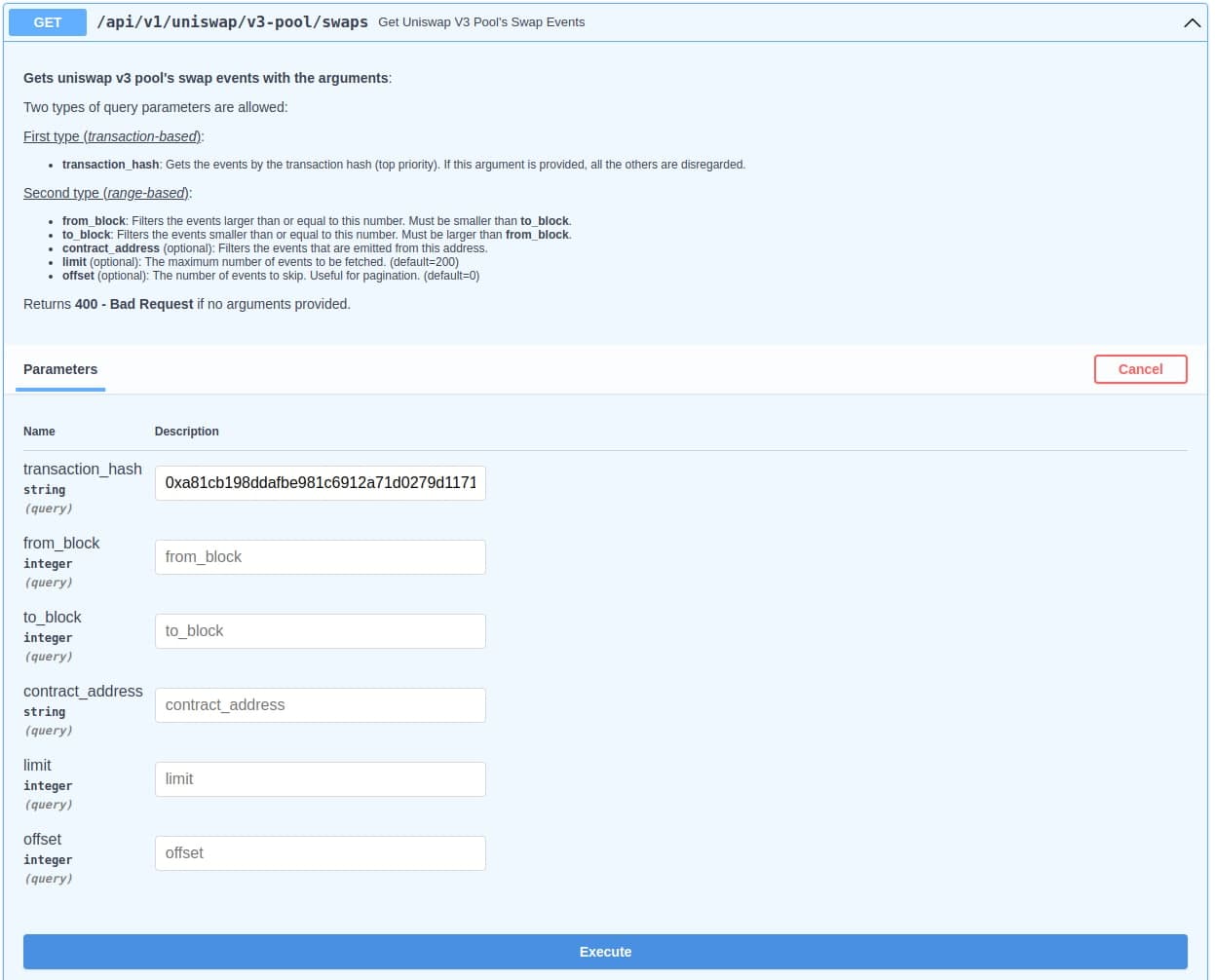

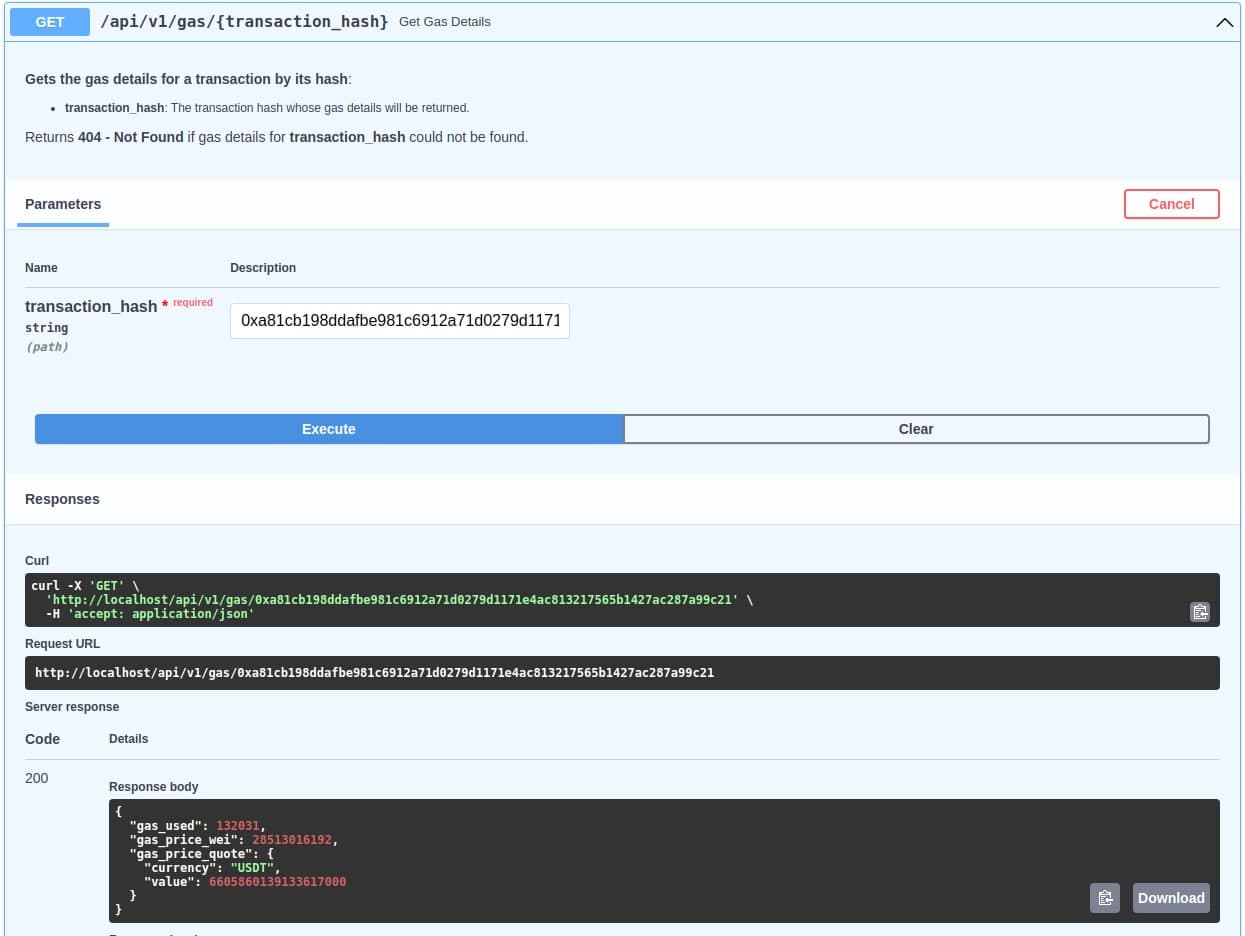

Say we are interested in transaction hash `0xa81cb198ddafbe981c6912a71d0279d1171e4ac813217565b1427ac287a99c21`, the first one logged in the image above. We can make a GET request to the REST API:

and voila! We get our response:

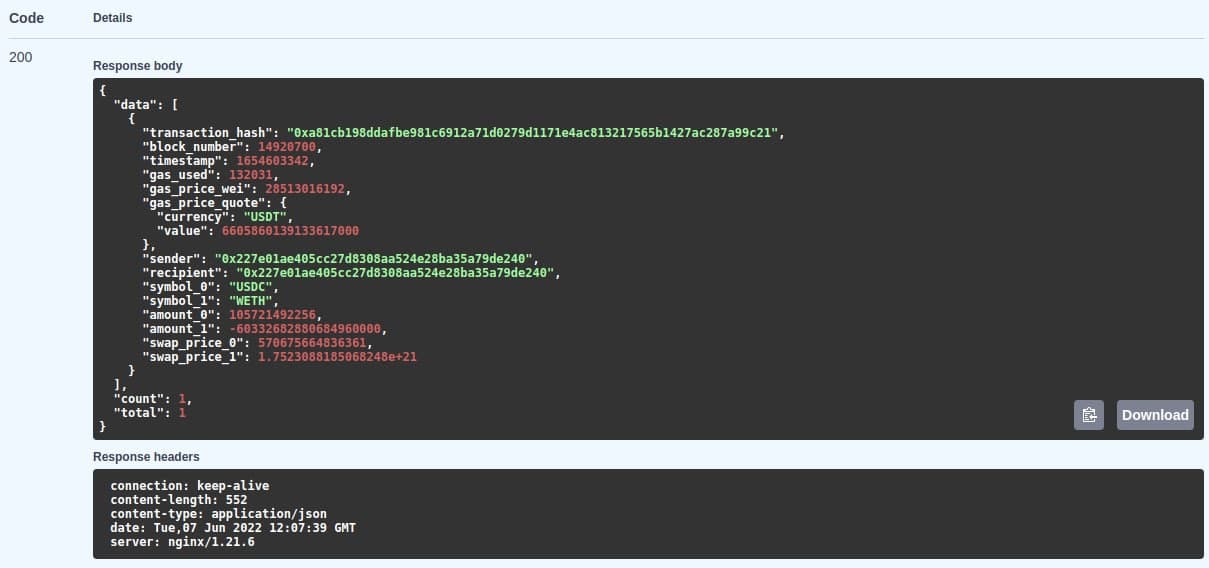

Alternatively, if we are only interested in the transaction gas fees, we make a call to the gas route:

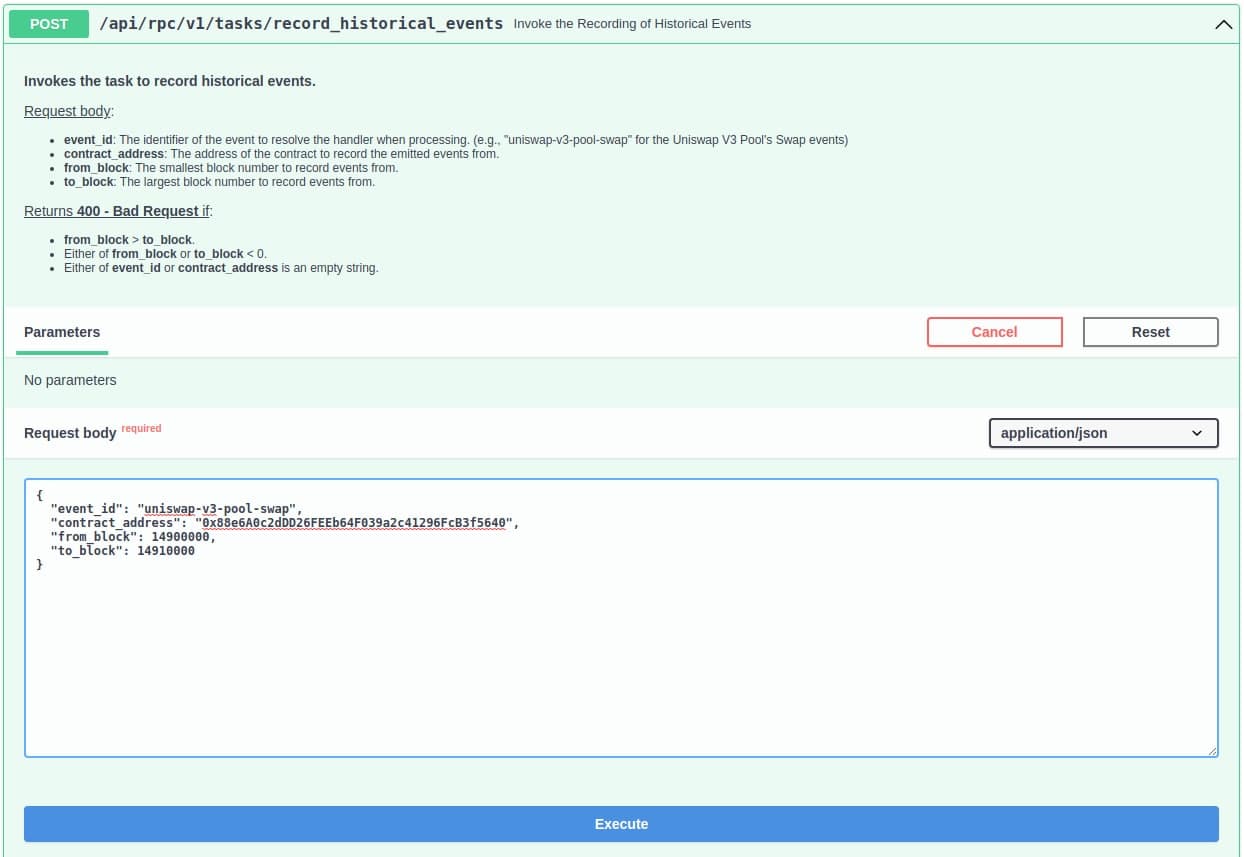

Now, let's say we are interested in events that were emitted by the USDC-WETH Uniswap V3 Pool contract in the past, say from block height 14900000 to 14910000. We can use the RPC API to invoke a historical batch recording task:

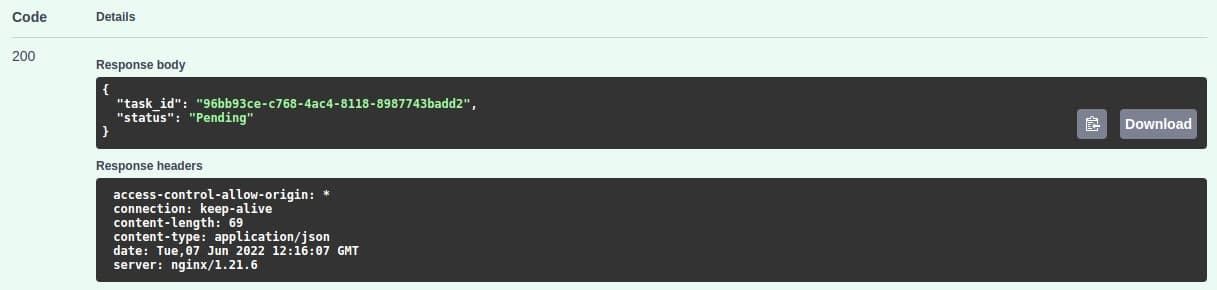

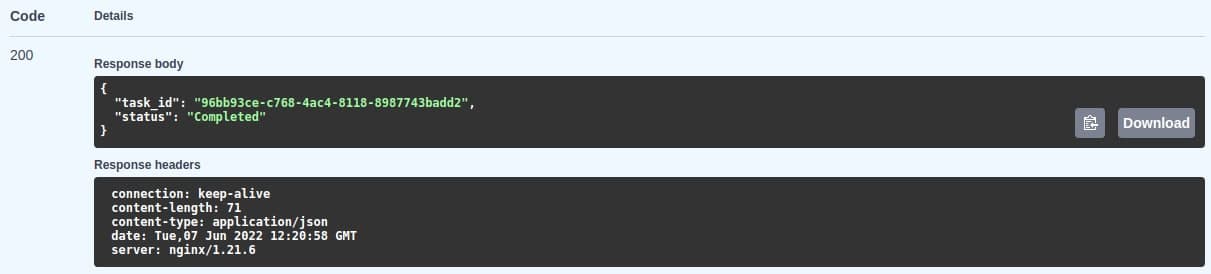

We should get back the task_id:

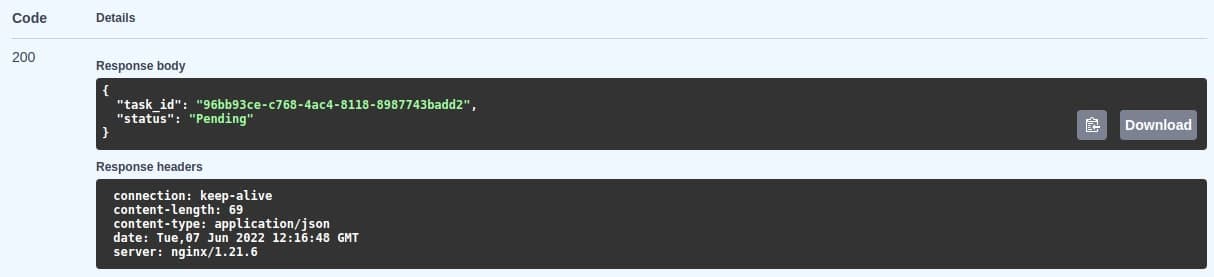

If we try to get the status of the task immediately, we'll see that it is still pending... (of course)

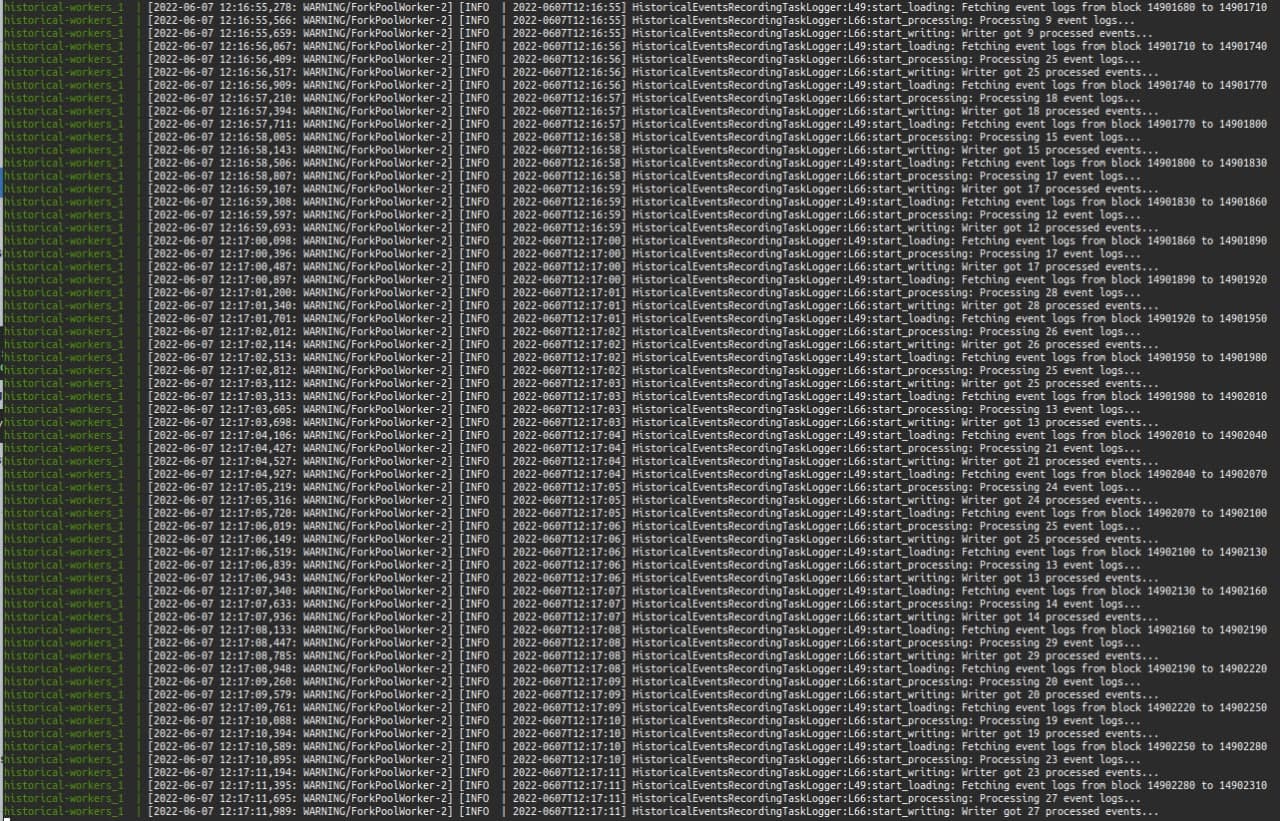

Then we see from the logs that the task has indeed started running:

After some time, we check the status of the task again and see that it has completed:

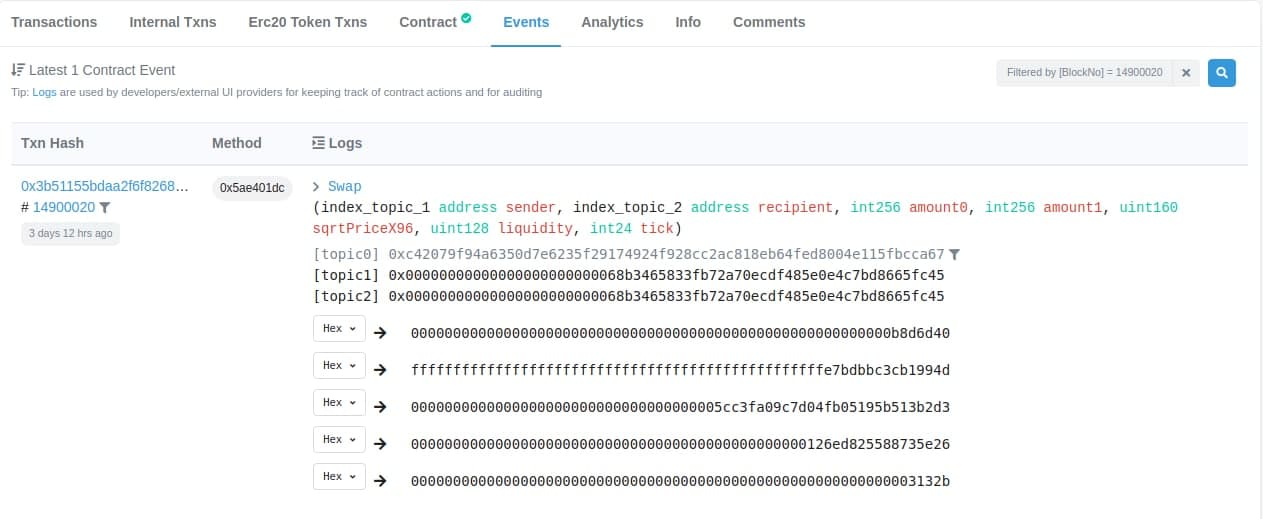
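Instead of checking the status by hand, a client could poll until the task finishes. The sketch below shows one way to do that. The status lookup is injected as a function because the actual RPC route is not reproduced here, and the terminal state names are assumed to be Celery's built-in ones; the RPC API may report different labels. `wait_for_task` is a hypothetical helper.

```python
import time
from typing import Callable

# Assumption: Celery's built-in terminal states. Adjust if the API maps them.
TERMINAL_STATES = {"SUCCESS", "FAILURE", "REVOKED"}


def wait_for_task(
    get_status: Callable[[str], str],
    task_id: str,
    interval_s: float = 5.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status(task_id) until a terminal state or the timeout is hit."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status(task_id).upper()
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Task {task_id} still {status} after {timeout_s}s")
        sleep(interval_s)
```

Here `get_status` would wrap whatever HTTP call the RPC API Docs describe for task status; passing it in keeps the polling logic testable without a running stack.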

Now, suppose we are interested in a swap event from block 14900020, as seen on EtherScan:

And its corresponding transaction whose hash is 0x3b51155bdaa2f6f8268445a7bb5d7e1abc8ea983be34b7e3dcc592c9a3f230c7:

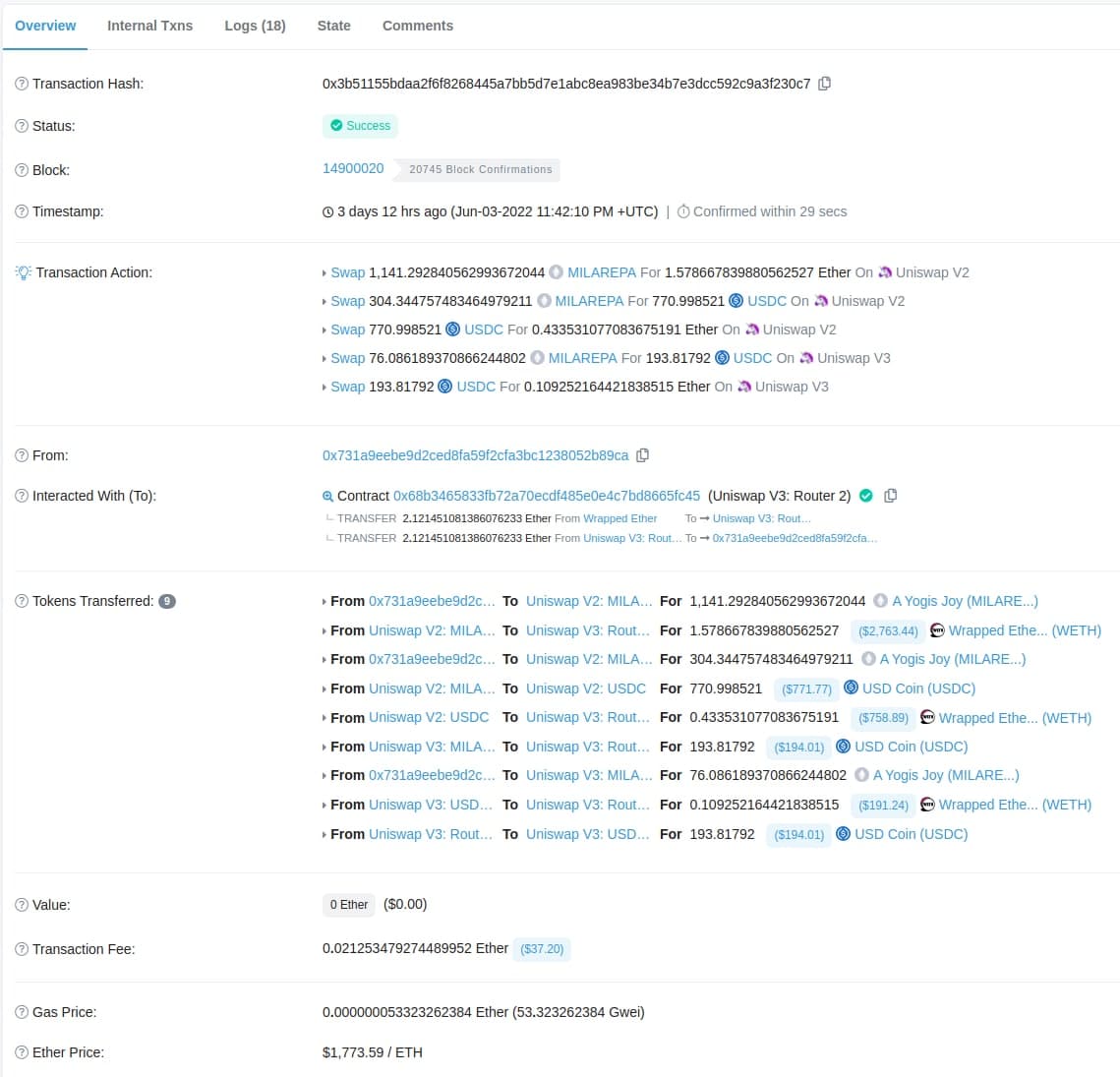

We can head back to the REST API to retrieve the details for this transaction:

We see that we have correctly recorded that

- `gas_used` = `398578` units
- `gas_price` = `53323262384` wei (`53.323262384` gwei)
- `gas_price` = around `37.74` USDT

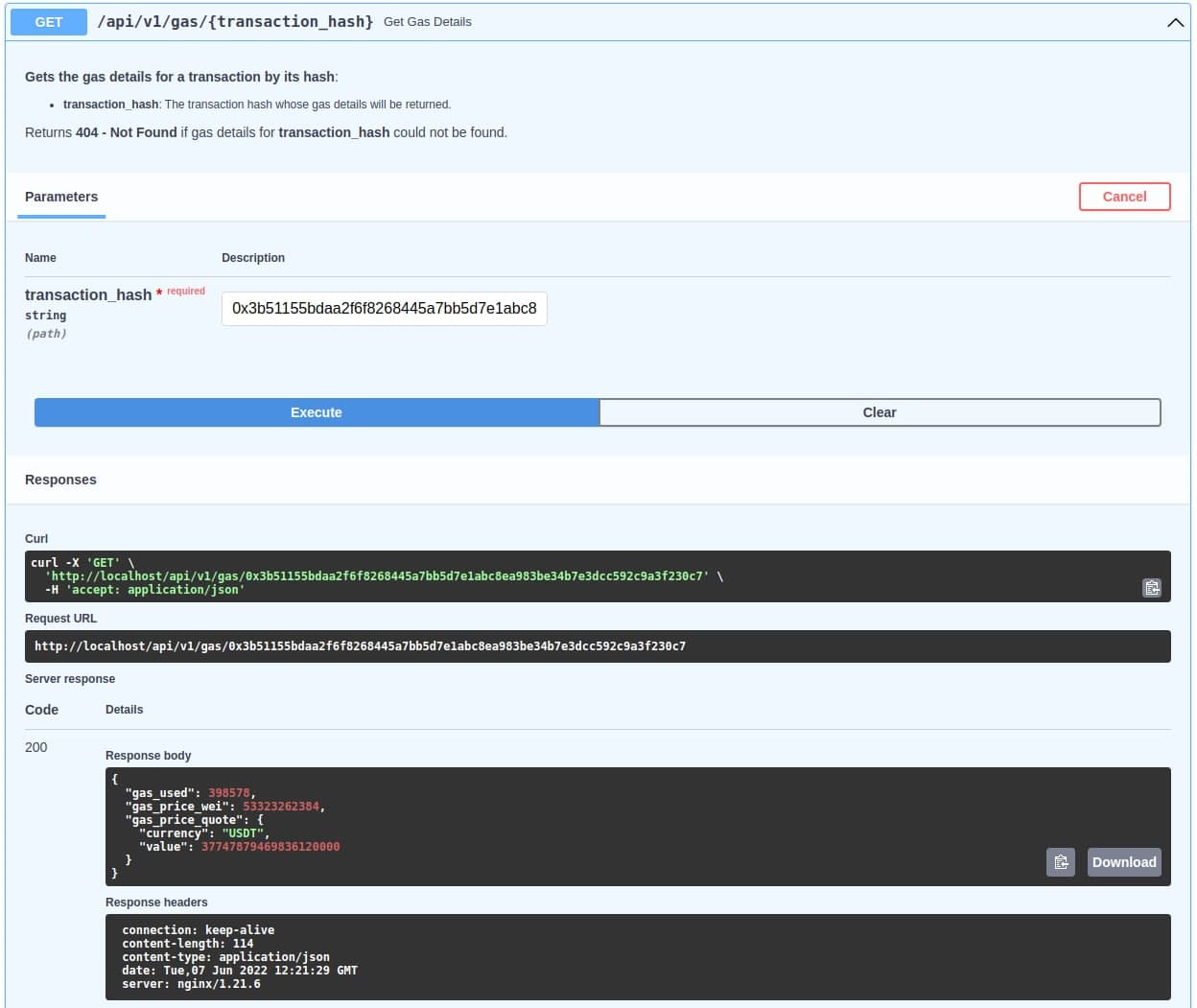

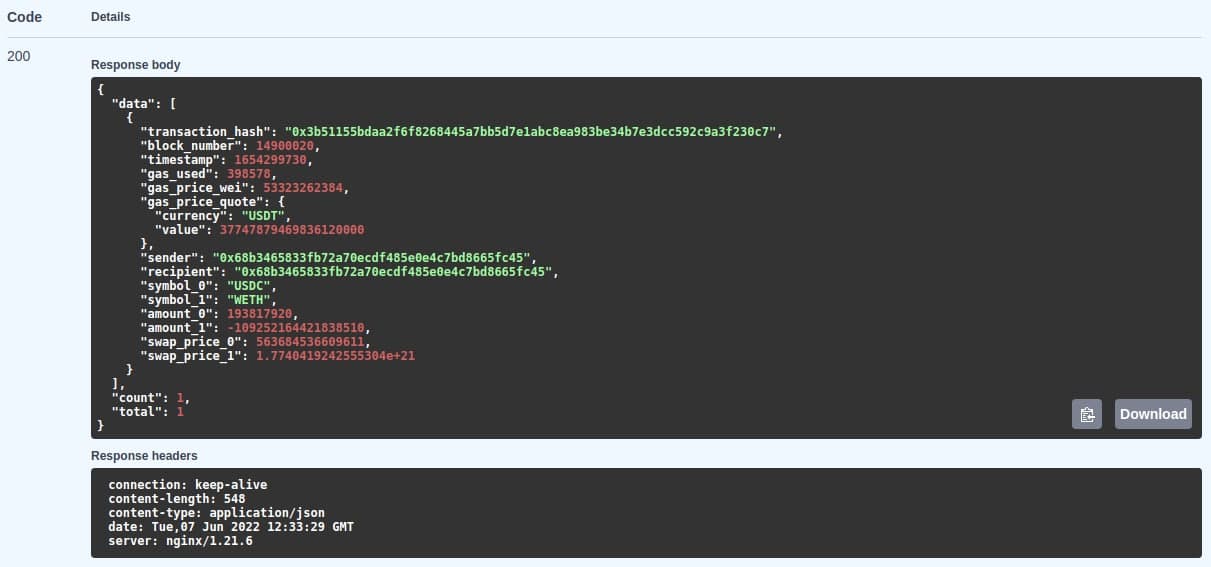
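These figures can be cross-checked with a little arithmetic. The sketch below assumes the USDT figure is the total transaction fee (`gas_used` multiplied by the gas price) converted to the quote currency, which the numbers are consistent with. The ETH/USDT rate is not stated in this README, so it is only derived here as an implied value.

```python
from decimal import Decimal

WEI_PER_GWEI = Decimal(10) ** 9
WEI_PER_ETH = Decimal(10) ** 18

gas_used = 398578              # units, as recorded
gas_price_wei = 53323262384    # wei per unit of gas, as recorded

# Unit conversion: 53323262384 wei is 53.323262384 gwei.
gas_price_gwei = Decimal(gas_price_wei) / WEI_PER_GWEI

# Total fee paid for the transaction, first in wei and then in ETH.
fee_wei = gas_used * gas_price_wei
fee_eth = Decimal(fee_wei) / WEI_PER_ETH

# Assumption: 37.74 USDT is this fee expressed in the quote currency.
fee_usdt = Decimal("37.74")
implied_eth_usdt = fee_usdt / fee_eth

print(f"gas price: {gas_price_gwei} gwei")
print(f"fee: {fee_eth} ETH")
print(f"implied ETH/USDT rate: {implied_eth_usdt:.2f}")
```

The fee works out to about 0.02125 ETH, so a quote of roughly 37.74 USDT implies an ETH price in the mid-1770s USDT, in line with the swap prices recorded for the same block.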

To get more details, we can also get the entire swap event's data from the swaps endpoint:

We see that we have also recorded the swap prices:

- `swap_price_0` - Price of `token_0` quoted in `token_1` (`0.000563684536609611` WETH per USDC)
- `swap_price_1` - Price of `token_1` quoted in `token_0` (roughly `1774.04192` USDC per WETH)
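The two prices are reciprocals of each other, which gives a quick sanity check on a recorded swap. The snippet below only verifies that relationship for the values quoted above; it does not show how the service derives the prices from the event data.

```python
from decimal import Decimal

# Recorded value: WETH per USDC.
swap_price_0 = Decimal("0.000563684536609611")

# Inverting it should give USDC per WETH, i.e. the recorded swap_price_1.
swap_price_1 = 1 / swap_price_0

print(f"swap_price_1 = {swap_price_1:.5f} USDC per WETH")
```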

Note that, as seen earlier in the EtherScan screenshot of the transaction, a single transaction can comprise multiple swap events, so the response is an array of results. If we were also recording the other events, they would appear in the same response.