VisDB: Visibility-aware Dense Body

Introduction

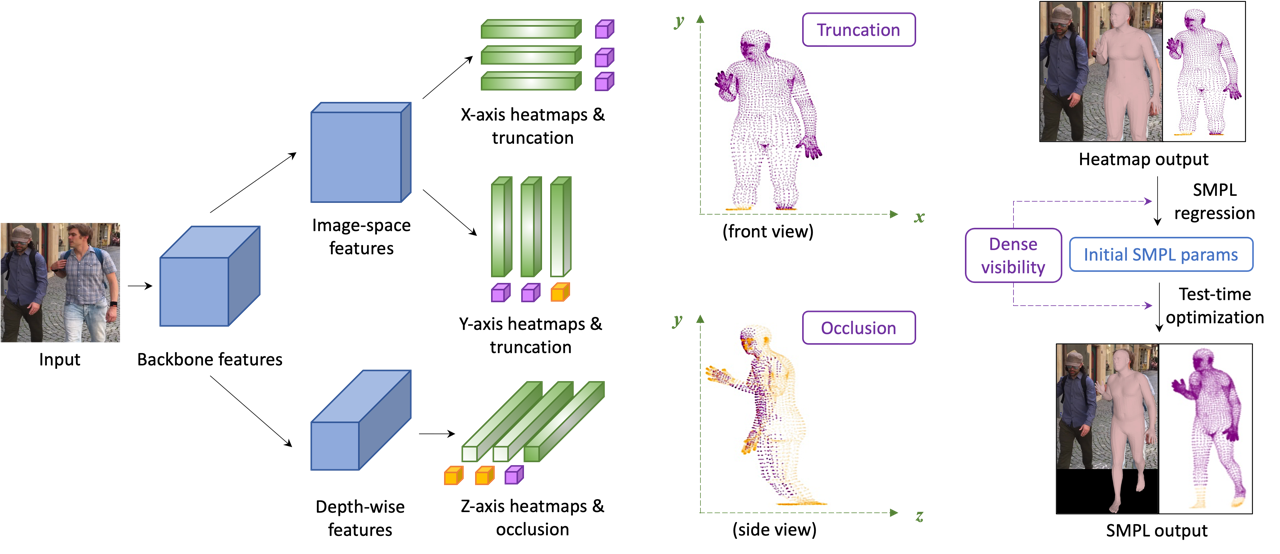

This repo contains the PyTorch implementation of "Learning Visibility for Robust Dense Human Body Estimation" (ECCV 2022). Extending the heatmap-based representation of I2L-MeshNet, we explicitly model the dense visibility of human joints and vertices to improve robustness on partial-body images.
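To make the two ingredients concrete, here is a minimal, hypothetical sketch in plain Python (not code from this repository). It reads a coordinate out of a 1D heatmap by soft-argmax, in the spirit of I2L-MeshNet's lixel-based representation, and computes an L1 loss that ignores entries whose visibility flag is 0. The names `soft_argmax_1d` and `visibility_masked_l1` and the masking scheme are illustrative assumptions; VisDB's actual visibility modeling and losses are defined in its code.

```python
import math
from typing import Sequence

def soft_argmax_1d(heatmap: Sequence[float]) -> float:
    """Expected bin index under a softmax over one 1D heatmap (illustrative only)."""
    peak = max(heatmap)
    weights = [math.exp(v - peak) for v in heatmap]  # subtract the max for numerical stability
    total = sum(weights)
    return sum(i * w for i, w in enumerate(weights)) / total

def visibility_masked_l1(pred: Sequence[float], target: Sequence[float],
                         visible: Sequence[float]) -> float:
    """Mean absolute error over entries flagged visible (hypothetical loss, not VisDB's)."""
    error = sum(v * abs(p - t) for p, t, v in zip(pred, target, visible))
    count = sum(visible)
    return error / count if count > 0 else 0.0

# A sharp peak at bin 2 decodes to a coordinate very close to 2.0.
x = soft_argmax_1d([0.0, 0.0, 10.0, 0.0, 0.0])
# The third entry is flagged invisible (0), so its large error does not count.
loss = visibility_masked_l1([1.0, 2.0, 3.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0])
```

Soft-argmax keeps the decoding step differentiable, which is why it is a common choice for reading coordinates out of heatmaps during training.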

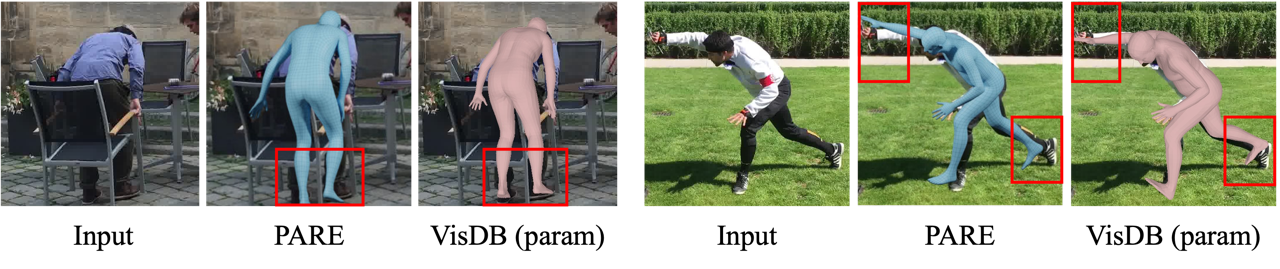

Example Results

We show qualitative comparisons against prior methods that handle occlusions: PARE and METRO. PARE and METRO are generally robust to occlusions, but VisDB aligns better with the images thanks to its accurate dense heatmap and visibility estimations.

Setup

We implement VisDB with Python 3.7.10 and PyTorch 1.8.1. Our code is mainly built upon this repo: I2L-MeshNet.

- Install PyTorch and Python >= 3.7.3 and run `sh requirements.sh`.
- Change the `torchgeometry` kernel code slightly following here.
- Download the pre-trained VisDB models (available soon).
- Download `basicModel_f_lbs_10_207_0_v1.0.0.pkl` and `basicModel_m_lbs_10_207_0_v1.0.0.pkl` from here and `basicModel_neutral_lbs_10_207_0_v1.0.0.pkl` from here. Place them under `common/utils/smplpytorch/smplpytorch/native/models/`.
- Download the DensePose UV files `UV_Processed.mat` and `UV_symmetry_transforms.mat` from here and place them under `common/`.
- Download the GMM prior `gmm_08.pkl` from here and place it under `common/`.
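Because several files must land in specific folders, a quick check before training or running the demo can save a failed launch. The sketch below lists the paths from the steps above and reports which are missing. `REQUIRED_FILES` and `missing_files` are hypothetical names, not part of the repository, and the script assumes it is run from the repository root.

```python
from pathlib import Path
from typing import List

# Paths from the Setup steps, relative to the repository root.
SMPL_DIR = "common/utils/smplpytorch/smplpytorch/native/models/"
REQUIRED_FILES = (
    SMPL_DIR + "basicModel_f_lbs_10_207_0_v1.0.0.pkl",
    SMPL_DIR + "basicModel_m_lbs_10_207_0_v1.0.0.pkl",
    SMPL_DIR + "basicModel_neutral_lbs_10_207_0_v1.0.0.pkl",
    "common/UV_Processed.mat",
    "common/UV_symmetry_transforms.mat",
    "common/gmm_08.pkl",
)

def missing_files(repo_root: str = ".") -> List[str]:
    """Return the required files that are not present under repo_root."""
    root = Path(repo_root)
    return [rel for rel in REQUIRED_FILES if not (root / rel).is_file()]

if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All required model and prior files found.")
```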

Quick demo

- Prepare `input.jpg` and the pre-trained snapshot in the `demo/` folder.
- Go to the `demo` folder and edit `bbox` in `demo.py`.
- Run `python demo.py --gpu 0 --stage param --test_epoch 8` if you want to run on GPU 0.
- Output images can be found under `demo/`.
- If you run this code in an SSH environment without a display device, do the following:
  1. Install OSMesa following https://pyrender.readthedocs.io/en/latest/install/
  2. Reinstall the specific PyOpenGL fork: https://github.com/mmatl/pyopengl
  3. Set OpenGL's backend to EGL or OSMesa via `os.environ["PYOPENGL_PLATFORM"] = "egl"` (or `"osmesa"`).
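The backend variable from step 3 only takes effect if it is set before pyrender (and therefore PyOpenGL) is imported, so it belongs at the very top of the script that renders. A minimal sketch, using a hypothetical helper `configure_headless` that accepts only the two backends named above:

```python
import os

def configure_headless(backend: str = "egl") -> str:
    """Select a headless OpenGL platform; must run before pyrender/PyOpenGL is imported."""
    if backend not in ("egl", "osmesa"):
        raise ValueError(f"unsupported backend: {backend!r}")
    os.environ["PYOPENGL_PLATFORM"] = backend
    return backend

if __name__ == "__main__":
    configure_headless("egl")  # use "osmesa" if you installed OSMesa instead of EGL
    try:
        import pyrender  # noqa: F401  imported only after the variable is set
        print("pyrender loaded with PYOPENGL_PLATFORM =", os.environ["PYOPENGL_PLATFORM"])
    except Exception as err:  # missing EGL/OSMesa libraries can surface as different error types
        print("pyrender could not be loaded:", err)
```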

Usage

Data preparation

- Download training and testing data (MSCOCO, MuCo, Human3.6M, and 3DPW) following I2L-MeshNet's instructions.
- Run DensePose on the images following here and store the UV estimations in the individual data directories (e.g. `ROOT/data/PW3D/dp/`).
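The DensePose outputs are stored per dataset, following the `ROOT/data/PW3D/dp/` example. A minimal sketch that builds (and optionally creates) one such `dp/` folder per dataset. The folder names `MSCOCO`, `MuCo`, `Human36M`, and `PW3D` are an assumption based on I2L-MeshNet's data layout, and `densepose_dirs` is a hypothetical helper, so adjust both to match your `data/` directory.

```python
from pathlib import Path
from typing import Dict

# Assumed dataset folder names (I2L-MeshNet-style layout); adjust to your data/ directory.
DATASETS = ("MSCOCO", "MuCo", "Human36M", "PW3D")

def densepose_dirs(root: str, create: bool = False) -> Dict[str, Path]:
    """Map each dataset to ROOT/data/<dataset>/dp/, optionally creating the folders."""
    dirs = {name: Path(root) / "data" / name / "dp" for name in DATASETS}
    if create:
        for path in dirs.values():
            path.mkdir(parents=True, exist_ok=True)
    return dirs
```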

Training

1. lixel stage

First, train VisDB in the lixel stage. In the `main/` folder, run

`python train.py --gpu 0-3 --stage lixel` to train the lixel model on GPUs 0-3.
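Here `--gpu 0-3` denotes the inclusive range of GPUs 0, 1, 2, and 3. If you launch jobs from your own scripts, a sketch like the following expands such a range into the comma-separated form that `CUDA_VISIBLE_DEVICES` expects. `expand_gpu_ids` is a hypothetical helper; the repository's own argument parsing may differ in details.

```python
import os

def expand_gpu_ids(spec: str) -> str:
    """Expand an inclusive range like '0-3' to '0,1,2,3'; other specs pass through unchanged."""
    if "-" in spec:
        start, end = (int(part) for part in spec.split("-"))
        return ",".join(str(i) for i in range(start, end + 1))
    return spec

# Restrict the process to GPUs 0-3; this must happen before any CUDA context is created.
os.environ["CUDA_VISIBLE_DEVICES"] = expand_gpu_ids("0-3")
```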

2. param stage

Once VisDB has been pre-trained in the lixel stage, resume training in the param stage. In the `main/` folder, run

`python train.py --gpu 0-3 --stage param --continue` to train the param model on GPUs 0-3.
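Because the param stage resumes from the lixel-stage training, the two commands must run in order. A minimal sketch of a driver that chains them and stops if the first stage fails. `stage_commands` and `run_training` are hypothetical helpers that only wrap the two commands above; they assume they are called from the repository root, with `main/` as the working directory for training.

```python
import subprocess
import sys
from typing import List

def stage_commands(gpus: str = "0-3") -> List[List[str]]:
    """The two training commands, in the order they have to run."""
    base = [sys.executable, "train.py", "--gpu", gpus, "--stage"]
    return [
        base + ["lixel"],
        base + ["param", "--continue"],  # resumes from the lixel-stage training
    ]

def run_training(gpus: str = "0-3", workdir: str = "main") -> None:
    """Run both stages inside workdir; check=True aborts if the lixel stage fails."""
    for cmd in stage_commands(gpus):
        subprocess.run(cmd, cwd=workdir, check=True)
```

Calling `run_training("0-3")` from the repository root is equivalent to typing the two commands in `main/` one after the other.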

Testing

Place the trained model in `output/model_dump/` and choose the stage you want to test (`lixel` or `param`).
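Before testing, it can help to confirm that the snapshot for the epoch you pass to `--test_epoch` is actually in `output/model_dump/`. The file name pattern `snapshot_<epoch>.pth.tar` below is an assumption borrowed from I2L-MeshNet's convention, and `find_snapshot` is a hypothetical helper; check the names your training run actually produced.

```python
from pathlib import Path
from typing import Optional

def find_snapshot(model_dir: str, epoch: int) -> Optional[Path]:
    """Return the snapshot path for `epoch` if it exists, else None.

    Assumes I2L-MeshNet-style names: snapshot_<epoch>.pth.tar
    """
    path = Path(model_dir) / f"snapshot_{epoch}.pth.tar"
    return path if path.is_file() else None
```

For example, `find_snapshot("output/model_dump", 8)` should return a path, not `None`, before you run the test with `--test_epoch 8`.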

In the `main/` folder, run

`python test.py --gpu 0-3 --stage $STAGE --test_epoch 8`, with `$STAGE` set to `lixel` or `param`.

Contact

Chun-Han Yao: cyao6@ucmerced.edu

Citation

If you find our project useful in your research, please consider citing:

@inproceedings{yao2022learning,
  title={Learning visibility for robust dense human body estimation},
  author={Yao, Chun-Han and Yang, Jimei and Ceylan, Duygu and Zhou, Yi and Zhou, Yang and Yang, Ming-Hsuan},
  booktitle={European conference on computer vision (ECCV)},
  year={2022}
}