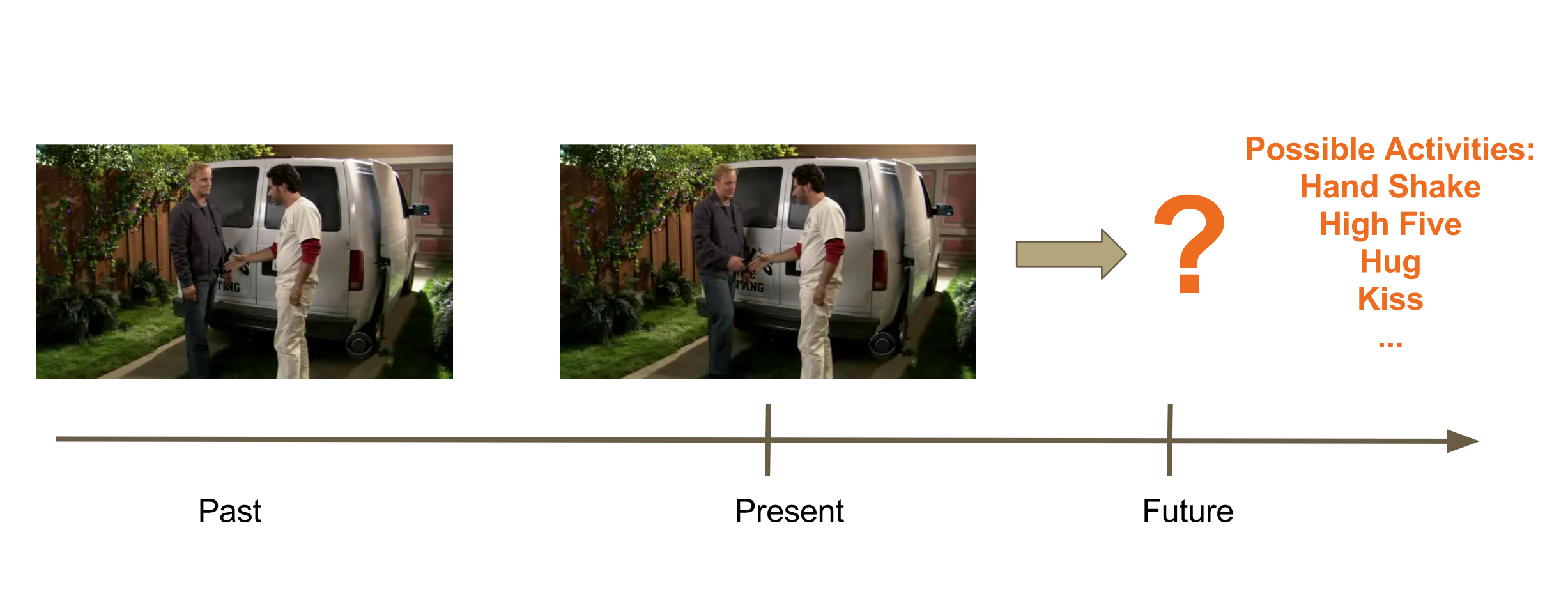

Human activity anticipation from videos using an LSTM-based model.

- Python

- NumPy

- TensorFlow 1.0

- scikit-image

- Matplotlib

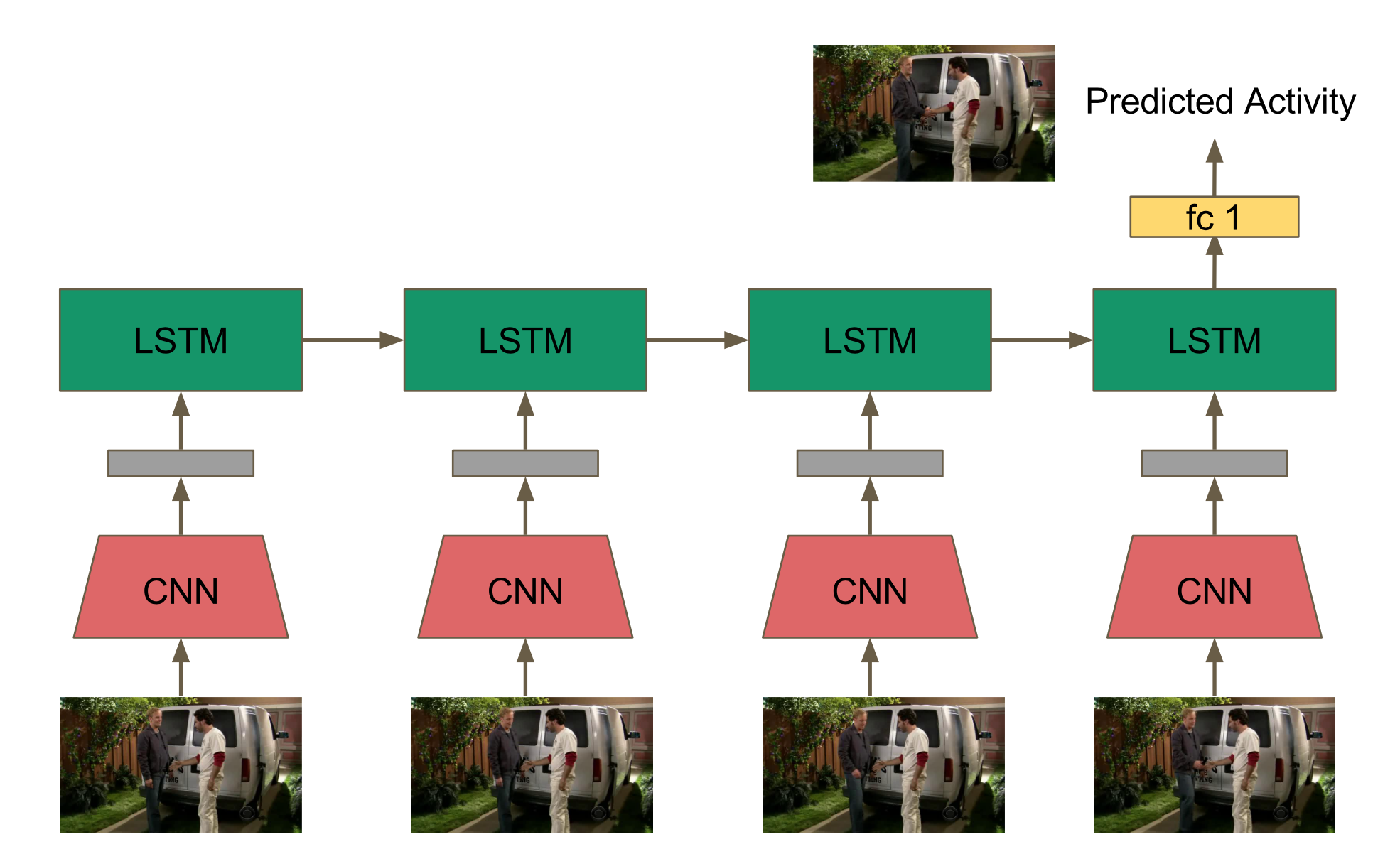

A CNN extracts features from each frame, and the resulting feature sequence is passed to an LSTM, which is a separate network.
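As a rough illustration of this two-stage design, the sketch below runs a single-layer LSTM over a sequence of pre-extracted frame features using NumPy. It is not the code in this repository. The 2048-dimensional feature size (the size of Inception V3's final pooled output; which layer this project actually uses is not stated here), the hidden size, the random weights, and the choice to classify from the last hidden state are all assumptions made for the example.

```python
import numpy as np

NUM_CLASSES = 4      # hand shake, high five, hug, kiss
FEATURE_DIM = 2048   # assumed size of the per-frame CNN feature vector
HIDDEN_DIM = 64      # assumed LSTM hidden size


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_forward(features, w, b):
    """Run one LSTM layer over features of shape (num_frames, FEATURE_DIM)."""
    h = np.zeros(HIDDEN_DIM)
    c = np.zeros(HIDDEN_DIM)
    for x in features:
        # One matrix multiply yields all four gate pre-activations.
        z = np.concatenate([x, h]) @ w + b
        i, f, o, g = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h


def classify(features, w, b, w_out, b_out):
    """Map the final hidden state to a probability for each activity."""
    logits = lstm_forward(features, w, b) @ w_out + b_out
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()


# Random weights stand in for trained parameters.
rng = np.random.default_rng(0)
w = rng.normal(scale=0.01, size=(FEATURE_DIM + HIDDEN_DIM, 4 * HIDDEN_DIM))
b = np.zeros(4 * HIDDEN_DIM)
w_out = rng.normal(scale=0.01, size=(HIDDEN_DIM, NUM_CLASSES))
b_out = np.zeros(NUM_CLASSES)

# Stand-in for the CNN features of 10 frames from one video.
frame_features = rng.normal(size=(10, FEATURE_DIM))
probs = classify(frame_features, w, b, w_out, b_out)
```

Because the CNN features are computed once and stored, the LSTM can be trained on its own without running the CNN again on every pass over the data.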

I use the TV Human Interactions (TVHI) dataset. It shows people performing four different actions: hand shake, high five, hug, and kiss. There are 200 videos in total, excluding the clips that don't contain any of the interactions.

Extract the downloaded dataset files, then store the videos in the ./videos directory and the annotations in the ./annotations directory.

For the CNN, I use Inception V3, pre-trained on ImageNet.

Extract the compressed file and put inception_v3.ckpt into the ./inception_checkpoint directory.
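Before running the scripts, it can help to confirm that the directory layout described so far is in place. The following is a small sketch using only the standard library. The helper name `missing_paths` is hypothetical and not part of this repository, and it assumes the checkpoint is a single file.

```python
import os

# Paths taken from the setup steps above, relative to the repository root.
EXPECTED_DIRS = ["./videos", "./annotations", "./inception_checkpoint"]
CHECKPOINT = "./inception_checkpoint/inception_v3.ckpt"


def missing_paths(root="."):
    """Return the expected paths that do not exist under root."""
    missing = [d for d in EXPECTED_DIRS
               if not os.path.isdir(os.path.join(root, d))]
    if not os.path.isfile(os.path.join(root, CHECKPOINT)):
        missing.append(CHECKPOINT)
    return missing


if __name__ == "__main__":
    for path in missing_paths():
        print("missing:", path)
```

An empty result means the videos, annotations, and checkpoint are where the later steps expect them.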

First, extract features from the frames before the annotated action begins in each video:

$ python preprocessing.py
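The idea of keeping only the frames that precede the action can be sketched as follows. This is an illustration, not the logic of preprocessing.py: the function name, the `num_frames` and `gap` parameters, and the assumption that the annotation gives the first frame of the action as a zero-based index are all hypothetical.

```python
def frames_before_action(action_start, num_frames=10, gap=0):
    """Return the indices of up to num_frames frames that end `gap` frames
    before the annotated action begins (zero-based, hypothetical helper)."""
    end = max(action_start - gap, 0)      # exclusive upper bound
    start = max(end - num_frames, 0)      # clip at the start of the video
    return list(range(start, end))


# An action annotated to start at frame 25, keeping the 5 frames before it.
selected = frames_before_action(25, num_frames=5)
```

Here `selected` holds frames 20 through 24. If the action starts too early in the clip, the helper simply returns fewer frames, so a real pipeline would need to decide whether to pad or skip such videos.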

Then, generate the train_file.csv file, which contains the ground-truth labels of the dataset:

$ python generate_video_mapping.py
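For readers who want a feel for what such a mapping file might look like, here is a minimal sketch built on the standard `csv` module. It is not the actual generate_video_mapping.py. It assumes that each video file name starts with its class name followed by an underscore (for example `handShake_0001.avi`), and the column names and integer label order are hypothetical.

```python
import csv
import io

# Assumed mapping from file-name prefix to integer label.
LABELS = {"handShake": 0, "highFive": 1, "hug": 2, "kiss": 3}


def build_rows(video_names):
    """Yield (video_name, label) pairs, skipping names with unknown prefixes."""
    for name in sorted(video_names):
        prefix = name.split("_")[0]
        if prefix in LABELS:
            yield name, LABELS[prefix]


def write_mapping(video_names, out_file):
    """Write a header row followed by one row per labeled video."""
    writer = csv.writer(out_file)
    writer.writerow(["video", "label"])   # hypothetical column names
    writer.writerows(build_rows(video_names))


# Write to an in-memory buffer here; a real script would open a file instead.
buffer = io.StringIO()
write_mapping(["kiss_0003.avi", "handShake_0001.avi", "negative_0002.avi"], buffer)
print(buffer.getvalue())
```

Clips whose prefix is not one of the four interactions are skipped, which matches the dataset description's exclusion of videos without any interaction.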

To train the model with default parameters:

$ python train.py

| Activity | # of training data | # of augmented training data | # of validation data |
|---|---|---|---|
| hand shake | 27 | 315 | 20 |
| high five | 30 | 320 | 20 |
| hug | 30 | 362 | 20 |
| kiss | 28 | 293 | 20 |

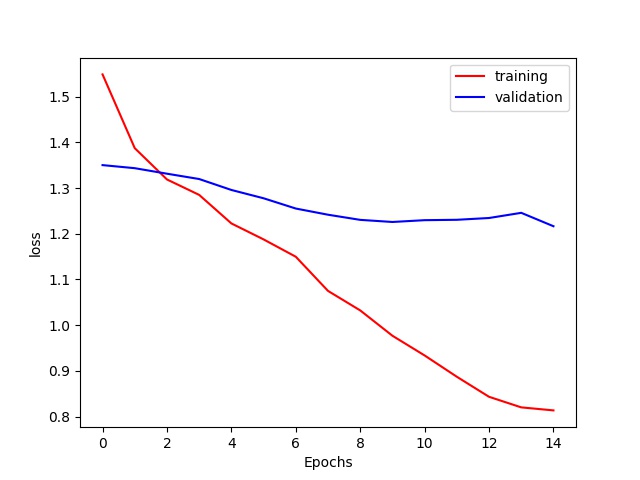

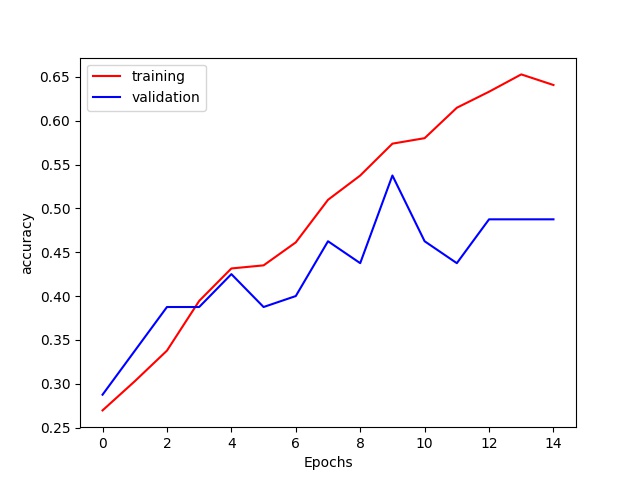

Train the model for 15 epochs.

- C. Vondrick, H. Pirsiavash, and A. Torralba. Anticipating Visual Representations from Unlabeled Video. In CVPR, 2016.

- A. Jain, A. Singh, H. S. Koppula, S. Soh, and A. Saxena. Recurrent Neural Networks for Driver Activity Anticipation via Sensory-Fusion Architecture. In ICRA, 2016.