In this lab, we'll use everything we've learned so far to build a model that can classify a text document as one of many possible classes!

You will be able to:

- Perform classification on a text dataset using a sensible preprocessing, tokenization, and feature engineering scheme

- Use scikit-learn text vectorizers to fit and transform text data into a format to be used in a ML model

For this lab, we'll be working with the classic Newsgroups Dataset, which is available as a training data set in sklearn.datasets. This dataset contains many different articles that fall into 1 of 20 possible classes. Our goal will be to build a classifier that can accurately predict the class of an article based on the features we create from the article itself!

Let's get started. Run the cell below to import everything we'll need for this lab.

import nltk

from nltk.corpus import stopwords

import string

from nltk import word_tokenize, FreqDist

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.metrics import accuracy_score

from sklearn.datasets import fetch_20newsgroups

from sklearn.ensemble import RandomForestClassifier

from sklearn.naive_bayes import MultinomialNB

import pandas as pd

import numpy as np

np.random.seed(0)

Now, we need to fetch our dataset. Run the cell below to download all the newsgroups articles and their corresponding labels. If this is the first time working with this dataset, scikit-learn will need to download all of the articles from an external repository -- the cell below may take a little while to run.

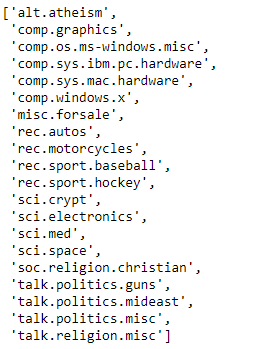

The actual dataset is quite large. To save us from extremely long runtimes, we'll work with only a subset of the classes. Here is a list of all the possible classes:

For this lab, we'll only work with the following five:

- `'alt.atheism'`

- `'comp.windows.x'`

- `'rec.sport.hockey'`

- `'sci.crypt'`

- `'talk.politics.guns'`

In the cell below:

- Create a list called `categories` that contains the five newsgroups classes listed above, as strings

- Get the training set by calling `fetch_20newsgroups()` and passing in the following parameters:
  - `subset='train'`
  - `categories=categories`
  - `remove=('headers', 'footers', 'quotes')` -- this is so that the model can't overfit to metadata included in the articles that sometimes acts as a dead giveaway as to what class the article belongs to

- Get the testing set as well by passing in the same parameters, with the exception of `subset='test'`
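As a rough sketch of the calls described above: the helper name `load_split` is hypothetical and only wraps `fetch_20newsgroups()` so that nothing is downloaded until you call it. The first call needs network access.

```python
from sklearn.datasets import fetch_20newsgroups

# The five newsgroups used in this lab
categories = [
    'alt.atheism',
    'comp.windows.x',
    'rec.sport.hockey',
    'sci.crypt',
    'talk.politics.guns',
]


def load_split(subset):
    """Hypothetical helper: fetch one split ('train' or 'test') of the five classes."""
    return fetch_20newsgroups(
        subset=subset,
        categories=categories,
        # Strip metadata that could give away the class
        remove=('headers', 'footers', 'quotes'),
    )


# Uncomment to download the data:
# newsgroups_train = load_split('train')
# newsgroups_test = load_split('test')
```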

categories = None

newsgroups_train = None

newsgroups_test = None

Great! Let's break apart the data and the labels, and then inspect the class names to see what the actual newsgroups are.

In the cell below:

- Grab the data from `newsgroups_train.data` and store it in the appropriate variable

- Grab the labels from `newsgroups_train.target` and store them in the appropriate variable

- Grab the label names from `newsgroups_train.target_names` and store them in the appropriate variable

- Display `label_names` so that we can see the different classes of articles that we're working with, and confirm that we grabbed the right ones

data = None

target = None

label_names = None

label_names

Finally, let's check the shape of data to see what our data looks like. We can do this by checking the .shape attribute of newsgroups_train.filenames.

Do this now in the cell below.

# Your code here

(2814,)

Our dataset contains 2,814 different articles spread across the five classes we chose.

Now that we have our data, the fun part begins. We'll need to begin by preprocessing and cleaning our text data. As you've seen throughout this section, preprocessing text data is a bit more challenging than working with more traditional data types because there's no clear-cut answer for exactly what sort of preprocessing and cleaning we need to do. Before we can begin cleaning and preprocessing our text data, we need to make some decisions about things such as:

- Do we remove stop words or not?

- Do we stem or lemmatize our text data, or leave the words as is?

- Is basic tokenization enough, or do we need to support special edge cases through the use of regex?

- Do we use the entire vocabulary, or just limit the model to a subset of the most frequently used words? If so, how many?

- Do we engineer other features, such as bigrams, or POS tags, or Mutual Information Scores?

- What sort of vectorization should we use in our model? Boolean Vectorization? Count Vectorization? TF-IDF? More advanced vectorization strategies such as Word2Vec?

These are all questions that we'll need to think about pretty much anytime we begin working with text data.

Let's get right into it. We'll start by getting a list of all of the English stopwords, and concatenating them with a list of all the punctuation.

In the cell below:

- Get all the English stopwords from `nltk`

- Get all of the punctuation from `string.punctuation`, and convert it to a list

- Add the two lists together. Name the result `stopwords_list`

- Create another list containing various types of empty strings and ellipses, such as `["''", '""', '...', '``']`. Add this to our `stopwords_list`, so that we won't have tokens that are only empty quotes and such
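The steps above could be sketched as follows. To keep the example runnable without downloading the NLTK stopwords corpus, it assumes a tiny hand-picked `sample_stopwords` list as a stand-in for `stopwords.words('english')`; in the lab you would use the real NLTK list.

```python
import string

# Assumption: a small stand-in for nltk.corpus.stopwords.words('english'),
# which requires the NLTK 'stopwords' corpus to be downloaded first.
sample_stopwords = ['the', 'a', 'an', 'and', 'is', 'of', 'to', 'in']

# string.punctuation is one string, so convert it to a list of characters
stopwords_list = sample_stopwords + list(string.punctuation)

# Tokens made up only of quotes or ellipses
stopwords_list += ["''", '""', '...', '``']

print(stopwords_list[:10])
```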

stopwords_list = None

Great! We'll leave these alone for now, until we're ready to remove stop words after the tokenization step.

Next, let's try tokenizing our dataset. In order to save ourselves some time, we'll write a function to clean a single article, and then use Python's built-in map() function to apply it to every article in the dataset in one pass.

In the cell below, complete the process_article() function. This function should:

- Take in one parameter, `article`

- Tokenize the article using the appropriate function from `nltk`

- Lowercase every token, remove any stopwords found in `stopwords_list` from the tokenized article, and return the results
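Here is a minimal sketch of those three steps. It swaps in a hypothetical regex tokenizer, `simple_tokenize`, for NLTK's `word_tokenize` (which needs extra tokenizer data downloaded) and uses a shortened stand-in stop word list, so the exact tokens will differ from what NLTK produces.

```python
import re
import string

# Assumption: shortened stand-in for the lab's stopwords_list
stopwords_list = ['the', 'a', 'is', 'of'] + list(string.punctuation)


def simple_tokenize(text):
    """Hypothetical stand-in for nltk.word_tokenize: runs of word characters or single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def process_article(article):
    # Tokenize, lowercase each token, then drop anything in the stop word list
    tokens = simple_tokenize(article)
    return [t.lower() for t in tokens if t.lower() not in stopwords_list]


print(process_article("The puck is in the net!"))
# ['puck', 'in', 'net']
```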

def process_article(article):

    pass

Now that we have this function, let's go ahead and preprocess our data, and then move into exploring our dataset.

In the cell below:

- Use Python's `map()` function and pass in two parameters: the `process_article` function and the `data`. Make sure to wrap the whole map statement in a `list()`.

Note: Running this cell may take a minute or two!
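To illustrate why the `list()` wrapper matters, here is a small sketch with toy data. The `process_article` below is a simplified whitespace-splitting placeholder, not the function from this lab.

```python
def process_article(article):
    # Assumption: simplified cleaner that lowercases and splits on whitespace
    return article.lower().split()


data = ["Hockey season starts", "Encryption keys leaked"]

lazy_result = map(process_article, data)  # a lazy iterator; nothing has been processed yet
processed_data = list(lazy_result)        # consuming it runs the function on every article

print(processed_data)
```

Without `list()`, `processed_data` would be a one-shot iterator that cannot be indexed or reused.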

processed_data = None

Great. Now, let's inspect the first article in processed_data to see how it looks.

Do this now in the cell below.

processed_data[0]

Now, let's move on to exploring the dataset a bit more. Let's start by getting the total vocabulary size of the training dataset. We can do this by creating a set object and then using its .update() method to iteratively add each article. Since it's a set, it will only contain unique words, with no duplicates.

In the cell below:

- Create a `set()` object called `total_vocab`

- Iterate through each tokenized article in `processed_data` and add it to the set using the set's `.update()` method

- Once all articles have been added, get the total number of unique words in our training set by taking the length of the set
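The same pattern on a toy `processed_data` (two made-up tokenized articles, assumed here for illustration) looks like this:

```python
# Assumption: two tiny tokenized articles standing in for the real processed_data
processed_data = [
    ['hockey', 'season', 'starts'],
    ['encryption', 'keys', 'season'],
]

total_vocab = set()
for article in processed_data:
    # update() adds each token individually; duplicates are ignored
    total_vocab.update(article)

print(len(total_vocab))
# 5
```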

total_vocab = None

Great -- our processed dataset contains 46,990 unique words!

Next, let's create a frequency distribution to see which words are used the most!

In order to do this, we'll need to concatenate every article into a single list, and then pass this list to FreqDist().

In the cell below:

- Create an empty list called `articles_concat`

- Iterate through `processed_data` and add every article it contains to `articles_concat`

- Pass `articles_concat` as input to `FreqDist()`

- Display the top 200 most used words
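A sketch of these steps on toy data follows. It uses `collections.Counter` in place of NLTK's `FreqDist` so that it runs without NLTK installed; `FreqDist` is built on `Counter` and offers the same `most_common()` method, so the lab version should look nearly identical.

```python
from collections import Counter

# Assumption: tiny tokenized articles standing in for the real processed_data
processed_data = [
    ['hockey', 'season', 'hockey'],
    ['keys', 'season', 'hockey'],
]

articles_concat = []
for article in processed_data:
    # += extends the flat list with the article's tokens
    articles_concat += article

# Counter is a stand-in for nltk.FreqDist here
articles_freqdist = Counter(articles_concat)

print(articles_freqdist.most_common(2))
# [('hockey', 3), ('season', 2)]
```

In the lab itself, `most_common(200)` would give the top 200 words.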

articles_concat = None

articles_freqdist = None

At first glance, none of these words seem very informative -- for most of the words represented here, it would be tough to guess if a given word is used equally among all five classes, or is disproportionately represented among a single class. This makes sense, because this frequency distribution represents all the classes combined. These words are probably the least important, as they are most likely used across multiple classes and therefore give our model little actual signal as to what class an article belongs to. Instead, we probably want to focus on words that appear heavily in articles from a given class, but rarely appear in articles from other classes. You may recall from previous lessons that this is exactly where TF-IDF Vectorization really shines!

Although NLTK does provide functionality for vectorizing text documents with TF-IDF, we'll make use of scikit-learn's TF-IDF vectorizer, because we already have experience with it, and because it's a bit easier to use, especially when the models we'll be feeding the vectorized data into are from scikit-learn, meaning that we don't have to worry about doing any extra processing to ensure they play nicely together.

Recall that in order to use scikit-learn's TfidfVectorizer(), we need to pass in the data as raw text documents -- the TfidfVectorizer() handles the count vectorization process on its own, and then fits and transforms the data into TF-IDF format.

This means that we need to:

- Import `TfidfVectorizer` from `sklearn.feature_extraction.text` and instantiate `TfidfVectorizer()`

- Call the vectorizer object's `.fit_transform()` method and pass in our `data` as input. Store the results in `tf_idf_data_train`

- Also create a vectorized version of our testing data, which can be found in `newsgroups_test.data`. Store the results in `tf_idf_data_test`.

NOTE: When transforming the test data, use the .transform() method, not the .fit_transform() method, as the vectorizer has already been fit to the training data.
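The fit-on-train, transform-on-test pattern can be sketched on a few made-up sentences. The documents and the shortened variable names below are illustrative assumptions, not the lab's data.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Assumption: toy documents standing in for the newsgroups articles
train_docs = ["the goalie stopped the puck", "the cipher uses a secret key"]
test_docs = ["a new goalie and a new cipher"]

vectorizer = TfidfVectorizer()

# fit_transform() learns the vocabulary and IDF weights from the training text
tf_idf_train = vectorizer.fit_transform(train_docs)

# transform() reuses that vocabulary; words unseen in training are ignored
tf_idf_test = vectorizer.transform(test_docs)

print(tf_idf_train.shape, tf_idf_test.shape)
```

Both matrices end up with the same number of columns, which is what lets a model trained on one make predictions on the other.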

# Import TfidfVectorizer

vectorizer = None

tf_idf_data_train = None

tf_idf_data_test = None

Great! We've now preprocessed and explored our dataset. Let's take a second to see what our data looks like in vectorized form.

In the cell below, get the shape of tf_idf_data_train.

# Your code here

(2814, 36622)

Our vectorized data contains 2,814 articles, with 36,622 unique words in the vocabulary. However, the vast majority of these columns for any given article will be zero, since every article only contains a small subset of the total vocabulary. Recall that vectors mostly filled with zeros are referred to as Sparse Vectors. These are extremely common when working with text data.

Let's check out the average number of non-zero columns in the vectors. Run the cell below to calculate this average.

non_zero_cols = tf_idf_data_train.nnz / float(tf_idf_data_train.shape[0])

print("Average Number of Non-Zero Elements in Vectorized Articles: {}".format(non_zero_cols))

percent_sparse = 1 - (non_zero_cols / float(tf_idf_data_train.shape[1]))

print('Percentage of columns containing 0: {}'.format(percent_sparse))

As we can see from the output above, the average vectorized article contains 107 non-zero columns. This means that 99.7% of each vector is actually zeroes! This is one reason why it's best not to create your own vectorizers, and rely on professional packages such as scikit-learn and NLTK instead -- they contain many speed and memory optimizations specifically for dealing with sparse vectors. This way, we aren't wasting a giant chunk of memory on a vectorized dataset that only has valid information in 0.3% of it.
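The 99.7% figure can be checked by hand from the numbers quoted above. This sketch uses the rounded average of 107 non-zero columns, so the result is approximate.

```python
n_columns = 36622     # vocabulary size reported by the vectorizer
avg_non_zero = 107    # rounded average of non-zero columns per article

fraction_non_zero = avg_non_zero / n_columns
fraction_zero = 1 - fraction_non_zero

print("{:.1%} non-zero, {:.1%} zero".format(fraction_non_zero, fraction_zero))
# 0.3% non-zero, 99.7% zero
```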

Now that we've vectorized our dataset, let's create some models and fit them to our vectorized training data.

In the cell below:

- Instantiate `MultinomialNB()` and `RandomForestClassifier()`. For random forest, set `n_estimators` to `100`. Don't worry about tweaking any of the other parameters

- Fit each to our vectorized training data

- Create predictions for our training and test sets

- Calculate the `accuracy_score()` for both the training and test sets (you'll find our training labels stored within the variable `target`, and the test labels stored within `newsgroups_test.target`)
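The fit, predict, and score workflow could look roughly like this on a toy two-class problem. The documents, labels, and the `scores` dictionary are assumptions made for illustration, and the accuracies on so little data say nothing about what to expect on the newsgroups articles.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import accuracy_score
from sklearn.naive_bayes import MultinomialNB

# Assumption: toy documents and labels (0 = hockey, 1 = crypto)
train_docs = [
    "the goalie made a great save",
    "the team scored in overtime",
    "the cipher hides the secret key",
    "encryption protects the private key",
]
train_labels = [0, 0, 1, 1]
test_docs = ["the goalie scored", "a secret cipher"]
test_labels = [0, 1]

vectorizer = TfidfVectorizer()
X_train = vectorizer.fit_transform(train_docs)
X_test = vectorizer.transform(test_docs)

models = {
    "nb": MultinomialNB(),
    "rf": RandomForestClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, train_labels)
    train_acc = accuracy_score(train_labels, model.predict(X_train))
    test_acc = accuracy_score(test_labels, model.predict(X_test))
    scores[name] = (train_acc, test_acc)
    print("{}: train={:.2f} test={:.2f}".format(name, train_acc, test_acc))
```

Comparing the training and test accuracy for each model is what reveals overfitting: a large gap suggests the model memorized the training articles.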

nb_classifier = None

rf_classifier = None

nb_train_preds = None

nb_test_preds = None

rf_train_preds = None

rf_test_preds = None

nb_train_score = None

nb_test_score = None

rf_train_score = None

rf_test_score = None

print("Multinomial Naive Bayes")

print("Training Accuracy: {:.4} \t\t Testing Accuracy: {:.4}".format(nb_train_score, nb_test_score))

print("")

print('-'*70)

print("")

print('Random Forest')

print("Training Accuracy: {:.4} \t\t Testing Accuracy: {:.4}".format(rf_train_score, rf_test_score))

Question: Interpret the results seen above. How well did the models do? How do they compare to random guessing? How would you describe the quality of the model fit?

Write your answer below:

# Your answer here

In this lab, we used our NLP skills to clean, preprocess, explore, and fit models to text data for classification. This wasn't easy -- great job!!