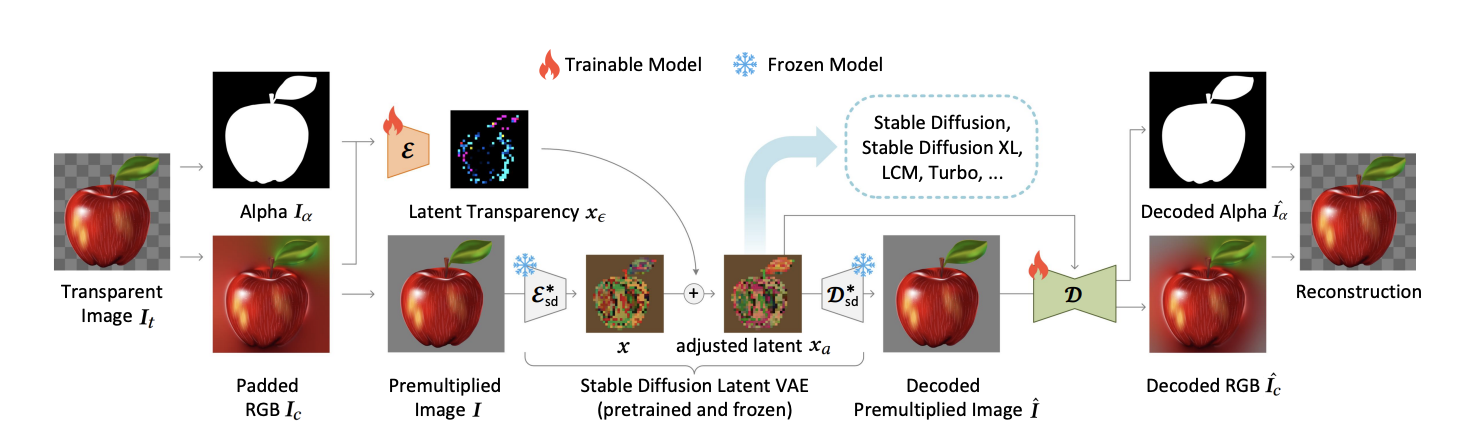

Transparent Image Layer Diffusion using Latent Transparency for refiners.

Note: This repo only implements layer diffusion for foreground generation, based on the "Transparent Image Layer Diffusion using Latent Transparency" paper. Click here for the official implementation.

Clone this repo

git clone https://github.com/chloedia/layerdiffuse.git

cd layerdiffuse

Set up the environment using rye:

rye sync --all-features

source .venv/bin/activate

You are now ready to go! Start by generating a cute panda wizard:

python3 src/layerdiffuse/inference.py --checkpoint_path "checkpoints/" --prompt "a futuristic magical panda with a purple glow, cyberpunk" --save_path "outputs/"

Note: The first run takes longer because it downloads all the necessary weight files. To use a different SDXL checkpoint, convert its weight files with the refiners library.
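To generate several images in one go, the inference command above can be wrapped in a small script. The following is a minimal sketch, not part of this repo: it only reuses the script path and the three flags shown above (`--checkpoint_path`, `--prompt`, `--save_path`). The helper names `build_inference_command` and `run_batch`, the per-prompt `run_00`, `run_01`, ... sub-folders, and the second prompt are hypothetical choices made for illustration.

```python
import shlex
import subprocess
import sys
from pathlib import Path

# Script path and flags are copied from the inference command in this README.
INFERENCE_SCRIPT = "src/layerdiffuse/inference.py"


def build_inference_command(prompt, checkpoint_path="checkpoints/", save_path="outputs/"):
    """Return the argument list for a single inference run."""
    return [
        sys.executable,  # the interpreter of the currently active environment
        INFERENCE_SCRIPT,
        "--checkpoint_path", checkpoint_path,
        "--prompt", prompt,
        "--save_path", save_path,
    ]


def run_batch(prompts, save_root="outputs", dry_run=True):
    """Build one command per prompt and run it unless dry_run is set.

    Assumption: each prompt gets its own sub-folder (run_00, run_01, ...)
    so that results from different prompts stay separate. This layout is
    hypothetical and not something the repo prescribes.
    """
    commands = []
    for index, prompt in enumerate(prompts):
        save_path = Path(save_root) / f"run_{index:02d}"
        command = build_inference_command(prompt, save_path=f"{save_path.as_posix()}/")
        commands.append(command)
        if dry_run:
            # Only print the shell-quoted command, nothing is executed.
            print(shlex.join(command))
        else:
            save_path.mkdir(parents=True, exist_ok=True)
            subprocess.run(command, check=True)
    return commands


prompts = [
    "a futuristic magical panda with a purple glow, cyberpunk",
    "a cute panda wizard holding a glowing staff",  # hypothetical extra prompt
]
commands = run_batch(prompts, dry_run=True)
```

With `dry_run=True` the script only prints the commands, which is a cheap way to check them before launching the actual generation with `dry_run=False` from the repo root.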

Install your own SDXL weight files using the conversion script of refiners (see the refiners docs).

Check the refiners docs, especially the part on adding LoRAs or adapters on top of layer diffuse, to create assets that match a specific style for any of your creations.

Add Style Aligned to the generated content, to align a batch to the same style with or without a reference image;

Add post-processing for higher detail quality (hands).

Don't hesitate to contribute! 🔆

Thanks to @limiteinductive for his help with this implementation!