This project provides an example of an Apache Kafka data processing application. The build and deployment of the application is fully automated using AWS CDK.

The project consists of three main parts:

- AWS infrastructure and deployment definition - AWS CDK scripts written in TypeScript.

- AWS Lambda function - sends messages to an Apache Kafka topic using the KafkaJS library. It is implemented in TypeScript.

- Consumer application - a Spring Boot Java application containing the main business logic of the data processing pipeline. It consumes messages from the Apache Kafka topic, performs simple validation and processing, and stores the results in an Amazon DynamoDB table.
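As a rough illustration of the Lambda side, the sketch below shows how an incoming event could be mapped to the record object that the KafkaJS `producer.send` call accepts. It is only a sketch under assumptions: the topic name `transactions`, the `toProducerRecord` helper, and the choice of `accountId` as the message key are hypothetical and not taken from the project code. The record type is declared locally so that the snippet has no dependencies.

```typescript
// Event shape taken from the sample payload used later in this README.
interface TransactionEvent {
  accountId: string;
  value: number;
}

// Local structural copy of the argument passed to KafkaJS `producer.send`
// (a topic plus a list of messages). Declared here to keep the sketch dependency-free.
interface ProducerRecordSketch {
  topic: string;
  messages: { key: string; value: string }[];
}

// Hypothetical helper: keying by accountId is an assumption. It would keep all
// messages for one account on the same partition, preserving their order.
function toProducerRecord(topic: string, event: TransactionEvent): ProducerRecordSketch {
  return {
    topic,
    messages: [{ key: event.accountId, value: JSON.stringify(event) }],
  };
}

// "transactions" is a placeholder topic name, not the one used by the project.
const record = toProducerRecord("transactions", { accountId: "account_123", value: 456 });
```

In a real handler, an object of this shape would be handed to a connected KafkaJS producer.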

The example showcases two ways of packaging and deploying business logic using high-level AWS CDK constructs:

- Using a Dockerfile and AWS CDK ECS ContainerImage to deploy the Java application to AWS Fargate.

- Using the AWS CDK NodejsFunction construct to deploy the TypeScript AWS Lambda code.

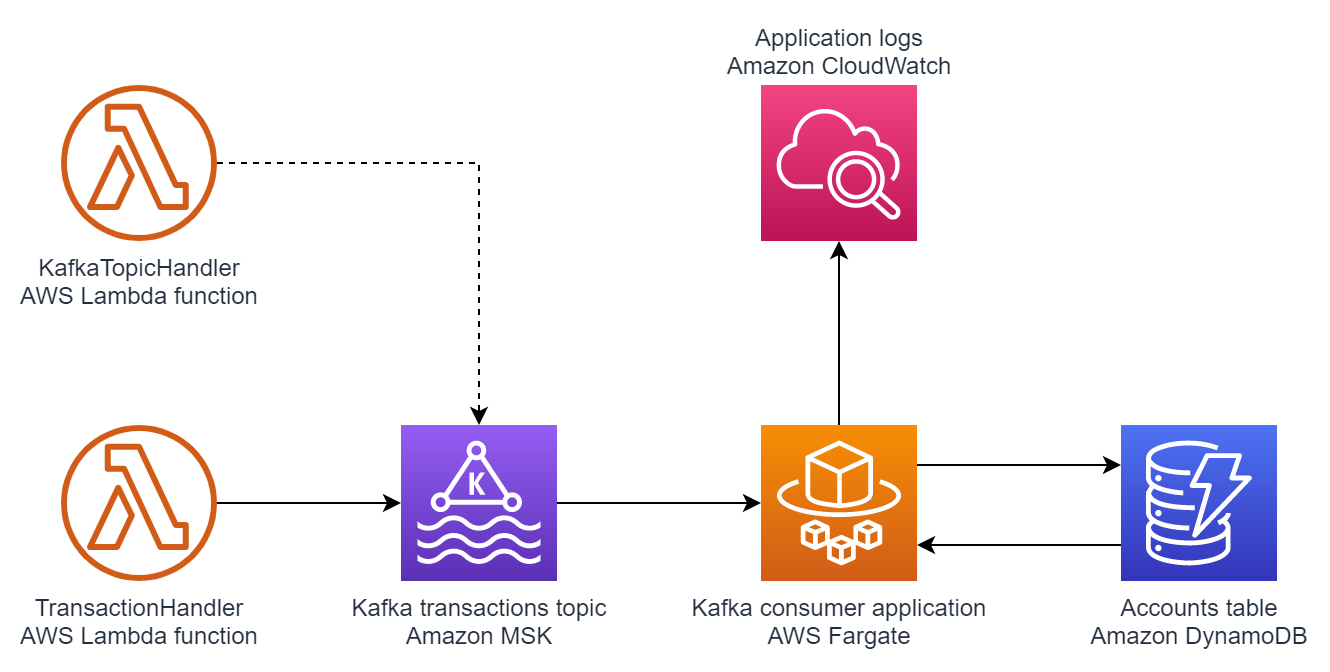

Triggering the TransactionHandler Lambda function publishes messages to an Apache Kafka topic. The consumer application, packaged in a container and deployed to ECS Fargate, consumes messages from the Kafka topic, processes them, and stores the results in an Amazon DynamoDB table. The KafkaTopicHandler Lambda function is called once during deployment to create the Kafka topic. Both the Lambda function and the consumer application publish logs to Amazon CloudWatch.
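The validate, process, and store flow of the consumer can be sketched as follows. The real consumer is a Spring Boot Java application; this TypeScript sketch only illustrates the idea and makes several assumptions: the validation rules, the rule that each `value` is added to the account balance, and the in-memory `Map` standing in for the DynamoDB table are all hypothetical.

```typescript
// Message shape taken from the sample payload used in this README.
interface TransactionMessage {
  accountId: string;
  value: number;
}

// In-memory stand-in for the DynamoDB Accounts table (account id -> balance).
type AccountsTable = Map<string, number>;

// Assumed validation rules: valid JSON object, non-empty string accountId,
// finite numeric value. The actual consumer may check different things.
function parseTransaction(raw: string): TransactionMessage | undefined {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return undefined; // not valid JSON
  }
  if (typeof parsed !== "object" || parsed === null) {
    return undefined;
  }
  const { accountId, value } = parsed as Record<string, unknown>;
  if (typeof accountId !== "string" || accountId.length === 0) {
    return undefined;
  }
  if (typeof value !== "number" || !Number.isFinite(value)) {
    return undefined;
  }
  return { accountId, value };
}

// Assumed processing rule: add the transaction value to the account balance.
// Returns false when the message is rejected by validation.
function processMessage(table: AccountsTable, raw: string): boolean {
  const tx = parseTransaction(raw);
  if (tx === undefined) {
    return false;
  }
  const current = table.get(tx.accountId) ?? 0;
  table.set(tx.accountId, current + tx.value);
  return true;
}
```

Invalid messages are skipped rather than thrown on, so a single malformed record would not stop the consumer in this sketch.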

- `amazon-msk-java-app-cdk/lib` - directory containing all AWS CDK stacks

- `amazon-msk-java-app-cdk/bin` - directory containing AWS CDK app definition

- `amazon-msk-java-app-cdk/lambda` - directory containing code of `TransactionHandler` AWS Lambda function as well as code of Custom Resource handler responsible for creating Kafka topic during deployment

- `consumer` - directory containing code of Kafka consumer Spring Boot Java application

- `consumer/docker/Dockerfile` - definition of Docker image used for AWS Fargate container deployment

- `doc` - directory containing architecture diagrams

- `scripts` - directory containing deployment scripts

- An active AWS account

- Java SE Development Kit (JDK) 11, installed

- Apache Maven, installed

- AWS Cloud Development Kit (AWS CDK), installed

- AWS Command Line Interface (AWS CLI) version 2, installed

- Docker, installed

- AWS CDK – The AWS Cloud Development Kit (AWS CDK) is a software development framework for defining your cloud infrastructure and resources by using programming languages such as TypeScript, JavaScript, Python, Java, and C#/.Net.

- Amazon MSK - Amazon Managed Streaming for Apache Kafka (Amazon MSK) is a fully managed service that makes it easy for you to build and run applications that use Apache Kafka to process streaming data.

- AWS Fargate – AWS Fargate is a serverless compute engine for containers. Fargate removes the need to provision and manage servers, and lets you focus on developing your applications.

- AWS Lambda - AWS Lambda is a serverless compute service that lets you run code without provisioning or managing servers, creating workload-aware cluster scaling logic, maintaining event integrations, or managing runtimes.

- Amazon DynamoDB - Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale.

- Start the Docker daemon on your local system. The AWS CDK uses Docker to build the image that is used in the AWS Fargate task. You must run Docker before you proceed to the next step.

- Set your AWS profile with `export AWS_PROFILE=<REPLACE WITH YOUR AWS PROFILE NAME>`, or alternatively follow the instructions in the AWS CDK documentation.

- (First-time AWS CDK users only) Follow the instructions on the AWS CDK documentation page to bootstrap AWS CDK.

- Change to the scripts directory: `cd scripts`

- Run the `deploy.sh` script.

- Trigger the Lambda function to send a message to the Kafka topic. You can use the command below, or alternatively trigger the Lambda function from the AWS console. Feel free to change the values of the `accountId` and `value` fields in the payload JSON and send multiple messages with different payloads to experiment with the application. `aws lambda invoke --cli-binary-format raw-in-base64-out --function-name TransactionHandler --log-type Tail --payload '{ "accountId": "account_123", "value": 456}' /dev/stdout --query 'LogResult' --output text | base64 -d`

- To view the results in the DynamoDB table, run the command below. Alternatively, navigate to the Amazon DynamoDB console and select the `Accounts` table. `aws dynamodb scan --table-name Accounts --query "Items[*].[id.S,Balance.N]" --output text`

- You can also view CloudWatch logs in the AWS console or by running the `aws logs tail` command with the specified CloudWatch Logs group.
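To experiment with several payloads, the invoke command from the steps above can be generated for each payload. The following is a minimal sketch: the `shellQuote` and `buildInvokeCommand` helpers and the sample payload values are made up for illustration, while the command flags are copied from the invoke command shown in this README.

```typescript
interface TransactionPayload {
  accountId: string;
  value: number;
}

// Wrap text in single quotes for a POSIX shell, escaping embedded single quotes.
function shellQuote(text: string): string {
  return "'" + text.replace(/'/g, "'\\''") + "'";
}

// Build the same `aws lambda invoke` command line as in the steps above,
// with the payload replaced by the given object.
function buildInvokeCommand(payload: TransactionPayload): string {
  return [
    "aws lambda invoke",
    "--cli-binary-format raw-in-base64-out",
    "--function-name TransactionHandler",
    "--log-type Tail",
    `--payload ${shellQuote(JSON.stringify(payload))}`,
    "/dev/stdout",
    "--query 'LogResult'",
    "--output text",
    "| base64 -d",
  ].join(" ");
}

// Hypothetical sample payloads; change them freely.
const samplePayloads: TransactionPayload[] = [
  { accountId: "account_123", value: 456 },
  { accountId: "account_123", value: 44 },
  { accountId: "account_789", value: 10 },
];

const commands: string[] = samplePayloads.map(buildInvokeCommand);
```

Each entry in `commands` is a complete command line that can be pasted into a shell after deployment.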

Follow the AWS CDK instructions to remove the AWS CDK stacks from your account. You can also use `scripts/destroy.sh`.

Be sure to also manually remove the Amazon DynamoDB table, clean up Amazon CloudWatch logs, and remove Amazon ECR images to avoid incurring additional AWS infrastructure costs.