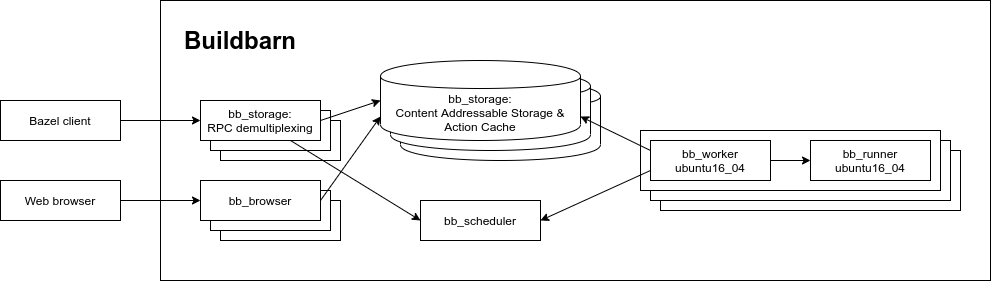

This repository contains a set of scripts and configuration files that can be used to deploy Buildbarn on various platforms. Buildbarn is flexible: it can be used both for single-node remote caching setups and for large-scale remote execution setups. Unless noted otherwise, the configurations in this repository all assume the following setup:

- Sharded storage, using the Buildbarn storage daemon. To apply the sharding to client RPCs, a separate set of stateless frontend servers is used to fan out requests.

- Remote execution of build actions, using container images based on Google RBE's official Ubuntu 16.04 image.

- An installation of the Buildbarn Browser.

- An installation of the Buildbarn Event Service.

Below is a diagram of what this Buildbarn setup looks like. In this diagram, the arrows represent the direction in which network connections are established.

This example showcases a very simple build and test with remote execution, with Buildbarn deployed through docker-compose. We will be compiling examples from the abseil-hello project using Bazel.

First, clone the repository and start the docker-compose example:

    git clone https://github.com/buildbarn/bb-deployments.git
    cd bb-deployments/docker-compose
    ./run.sh

You may initially see an error message along the lines of:

    worker-ubuntu16-04_1 | xxxx/xx/xx xx:xx:xx rpc error: code = Unavailable desc = Failed to synchronize with scheduler: all SubConns are in TransientFailure, latest connection error: connection error: desc = "transport: Error while dialing dial tcp xxx.xx.x.x:xxxx: connect: connection refused"

This usually happens because the worker container started before the scheduler and therefore cannot connect to it yet. After a second or so, this error message should stop.
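If you script this setup (for example in CI), you may prefer to wait for the deployment to settle rather than watch the logs. The following is a minimal sketch of a generic retry helper in POSIX shell. The name `retry` is hypothetical and not part of this repository.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND...
# Hypothetical helper: run COMMAND until it succeeds, at most ATTEMPTS times,
# sleeping DELAY_SECONDS between attempts. Returns 0 on the first success,
# 1 if every attempt failed.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Only sleep if another attempt will follow.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Example (assumes `nc` is installed; port 8980 is the one used in the
# .bazelrc of this example):
#   retry 30 1 nc -z localhost 8980 && echo "port 8980 is accepting connections"
```

An open port is only a rough readiness signal: it does not prove that the worker has synchronized with the scheduler, so the error message above may still appear briefly in the logs.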

Bazel can perform remote builds against these deployments by adding the official Bazel toolchain definitions for the RBE container images to the WORKSPACE file of your project. It is also possible to derive your own configuration files using rbe_autoconfig. More information can be found by reading the documentation here.

For this example, we have already provided the necessary WORKSPACE setup, but we still need to create the .bazelrc. In a terminal separate from the one running your docker-compose deployment, create your .bazelrc in the project root:

    cd bb-deployments/
    cp bazelrc .bazelrc
    cat >> .bazelrc << EOF
    build:mycluster --remote_executor=grpc://localhost:8980
    build:mycluster --remote_instance_name=remote-execution
    build:mycluster-ubuntu16-04 --config=mycluster
    build:mycluster-ubuntu16-04 --config=rbe-ubuntu16-04
    build:mycluster-ubuntu16-04 --jobs=64
    EOF
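Because `cat >>` appends, running the snippet above twice leaves duplicate lines in `.bazelrc`. As a sketch of one way to make the step repeatable, the hypothetical helper below (not part of this repository) appends its standard input only if a given marker line is not already in the file.

```shell
# append_once FILE MARKER_LINE
# Hypothetical helper: append stdin to FILE unless FILE already contains a
# line that is exactly MARKER_LINE.
append_once() {
  file=$1
  marker=$2
  # -x matches whole lines, -F treats the marker as a fixed string.
  if [ -f "$file" ] && grep -qxF -- "$marker" "$file"; then
    return 0
  fi
  cat >> "$file"
}

# Example, using the first line of the block above as the marker:
#   append_once .bazelrc 'build:mycluster --remote_executor=grpc://localhost:8980' << EOF
#   build:mycluster --remote_executor=grpc://localhost:8980
#   build:mycluster --remote_instance_name=remote-execution
#   EOF
```

The marker is compared as a whole line, so a commented-out or edited copy of the line does not count as already present.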

Now try a build:

    bazel build --config=mycluster-ubuntu16-04 @abseil-hello//:hello_main

The output should look something like:

    INFO: 30 processes: 30 remote.

You can check that the binary was built successfully by running it:

    bazel run --config=mycluster-ubuntu16-04 @abseil-hello//:hello_main

Equally, you can try to execute a test remotely:

    bazel test --config=mycluster-ubuntu16-04 @abseil-hello//:hello_test

This will give you output containing something like:

    INFO: 29 processes: 29 remote.
    //:hello_test PASSED in 0.1s
    Executed 1 out of 1 test: 1 test passes.

Next, we will try out the remote caching capability. Clean your local build cache and then rerun the build:

    bazel clean
    bazel build --config=mycluster-ubuntu16-04 @abseil-hello//:hello_main

The output should indicate that the actions were served from the remote cache instead of being executed on a worker.
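To check this from a script, one option is to inspect Bazel's process summary line. The sketch below assumes that cached actions are reported with the words "remote cache hit" on that line. That wording is not shown in this document and may vary between Bazel versions, so treat the pattern as an assumption. The function name `cache_hits` is hypothetical.

```shell
# cache_hits LINE
# Hypothetical helper: print the number of actions reported as
# "remote cache hit" in a Bazel process summary line, or 0 if the line
# reports none. The "remote cache hit" wording is an assumption.
cache_hits() {
  hits=$(printf '%s\n' "$1" |
    sed -n 's/.*[^0-9]\([0-9][0-9]*\) remote cache hit.*/\1/p')
  printf '%s\n' "${hits:-0}"
}

# Example:
#   cache_hits "INFO: 30 processes: 30 remote cache hit."   # prints 30
#   cache_hits "INFO: 30 processes: 30 remote."             # prints 0
```

A non-zero result after `bazel clean` suggests that results came from the Buildbarn storage rather than from a fresh execution on a worker.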