| | Name | Email | GitHub |
|---|---|---|---|
| Team Lead | Xi Hu | chris_huxi@163.com | Xi |
| | Yuanhui Li | viglyh@163.com | Yuanhui |
| | Zyuanhua | zzyuanhua@163.com | Zyuanhua |
| | Maharshi Patel | patelmaharshi94@gmail.com | Maharshi |
| | Aniket Satbhai | anks@tuta.io | Aniket |

```bash
cd ros
catkin_make
source devel/setup.sh
roslaunch launch/styx.launch
```

Wait until the following log message appears:

```
[INFO] [1564778567.666529]: loaded ssd detector!
```

Then open the simulator, check "Camera" and uncheck "Manual". The car will drive as shown in the video here.

Optional: to see what the camera output looks like, run the following in another terminal:

```bash
rosrun rviz rviz
```

Then add the topic image_color/raw.

Optional: to run the second test lot, modify ros/src/waypoint_loader/launch/waypoint_loader.launch as follows:

```xml
<!-- <param name="path" value="$(find styx)../../../data/wp_yaw_const.csv" /> -->
<param name="path" value="$(find styx)../../../data/churchlot_with_cars.csv"/>
<param name="velocity" value="5" />
```

Then launch styx.launch and run the simulator. The car will drive as shown in the video here.
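For reference, here is a minimal sketch of how such a CSV could be turned into waypoints with a constant target speed. It assumes, as in the Udacity starter waypoint loader, that each row holds x, y, z and yaw with no header line and that the velocity parameter is given in km/h. `load_waypoints` and `kmph_to_mps` are hypothetical names, not the node's API.

```python
import csv
import io

def kmph_to_mps(velocity_kmph):
    """Convert a speed from km/h to m/s."""
    return velocity_kmph * 1000.0 / 3600.0

def load_waypoints(csv_file, velocity_kmph):
    """Read x, y, z, yaw rows and attach a constant target speed in m/s.

    Assumption (hypothetical helper): the file has no header line and each
    row starts with x, y, z, yaw.
    """
    target_mps = kmph_to_mps(velocity_kmph)
    waypoints = []
    for row in csv.reader(csv_file):
        if not row:
            continue  # skip blank lines
        x, y, z, yaw = (float(value) for value in row[:4])
        waypoints.append({"x": x, "y": y, "z": z, "yaw": yaw, "v": target_mps})
    return waypoints

# Usage with an in-memory file standing in for churchlot_with_cars.csv.
sample = io.StringIO("0.0,0.0,0.0,0.0\n1.0,0.5,0.0,0.1\n")
wps = load_waypoints(sample, 5)
print(len(wps), round(wps[0]["v"], 3))  # 2 1.389
```

Under this assumption, `value="5"` corresponds to roughly 1.39 m/s, which helps explain the slow driving speed in the parking lot.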

First download the bag file, then run the following in a terminal:

```bash
cd ros
catkin_make
source devel/setup.sh
roslaunch launch/site.launch
```

Wait until the following log message appears:

```
[INFO] [1564778567.666529]: loaded ssd detector!
```

Then run the following in another terminal:

```bash
rosbag play -l XXX.bag
```

To see the images contained in the bag file, open another terminal and run:

```bash
rosrun rviz rviz
```

Then add the topic image_color/raw. The results for each bag file are shown in these videos:

- just_traffic_light.bag: video
- loop_with_traffic_light.bag: video
- udacity_succesful_light_detection.bag: video

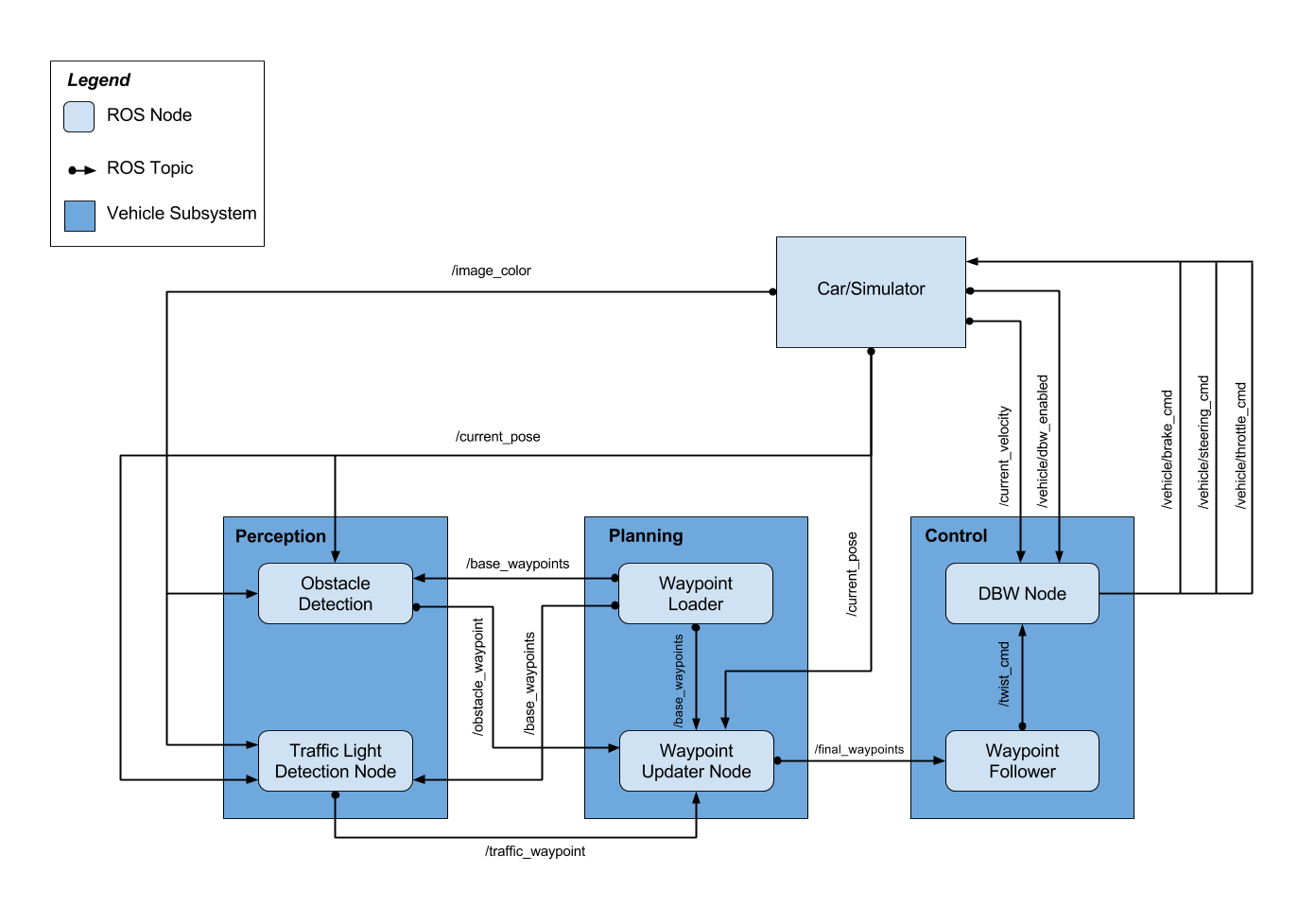

The project has the following architecture:

The Waypoint Updater node updates the target velocity of each waypoint based on traffic light and obstacle detection data.

- It subscribes to the /base_waypoints, /current_pose and /traffic_waypoint topics.
- It publishes a list of waypoints ahead of the car, with their target velocities, to the /final_waypoints topic.
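The velocity update can be sketched as follows. This is a hedged illustration, not the node's actual code. It assumes a hypothetical lookahead of `LOOKAHEAD_WPS` waypoints, a hypothetical `MAX_DECEL` in m/s², and that /traffic_waypoint carries -1 when no red light is ahead. Each target speed is capped at v = sqrt(2·a·d), where d is the path distance to the stop line, so the car can still stop in time.

```python
import math

LOOKAHEAD_WPS = 5   # hypothetical: number of waypoints published ahead of the car
MAX_DECEL = 0.5     # hypothetical: comfortable deceleration in m/s^2

def path_distance(points, i, j):
    """Arc length along the polyline from points[i] to points[j] (i <= j)."""
    total = 0.0
    for k in range(i, j):
        (x1, y1), (x2, y2) = points[k], points[k + 1]
        total += math.hypot(x2 - x1, y2 - y1)
    return total

def final_velocities(points, base_v, closest_idx, stop_idx):
    """Target speeds (m/s) for the waypoints ahead of the car.

    stop_idx == -1 (assumed convention) means no red light ahead, so the
    base speeds are kept; otherwise speeds ramp down to 0 at stop_idx.
    """
    end = min(closest_idx + LOOKAHEAD_WPS, len(points))
    velocities = []
    for i in range(closest_idx, end):
        v = base_v[i]
        if stop_idx >= closest_idx:  # a stop line lies ahead; -1 never passes since closest_idx >= 0
            if i >= stop_idx:
                v = 0.0
            else:
                # Highest speed from which MAX_DECEL still stops the car at stop_idx.
                d = path_distance(points, i, stop_idx)
                v = min(v, math.sqrt(2.0 * MAX_DECEL * d))
        velocities.append(v)
    return velocities

points = [(float(x), 0.0) for x in range(10)]  # straight road, 1 m spacing
base_v = [10.0] * len(points)
print(final_velocities(points, base_v, 0, -1))  # [10.0, 10.0, 10.0, 10.0, 10.0]
print([round(v, 2) for v in final_velocities(points, base_v, 0, 4)])  # [2.0, 1.73, 1.41, 1.0, 0.0]
```

For brevity, this sketch does not wrap around the end of a looping track. A real node would also need to handle that case.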

Subscribed topics:

- /base_waypoints (Msg Type: styx_msgs/Lane): provides the waypoints along the driving path. The Waypoint Loader node publishes the list of waypoints to this topic at startup.
- /current_pose (Msg Type: geometry_msgs/PoseStamped): provides the current position of the vehicle, as published by the car or the simulator.
- /traffic_waypoint (Msg Type: std_msgs/Int32): provides the index of the waypoint at which the car is expected to stop. The Traffic Light Detection node publishes to this topic.

Published topics:

- /final_waypoints (Msg Type: styx_msgs/Lane): the final waypoints are published to this topic, and the vehicle follows them.

The Traffic Light Detection node detects traffic lights and publishes the location of the upcoming one.

- It subscribes to the /base_waypoints, /current_pose, /vehicle/traffic_lights and /image_raw topics.
- It publishes the waypoint index of the upcoming traffic light position to /traffic_waypoint.
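Both the Waypoint Updater and the Traffic Light Detection node need to map a position, such as the car or a traffic light's stop line, to a waypoint index. The following is a minimal sketch of that mapping. It uses a linear nearest-neighbour scan, followed by a dot-product check that makes sure the returned waypoint lies ahead of the position. `closest_waypoint_ahead` is a hypothetical helper, and the code assumes a looping track so that indices wrap.

```python
import math

def closest_waypoint_ahead(points, x, y):
    """Index of the nearest waypoint that is not behind (x, y).

    Hypothetical helper; assumes a closed (looping) track so indices wrap.
    """
    n = len(points)
    # Linear nearest-neighbour scan, O(n).
    idx = min(range(n), key=lambda i: math.hypot(points[i][0] - x, points[i][1] - y))
    cx, cy = points[idx]
    px, py = points[(idx - 1) % n]
    # Project the offset (closest -> position) onto the path direction (previous -> closest).
    dot = (cx - px) * (x - cx) + (cy - py) * (y - cy)
    if dot > 0:  # the position is already past the closest waypoint
        idx = (idx + 1) % n
    return idx

points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
print(closest_waypoint_ahead(points, 0.9, 0.0))  # 1
print(closest_waypoint_ahead(points, 1.2, 0.1))  # 2
```

The linear scan keeps the sketch dependency-free. On a full track with thousands of waypoints, a KD-tree query would be the usual faster choice.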

Subscribed topics:

- /base_waypoints (Msg Type: styx_msgs/Lane): provides the waypoints along the driving path. These are the same waypoints used by the Waypoint Updater node.
- /current_pose (Msg Type: geometry_msgs/PoseStamped): provides the current position of the vehicle, as published by the car or the simulator.
- /image_raw (Msg Type: sensor_msgs/Image): provides raw images from the vehicle's camera, which are used to identify red lights ahead.
- /vehicle/traffic_lights (Msg Type: styx_msgs/TrafficLightArray): used only with the simulator, to test the vehicle path without the classifier. It provides the location of each traffic light in 3D map space together with its current color state, which makes it an accurate ground-truth source for the traffic light classifier.

Published topics:

- /traffic_waypoint (Msg Type: std_msgs/Int32): provides the index of the waypoint at which the car is expected to stop.
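When using the simulator's ground truth from /vehicle/traffic_lights, choosing the value to publish can be sketched as shown below. This sketch rests on several assumptions. It uses the styx_msgs/TrafficLight state constants (RED = 0, YELLOW = 1, GREEN = 2, UNKNOWN = 4). It also assumes that each light's stop line has already been mapped to a waypoint index, and that -1 means "no red light ahead". The function name and the `(index, state)` input format are hypothetical.

```python
# Mirrors the styx_msgs/TrafficLight state constants (assumed values).
RED, YELLOW, GREEN, UNKNOWN = 0, 1, 2, 4

def upcoming_red_stop_index(car_idx, lights, num_waypoints):
    """Stop-line waypoint index of the nearest light ahead if it is red, else -1.

    lights: list of (stop_line_waypoint_index, state) pairs (hypothetical format).
    Distances are counted in waypoints and wrap around a looping track.
    """
    nearest = None  # (gap, stop_idx, state) of the closest light ahead
    for stop_idx, state in lights:
        gap = (stop_idx - car_idx) % num_waypoints  # waypoints from car to stop line
        if nearest is None or gap < nearest[0]:
            nearest = (gap, stop_idx, state)
    if nearest is not None and nearest[2] == RED:
        return nearest[1]
    return -1

lights = [(50, GREEN), (120, RED)]
print(upcoming_red_stop_index(40, lights, 200))  # -1 (the nearest light is green)
print(upcoming_red_stop_index(60, lights, 200))  # 120
```

With the classifier enabled, the state would come from the camera image instead of the ground-truth message. The selection logic stays the same.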

The Drive-By-Wire (DBW) node uses the target velocities that the Waypoint Follower derives from the final waypoints to compute the brake, steering and throttle commands that drive the vehicle.

- It subscribes to the /twist_cmd, /vehicle/dbw_enabled and /current_velocity topics.
- It publishes to the /vehicle/brake_cmd, /vehicle/steering_cmd and /vehicle/throttle_cmd topics.
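The throttle side of a DBW node is commonly driven by a PID controller on the velocity error, that is, target speed minus current speed. The following is a minimal sketch of such a controller. It assumes hypothetical gains, an assumed 50 Hz control loop and a throttle clamped to [0, 1]. `reset()` is meant to be called while dbw_enabled is False, so that the integral term does not wind up while a human is driving. None of these names or values are the project's tuned code.

```python
class PID:
    """Textbook PID controller with output clamping (illustrative, untuned)."""

    def __init__(self, kp, ki, kd, min_out=0.0, max_out=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.min_out, self.max_out = min_out, max_out
        self.reset()

    def reset(self):
        """Clear accumulated state; call while dbw_enabled is False."""
        self.integral = 0.0
        self.last_error = 0.0

    def step(self, error, dt):
        """Return the clamped output for one control period of dt seconds."""
        self.integral += error * dt
        derivative = (error - self.last_error) / dt if dt > 0 else 0.0
        self.last_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.min_out, min(self.max_out, out))

# Hypothetical gains; error = target speed - current speed (m/s), 50 Hz loop (assumed).
throttle_pid = PID(kp=0.3, ki=0.1, kd=0.0)
throttle = throttle_pid.step(10.0 - 8.0, 0.02)
print(round(throttle, 3))  # 0.604
```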

Subscribed topics:

- /twist_cmd (Msg Type: geometry_msgs/TwistStamped): provides the proposed linear and angular velocities. The Waypoint Follower node publishes to this topic.
- /vehicle/dbw_enabled (Msg Type: std_msgs/Bool): indicates whether the car is under DBW or driver control. In the simulator it is always True; on the actual vehicle, the node must check that dbw_enabled is True before driving autonomously.
- /current_velocity (Msg Type: geometry_msgs/TwistStamped): provides the current linear and angular velocities of the vehicle.
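A steering angle can be derived from the proposed linear and angular velocities using a kinematic bicycle model. The turning radius is R = v/ω, the road-wheel angle is atan(L/R) for wheel base L, and this angle is scaled by the steering ratio to get the steering-wheel angle. The following is a sketch under that assumption. `wheel_base`, `steer_ratio` and `max_steer_angle` are illustrative parameters that are not read from this project's launch files.

```python
import math

def steering_angle(linear_v, angular_v, wheel_base, steer_ratio, max_steer_angle):
    """Steering-wheel angle (rad) for a requested speed (m/s) and yaw rate (rad/s)."""
    if abs(linear_v) < 1e-6 or abs(angular_v) < 1e-6:
        return 0.0  # straight ahead, or no meaningful turning radius at standstill
    radius = linear_v / angular_v                 # signed turning radius (m)
    wheel_angle = math.atan(wheel_base / radius)  # road-wheel angle, bicycle model
    angle = wheel_angle * steer_ratio             # road-wheel -> steering-wheel angle
    return max(-max_steer_angle, min(max_steer_angle, angle))

# Illustrative parameters, not read from the project's launch files.
print(steering_angle(10.0, 0.1, wheel_base=2.85, steer_ratio=14.8, max_steer_angle=8.0))
```

The sign of the angle follows the sign of the angular velocity, so left and right turns need no special handling.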

Published topics:

- /vehicle/brake_cmd (Msg Type: dbw_mkz_msgs/BrakeCmd): the required brake torque is published to this topic.
- /vehicle/steering_cmd (Msg Type: dbw_mkz_msgs/SteeringCmd): the required steering angle is published to this topic.
- /vehicle/throttle_cmd (Msg Type: dbw_mkz_msgs/ThrottleCmd): the required throttle percentage is published to this topic.
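Because the brake command is expressed as a torque, a desired deceleration has to be converted: torque ≈ vehicle mass × deceleration × wheel radius. The following is a sketch under that simplified model. The mass and wheel radius are illustrative values, and the model ignores drag, rolling resistance, fuel and passenger mass.

```python
def brake_torque(decel, vehicle_mass, wheel_radius):
    """Total brake torque (N*m) for a deceleration of |decel| m/s^2.

    Simplified model: F = m * a at the tyres, torque = F * r at the wheels.
    Ignores drag, rolling resistance, fuel and passenger mass.
    """
    return abs(decel) * vehicle_mass * wheel_radius

# Illustrative values (kg, m); a real node would read them from parameters.
torque = brake_torque(-1.0, vehicle_mass=1736.35, wheel_radius=0.2413)
print(round(torque, 1))  # 419.0
```

Taking the absolute value lets the caller pass the signed acceleration from the velocity controller directly.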

This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

Please use one of the two installation options: native or Docker installation.

- Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahr. Ubuntu downloads can be found here.
- If using a Virtual Machine to install Ubuntu, use the following configuration at a minimum:
  - 2 CPUs
  - 2 GB system memory
  - 25 GB of free hard drive space

  The Udacity-provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using it.
- Follow these instructions to install ROS:
  - ROS Kinetic if you have Ubuntu 16.04.
  - ROS Indigo if you have Ubuntu 14.04.
- Dataspeed DBW
  - Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)
- Download the Udacity Simulator.

Build the Docker container:

```bash
docker build . -t capstone
```

Run the Docker container:

```bash
docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstone
```

To set up port forwarding, please refer to the "uWebSocketIO Starter Guide" found in the classroom (see the Extended Kalman Filter Project lesson).

- Clone the project repository
  ```bash
  git clone https://github.com/udacity/CarND-Capstone.git
  ```
- Install Python dependencies
  ```bash
  cd CarND-Capstone
  pip install -r requirements.txt
  ```
- Make and run styx
  ```bash
  cd ros
  catkin_make
  source devel/setup.sh
  roslaunch launch/styx.launch
  ```
- Run the simulator

- Download the training bag that was recorded on the Udacity self-driving car.
- Unzip the file
  ```bash
  unzip traffic_light_bag_file.zip
  ```
- Play the bag file
  ```bash
  rosbag play -l traffic_light_bag_file/traffic_light_training.bag
  ```
- Launch your project in site mode
  ```bash
  cd CarND-Capstone/ros
  roslaunch launch/site.launch
  ```
- Confirm that traffic light detection works on real-life images

Outside of requirements.txt, here is information on other driver/library versions used in the simulator and Carla:

Specific to these libraries, the simulator grader and Carla use the following:

| | Simulator | Carla |
|---|---|---|
| Nvidia driver | 384.130 | 384.130 |
| CUDA | 8.0.61 | 8.0.61 |
| cuDNN | 6.0.21 | 6.0.21 |
| TensorRT | N/A | N/A |
| OpenCV | 3.2.0-dev | 2.4.8 |
| OpenMP | N/A | N/A |

We are working on a fix to line up the OpenCV versions between the two.