This is the official repository of the paper Deep Image Matting: A Comprehensive Survey.

Image matting refers to extracting a precise alpha matte from natural images, and it plays a critical role in various downstream applications, such as image editing. The emergence of deep learning has revolutionized the field of image matting and given rise to multiple new techniques, including automatic, interactive, and referring image matting. Here we present a comprehensive review of recent advancements in image matting in the era of deep learning, focusing on two fundamental sub-tasks: auxiliary input-based image matting and automatic image matting.

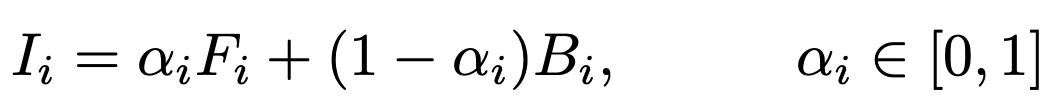

Image matting, which refers to the precise extraction of the soft matte of foreground objects in arbitrary images, has been extensively studied for several decades. The process can be described mathematically as below, where $I$ represents the input image, $F$ the foreground image, and $B$ the background image:

$$I_i = \alpha_i F_i + (1 - \alpha_i) B_i, \qquad \alpha_i \in [0, 1]$$

Here, $\alpha_i$ denotes the opacity of the foreground at pixel $i$ and ranges from 0 to 1. The following figure shows a typical input image, its ground-truth alpha matte, and various auxiliary inputs, such as a trimap, background, coarse map, user clicks, scribbles, and a text description. The text description for this image could be "the cute smiling brown dog in the middle of the image".

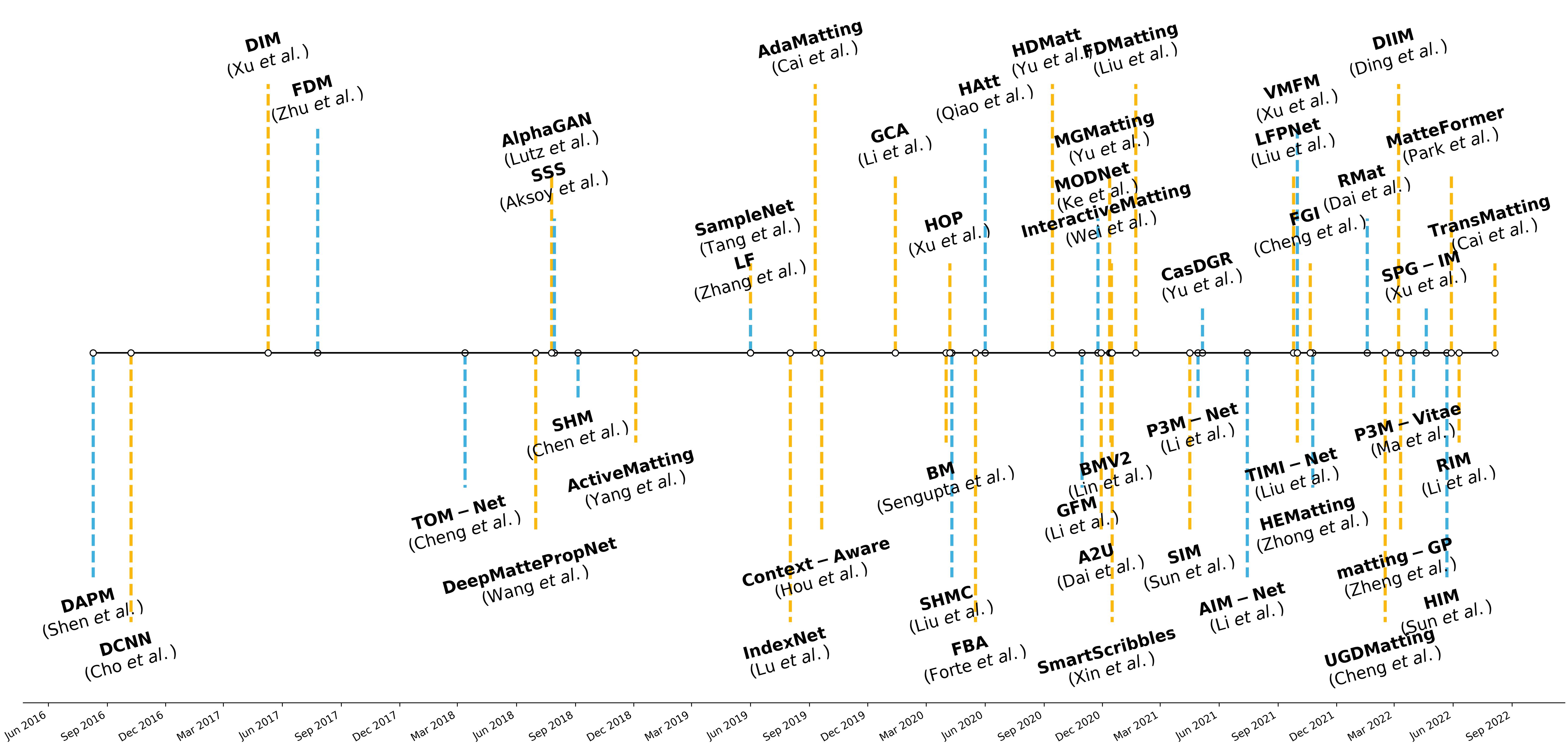
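To make the per-pixel model described above concrete, the sketch below applies $I_i = \alpha_i F_i + (1 - \alpha_i) B_i$ to whole arrays with NumPy. It is a minimal illustration, not code from this repository: the function name `composite` is hypothetical, and it assumes float RGB images in [0, 1] with shape (H, W, 3) and an alpha matte of shape (H, W). The same kind of blending is typically used to build synthetic training images by pasting a foreground with a known alpha matte onto new backgrounds.

```python
import numpy as np


def composite(fg: np.ndarray, bg: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Blend a foreground over a background with I = alpha * F + (1 - alpha) * B.

    Assumptions (illustrative only):
      fg, bg: float arrays of shape (H, W, 3) with values in [0, 1].
      alpha:  float array of shape (H, W) with values in [0, 1].
    """
    if fg.shape != bg.shape or fg.shape[:2] != alpha.shape:
        raise ValueError("fg, bg and alpha must share the same height and width")
    # Add a trailing channel axis so the single alpha value broadcasts over R, G, B.
    a = np.clip(alpha, 0.0, 1.0)[..., None]
    return a * fg + (1.0 - a) * bg


# Toy 1x2 image: the left pixel is fully foreground, the right pixel is half transparent.
fg = np.array([[[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]])  # red foreground
bg = np.array([[[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]])  # blue background
alpha = np.array([[1.0, 0.5]])
image = composite(fg, bg, alpha)
print(image)
```

Matting runs this process in reverse: given only $I$, and optionally an auxiliary input such as a trimap, a model must recover $\alpha$ (and often $F$). This is heavily under-constrained, because each pixel provides three equations (one per color channel) but has seven unknowns ($\alpha$ plus the RGB values of $F$ and $B$).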

We compile a timeline of the developments in deep learning-based image matting methods as follows.

We also summarize image matting methods by year of publication, publication venue, input modality, automaticity, matting target, architecture, and code availability (with links). The papers are listed in chronological order. Note that [U] denotes an unofficial implementation of the code.

We also summarize the image matting datasets, grouped into synthetic image-based benchmarks, natural image-based benchmarks, and test sets. Within each group, the datasets are ordered by release date and described in terms of publication venue, naturalness, matting target, resolution, number of training and test samples, and availability (with links). Note that the size of each dataset is counted as the number of distinct foregrounds, except for TOM and RefMatte, which follow pre-defined composition rules.

| Name | Pub. | Natural | Target | Resolution | #Train | #Test | Availability |
|---|---|---|---|---|---|---|---|
| DIM-481 | CVPR'17 | ✗ | object | 1298×1083 | 431 | 50 | Link |
| TOM | CVPR'18 | ✗ | transparent | - | 178,000 | 876 | Link |
| LF-257 | CVPR'19 | ✗ | human | 553×756 | 228 | 29 | Link |
| HATT-646 | CVPR'20 | ✗ | object | 1573×1731 | 596 | 50 | Link |
| PhotoMatte13k | CVPR'20 | ✗ | human | - | 13665 | - | - |
| SIM | CVPR'21 | ✗ | object | 2194×1950 | 348 | 50 | Link |
| Human-2k | ICCV'21 | ✗ | human | 2112×2075 | 2000 | 100 | Link |
| Trans-460 | ECCV'22 | ✗ | transparent | 3766×3820 | 410 | 50 | Link |
| HIM2k | CVPR'22 | ✗ | human | 1823×1424 | 1500 | 500 | Link |
| RefMatte | CVPR'23 | ✗ | object | 1543×1162 | 45000 | 2500 | Link |
| AlphaMatting | CVPR'09 | ✓ | object | 3056×2340 | 27 | 8 | Link |
| DAPM-2k | ECCV'16 | ✓ | human | 600×800 | 1700 | 300 | Link |
| SHM-35k | MM'18 | ✓ | human | - | 52511 | 1400 | - |
| SHMC-10k | CVPR'20 | ✓ | human | - | 9324 | 125 | - |
| P3M-10k | MM'21 | ✓ | human | 1349×1321 | 9421 | 1000 | Link |
| AM-2k | IJCV'22 | ✓ | animal | 1471×1195 | 1800 | 200 | Link |
| Multi-Object-1k | MM'22 | ✓ | human-object | - | 1000 | 200 | - |
| UGD-12k | TIP'22 | ✓ | human | 356×317 | 12066 | 700 | Link |
| PhotoMatte85 | CVPR'20 | ✗ | human | 2304×3456 | - | 85 | Link |
| AIM-500 | IJCAI'21 | ✓ | object | 1397×1260 | - | 500 | Link |
| RWP-636 | CVPR'21 | ✓ | human | 1038×1327 | - | 636 | Link |
| PPM-100 | AAAI'22 | ✓ | human | 2997×2875 | - | 100 | Link |
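As a quick reading aid for the counting convention stated before the table (dataset size equals the number of distinct foregrounds), the sketch below adds #Train and #Test for a few rows and compares the sum with the number in the dataset name. It is an illustrative check only, not part of the repository: the helper names `suffix_count` and `foreground_totals` are hypothetical, TOM and RefMatte are left out because they follow composition rules, and the name suffix is not an exact count for every entry (for example, SHM-35k lists 52511 + 1400 samples).

```python
# Rows copied from the dataset table: (name, #train, #test).
# Only rows whose name suffix equals #Train + #Test are included here.
ROWS = [
    ("DIM-481", 431, 50),
    ("LF-257", 228, 29),
    ("Trans-460", 410, 50),
    ("AM-2k", 1800, 200),
]


def suffix_count(name: str) -> int:
    """Parse the numeric suffix of a dataset name: 'DIM-481' -> 481, 'AM-2k' -> 2000."""
    suffix = name.rsplit("-", 1)[1].lower()
    if suffix.endswith("k"):
        return int(suffix[:-1]) * 1000
    return int(suffix)


def foreground_totals(rows):
    """Return (name, #train + #test, parsed name suffix) for each row."""
    return [(name, n_train + n_test, suffix_count(name)) for name, n_train, n_test in rows]


for name, total, suffix in foreground_totals(ROWS):
    print(f"{name}: {total} distinct foregrounds (name suffix: {suffix})")
```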

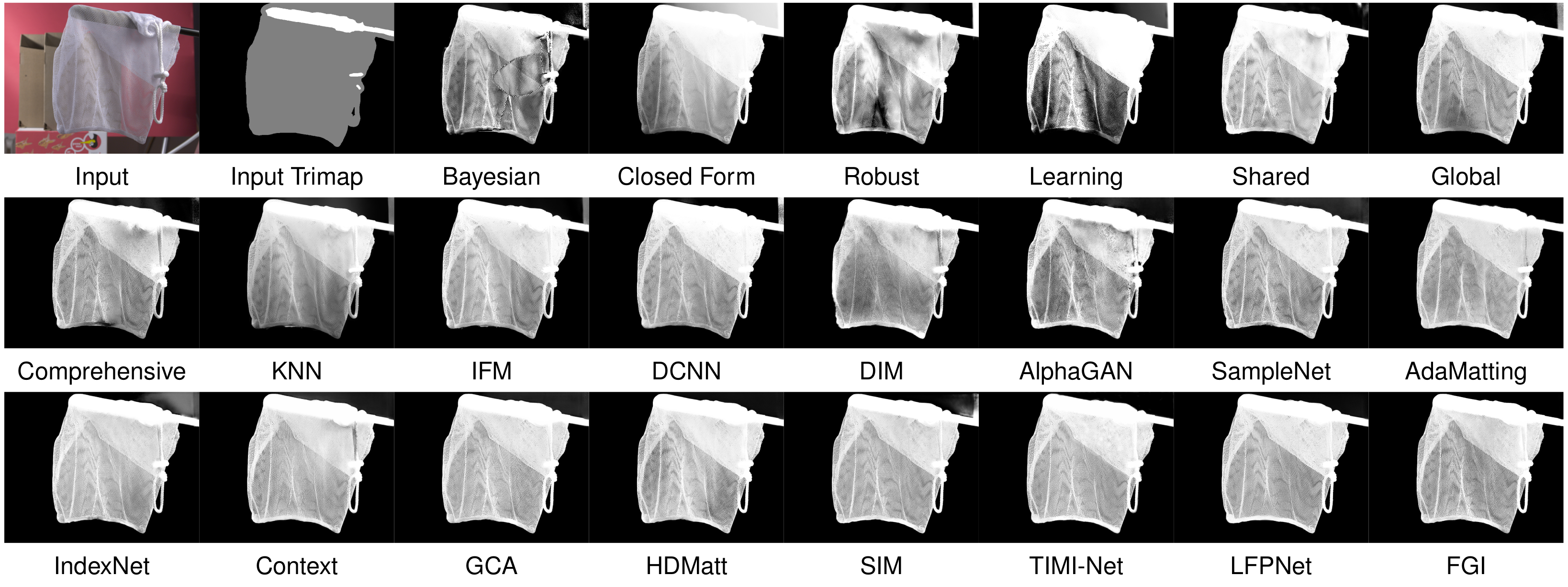

We provide a comprehensive evaluation of representative matting methods in the paper. Here, we present some subjective results of auxiliary input-based matting methods on alphamatting.com and of automatic matting methods on P3M-500-NP.
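This README does not list the metrics behind the paper's quantitative evaluation. As an assumption, the sketch below shows two error measures that are widely used to score predicted alpha mattes against ground truth, SAD (sum of absolute differences) and MSE (mean squared error), with an optional boolean mask for restricting evaluation to a region such as the unknown area of a trimap. The function names are hypothetical, and scaling conventions (for example, dividing SAD by 1000, or using alpha in [0, 255] instead of [0, 1]) vary between papers, so the numbers here are not directly comparable to any published table.

```python
import numpy as np


def sad(pred, gt, mask=None):
    """Sum of absolute differences between predicted and ground-truth alpha.

    Assumes float alpha values in [0, 1]. The sum is divided by 1000, a reporting
    convention common in matting papers (an assumption here). If `mask` (a boolean
    array with the same shape) is given, only pixels where it is True are counted.
    """
    diff = np.abs(np.asarray(pred, dtype=np.float64) - np.asarray(gt, dtype=np.float64))
    if mask is not None:
        diff = diff[mask]
    return float(diff.sum() / 1000.0)


def mse(pred, gt, mask=None):
    """Mean squared error between predicted and ground-truth alpha, optionally masked."""
    sq = (np.asarray(pred, dtype=np.float64) - np.asarray(gt, dtype=np.float64)) ** 2
    if mask is not None:
        sq = sq[mask]
    return float(sq.mean())


gt = np.zeros((4, 4))
pred = np.full((4, 4), 0.5)
unknown = np.zeros((4, 4), dtype=bool)
unknown[0, :] = True  # pretend only the top row is the trimap's unknown region

print(sad(pred, gt), mse(pred, gt))                    # whole image
print(sad(pred, gt, unknown), mse(pred, gt, unknown))  # unknown region only
```

Masking matters mainly for trimap-based methods, whose known foreground and background pixels are given as input; automatic methods are usually scored over the whole image.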

If you are interested in our work, please consider citing the following:

    @article{li2023deep,
      title={Deep Image Matting: A Comprehensive Survey},
      author={Jizhizi Li and Jing Zhang and Dacheng Tao},
      journal={ArXiv},
      year={2023},
      volume={abs/2304.04672}
    }

This project is under the MIT license. For further questions, please contact Jizhizi Li at jili8515@uni.sydney.edu.au.

[1] Deep Automatic Natural Image Matting, IJCAI, 2021 | Paper | Github

Jizhizi Li, Jing Zhang, and Dacheng Tao

[2] Privacy-preserving Portrait Matting, ACM MM, 2021 | Paper | Github

Jizhizi Li∗, Sihan Ma∗, Jing Zhang, Dacheng Tao

[3] Bridging Composite and Real: Towards End-to-end Deep Image Matting, IJCV, 2022 | Paper | Github

Jizhizi Li∗, Jing Zhang∗, Stephen J. Maybank, Dacheng Tao

[4] Referring Image Matting, CVPR, 2023 | Paper | Github

Jizhizi Li, Jing Zhang, and Dacheng Tao

[5] Rethinking Portrait Matting with Privacy Preserving, IJCV, 2023 | Paper | Github

Sihan Ma∗, Jizhizi Li∗, Jing Zhang, He Zhang, Dacheng Tao