This repository contains the toolkit for replicating results from our technical report.

Large Language Models (LLMs) are typically presumed to process context uniformly: the model should handle the 10,000th token just as reliably as the 100th. In practice, however, this assumption does not hold. We observe that model performance varies significantly as input length changes, even on simple tasks.

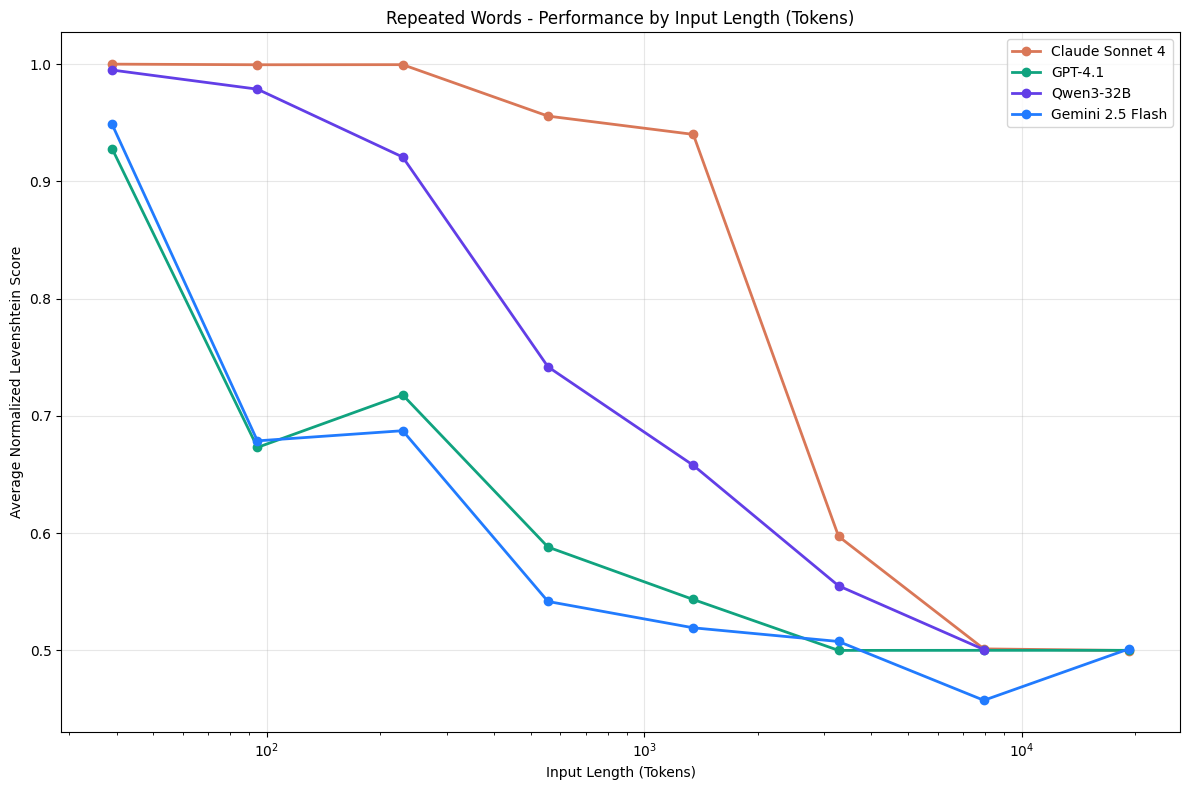

Latest Models on Repeated Words Task

Our experiments are organized under the `experiments/` folder:

- An extension of Needle in a Haystack that examines the effects of needles with semantic, rather than direct lexical, matches, as well as the effects of introducing variations to the haystack content.
- The LongMemEval task.
- A test of model performance on replicating a sequence of repeated words.
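To make the repeated-words task concrete, here is a minimal, hypothetical sketch of how such a prompt might be built and scored. It is not the repository's implementation. The variant with one distinct word planted at a chosen position, the prompt wording, and the helper names `build_sequence`, `build_prompt`, and `score` are all illustrative assumptions.

```python
"""Hypothetical sketch of a repeated-words replication task.

Not the repository's implementation: the planted-word variant, prompt
wording and scoring rule are illustrative assumptions.
"""
from difflib import SequenceMatcher


def build_sequence(common: str, unique: str, length: int, position: int) -> list[str]:
    """Return `length` copies of `common` with `unique` placed at index `position`."""
    if not 0 <= position < length:
        raise ValueError("position must lie inside the sequence")
    words = [common] * length
    words[position] = unique
    return words


def build_prompt(words: list[str]) -> str:
    # Assumed instruction wording; see the experiment's own README for the real prompts.
    return "Replicate the following text exactly:\n\n" + " ".join(words)


def score(expected: list[str], output: str) -> float:
    """Word-level similarity in [0, 1] between the target words and the model output."""
    # autojunk=False stops difflib from discarding the (very frequent) repeated word.
    return SequenceMatcher(None, expected, output.split(), autojunk=False).ratio()


if __name__ == "__main__":
    target = build_sequence("apple", "apples", length=10, position=3)
    print(build_prompt(target))
    # A perfect replication scores 1.0; dropping a word lowers the score.
    print(score(target, " ".join(target)))
    print(score(target, " ".join(target[:-1])))
```

Scoring with `difflib.SequenceMatcher` gives partial credit instead of treating any deviation as a total failure. Passing `autojunk=False` matters here: for sequences of 200 or more items, difflib's default heuristic treats very frequent elements as junk, which would throw away the repeated word itself. The comparison can be quadratic in the worst case, so it becomes slow on very long sequences.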

Each experiment folder contains detailed instructions in its own `README.md`.

Datasets can be downloaded here.

- Clone the repository.
- Create and activate a virtual environment:

  ```bash
  python -m venv venv
  source venv/bin/activate  # On Windows: venv\Scripts\activate
  ```

- Install dependencies:

  ```bash
  pip install -r requirements.txt
  ```

- Set up environment variables:
  - OpenAI: `OPENAI_API_KEY`
  - Anthropic: `ANTHROPIC_API_KEY`
  - Google: `GOOGLE_APPLICATION_CREDENTIALS` and `GOOGLE_MODEL_PATH`
- Navigate to a specific experiment folder and follow its README instructions.
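Before running an experiment, you may want to confirm that Python can see the credentials from the setup steps. The check below is a hypothetical pre-flight script and is not part of the repository. The `missing_vars` helper is an illustrative name, and the script assumes each provider needs every variable listed for it.

```python
"""Hypothetical pre-flight check for the provider credentials.

Assumption: each provider needs every variable in its list; trim the
mapping to the providers you actually plan to run.
"""
import os
from collections.abc import Mapping
from typing import Optional

REQUIRED_VARS = {
    "OpenAI": ["OPENAI_API_KEY"],
    "Anthropic": ["ANTHROPIC_API_KEY"],
    "Google": ["GOOGLE_APPLICATION_CREDENTIALS", "GOOGLE_MODEL_PATH"],
}


def missing_vars(env: Optional[Mapping[str, str]] = None) -> dict[str, list[str]]:
    """Map each provider to its required variables that are unset or empty."""
    env = os.environ if env is None else env
    missing = {}
    for provider, names in REQUIRED_VARS.items():
        absent = [name for name in names if not env.get(name)]
        if absent:
            missing[provider] = absent
    return missing


if __name__ == "__main__":
    problems = missing_vars()
    if not problems:
        print("All provider credentials are set.")
    for provider, names in problems.items():
        print(f"{provider}: missing {', '.join(names)}")
```

Accepting an optional mapping instead of always reading `os.environ` keeps the check easy to test without touching your real environment.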

If you find this work useful, please cite our technical report:

```bibtex
@techreport{hong2025context,
  title = {Context Rot: How Increasing Input Tokens Impacts LLM Performance},
  author = {Hong, Kelly and Troynikov, Anton and Huber, Jeff},
  year = {2025},
  month = {July},
  institution = {Chroma},
  url = {https://research.trychroma.com/context-rot},
}
```