Semantic Compositional Networks

Theano code for the CVPR 2017 paper “Semantic Compositional Networks for Visual Captioning”.

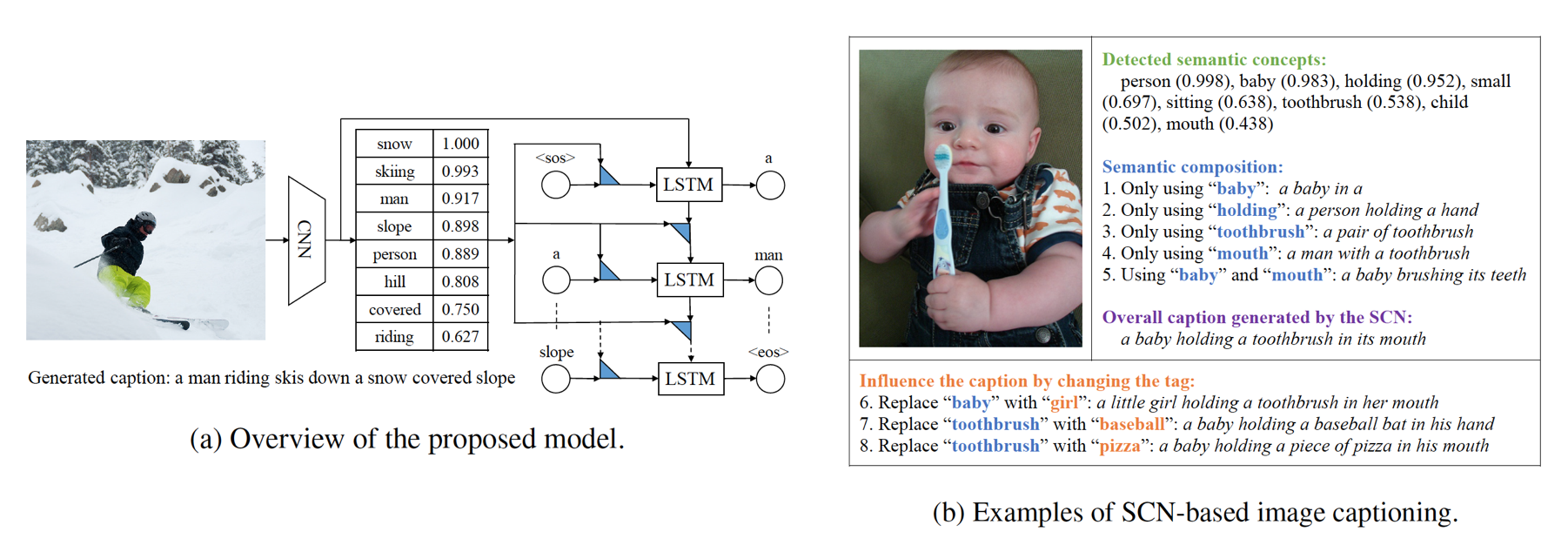

Model architecture and illustration of semantic composition.

Dependencies

This code is written in Python. To use it you will need:

- Python 2.7 (do not use Python 3.0)

- Theano 0.7 (you can also use the most recent version)

- A recent version of NumPy and SciPy
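A quick way to confirm these dependencies are importable is a small check script. This is a minimal sketch, not part of the repository: the function name `check_environment` is hypothetical, and the script only reports what it finds without enforcing any particular version.

```python
from __future__ import print_function

import importlib
import sys


def check_environment(modules=("theano", "numpy", "scipy")):
    """Map each module name to its version string, or None if it cannot be imported."""
    report = {"python": "%d.%d" % sys.version_info[:2]}
    for name in modules:
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = None
        else:
            # Not every package exposes __version__, so fall back to a placeholder.
            report[name] = getattr(module, "__version__", "unknown")
    return report


if __name__ == "__main__":
    for name, version in sorted(check_environment().items()):
        print("%-8s %s" % (name, version if version is not None else "NOT INSTALLED"))
```

Any entry reported as `NOT INSTALLED` needs to be installed before running the scripts described below.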

Getting started

We provide code for training SCN for image captioning on the COCO dataset.

- In order to start, please first download the ResNet features and tag features we used in the experiments. Put the coco folder inside the ./data folder.

- We also provide our pre-trained model on COCO. Put the pretrained_model folder into the current directory.

- In order to evaluate the model, please download the standard coco-caption evaluation code. Copy the folder pycocoevalcap into the current directory.

- Now, everything is ready.
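The folder layout described above can be checked with a short script before running anything. This is a sketch under the assumption that it is run from the repository root; the helper name `missing_dirs` is hypothetical, and it only checks that the folders exist, not that their contents are complete.

```python
from __future__ import print_function

import os

# Folder names taken from the setup steps above, relative to the repository root.
EXPECTED_DIRS = ("data/coco", "pretrained_model", "pycocoevalcap")


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected folders that do not exist under `root`."""
    return [d for d in expected if not os.path.isdir(os.path.join(root, d))]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing folders: " + ", ".join(missing))
    else:
        print("All expected folders are in place.")
```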

How to use the code

- Run SCN_training.py to start training. On a modern GPU, the model will take one night to train.

THEANO_FLAGS=mode=FAST_RUN,device=gpu,floatX=float32 python SCN_training.py

- Based on our pre-trained model, run SCN_decode.py to generate captions on the COCO small 5k test set. The generated captions are also provided, named coco_scn_5k_test.txt.

- Now, run SCN_evaluation.py to evaluate the model. The code will output

CIDEr: 1.043, Bleu-4: 0.341, Bleu-3: 0.446, Bleu-2: 0.582, Bleu-1: 0.743, ROUGE_L: 0.550, METEOR: 0.261.

- In the ./data/coco folder, we also provide the features for the COCO official validation and test sets. Running SCN_for_test_server.py will generate captions for the official test set and prepare the .json file for submission.
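The train, decode, and evaluate steps above can be chained with a small driver. This is a hedged sketch, not part of the repository: `build_commands` and `run_pipeline` are hypothetical helpers, the scripts are assumed to sit in the current directory, and they are launched with the same interpreter that runs the driver. Whether SCN_decode.py picks up a freshly trained model or the provided pre-trained one depends on paths set inside the scripts, which this sketch does not inspect.

```python
from __future__ import print_function

import os
import subprocess
import sys

# Flags copied from the training command above.
THEANO_FLAGS = "mode=FAST_RUN,device=gpu,floatX=float32"

# Script names as given in the steps above, in the order they are meant to run.
PIPELINE = ("SCN_training.py", "SCN_decode.py", "SCN_evaluation.py")


def build_commands(scripts=PIPELINE, python=sys.executable):
    """Return one argv list per script."""
    return [[python, script] for script in scripts]


def run_pipeline(scripts=PIPELINE, flags=THEANO_FLAGS):
    """Run each script in turn; check_call raises on the first failure."""
    # Pass THEANO_FLAGS through the environment, as the shell command does.
    env = dict(os.environ, THEANO_FLAGS=flags)
    for argv in build_commands(scripts):
        print("Running: " + " ".join(argv))
        subprocess.check_call(argv, env=env)
```

Calling `run_pipeline()` runs all three steps; `run_pipeline(PIPELINE[1:])` skips training and only decodes and evaluates.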

Video Captioning

To keep things simple, we provide a separate repo that reproduces our results on video captioning, using the Youtube2Text dataset.

Citing SCN

Please cite our CVPR paper in your publications if it helps your research:

@inproceedings{SCN_CVPR2017,

Author = {Gan, Zhe and Gan, Chuang and He, Xiaodong and Pu, Yunchen and Tran, Kenneth and Gao, Jianfeng and Carin, Lawrence and Deng, Li},

Title = {Semantic Compositional Networks for Visual Captioning},

Booktitle = {CVPR},

Year = {2017}

}