
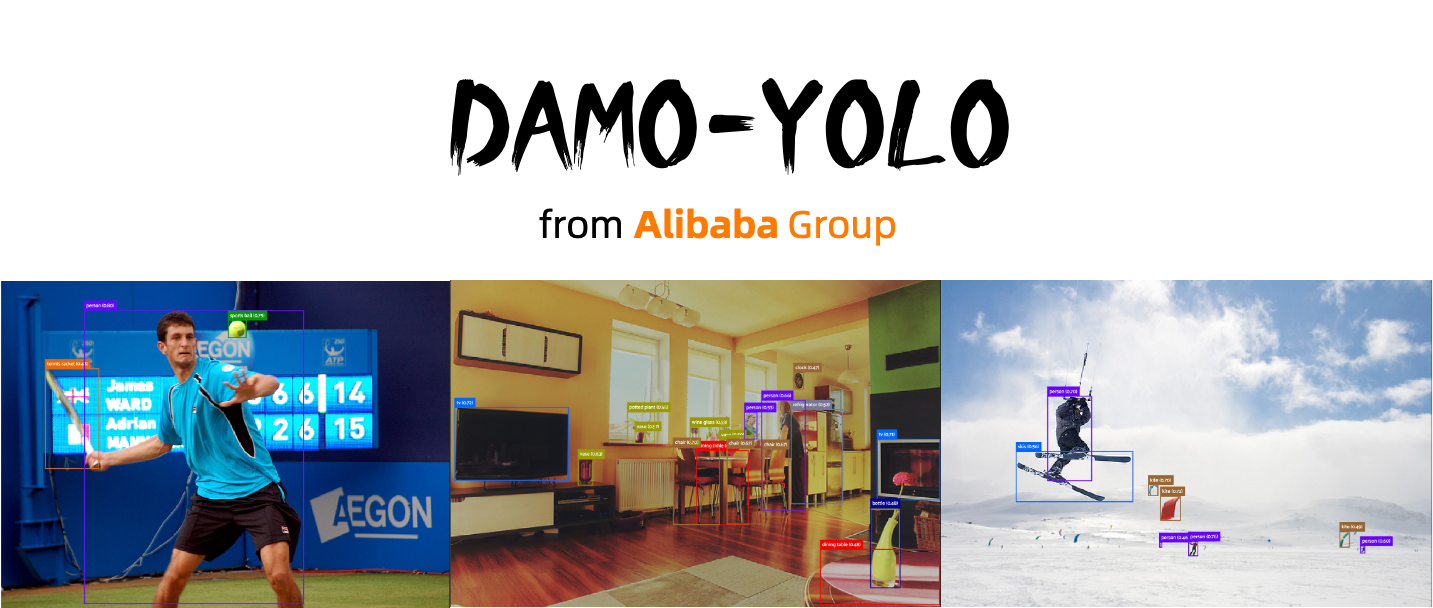

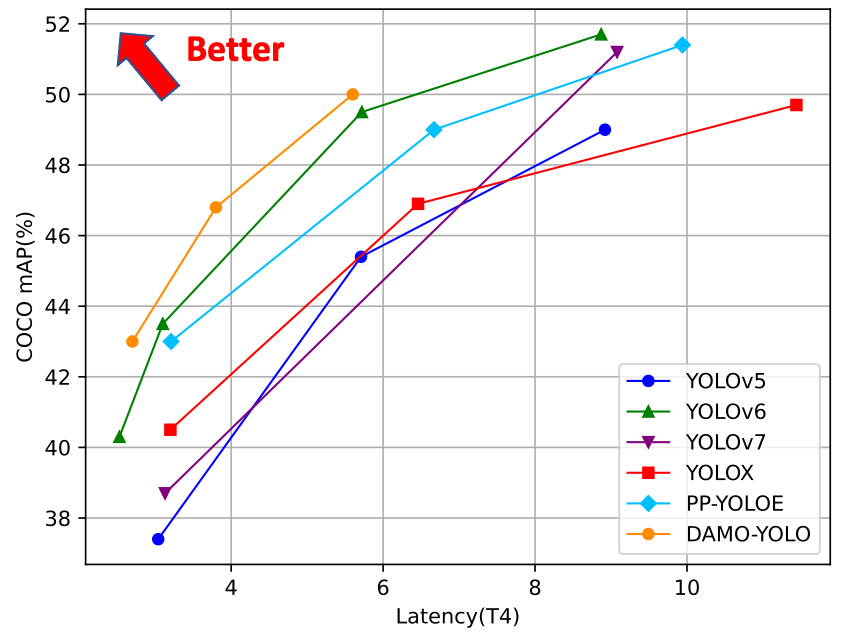

Welcome to **DAMO-YOLO**! DAMO-YOLO is a fast and accurate object detection method developed by the TinyML Team from Alibaba DAMO Data Analytics and Intelligence Lab. It achieves higher performance than state-of-the-art YOLO series models. DAMO-YOLO extends YOLO with several new techniques, including Neural Architecture Search (NAS) backbones, an efficient Reparameterized Generalized-FPN (RepGFPN), a lightweight head with AlignedOTA label assignment, and distillation enhancement. For more details, please refer to our [arXiv Report](https://arxiv.org/pdf/2211.15444v2.pdf). Moreover, here you can find not only powerful models, but also highly efficient training strategies and complete tools from training to deployment.

- [2023/01/07: We release DAMO-YOLO v0.2.1!]
  - Add a TensorRT INT8 quantization tutorial, achieving a 19% speedup with only a 0.3% accuracy loss.
  - Add general demo tools supporting TensorRT/ONNX/Torch-based video and image inference.
  - Add more industry application models, including human detection, helmet detection, facemask detection and cigarette detection.
  - Add third-party resources, including DAMO-YOLO Code Interpretation and Practical Example for Finetuning on Custom Dataset.

- [2022/12/15: We release DAMO-YOLO v0.1.1!]
  - Add a detailed Custom Dataset Finetune Tutorial.
  - Fix the stuck (hanging) problem caused by data without labels (e.g., ISSUE#30). Feel free to contact us; we are on standby 24/7.

- [2022/11/27: We release DAMO-YOLO v0.1.0!]
  - Release DAMO-YOLO object detection models, including DAMO-YOLO-T, DAMO-YOLO-S and DAMO-YOLO-M.
  - Release model conversion tools for easy deployment, supporting ONNX, TensorRT-FP32 and TensorRT-FP16.
  - DAMO-YOLO-T, DAMO-YOLO-S and DAMO-YOLO-M are integrated into ModelScope. Training is now supported on ModelScope! Come and try DAMO-YOLO with free GPU resources provided by ModelScope.

| Model | Size (px) | mAP (val, 0.5:0.95) | Latency (ms), T4 TRT-FP16-BS1 | FLOPs (G) | Params (M) | AliYun Download | Google Download |
|---|---|---|---|---|---|---|---|
| DAMO-YOLO-T | 640 | 41.8 | 2.78 | 18.1 | 8.5 | torch, onnx | torch, onnx |
| DAMO-YOLO-T* | 640 | 43.0 | 2.78 | 18.1 | 8.5 | torch, onnx | torch, onnx |
| DAMO-YOLO-S | 640 | 45.6 | 3.83 | 37.8 | 16.3 | torch, onnx | torch, onnx |
| DAMO-YOLO-S* | 640 | 46.8 | 3.83 | 37.8 | 16.3 | torch, onnx | torch, onnx |
| DAMO-YOLO-M | 640 | 48.7 | 5.62 | 61.8 | 28.2 | torch, onnx | torch, onnx |
| DAMO-YOLO-M* | 640 | 50.0 | 5.62 | 61.8 | 28.2 | torch, onnx | torch, onnx |

- We report the mAP of models on the COCO2017 validation set, with multi-class NMS.
- The latency in this table is measured without post-processing.
- \* denotes models trained with distillation.
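The notes above say mAP is reported with multi-class NMS and that latency excludes post-processing. For readers unfamiliar with that step, here is a minimal pure-Python sketch of per-class greedy NMS. It is an illustration under stated assumptions (boxes as `(x1, y1, x2, y2)`, thresholds chosen for the example, hypothetical function names), not the DAMO-YOLO implementation.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def multiclass_nms(boxes: List[Box], scores: List[float], labels: List[int],
                   score_thresh: float = 0.05, iou_thresh: float = 0.6) -> List[int]:
    """Greedy NMS run independently for each class.

    Returns the indices of kept boxes, sorted by descending score.
    The threshold defaults are illustrative assumptions.
    """
    keep: List[int] = []
    for cls in sorted(set(labels)):
        # Candidates of this class above the score threshold, highest score first.
        idxs = [i for i, c in enumerate(labels) if c == cls and scores[i] >= score_thresh]
        idxs.sort(key=lambda i: scores[i], reverse=True)
        while idxs:
            best = idxs.pop(0)
            keep.append(best)
            # Drop same-class boxes that overlap the kept box too much.
            idxs = [i for i in idxs if iou(boxes[best], boxes[i]) <= iou_thresh]
    keep.sort(key=lambda i: scores[i], reverse=True)
    return keep
```

For example, `multiclass_nms([(0, 0, 10, 10), (1, 1, 11, 11), (0, 0, 10, 10), (50, 50, 60, 60)], [0.9, 0.8, 0.7, 0.3], [0, 0, 1, 0])` returns `[0, 2, 3]`: the two overlapping class-0 boxes (IoU about 0.68) collapse to one, while the class-1 box at the same location survives because suppression never crosses classes.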

Installation

Step1. Install DAMO-YOLO.

```shell
git clone https://github.com/tinyvision/damo-yolo.git
cd damo-yolo/
conda create -n DAMO-YOLO python=3.7 -y
conda activate DAMO-YOLO
conda install pytorch==1.7.0 torchvision==0.8.0 torchaudio==0.7.0 cudatoolkit=10.2 -c pytorch
pip install -r requirements.txt
export PYTHONPATH=$PWD:$PYTHONPATH
```

Step2. Install pycocotools.

```shell
pip install cython
pip install git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI # for Linux
pip install git+https://github.com/philferriere/cocoapi.git#subdirectory=PythonAPI # for Windows
```

Demo

Step1. Download a pretrained torch model, ONNX model or TensorRT engine from the benchmark table, e.g., damoyolo_tinynasL25_S.pth, damoyolo_tinynasL25_S.onnx or damoyolo_tinynasL25_S.trt.
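Because the same `tools/demo.py` entry point serves all three formats, one small convenience is to derive `--engine_type` from the downloaded file's extension. The sketch below is a hypothetical helper (`build_demo_cmd` and `ENGINE_TYPES` are not part of DAMO-YOLO); it only assembles the argument list used in this section's demo commands, with the extension-to-type mapping assumed from those examples.

```python
import os
from typing import List

# Assumed mapping, taken from the example commands: file extension -> --engine_type value.
ENGINE_TYPES = {".pth": "torch", ".onnx": "onnx", ".trt": "tensorRT"}


def build_demo_cmd(config: str, engine: str, path: str, conf: float = 0.6,
                   size: int = 640, device: str = "cuda") -> List[str]:
    """Return the argv for tools/demo.py, inferring --engine_type from the engine file."""
    ext = os.path.splitext(engine)[1].lower()
    if ext not in ENGINE_TYPES:
        raise ValueError(f"unsupported engine file: {engine}")
    return ["python", "tools/demo.py", "-f", config,
            "--engine", engine, "--engine_type", ENGINE_TYPES[ext],
            "--conf", str(conf), "--infer_size", str(size), str(size),
            "--device", device, "--path", path]
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root; keeping it as a list avoids shell-quoting issues with paths that contain spaces.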

Step2. Use `-f` (config filename) to specify your detector's config and `--path` to specify the input data path; both images and videos are supported. For example:

```shell
# torch
python tools/demo.py -f ./configs/damoyolo_tinynasL25_S.py --engine ./damoyolo_tinynasL25_S.pth --engine_type torch --conf 0.6 --infer_size 640 640 --device cuda --path ./assets/dog.jpg

# onnx
python tools/demo.py -f ./configs/damoyolo_tinynasL25_S.py --engine ./damoyolo_tinynasL25_S.onnx --engine_type onnx --conf 0.6 --infer_size 640 640 --device cuda --path ./assets/dog.jpg

# tensorRT
python tools/demo.py -f ./configs/damoyolo_tinynasL25_S.py --engine ./damoyolo_tinynasL25_S.trt --engine_type tensorRT --conf 0.6 --infer_size 640 640 --device cuda --path ./assets/dog.jpg
```

Reproduce our results on COCO

Step1. Prepare the COCO dataset.

```shell
cd <DAMO-YOLO Home>
ln -s /path/to/your/coco ./datasets/coco
```

Step2. Reproduce our results on COCO by specifying `-f` (config filename).

```shell
python -m torch.distributed.launch --nproc_per_node=8 tools/train.py -f configs/damoyolo_tinynasL25_S.py
```

Finetune on your data

Please refer to the custom dataset tutorial for details.
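As a starting point for preparing your own data, the sketch below builds a minimal COCO-style detection annotation dict with the standard library. It assumes that your custom data is converted to COCO-format JSON like the COCO dataset used above; the helper names are hypothetical, and the tutorial remains the authority on the exact layout DAMO-YOLO expects.

```python
import json
from typing import Dict, List, Tuple


def make_coco_annotations(images: List[Tuple[str, int, int]],
                          boxes: Dict[str, List[Tuple[int, float, float, float, float]]],
                          class_names: List[str]) -> dict:
    """Build a minimal COCO-style detection dict.

    images: (file_name, width, height) for every image, including images without objects.
    boxes:  file_name -> list of (class_index, x, y, w, h), where (x, y) is the top-left corner.
    Category ids start at 1 here (a common COCO convention; an assumption for this sketch).
    """
    coco = {"images": [], "annotations": [],
            "categories": [{"id": i + 1, "name": n} for i, n in enumerate(class_names)]}
    ann_id = 1
    for img_id, (fname, w, h) in enumerate(images, start=1):
        coco["images"].append({"id": img_id, "file_name": fname, "width": w, "height": h})
        for cls, x, y, bw, bh in boxes.get(fname, []):
            coco["annotations"].append({
                "id": ann_id, "image_id": img_id, "category_id": cls + 1,
                "bbox": [x, y, bw, bh], "area": bw * bh, "iscrowd": 0})
            ann_id += 1
    return coco


def write_coco(coco: dict, path: str) -> None:
    """Write the annotation dict to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(coco, f)
```

COCO stores boxes as `[x, y, width, height]`, so detectors that emit `(x1, y1, x2, y2)` need converting before annotations are written.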

Evaluation

```shell
python -m torch.distributed.launch --nproc_per_node=8 tools/eval.py -f configs/damoyolo_tinynasL25_S.py --ckpt /path/to/your/damoyolo_tinynasL25_S.pth
```

Customize tinynas backbone

Step1. If you want to customize your own backbone, please refer to [MAE-NAS Tutorial for DAMO-YOLO](https://github.com/alibaba/lightweight-neural-architecture-search/blob/main/scripts/damo-yolo/Tutorial_NAS_for_DAMO-YOLO_cn.md). It is a detailed tutorial on obtaining an optimal backbone under a latency or FLOPs budget.

Step2. After the search process completes, replace the structure text in the config with the searched structure. You can then get your own custom ResNet-like or CSPNet-like backbone by setting the backbone name to TinyNAS_res or TinyNAS_csp. Note that out_indices differs between TinyNAS_res and TinyNAS_csp.

```python
# In the config class: read the searched structure text
structure = self.read_structure('tinynas_customize.txt')

# Option 1: ResNet-like TinyNAS backbone
TinyNAS = {'name': 'TinyNAS_res', 'out_indices': (2, 4, 5)}

# Option 2: CSPNet-like TinyNAS backbone
TinyNAS = {'name': 'TinyNAS_csp', 'out_indices': (2, 3, 4)}
```
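Because a mismatched out_indices is an easy mistake when switching between the two backbone types, one option is to look the value up from the backbone name instead of typing it twice. The helper below is a hypothetical sketch, not part of DAMO-YOLO; the out_indices values are copied from the config fragment above, and any other keys your config needs are left out.

```python
from typing import Dict, Tuple

# out_indices per TinyNAS backbone type, copied from the config fragment above.
TINYNAS_OUT_INDICES: Dict[str, Tuple[int, ...]] = {
    "TinyNAS_res": (2, 4, 5),  # ResNet-like backbone
    "TinyNAS_csp": (2, 3, 4),  # CSPNet-like backbone
}


def tinynas_backbone_cfg(name: str) -> dict:
    """Return a backbone dict whose out_indices matches the chosen TinyNAS type."""
    if name not in TINYNAS_OUT_INDICES:
        raise ValueError(f"unknown TinyNAS backbone: {name!r}")
    return {"name": name, "out_indices": TINYNAS_OUT_INDICES[name]}
```

With this, switching backbones means changing a single string, e.g. `TinyNAS = tinynas_backbone_cfg('TinyNAS_csp')`.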

Installation

Step1. Install ONNX.

```shell
pip install onnx==1.8.1
pip install onnxruntime==1.8.0
pip install onnx-simplifier==0.3.5
```

Step2. Install CUDA, CuDNN, TensorRT and PyCUDA.

2.1 CUDA

```shell
wget https://developer.download.nvidia.com/compute/cuda/10.2/Prod/local_installers/cuda_10.2.89_440.33.01_linux.run
sudo sh cuda_10.2.89_440.33.01_linux.run
export PATH=$PATH:/usr/local/cuda-10.2/bin
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda-10.2/lib64
source ~/.bashrc
```

2.2 CuDNN

```shell
# Run from the directory where the cuDNN archive was extracted (it contains cuda/)
sudo cp cuda/include/* /usr/local/cuda/include/
sudo cp cuda/lib64/libcudnn* /usr/local/cuda/lib64/
sudo chmod a+r /usr/local/cuda/include/cudnn.h
sudo chmod a+r /usr/local/cuda/lib64/libcudnn*
```

2.3 TensorRT

```shell
cd TensorRT-7.2.1.6/python
pip install tensorrt-7.2.1.6-cp37-none-linux_x86_64.whl
# Use the absolute path of the extracted TensorRT directory
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/path/to/TensorRT-7.2.1.6/lib
```

2.4 PyCUDA

```shell
pip install pycuda==2022.1
```

Model Convert

We now support TensorRT INT8 quantization: set trt_type to int8 to export an INT8 TensorRT engine. You can also try partial quantization to achieve a good trade-off between accuracy and latency. Refer to partial_quantization for more details.
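To make the accuracy/latency trade-off of INT8 more concrete, the worked example below shows symmetric per-tensor INT8 quantization, the scheme TensorRT uses for INT8. It is a simplified sketch: it takes the range (amax) as the plain maximum absolute value, whereas TensorRT's calibrators may choose a clipped range, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization with scale = max|x| / 127."""
    amax = max(abs(v) for v in values)
    scale = amax / 127.0 if amax > 0 else 1.0  # avoid division by zero for all-zero input
    # Round to the nearest integer step and clamp to the symmetric range [-127, 127].
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to floats; the rounding error is at most scale / 2 per value."""
    return [x * scale for x in q]
```

For `[0.5, -1.27, 0.013, 1.27]` the scale is about 0.01 and the codes are `[50, -127, 1, 127]`; the small value 0.013 comes back as about 0.01, which illustrates why layers with wide value ranges lose the most precision and why partial quantization keeps sensitive layers in higher precision.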

Step1. Convert the torch model to ONNX or a TensorRT engine; the output files are generated in ./deploy. `--end2end` exports the TensorRT engine with NMS included, and `--trt_eval` evaluates the exported TensorRT engine on the COCO val dataset after the export completes.

```shell
# onnx export
python tools/converter.py -f configs/damoyolo_tinynasL25_S.py -c damoyolo_tinynasL25_S.pth --batch_size 1 --img_size 640

# trt export
python tools/converter.py -f configs/damoyolo_tinynasL25_S.py -c damoyolo_tinynasL25_S.pth --batch_size 1 --img_size 640 --trt --end2end --trt_eval
```

Step2. Evaluate the TensorRT engine on the COCO val dataset. `--end2end` evaluates the engine with NMS included (trt_with_nms).

```shell
python tools/trt_eval.py -f configs/damoyolo_tinynasL25_S.py -trt deploy/damoyolo_tinynasL25_S_end2end_fp16_bs1.trt --batch_size 1 --img_size 640 --end2end
```

Step3. Run the inference demo with an ONNX model or TensorRT engine, and specify the test image or video with `--path`. `--end2end` runs inference with the engine that includes NMS (trt_with_nms).

# onnx inference

python tools/demo.py -f ./configs/damoyolo_tinynasL25_S.py --engine ./damoyolo_tinynasL25_S.onnx --engine_type onnx --conf 0.6 --infer_size 640 640 --device cuda --path ./assets/dog.jpg

# trt inference

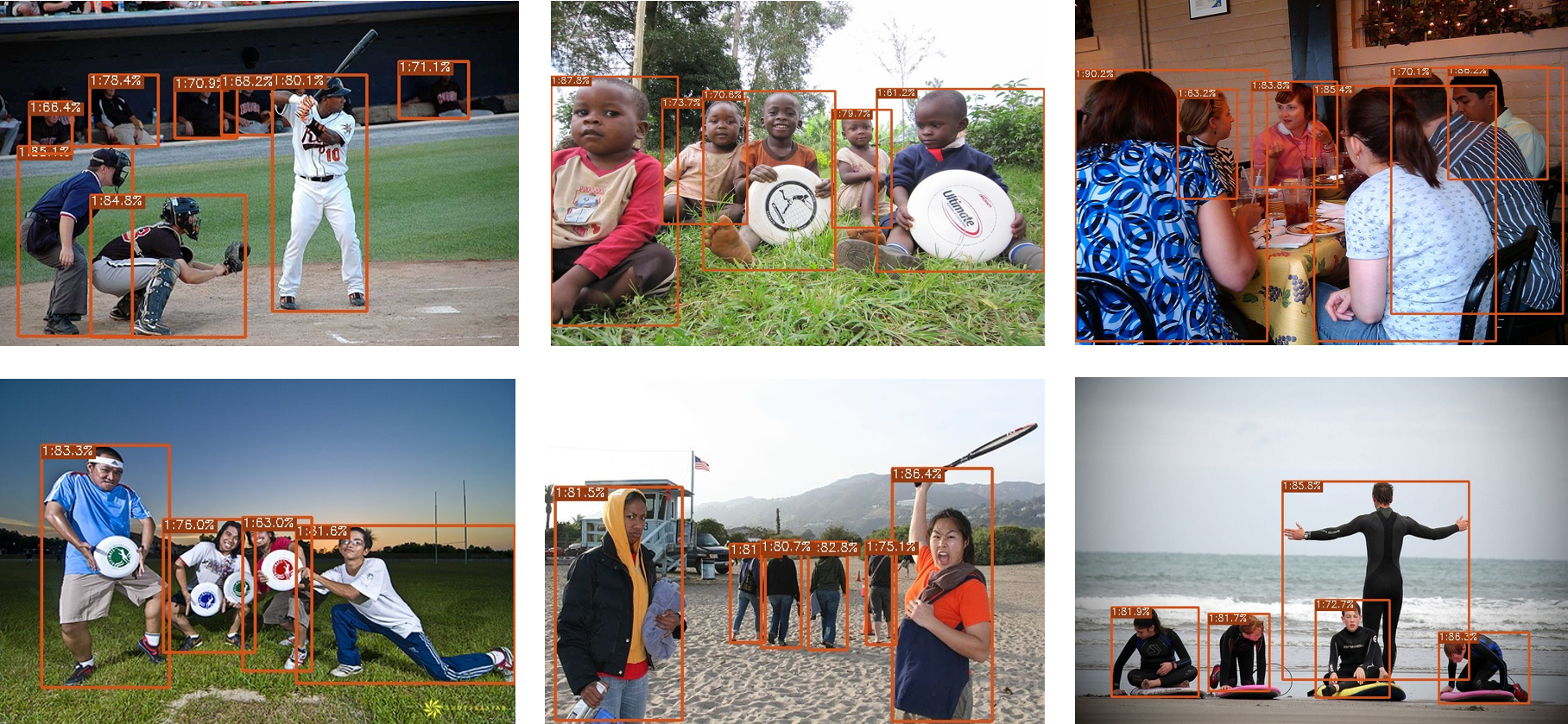

python tools/demo.py -f ./configs/damoyolo_tinynasL25_S.py --engine ./deploy/damoyolo_tinynasL25_S_end2end_fp16_bs1.trt --engine_type tensorRT --conf 0.6 --infer_size 640 640 --device cuda --path ./assets/dog.jpg --end2endWe provide DAMO-YOLO models for applications in real scenarios, which are listed as follows. More powerful models are coming, please stay tuned.

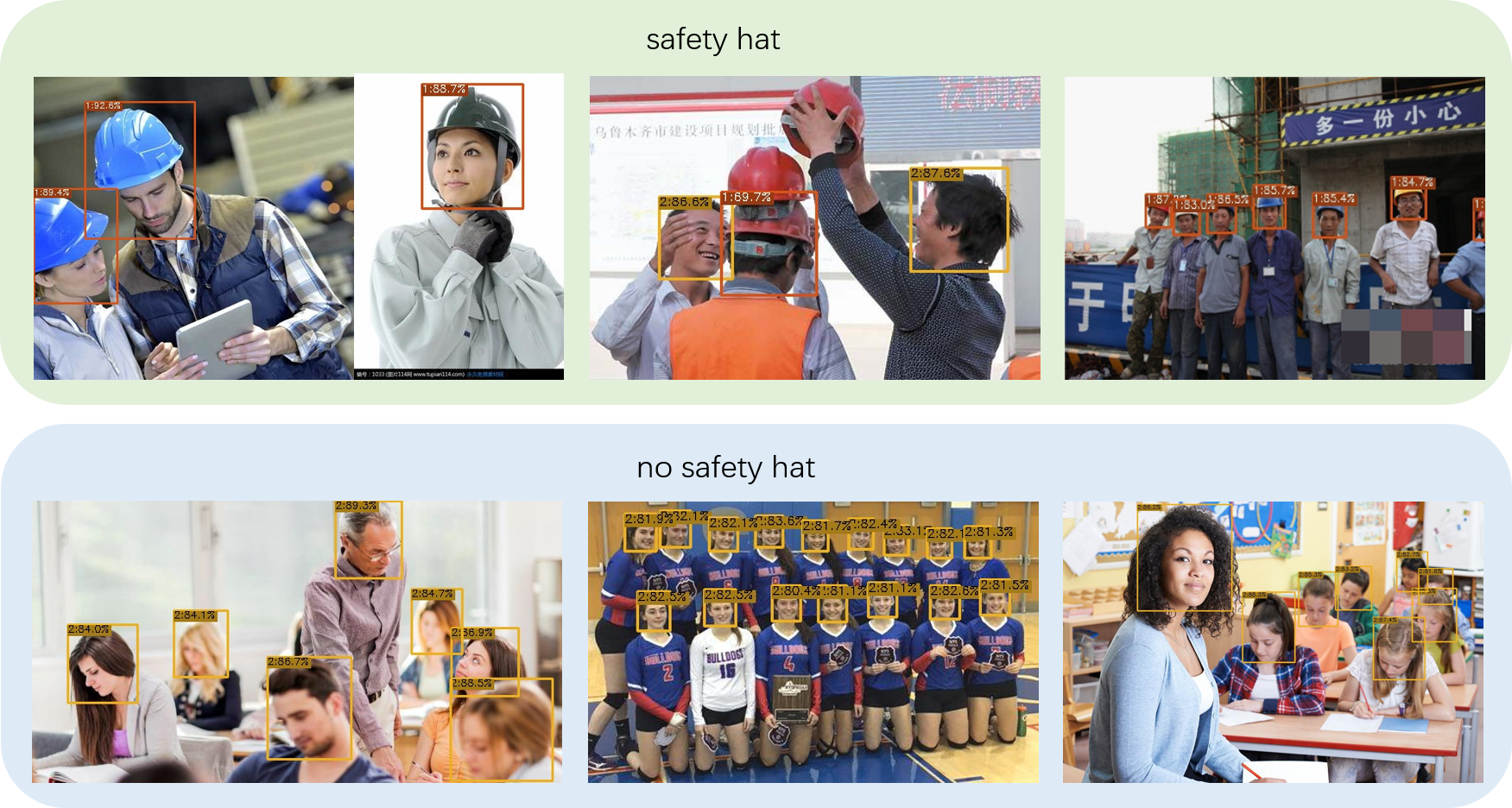

| Human Detection | Helmet Detection |

|---|---|

|

|

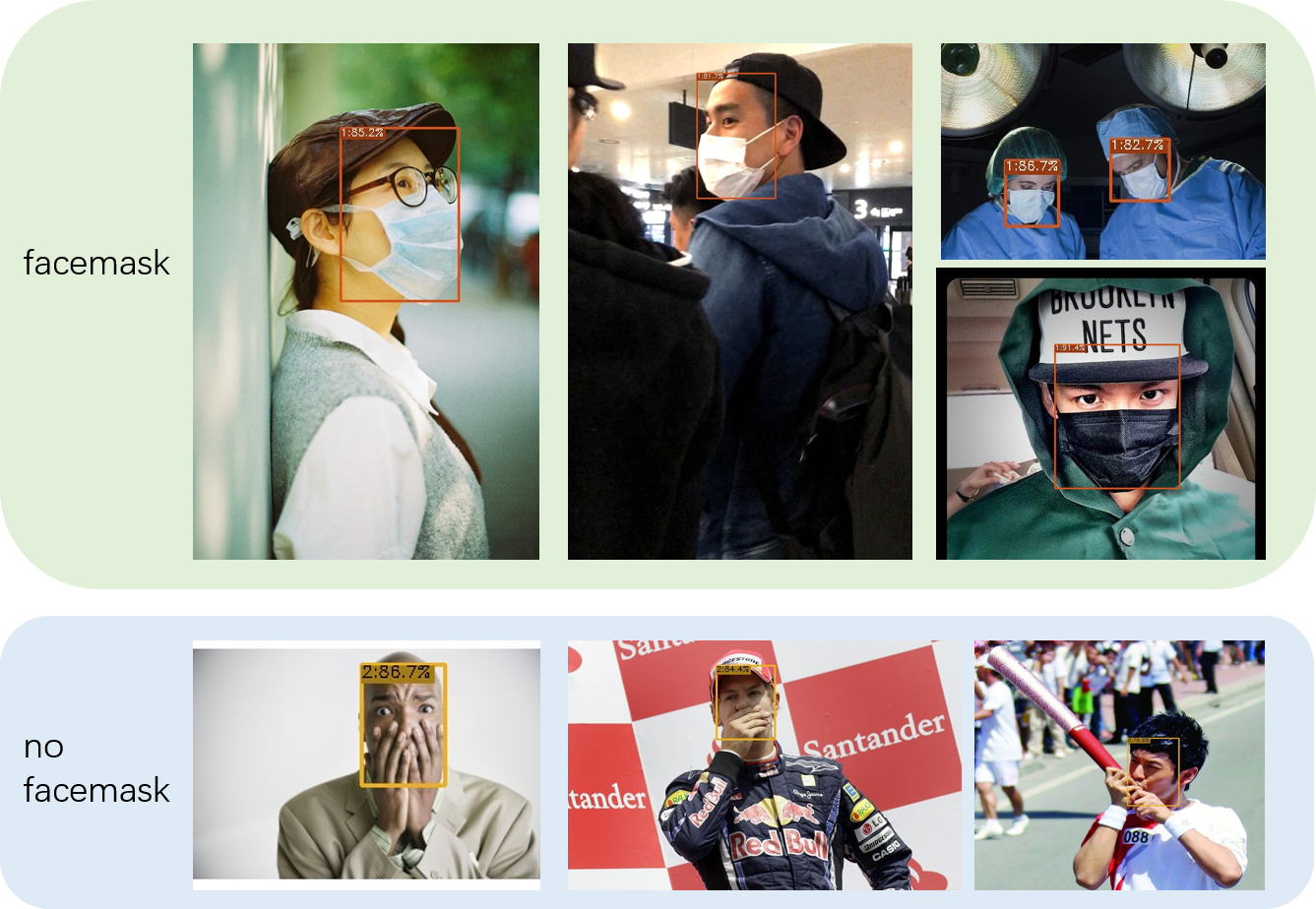

| Facemask Detection | Cigarette Detection |

|

|

To promote communication among DAMO-YOLO users, we collect third-party resources in this section. If you have original content about DAMO-YOLO, please feel free to contact us at xianzhe.xxz@alibaba-inc.com.

- DAMO-YOLO Overview: slides (Chinese | English), videos (Chinese | English).

- DAMO-YOLO Code Interpretation

- Practical Example for Finetuning on Custom Dataset

We are recruiting research interns. If you are interested in object detection, model quantization or NAS, please send your resume to xiuyu.sxy@alibaba-inc.com.

If you use DAMO-YOLO in your research, please cite our work by using the following BibTeX entry:

```bibtex
@article{damoyolo,
  title={DAMO-YOLO: A Report on Real-Time Object Detection Design},
  author={Xianzhe Xu and Yiqi Jiang and Weihua Chen and Yilun Huang and Yuan Zhang and Xiuyu Sun},
  journal={arXiv preprint arXiv:2211.15444v2},
  year={2022},
}
```