Code for the CVPR 2019 paper:

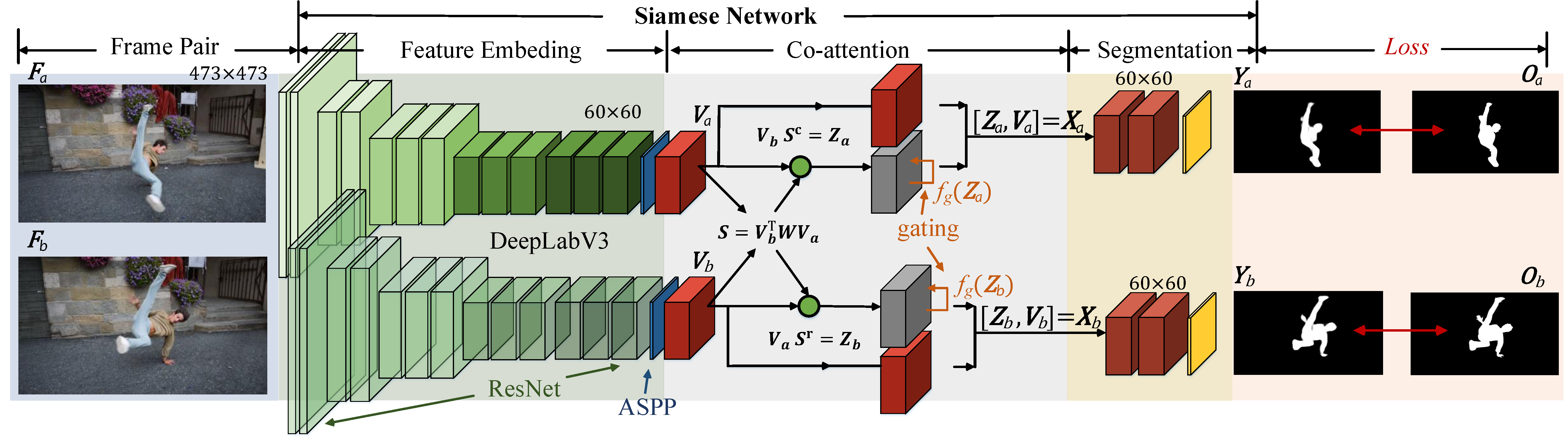

See More, Know More: Unsupervised Video Object Segmentation with Co-Attention Siamese Networks

Xiankai Lu, Wenguan Wang, Chao Ma, Jianbing Shen, Ling Shao, Fatih Porikli

### The pre-trained model and testing code

- Install PyTorch (version 1.0.1).
- Download the pretrained model. In `test_coattention_conf.py`, change the DAVIS dataset path, the pretrained model path, and the result path to match your setup.
- Run the command: `python test_coattention_conf.py --dataset davis --gpus 0`
- CRF post-processing code: https://github.com/lucasb-eyer/pydensecrf
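The run command above passes two flags, `--dataset` and `--gpus`, while the dataset, model, and result paths are edited inside the script. The following is a minimal sketch, assuming the script reads these flags with Python's `argparse`, of how they could drive GPU selection and path setup. The placeholder paths and the names `parse_args`, `configure`, `DATASET_ROOTS`, `PRETRAINED_MODEL` and `RESULT_DIR` are hypothetical and are not taken from `test_coattention_conf.py`.

```python
import argparse
import os

# Hypothetical placeholders: replace with the locations on your machine.
DATASET_ROOTS = {"davis": "/path/to/DAVIS"}
PRETRAINED_MODEL = "/path/to/pretrained_model.pth"
RESULT_DIR = "/path/to/results"


def parse_args(argv=None):
    """Parse the two flags used in the run command (--dataset, --gpus)."""
    parser = argparse.ArgumentParser(description="Co-attention testing (sketch)")
    parser.add_argument("--dataset", default="davis",
                        help="evaluation dataset name, e.g. davis")
    parser.add_argument("--gpus", default="0",
                        help="comma-separated GPU ids, e.g. 0 or 0,1")
    return parser.parse_args(argv)


def configure(args):
    """Select visible GPUs and resolve the dataset, model and result paths."""
    # Only takes effect if set before CUDA is first initialised in this process.
    os.environ["CUDA_VISIBLE_DEVICES"] = args.gpus
    if args.dataset not in DATASET_ROOTS:
        raise ValueError(f"unknown dataset: {args.dataset}")
    return {
        "data_root": DATASET_ROOTS[args.dataset],
        "model_path": PRETRAINED_MODEL,
        # One result sub-folder per dataset keeps outputs separate.
        "result_dir": os.path.join(RESULT_DIR, args.dataset),
    }
```

In a script of this shape you would call `cfg = configure(parse_args())` before the first CUDA call, because `CUDA_VISIBLE_DEVICES` is ignored once CUDA has been initialised.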

The pretrained weights can be downloaded from Google Drive or BaiduPan (pass code: xwup).

The segmentation results on DAVIS, FBMS and YouTube-Objects can be downloaded from Google Drive or BaiduPan (pass code: q37f).

We will release the training code soon.

If you find the code and dataset useful in your research, please consider citing:

    @InProceedings{Lu_2019_CVPR,
      author = {Lu, Xiankai and Wang, Wenguan and Ma, Chao and Shen, Jianbing and Shao, Ling and Porikli, Fatih},
      title = {See More, Know More: Unsupervised Video Object Segmentation With Co-Attention Siamese Networks},
      booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year = {2019}
    }

### Other related projects

- Saliency-Aware Geodesic Video Object Segmentation (CVPR 2015)
- Learning Unsupervised Video Primary Object Segmentation through Visual Attention (CVPR 2019)

For any comments, please email carrierlxk@gmail.com.