Code based on PyTorch-GAN

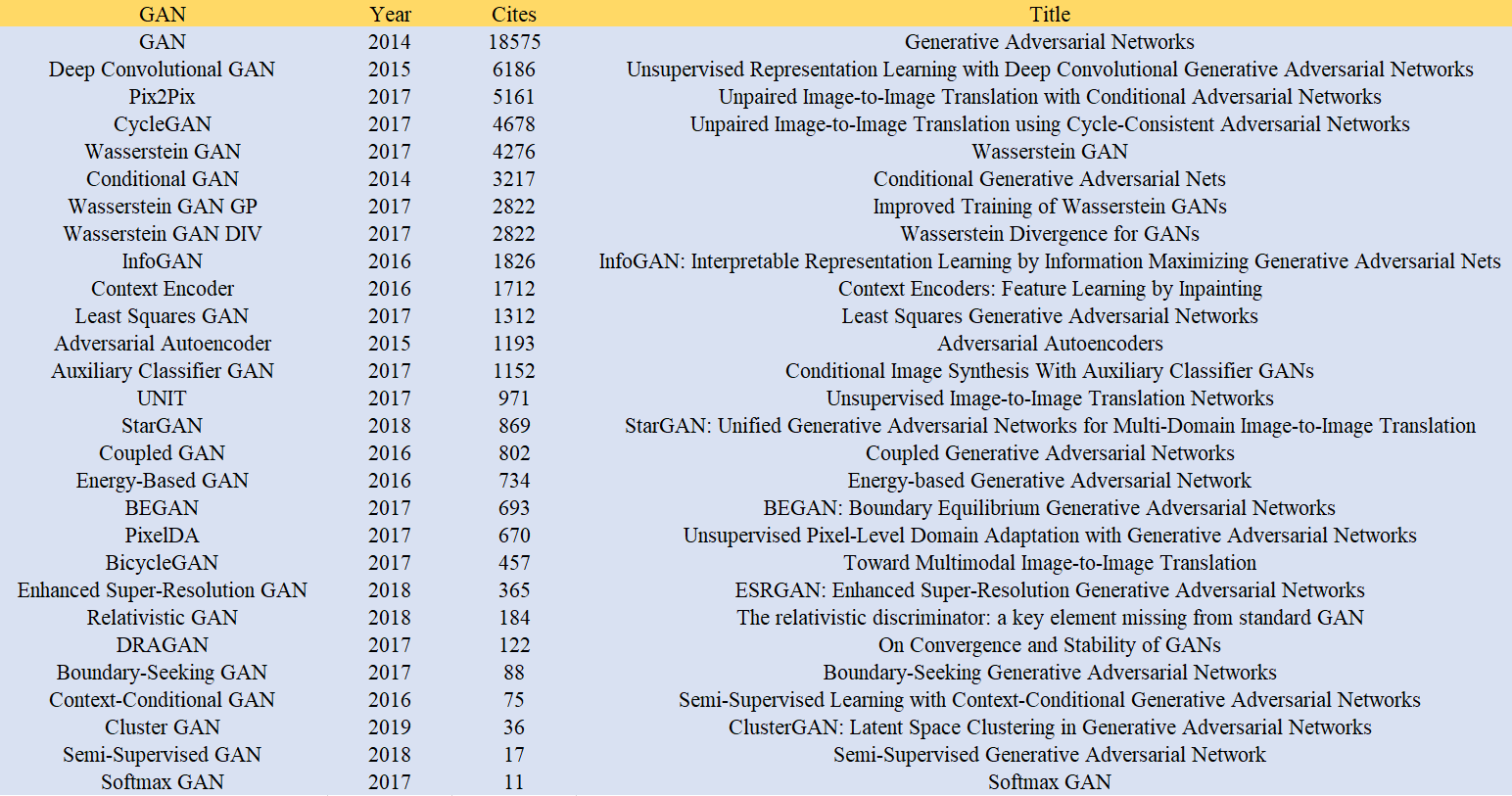

Our GAN model zoo supports 27 kinds of GAN. The table lists the most recent citation counts we found on Google Scholar. Since GANs were proposed in 2014, a great deal of excellent follow-up work has appeared. These 27 GANs have a total of 60953 citations, with an average of 2176 citations per article.

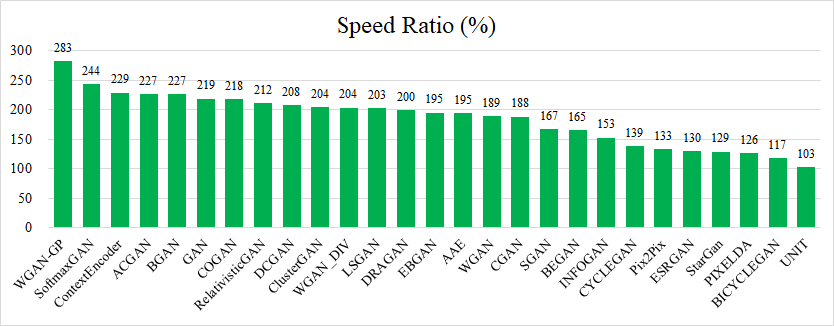

We compared the performance of these GANs in Jittor and PyTorch. The figure below shows the speedup ratio of Jittor relative to PyTorch. The highest speedup among these GANs reaches 283%, and the average speedup is 185%.

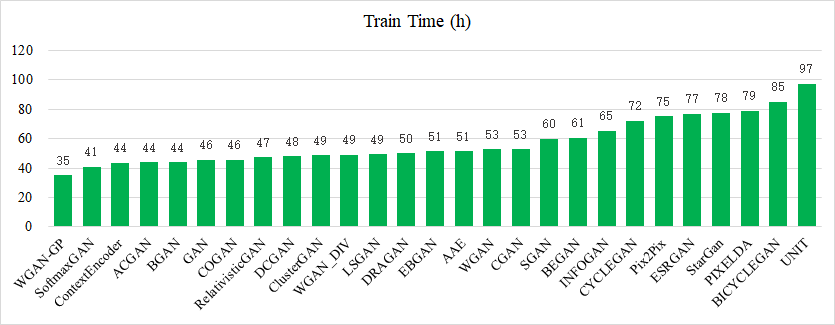

Put another way, taking PyTorch's training time as 100 hours, we calculated the corresponding Jittor training time for each GAN. The GAN with the largest speedup takes only 35 hours, and the average across all GANs is 57 hours.
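The conversion between the two views can be sketched as follows. This is a minimal illustration, assuming the speedup ratio means PyTorch time divided by Jittor time; the function name `jittor_hours` is hypothetical and not part of the repository.

```python
def jittor_hours(speedup_percent, pytorch_hours=100.0):
    """Training time implied by a speedup ratio given in percent.

    Assumption: speedup = PyTorch time / Jittor time, so 200% halves the time.
    """
    if speedup_percent <= 0:
        raise ValueError("speedup_percent must be positive")
    return pytorch_hours / (speedup_percent / 100.0)


fastest = jittor_hours(283)  # highest reported speedup
at_mean = jittor_hours(185)  # time implied by the average speedup
print(f"{fastest:.1f} h, {at_mean:.1f} h")
```

Under this assumption, a 283% speedup corresponds to roughly 35 hours. The reported 57-hour average is presumably the mean of the per-model times, which is never smaller than the time implied by the mean speedup, so the two averages need not match.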

- Installation
- models
  - Auxiliary Classifier GAN
  - Adversarial Autoencoder
  - BEGAN
  - BicycleGAN
  - Boundary-Seeking GAN
  - Cluster GAN
  - Conditional GAN
  - Context Encoder
  - Coupled GAN
  - CycleGAN
  - Deep Convolutional GAN
  - DRAGAN
  - Energy-Based GAN
  - Enhanced Super-Resolution GAN
  - GAN
  - InfoGAN
  - Least Squares GAN
  - Pix2Pix
  - PixelDA
  - Relativistic GAN
  - Semi-Supervised GAN
  - Softmax GAN
  - StarGAN
  - UNIT
  - Wasserstein GAN
  - Wasserstein GAN GP
  - Wasserstein GAN DIV

$ git clone https://github.com/Jittor/gan-jittor.git

$ cd gan-jittor/

$ sudo python3.7 -m pip install -r requirements.txt

Auxiliary Classifier Generative Adversarial Network

Augustus Odena, Christopher Olah, Jonathon Shlens

$ cd models/acgan/

$ python3.7 acgan.py

Adversarial Autoencoder

Alireza Makhzani, Jonathon Shlens, Navdeep Jaitly, Ian Goodfellow, Brendan Frey

$ cd models/aae/

$ python3.7 aae.py

BEGAN: Boundary Equilibrium Generative Adversarial Networks

David Berthelot, Thomas Schumm, Luke Metz

$ cd models/began/

$ python3.7 began.py

Toward Multimodal Image-to-Image Translation

Jun-Yan Zhu, Richard Zhang, Deepak Pathak, Trevor Darrell, Alexei A. Efros, Oliver Wang, Eli Shechtman

$ cd data/

$ bash download_pix2pix_dataset.sh edges2shoes

$ cd ../models/bicyclegan/

$ python3.7 bicyclegan.py

Various style translations by varying the latent code.

Boundary-Seeking Generative Adversarial Networks

R Devon Hjelm, Athul Paul Jacob, Tong Che, Adam Trischler, Kyunghyun Cho, Yoshua Bengio

$ cd models/bgan/

$ python3.7 bgan.py

ClusterGAN: Latent Space Clustering in Generative Adversarial Networks

Sudipto Mukherjee, Himanshu Asnani, Eugene Lin, Sreeram Kannan

$ cd models/cluster_gan/

$ python3.7 clustergan.py

Conditional Generative Adversarial Nets

Mehdi Mirza, Simon Osindero

$ cd models/cgan/

$ python3.7 cgan.py

Context Encoders: Feature Learning by Inpainting

Deepak Pathak, Philipp Krahenbuhl, Jeff Donahue, Trevor Darrell, Alexei A. Efros

$ cd models/context_encoder/

<follow steps at the top of context_encoder.py>

$ python3.7 context_encoder.py

Rows: Masked | Inpainted | Original | Masked | Inpainted | Original

Coupled Generative Adversarial Networks

Ming-Yu Liu, Oncel Tuzel

Download mnistm.pkl from https://cloud.tsinghua.edu.cn/f/d9a411da271745fcbe1f/?dl=1 and save it as data/mnistm/mnistm.pkl

$ cd models/cogan/

$ python3.7 cogan.py

Generated MNIST and MNIST-M images
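The manual download of `mnistm.pkl` (needed here and by PixelDA) could also be scripted. The sketch below uses only the Python standard library; the helper name `fetch` is hypothetical and not part of the repository, and the destination path assumes the script is run from the repository root.

```python
import urllib.request
from pathlib import Path

# URL taken from the download instructions in this README.
MNISTM_URL = "https://cloud.tsinghua.edu.cn/f/d9a411da271745fcbe1f/?dl=1"


def fetch(url, dest):
    """Download url to dest, creating parent directories; skip if dest exists."""
    dest = Path(dest)
    if dest.exists():
        return dest
    dest.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(url, str(dest))
    return dest


# Usage, from the repository root:
# fetch(MNISTM_URL, "data/mnistm/mnistm.pkl")
```

Skipping the download when the file already exists keeps repeated runs cheap, at the cost of not detecting a partial or corrupted file.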

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros

$ cd data/

$ bash download_cyclegan_dataset.sh monet2photo

$ cd ../models/cyclegan/

$ python3.7 cyclegan.py --dataset_name monet2photo

Monet to photo translations.

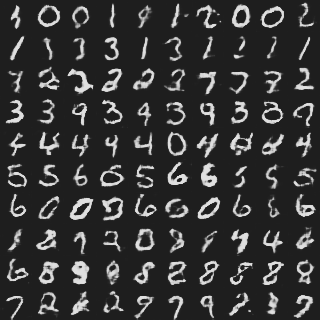

Deep Convolutional Generative Adversarial Network

Alec Radford, Luke Metz, Soumith Chintala

$ cd models/dcgan/

$ python3.7 dcgan.py

On Convergence and Stability of GANs

Naveen Kodali, Jacob Abernethy, James Hays, Zsolt Kira

$ cd models/dragan/

$ python3.7 dragan.py

Energy-based Generative Adversarial Network

Junbo Zhao, Michael Mathieu, Yann LeCun

$ cd models/ebgan/

$ python3.7 ebgan.py

ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks

Xintao Wang, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Chen Change Loy, Yu Qiao, Xiaoou Tang

$ cd models/esrgan/

<follow steps at the top of esrgan.py>

$ python3.7 esrgan.py

Generative Adversarial Network

Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio

$ cd models/gan/

$ python3.7 gan.py

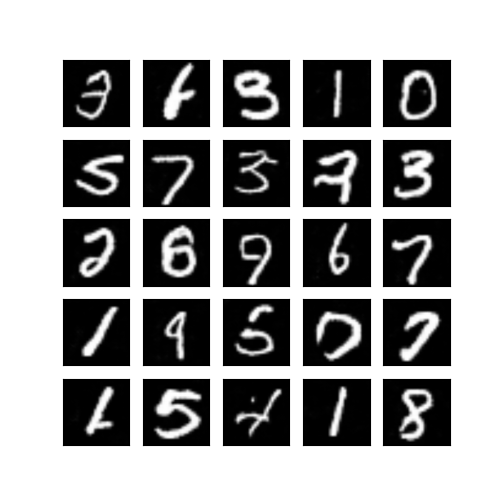

InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets

Xi Chen, Yan Duan, Rein Houthooft, John Schulman, Ilya Sutskever, Pieter Abbeel

$ cd models/infogan/

$ python3.7 infogan.py

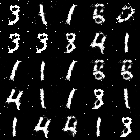

Result of varying continuous latent variable by row.

Least Squares Generative Adversarial Networks

Xudong Mao, Qing Li, Haoran Xie, Raymond Y.K. Lau, Zhen Wang, Stephen Paul Smolley

$ cd models/lsgan/

$ python3.7 lsgan.py

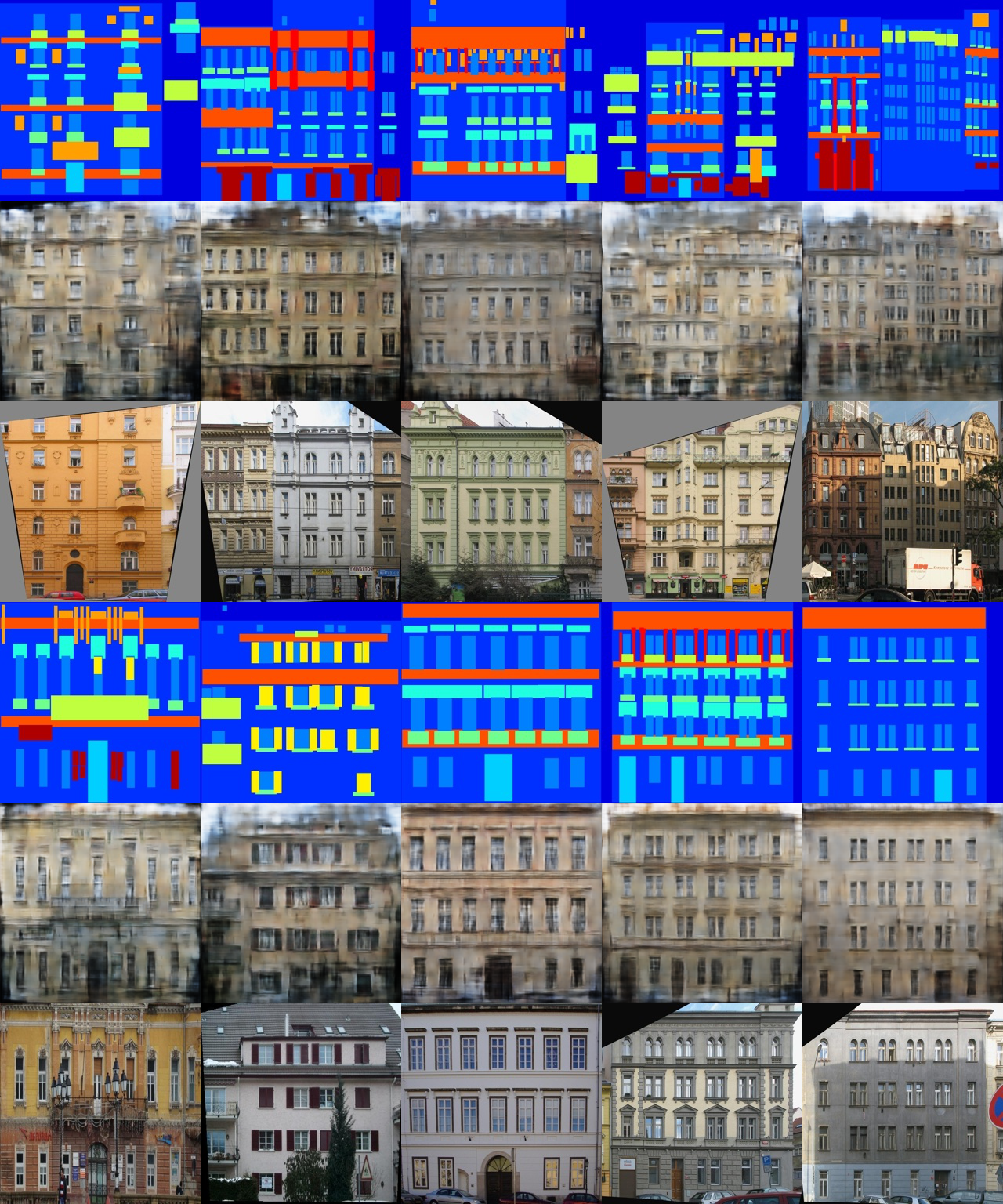

Image-to-Image Translation with Conditional Adversarial Networks

Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, Alexei A. Efros

$ cd data/

$ bash download_pix2pix_dataset.sh facades

$ cd ../models/pix2pix/

$ python3.7 pix2pix.py --dataset_name facades

Rows from top to bottom: (1) The condition for the generator (2) Generated image based on the condition (3) The true image corresponding to the condition

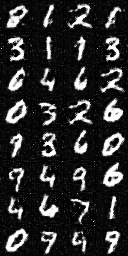

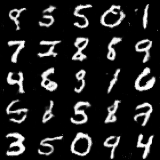

Unsupervised Pixel-Level Domain Adaptation with Generative Adversarial Networks

Konstantinos Bousmalis, Nathan Silberman, David Dohan, Dumitru Erhan, Dilip Krishnan

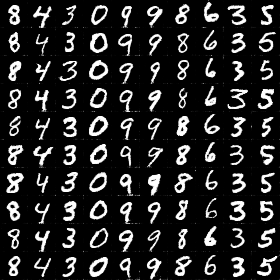

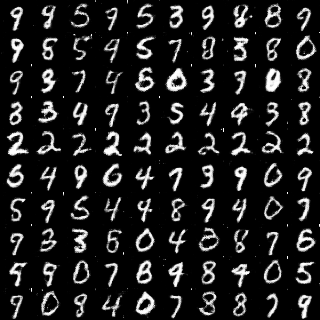

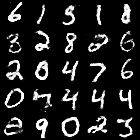

Trains a classifier on images that have been translated from the source domain (MNIST) to the target domain (MNIST-M) using the annotations of the source domain images. The classification network is trained jointly with the generator network to optimize the generator for both providing a proper domain translation and also for preserving the semantics of the source domain image. The classification network trained on translated images is compared to the naive solution of training a classifier on MNIST and evaluating it on MNIST-M. The naive model manages a 55% classification accuracy on MNIST-M while the one trained during domain adaptation achieves a 95% classification accuracy.
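The joint training described above can be pictured as a generator objective that adds a classification (task) term to the adversarial term. The sketch below is only an illustration in plain Python: the function names and the weights `lambda_adv` and `lambda_task` are hypothetical and are not taken from `pixelda.py`.

```python
import math


def cross_entropy(probs, label):
    """Negative log-likelihood of the true label under predicted probabilities."""
    return -math.log(probs[label])


def generator_objective(adv_loss, probs_translated, label,
                        lambda_adv=1.0, lambda_task=0.1):
    """Weighted sum of the adversarial loss and the classifier's task loss.

    The weights are illustrative assumptions, not the repository's values.
    """
    task_loss = cross_entropy(probs_translated, label)
    return lambda_adv * adv_loss + lambda_task * task_loss


# A translated digit that the classifier still recognises adds little loss ...
kept = generator_objective(0.3, [0.05, 0.9, 0.05], label=1)
# ... while one whose class was distorted by the translation is penalised.
lost = generator_objective(0.3, [0.6, 0.2, 0.2], label=1)
print(kept < lost)
```

With the adversarial term held equal, the translation that preserves the digit's class yields the lower objective, which is how the task term pushes the generator to keep the semantics of the source image.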

Download mnistm.pkl from https://cloud.tsinghua.edu.cn/f/d9a411da271745fcbe1f/?dl=1 and save it as data/mnistm/mnistm.pkl

$ cd models/pixelda/

$ python3.7 pixelda.py

Rows from top to bottom: (1) Real images from MNIST (2) Translated images from MNIST to MNIST-M (3) Examples of images from MNIST-M

The relativistic discriminator: a key element missing from standard GAN

Alexia Jolicoeur-Martineau

$ cd models/relativistic_gan/

$ python3.7 relativistic_gan.py # Relativistic Standard GAN

$ python3.7 relativistic_gan.py --rel_avg_gan # Relativistic Average GAN

Semi-Supervised Generative Adversarial Network

Augustus Odena

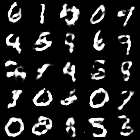

$ cd models/sgan/

$ python3.7 sgan.py

Softmax GAN

Min Lin

$ cd models/softmax_gan/

$ python3.7 softmax_gan.py

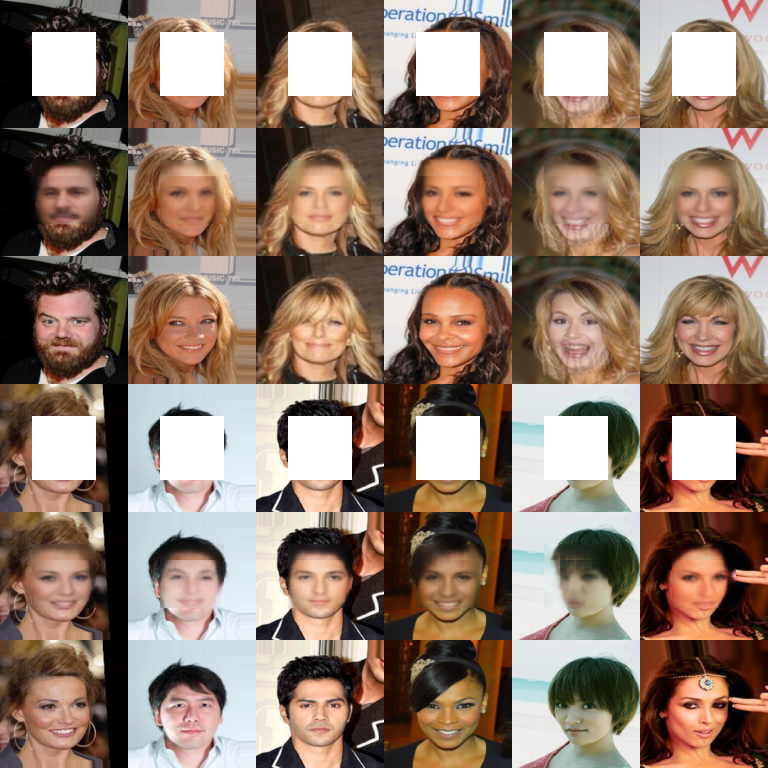

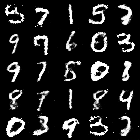

StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation

Yunjey Choi, Minje Choi, Munyoung Kim, Jung-Woo Ha, Sunghun Kim, Jaegul Choo

$ cd models/stargan/

<follow steps at the top of stargan.py>

$ python3.7 stargan.py

Original | Black Hair | Blonde Hair | Brown Hair | Gender Flip | Aged

Unsupervised Image-to-Image Translation Networks

Ming-Yu Liu, Thomas Breuel, Jan Kautz

$ cd data/

$ bash download_cyclegan_dataset.sh apple2orange

$ cd ../models/unit/

$ python3.7 unit.py --dataset_name apple2orange

Wasserstein GAN

Martin Arjovsky, Soumith Chintala, Léon Bottou

$ cd models/wgan/

$ python3.7 wgan.py

Improved Training of Wasserstein GANs

Ishaan Gulrajani, Faruk Ahmed, Martin Arjovsky, Vincent Dumoulin, Aaron Courville

$ cd models/wgan_gp/

$ python3.7 wgan_gp.py

Wasserstein Divergence for GANs

Jiqing Wu, Zhiwu Huang, Janine Thoma, Dinesh Acharya, Luc Van Gool

$ cd models/wgan_div/

$ python3.7 wgan_div.py