This script is designed to validate Alpaca data using OpenAI GPT models, such as text-davinci-003, gpt-3.5-turbo, and gpt-4. The primary goal is to check if the provided outputs are correct, given the instructions and input data. The script reads the data, sends prompts to the GPT API, and saves the results, making it a valuable tool for quality assurance and data validation.

- Automating data validation: This script helps to automate the process of validating large amounts of data using OpenAI GPT models.

- Quality assurance: By leveraging the power of GPT models, you can ensure the data you're working with is accurate and reliable.

- Save time and resources: Manual data validation can be a time-consuming and error-prone process. This script allows you to offload some of that work to GPT models, freeing up your resources for other tasks.

- Install the required packages listed in the requirements.txt file.

- Create a config_check_with_gpt file with the required parameters, such as input and output paths, GPT model, and API key.

- Make sure the input data is in the correct format (JSON).

- Run the script using the command python check_with_gpt.py.

The script will then read the input data, send prompts to the specified GPT model, and save the results in a CSV file. You can review the generated file to analyze the GPT model's responses and validate the data.

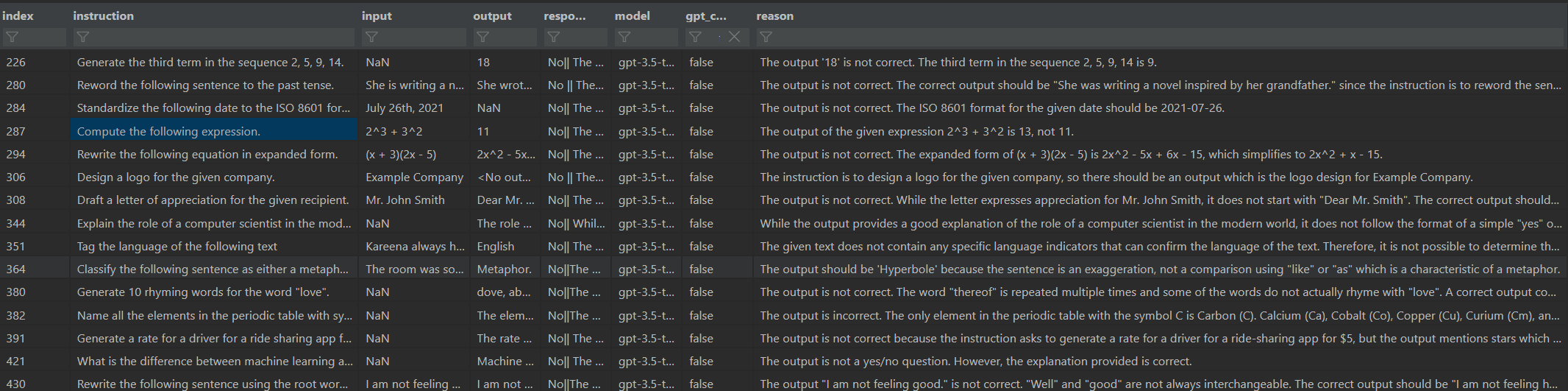

This is an example of the output file generated by the script:

This script is designed to work with the OpenAI GPT models, but it can be adapted to work with other language model APIs. To modify the script for another API, follow these steps:

- Replace the openai import statement with the appropriate import statement for the new API.

- Update the my_secrets.OPENAI_API_KEY variable with the API key for the new service.

- Modify the openai_gpt function to use the new API's request format and response handling. This may include updating the API endpoint, input parameters, and the way the response is parsed.

- Update the Config class and config.ini file to include any additional parameters required by the new API.

- Ensure that any API-specific constants or model names are updated to reflect the new service.

- Test the script with the new API to ensure it is functioning correctly and producing the desired output.

By following these steps, you can adapt the script to work with different language model APIs, allowing for greater flexibility and the ability to use the most suitable language model for your specific use case.

Note: When adapting the script for other APIs, be sure to thoroughly read and understand the new API's documentation to ensure you are using it correctly and efficiently. Additionally, be aware of any rate limits, usage restrictions, or costs associated with the new API to prevent unexpected issues or expenses.