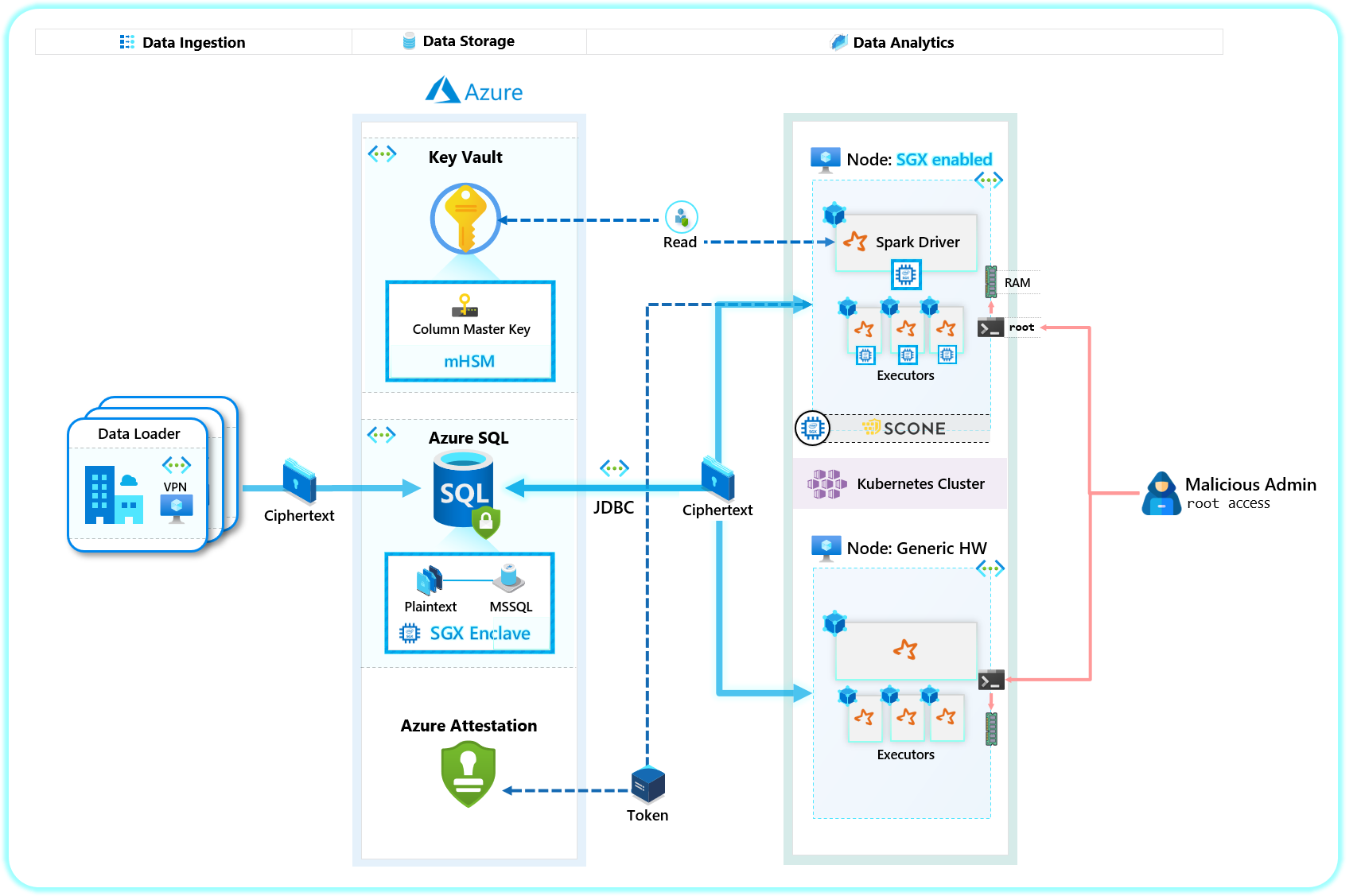

This repository demonstrates the following architecture for running containerized Confidential Analytics applications on SGX-enabled Azure machines (AKS or standalone):

💡 Confidential analytics in this context means: "run analytics on PII data with peace of mind against data exfiltration". This includes a potential root-level access breach, whether internal (a rogue admin) or external (a system compromise).

The goal is to demonstrate how to run end-to-end Confidential Analytics on Azure (for example, on PII data), using Azure SQL Always Encrypted with Secure Enclaves as the database and containerized Apache Spark on SGX-enabled Azure machines for the analytics workloads.


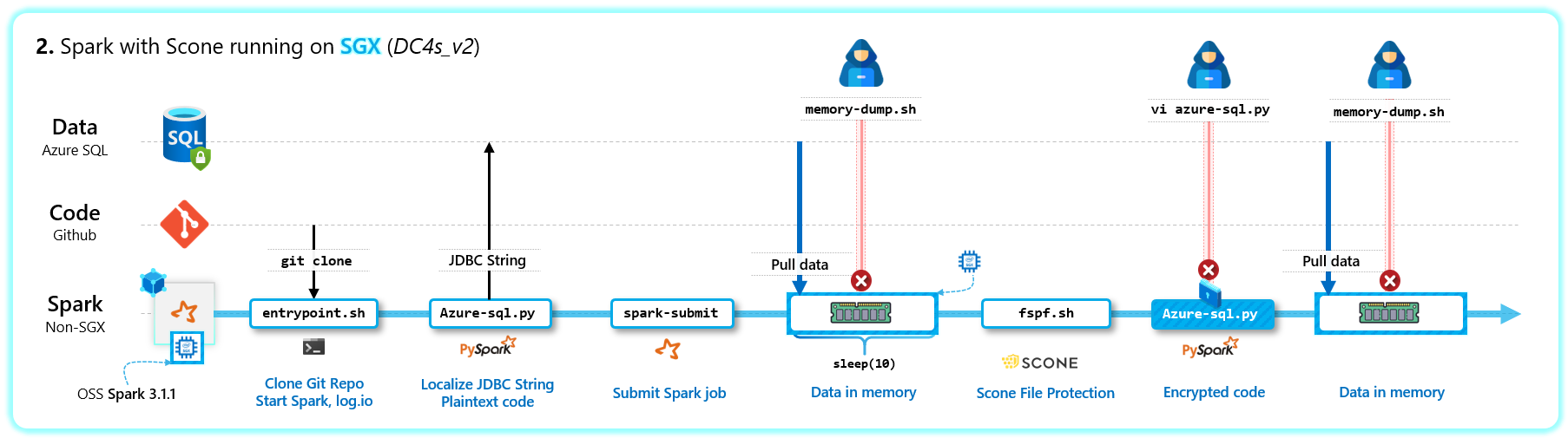

- Azure DC Series: We run a containerized Spark 3.1.1 application on an Azure DC4s_v2 machine running Docker. These machines are backed by the latest generation of Intel Xeon E-2288G processors with SGX extensions, the key component that enables the core message of this demo.

  - 💡 Note: This demo works equally well on AKS with DC4s_v2 nodes. We run it on a standalone node for the additional benefit of RDP during demonstrations, which `kubectl` doesn't allow as easily.

- SCONE: To run Spark inside an SGX enclave, we use SCONE. SCONE has taken the open-source Spark code and wrapped it with its runtime so that Spark can run inside SGX enclaves, a task that requires deep knowledge of the SGX ecosystem, which is SCONE's area of expertise.

  - Introduction: Here's a fantastic video on SCONE from one of its founders, Professor Christof Fetzer:

  - Deep dive: If you're looking for deeper material on SCONE, here's an academic paper describing the underlying mechanics: link

  - Deep dive w/ commentary: An entertaining walkthrough of the above paper by Jessie Frazelle, also a leader in the Confidential Computing space.

  - Sconedocs: SCONE's official documentation for getting started: link

- Access to the container registry hosting the two Spark images (one per scenario): #TODO

- Follow the tutorial here to deploy an Azure SQL Always Encrypted with Secure Enclaves database with some sample PII data: link

- A DC4s_v2 VM deployment (standalone or in an AKS cluster)

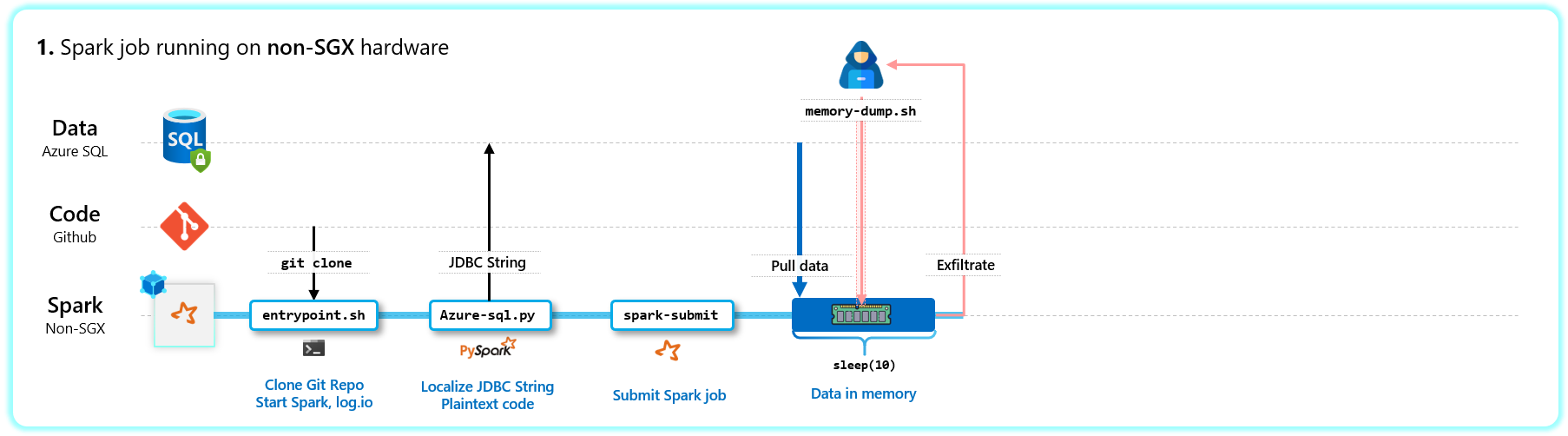

- Scenario 1 (baseline) can run on any machine, including the DC4s_v2 machine. I run it on my Surface Laptop 3.

- Scenario 2 will not work without SGX, i.e. it must run on an Azure DC-series machine. You can install Xfce (or a similar desktop environment) to get a desktop over RDP.
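Since Scenario 2 depends on SGX, a quick pre-check can save a failed run. Below is a minimal sketch that looks for the `sgx` CPU flag, assuming a Linux kernel recent enough to report SGX in `/proc/cpuinfo`. The function name `has_sgx_flag` is hypothetical and not part of this repository. Note that a present flag does not by itself prove the SGX driver and the `/dev/sgx` device mounted in Scenario 2 are set up.

```sh
#!/bin/sh
# Hypothetical helper (not part of this repo): succeeds if the CPU flags list
# contains the whole word "sgx". Assumes a kernel that reports SGX in
# /proc/cpuinfo; the optional file argument makes the check testable.
has_sgx_flag() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # -w: match "sgx" as a whole word, so "sgx_lc" alone does not count
    # -s: stay quiet if the file does not exist (e.g. on non-Linux hosts)
    grep -qsw 'sgx' "$cpuinfo"
}

if has_sgx_flag; then
    echo "SGX flag found: Scenario 2 may be possible on this machine"
else
    echo "No SGX flag found: run only Scenario 1 (baseline) here"
fi
```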

Setup (Scenario 1)

- RDP into the VM, or log in to your laptop

- Navigate to `http://localhost:28778/` (log.io) and `http://localhost:8080/` (Spark Web UI)

Execution steps

```sh
# Run the container (the remaining commands run inside it)
docker run -it --rm --name "sgx_pyspark_sql" --privileged -p 8080:8080 -p 6868:6868 -p 28778:28778 aiaacireg.azurecr.io/scone/sgx-pyspark-sql sh

# Explore the Docker entrypoint to see what runs at startup
vi /usr/local/bin/docker-entrypoint.sh
```

```sh
# Replace the JDBC endpoint before running the job
vi input/code/azure-sql.py
# E.g. jdbc:sqlserver://your--server--name.database.windows.net:1433;database=ContosoHR;user=your--username@your--server--name;password=your--password;
```
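The example URL above follows a fixed pattern, so it can be assembled from its parts rather than edited by hand. A minimal sketch, assuming the Scenario 1 format shown in the comment (Azure SQL host name and a `user@server` login); `build_jdbc_url` and its argument order are hypothetical:

```sh
#!/bin/sh
# Hypothetical helper (not part of this repo): assemble the plain JDBC URL used
# in Scenario 1 from its four parts, following the example format above.
# Values containing ';' would need escaping (the SQL Server JDBC driver accepts
# such values wrapped in {braces}); this sketch does not handle that.
build_jdbc_url() {
    server="$1"; database="$2"; user="$3"; password="$4"
    printf 'jdbc:sqlserver://%s.database.windows.net:1433;database=%s;user=%s@%s;password=%s;\n' \
        "$server" "$database" "$user" "$server" "$password"
}
```

Calling `build_jdbc_url your--server--name ContosoHR your--username your--password` prints exactly the example URL from the comment above, ready to paste into `input/code/azure-sql.py`.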

```sh
# Scenario 1: SCONE: Off | Data: Encrypted | Code: Plaintext
############################################################

# Run the Spark job in the background, appending its output to output.txt
/spark/bin/spark-submit --driver-class-path input/libraries/mssql-jdbc-9.2.1.jre8.jar input/code/azure-sql.py >> output.txt 2>&1 &

# Test the memory attack
./memory-dump.sh
```
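The contents of `memory-dump.sh` are not shown here. As a hypothetical illustration of the kind of attack it tests (this is NOT that script), a user with root privileges could dump the Spark driver's memory with gdb's `gcore` and search the dump for a string that should stay secret, such as the database password from the JDBC URL. The helper names, the `pgrep` pattern, and the availability of `gcore` are all assumptions:

```sh
#!/bin/sh
# Hypothetical sketch (NOT the repository's memory-dump.sh): scrape a running
# process's memory for a known secret. Assumes root privileges and that gdb's
# gcore is installed.

# PID of the Spark driver; assumption: its command line contains "azure-sql.py".
# If several processes match (e.g. the JVM and its Python worker), take the first.
spark_driver_pid() {
    pgrep -f 'azure-sql.py' | head -n 1
}

# Write a core dump of <pid> to "<prefix>.<pid>" (gcore's file naming).
dump_process_memory() {
    gcore -o "$2" "$1" >/dev/null 2>&1
}

# Print how many times <needle> occurs verbatim in <file>, treating binary as text.
count_secret_hits() {
    grep -a -o -F -- "$2" "$1" | wc -l | tr -d ' '
}

# Example usage (SECRET is a placeholder for a value you expect to be protected):
#   pid=$(spark_driver_pid)
#   dump_process_memory "$pid" /tmp/spark-driver
#   count_secret_hits "/tmp/spark-driver.$pid" "$SECRET"
```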

Setup (Scenario 2)

- RDP into the Azure DC VM (requires Xfce or a similar desktop environment)

- Navigate to `http://localhost:6688/#{%221618872518526%22:[%22spark|SCONE-PySpark%22]}` (log.io, newer version) and `http://localhost:8080/` (Spark Web UI)

Execution steps

```sh
# Elevate to superuser for Docker
sudo su -

# Set the SGX device mount for docker; for more information, see https://sconedocs.github.io/sgxinstall/
export MOUNT_SGXDEVICE="-v /dev/sgx/:/dev/sgx"

# Run the container (the remaining commands run inside it)
docker run $MOUNT_SGXDEVICE -it --rm --name "sgx_pyspark_3" --privileged -p 8080:8080 -p 6688:6688 aiaacireg.azurecr.io/scone/sgx-pyspark-3 sh
```
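The `MOUNT_SGXDEVICE` value above assumes the `/dev/sgx/` device layout. Below is a sketch in the spirit of the device detection described in the SCONE docs linked in the comment, choosing the docker flag from whichever SGX device exists. The function name, the legacy `/dev/isgx` fallback flag, and the message text are assumptions to verify against those docs:

```sh
#!/bin/sh
# Sketch (assumption, modelled on the SCONE sgxinstall docs): print the docker
# flags that expose the host's SGX device to a container.
# The optional argument is a directory prefix, used only to test against a fake /dev.
sgx_mount_flags() {
    root="${1:-}"
    if [ -d "$root/dev/sgx" ]; then
        # DCAP-style driver: a /dev/sgx/ directory (the layout used in this README)
        printf '%s\n' "-v /dev/sgx/:/dev/sgx"
    elif [ -c "$root/dev/isgx" ]; then
        # Legacy out-of-tree driver: a single character device
        printf '%s\n' "--device=/dev/isgx"
    else
        printf '%s\n' "No SGX device found; Scenario 2 needs an SGX-capable DC-series VM" >&2
        return 1
    fi
}

# Only export the variable when a device was actually found
if MOUNT_SGXDEVICE=$(sgx_mount_flags); then
    export MOUNT_SGXDEVICE
fi
```

Run as root before `docker run`, this replaces the hard-coded `export`; on a machine without SGX it prints a warning and leaves `MOUNT_SGXDEVICE` unset.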

```sh
# Explore the Docker entrypoint to see what runs at startup
vi /usr/local/bin/docker-entrypoint.sh

# Replace the JDBC endpoint before running the job (now including the Always Encrypted and attestation settings)
vi input/code/azure-sql.py
# E.g. jdbc:sqlserver://your--server--name.database.windows.net:1433;database=ContosoHR;user=your--username@your--server--name;password=your--password;columnEncryptionSetting=enabled;enclaveAttestationUrl=https://your--attestation--url.eus.attest.azure.net/attest/SgxEnclave;enclaveAttestationProtocol=AAS;keyVaultProviderClientId=your--sp--id;keyVaultProviderClientKey=your--sp--secret;
```
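The Scenario 2 connection string differs from the Scenario 1 one mainly in its Always Encrypted properties. A small pre-flight check can catch a URL that is missing any of the properties shown in the example above before the job is submitted. This is a sketch; the function name `check_ae_properties` is hypothetical and the property list is taken only from that example:

```sh
#!/bin/sh
# Hypothetical pre-flight check (not part of this repo): verify that a JDBC URL
# carries every Always Encrypted property used in the Scenario 2 example above.
# Prints each missing property and returns non-zero if any are missing.
check_ae_properties() {
    url="$1"
    missing=0
    for prop in columnEncryptionSetting=enabled enclaveAttestationUrl= \
                enclaveAttestationProtocol= keyVaultProviderClientId= \
                keyVaultProviderClientKey=; do
        # Prefix with ';' so every property is matched at a ';' boundary
        case ";$url" in
            *";$prop"*) ;;
            *) printf 'missing: %s\n' "$prop"; missing=1 ;;
        esac
    done
    return "$missing"
}
```

For example, `check_ae_properties "$URL" || echo "fix the URL in input/code/azure-sql.py"` lists what to add before running `spark-submit`.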

```sh
# Scenario 2 (part 1): SCONE: On | Data: CMK (column master key) protected | Code: Plaintext
###############################################################

# Run the Spark job with the shaded JDBC driver
/spark/bin/spark-submit --driver-class-path input/libraries/mssql-jdbc-9.2.0.jre8-shaded.jar input/code/azure-sql.py >> output.txt 2>&1 &

# Test the memory attack
./memory-dump.sh

# Scenario 2 (part 2): SCONE: On | Data: CMK protected | Code: Encrypted
###############################################################

# Show that there is no encrypted code yet
tree

# Encrypt the code using a SCONE file system protection file (FSPF)
source ./fspf.sh

# Show the encrypted code
tree
vi encrypted-files/azure-sql.py
```
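Viewing `encrypted-files/azure-sql.py` in `vi` shows the effect of `fspf.sh` by eye. A scripted version of the same check is sketched below. It relies on a simple heuristic (readable PySpark source contains `import` lines), and the helper name `looks_like_python` is hypothetical:

```sh
#!/bin/sh
# Hypothetical helper (not part of this repo): succeed if a file still looks
# like readable Python source. Heuristic assumption: plaintext PySpark code has
# at least one "import ..." or "from ... import" line.
looks_like_python() {
    grep -qsE '^[[:space:]]*(import|from)[[:space:]]' "$1"
}

for f in input/code/azure-sql.py encrypted-files/azure-sql.py; do
    if looks_like_python "$f"; then
        echo "$f: readable Python source"
    else
        echo "$f: not readable as Python (encrypted or missing)"
    fi
done
```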

```sh
# Run the Spark job on the encrypted code
/spark/bin/spark-submit --driver-class-path input/libraries/mssql-jdbc-9.2.0.jre8-shaded.jar encrypted-files/azure-sql.py >> output.txt 2>&1 &

# Test the memory attack
./memory-dump.sh
```