This repo contains the submissions and related material from team "Think Like Car" for Term 3, Project 3 ("System Integration") of the Udacity Self-Driving Car Nanodegree program.

The main goal of the project is to write the ROS nodes needed to run the code successfully on Carla, Udacity's self-driving car. The code is tested first in the simulator and then on the car. The key implementations are:

- Detection of Traffic lights - RED, YELLOW, GREEN

- Stopping car on RED, moving on GREEN

- Sending drive-by-wire control commands for throttle, brake, and steering so that the car can be controlled and is able to move.

| Member | Email |
|---|---|
| Sulabh Matele | sulabhmatele@gmail.com |
| Clint Adams | clintonadams23@gmail.com |
| Sunil Prakash | prakashsunil@gmail.com |
| Ankit Jain | asj.ankit@gmail.com |
| Frank Xia | tyxia2004@gmail.com |

| Description | |
|---|---|
| Car stopping on RED | |
| Car resumes moving on GREEN | |

Here we use ROS nodes to implement the core functionality of the autonomous vehicle system, including traffic light detection, control, and waypoint following. The code was tested in a simulator and later run on Carla, Udacity's own self-driving car.

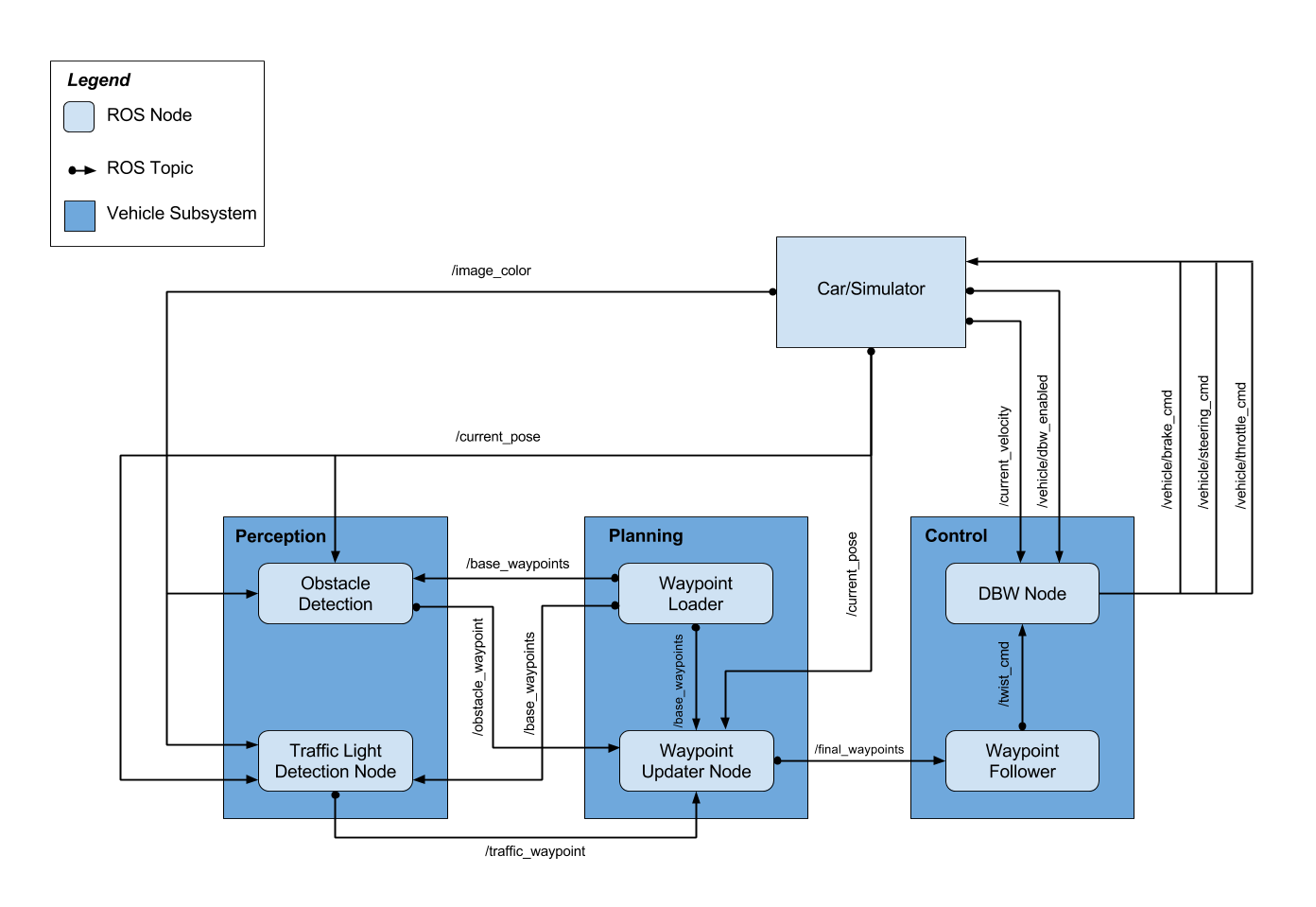

The following is a system architecture diagram showing the ROS nodes and topics used in the project.

The main responsibilities of the Waypoint Updater node are:

- It listens for the `/base_waypoints` topic sent by the Waypoint Loader node. This topic sends all the available waypoints once at the start and never updates.

- It stores all the waypoints and sends a chunk of waypoints at a time whenever it receives the current vehicle position from the simulator/car.

- It listens for the `/current_pose` topic to receive the car's current position, then decides how many future waypoints the car should follow and publishes them on `final_waypoints`.

- It also listens for the `/traffic_waypoint` and `/obstacle_waypoint` topics to receive information about a RED signal and about any obstacle on the road.

- When a RED light is detected by `tl_classifier`, the RED light waypoint index is sent to the waypoint updater, which starts setting velocities for future waypoints so that the car stops at the RED light waypoint.

- Once the light turns GREEN, `tl_classifier` informs the waypoint updater again, and the waypoint updater sends the next waypoints with the velocity required to get the car moving.
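The chunking and slow-down behaviour described in this list can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the team's code: waypoints are reduced to plain target speeds, and `LOOKAHEAD_WPS`, `next_waypoints`, and the linear speed ramp are hypothetical choices made for this example.

```python
LOOKAHEAD_WPS = 5  # hypothetical number of waypoints published per update


def next_waypoints(speeds, car_idx, stop_idx=None, lookahead=LOOKAHEAD_WPS):
    """Return target speeds for the next `lookahead` waypoints.

    speeds   -- target speed (m/s) of every base waypoint
    car_idx  -- index of the waypoint nearest to the car
    stop_idx -- index of the RED light stop waypoint, or None if no stop is needed
    """
    # Slice the chunk of waypoints ahead of the car (shorter near the end of the list).
    window = list(speeds[car_idx:car_idx + lookahead])
    if stop_idx is None:
        return window

    # Assumed linear ramp: speed falls in proportion to the waypoints left before the stop.
    span = max(stop_idx - car_idx, 1)
    for offset in range(len(window)):
        remaining = stop_idx - (car_idx + offset)
        if remaining <= 0:
            window[offset] = 0.0  # at or past the stop waypoint
        else:
            window[offset] = min(window[offset], speeds[car_idx] * remaining / span)
    return window
```

With a constant 10 m/s track, `next_waypoints([10.0] * 20, 3, stop_idx=7)` ramps the five published speeds down to zero at waypoint 7.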

Challenges in implementing the Waypoint Updater:

- The main challenge was efficiently finding the nearest waypoint to the car's position. This was resolved by computing the Euclidean distance between the car position reported by the simulator and each waypoint in the list using the method `euclidean_dist`, and breaking out of the loop once the distance has been increasing for 25 waypoints.

- The next challenge was handling stopping and starting the car on RED and GREEN:

  - When the traffic light turns RED, `self.is_stop_req` is set, so the waypoint updater starts setting velocities in advance, up to `self.decrement_factor` waypoints ahead. Once the car has stopped on RED, we also stop sending new lists of waypoints, so the car stays stopped.

  - When the light turns GREEN, the stop request `self.is_stop_req` is cleared, which makes the method `pose_cb` fill the waypoints with their velocities again, which in turn moves the car.

- The last challenge was handling the case where the car is at the end of the track and the list of waypoints is about to run out. This is treated as a special case: we set `self.short_of_points`, which then takes care of setting velocities to 0 near the end of the waypoints.
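The nearest-waypoint search with early exit can be sketched as below. The name `euclidean_dist` comes from the description above, but its signature, the `nearest_waypoint` helper, and the `(x, y)` tuple representation are assumptions made for this example. The sketch also interprets "increasing for 25 waypoints" as 25 consecutive waypoints that fail to improve on the best distance seen so far.

```python
import math


def euclidean_dist(a, b):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def nearest_waypoint(waypoints, car_xy, start=0, patience=25):
    """Return the index of the waypoint closest to car_xy, or None if there is none.

    The scan stops early once `patience` consecutive waypoints have failed
    to beat the best distance found so far.
    """
    best_idx, best_dist = None, float("inf")
    worse_streak = 0
    for idx in range(start, len(waypoints)):
        dist = euclidean_dist(waypoints[idx], car_xy)
        if dist < best_dist:
            best_idx, best_dist = idx, dist
            worse_streak = 0
        else:
            worse_streak += 1
            if worse_streak >= patience:
                break  # the car is clearly behind us now; stop scanning
    return best_idx
```

The early exit trades completeness for speed: on a track that loops back close to itself, a nearer waypoint far down the list would be missed, so passing the previous result as `start` keeps the search local.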

The drive-by-wire (DBW) node reads the current state and the next target velocity from the system and provides the best (throttle, brake, steering) tuple.

Publishers:

- `/vehicle/steering_cmd`: provides the steering angle for the next step.

- `/vehicle/throttle_cmd`: provides the throttle for the next step.

- `/vehicle/brake_cmd`: provides the brake for the next step.

Subscribers:

- `/twist_cmd`: the next angular and linear velocity of the car.

- `/current_velocity`: the current velocity of the car.

- `/dbw_enabled`: whether drive-by-wire is enabled. If `false`, a driver has taken control of the car and the controller does nothing.

Important features:

- The command frequency is currently set to 50 Hz for optimal performance.

- The steer value is calculated using the `YawController` class with the given `wheel_base`, `steer_ratio`, `min_speed`, `max_lat_accel`, and `max_steer_angle`.

- The throttle value is calculated using the `PID` class with `kp = 2.0`, `ki = 0.4`, and `kd = 0.1`.

- The brake value is calculated using `brake = vehicle_mass * wheel_radius * (current_v - target_v) / update_frequency`.
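To make the throttle and brake calculations concrete, here is a minimal sketch. The `PID` class below is a textbook implementation written for this example and may differ from the project's own `PID` class; the output limits, the `step` signature, and the `brake_value` helper are assumptions. The gains and the brake formula are taken from the list above.

```python
class PID:
    """Textbook PID controller with output clamping (illustrative only)."""

    def __init__(self, kp, ki, kd, mn=0.0, mx=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.min, self.max = mn, mx  # assumed throttle limits
        self.int_val = 0.0
        self.last_error = 0.0

    def reset(self):
        """Clear accumulated state, e.g. while drive-by-wire is disabled."""
        self.int_val = 0.0
        self.last_error = 0.0

    def step(self, error, sample_time):
        """Return the clamped control output for the current velocity error."""
        self.int_val += error * sample_time
        derivative = (error - self.last_error) / sample_time
        self.last_error = error
        val = self.kp * error + self.ki * self.int_val + self.kd * derivative
        return max(self.min, min(self.max, val))


def brake_value(vehicle_mass, wheel_radius, current_v, target_v, update_frequency):
    """Brake value computed exactly as in the formula quoted above."""
    return vehicle_mass * wheel_radius * (current_v - target_v) / update_frequency


# Usage with the gains listed above; 0.02 s is one period at 50 Hz.
throttle_pid = PID(kp=2.0, ki=0.4, kd=0.1)
throttle = throttle_pid.step(error=0.1, sample_time=0.02)
```

Resetting the controller whenever `/dbw_enabled` is `false` is a common way to keep the integral term from accumulating while a driver is in control.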

The `tl_detector` node is responsible for detecting and classifying traffic lights. If a traffic light is found and it is red, the node locates the closest waypoint to that light's stop line and publishes the index of that waypoint to the `/traffic_waypoint` topic.

The `tl_detector` node subscribes to the following topics:

- `/base_waypoints`: Waypoints for the whole track are published to this topic. This publication is a one-time operation.

- `/current_pose`: To receive the current position of the vehicle.

- `/image_color`: To receive camera images used to identify the traffic light state. Every time a new image is received, the traffic light node finds the location of the car with respect to the traffic light. If the car is close to a traffic light, the node calls the classifier to find the state of the light; if the light is red, it sends the waypoint and traffic light index to the Waypoint Updater node.

- `/vehicle/traffic_lights`: This topic provides the location of each traffic light in map space. In the simulator it also sends the current color state of all traffic lights, which helps build an accurate ground truth data source for the traffic light classifier.
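The decision the node makes on each image can be sketched as follows. This is an illustration under stated assumptions rather than the node's actual code: positions are plain `(x, y)` tuples, the state constants, `closest_index`, `red_light_waypoint`, and `max_gap` are hypothetical names, and returning `-1` when there is no red light ahead is an assumed convention.

```python
import math

RED, YELLOW, GREEN = 0, 1, 2  # hypothetical state constants for this sketch


def closest_index(waypoints, point):
    """Index of the waypoint nearest to `point` (brute-force search)."""
    return min(
        range(len(waypoints)),
        key=lambda i: math.hypot(waypoints[i][0] - point[0], waypoints[i][1] - point[1]),
    )


def red_light_waypoint(waypoints, car_xy, stop_lines, light_states, max_gap=100):
    """Return the stop-line waypoint index of the next light if it is RED, else -1.

    Only the nearest stop line ahead of the car, and within `max_gap`
    waypoints, is considered.
    """
    car_idx = closest_index(waypoints, car_xy)
    best = None  # (stop-line waypoint index, light state)
    for line_xy, state in zip(stop_lines, light_states):
        line_idx = closest_index(waypoints, line_xy)
        gap = line_idx - car_idx
        if 0 <= gap <= max_gap and (best is None or line_idx < best[0]):
            best = (line_idx, state)
    if best is not None and best[1] == RED:
        return best[0]
    return -1
```

In the real node the state would come from the classifier (or from `/vehicle/traffic_lights` in the simulator) instead of the `light_states` list used here.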

- Hand labeled Udacity Simulator data

- Hand labeled Udacity Traffic Light Detection Test Video data

| Split | Image count |
|---|---|
| Train | 11125 |
| Validation | 3178 |
| Test | 1589 |

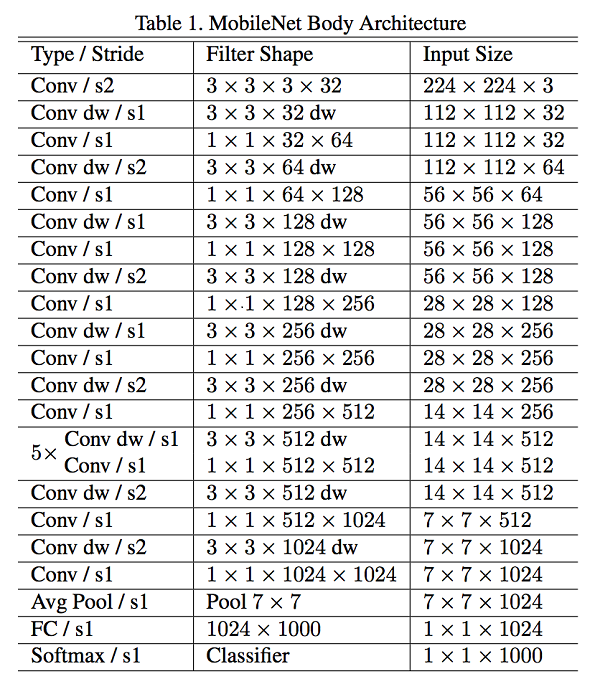

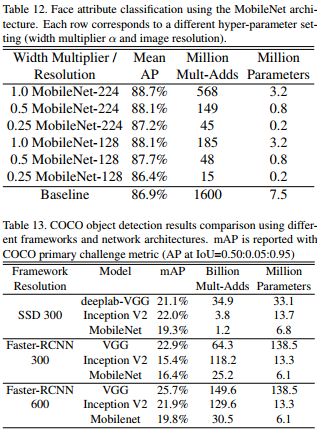

For the task of classifying traffic lights, we fed images recorded from the vehicle's camera through a convolutional neural network. Because the classifier would be running at high frequency, in real time, we needed an architecture with relatively few parameters. A fine-tuned MobileNet offered a good balance between efficiency and accuracy.

Because data for stop line locations was available, we decided not to use an object detection approach and instead classified entire images as containing simply a red, yellow, or green light. The MobileNet paper shows that an object detection approach such as Faster-RCNN or SSD raises the number of mult-adds from the order of millions to the order of billions. We felt this was an unnecessary use of resources for the task as defined.

Keras offers a friendly API for loading a MobileNet model pretrained on the ImageNet dataset. We used this, along with various image augmentation techniques, to train the model we would use for classification. We quickly found, however, that using Keras in the inference stage did not offer acceptable performance, so we converted the fine-tuned model to a TensorFlow protobuf file. We also applied various optimizations, including freezing the graph and running the TensorFlow `optimize_for_inference` script. With this, we were able to get the inference time below 100 ms per image on a CPU.

Acquiring training data was a major challenge. Bosch, helpfully, made their traffic light training data available. The Bosch data (realistically) contained many images with more than one light, making it unclear what the single label for each image should be.

Bosch labeled the data with bounding boxes rather than a single label per image. To get around this, we labeled each image with the label of its largest bounding box.
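The largest-box rule can be sketched in a few lines. The dictionary keys used here (`x_min`, `x_max`, `y_min`, `y_max`, `label`) and the `image_label` helper are assumptions for illustration; the actual annotation fields should be checked against the dataset files.

```python
def image_label(boxes):
    """Return the label of the largest bounding box, or None if there are no boxes.

    Each box is assumed to be a dict with pixel coordinates and a label.
    """
    if not boxes:
        return None

    def area(box):
        # Clamp at zero so malformed boxes never win with a negative product.
        width = max(box["x_max"] - box["x_min"], 0)
        height = max(box["y_max"] - box["y_min"], 0)
        return width * height

    return max(boxes, key=area)["label"]


# Usage: a small distant green light and a larger, nearer red light.
boxes = [
    {"x_min": 10, "x_max": 14, "y_min": 20, "y_max": 30, "label": "Green"},
    {"x_min": 100, "x_max": 112, "y_min": 40, "y_max": 70, "label": "Red"},
]
label = image_label(boxes)
```

Choosing the largest box favours the light nearest to the camera, which is usually the one the car has to obey.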

Performance was another challenge. During testing, we used a simulator running on a virtual machine image with dependencies similar to those used on the car. We noticed considerable latency between predictions. The natural assumption was that model inference was responsible, so we optimized the model and experimented with different MobileNet hyper-parameters. Profiling revealed, however, that the model was not the bottleneck and that other sections of the code could be optimized. Optimizing those sections solved the performance issues.
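As an illustration of the kind of profiling that exposes such bottlenecks, the sketch below wraps a single call with Python's standard `cProfile` module. The profiler the team actually used is not stated here, so this tool choice and the `profile_call` helper are assumptions.

```python
import cProfile
import io
import pstats


def profile_call(func, *args, **kwargs):
    """Run func under cProfile and return (result, text report).

    The report lists the ten entries with the highest cumulative time.
    """
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args, **kwargs)
    finally:
        profiler.disable()
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    stats.sort_stats("cumulative").print_stats(10)
    return result, stream.getvalue()


# Usage: profile any callable, for example an image callback.
result, report = profile_call(sum, range(1000))
```

Sorting by cumulative time shows at a glance whether the time goes to model inference or to the surrounding code.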