Table of Contents

Interested to explore Reddit data for trends, analytics, or just for the fun of it?

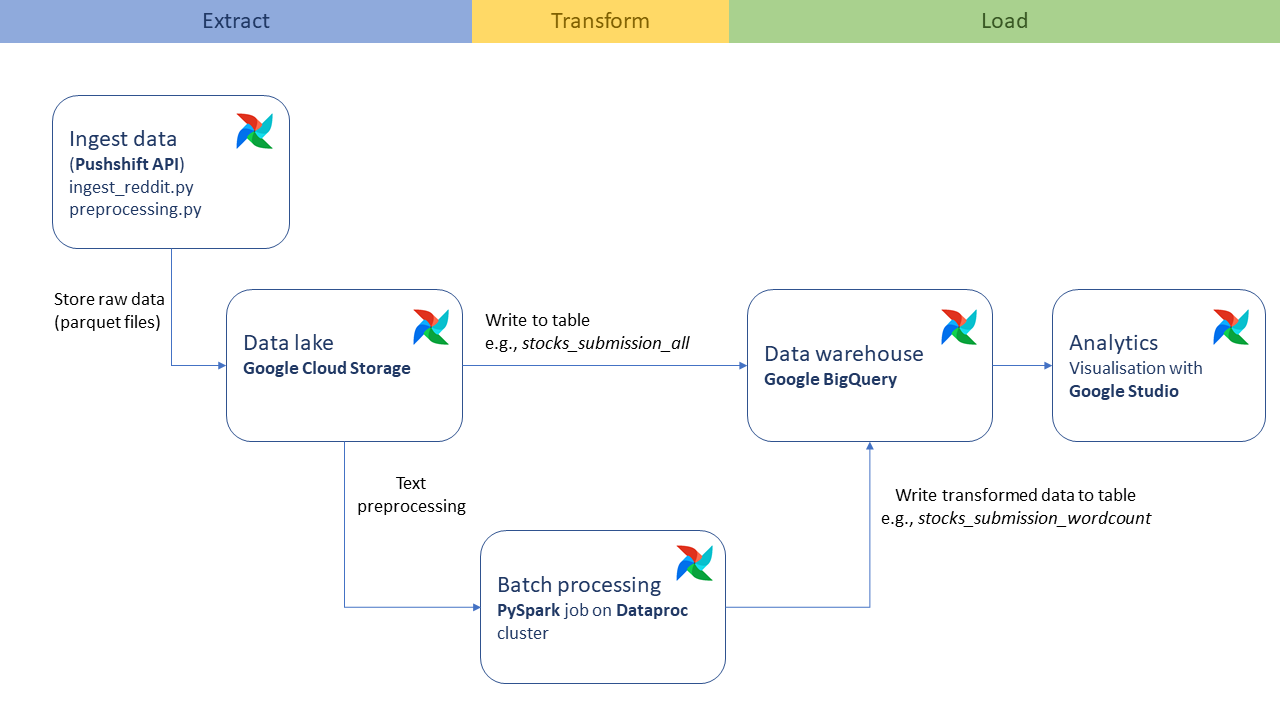

This project builds a data pipeline (from data ingestion to visualisation) that stores and preprocess data over any time period that you want.

- Data Ingestion: Pushshift API

- Infrastructure as Code: Terraform

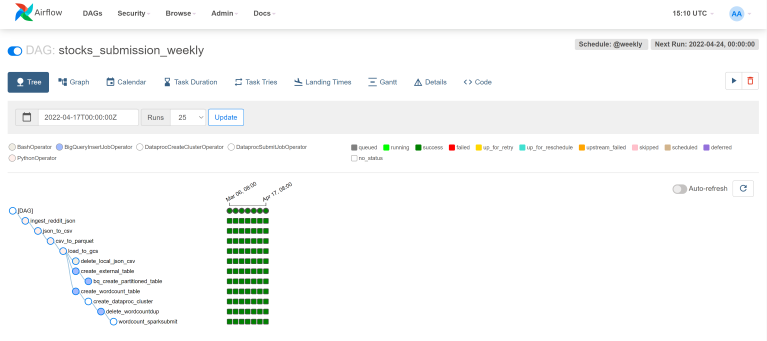

- Workflow Orchestration: Airflow

- Data Lake: Google Cloud Storage

- Data Warehouse: Google BigQuery

- Batch Processing: Spark on Dataproc

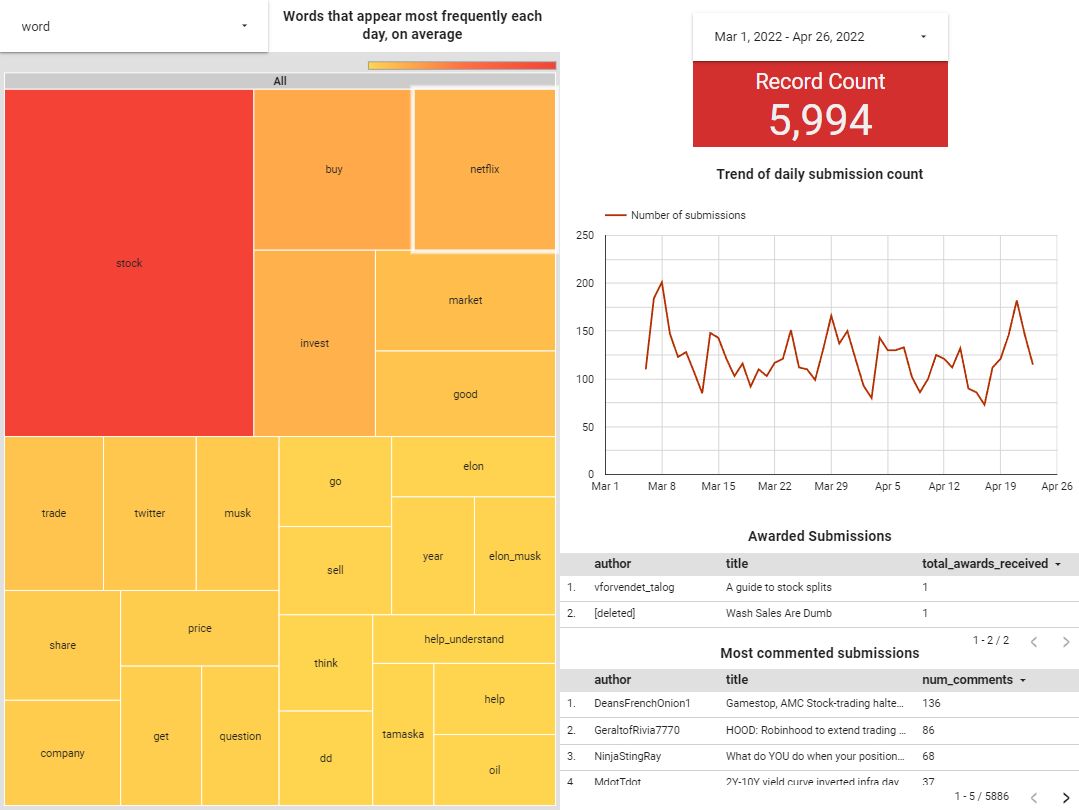

- Visualisation: Google Data Studio

Cloud infrastructure is set up with Terraform.

Cloud infrastructure is set up with Terraform.

Airflow is run on a local docker container. It orchestrates the following on a weekly schedule:

- Download data (JSON)

- Parquetize the data and store it in a bucket on Google Cloud Storage

- Write data to a table on BigQuery

- Create cluster on Dataproc and submit PySpark job to preprocess parquet files from Google Cloud Storage

- Write preprocessed data to a table on BigQuery

I created this project in WSL 2 (Windows Subsystem for Linux) on Windows 10.

To get a local copy up and running in the same environment, you'll need to:

- Install Python (3.8 and above)

- Install VSCode

- Install WSL 2 if you haven't

- Install Terraform for Linux

- Install Docker Desktop

- Install Google Cloud SDK for Ubuntu

- Have a Google Cloud Platform account

- Clone this repository locally

- Go to Google Cloud and create a new project. I set the id to 'de-r-stocks'.

- Go to IAM and create a Service Account with these roles:

- BigQuery Admin

- Storage Admin

- Storage Object Admin

- Viewer

- Download the Service Account credentials, rename it to

de-r-stocks.jsonand store it in$HOME/.google/credentials/. - On the Google console, enable the following APIs:

- IAM API

- IAM Service Account Credentials API

- Cloud Dataproc API

- Compute Engine API

I recommend executing the following on VSCode.

-

Using VSCode + WSL, open the project folder

de_r-stocks. -

Open

variables.tfand modify:variable "project"to your own project id (I think may not be necessary)variable "region"to your project regionvariable "credentials"to your credentials path

-

Open the VSCode terminal and change directory to the terraform folder, e.g.

cd terraform. -

Initialise Terraform:

terraform init -

Plan the infrastructure:

terraform plan -

Apply the changes:

terraform apply

If everything goes right, you now have a bucket on Google Cloud Storage called 'datalake_de-r-stocks' and a dataset on BigQuery called 'stocks_data'.

-

Using VSCode, open

docker-compose.yamland look for the#self-definedblock. Modify the variables to match your setup. -

Open

stocks_dag.py. You may need to change the following:zoneinCLUSTER_GENERATOR_CONFIG- Parameters in

default_args

- Using the terminal, change the directory to the airflow folder, e.g.

cd airflow. - Build the custom Airflow docker image:

docker-compose build - Initialise the Airflow configs:

docker-compose up airflow-init - Run Airflow:

docker-compose up

If the setup was done correctly, you will be able to access the Airflow interface by going to localhost:8080 on your browser.

Username and password are both airflow.

-

Go to

wordcount_by_date.pyand modify the string value ofBUCKETto your bucket's id. -

Store initialisation and PySpark scripts on your bucket. It is required to create the cluster to run our Spark job.

Run in the terminal (using the correct bucket name and region):

gsutil cp gs://goog-dataproc-initialization-actions-asia-southeast1/python/pip-install.sh gs://datalake_de-r-stocks/scriptsgsutil cp spark/wordcount_by_date.py gs://datalake_de-r-stocks/scripts

Now, you are ready to enable the DAG on Airflow and let it do its magic!

When you are done, just stop the airflow services by going to the airflow directory with terminal and execute docker-compose down.

Authorisation error while trying to create a Dataproc cluster from Airflow

- Go to Google Cloud Platform's IAM

- Under the Compute Engine default service account, add the roles 'Editor' and 'Dataproc Worker'.

- Refactor code for convenient change to

subredditandmode. - Use Terraform to set up tables on BigQuery instead of creating tables as part of the DAG.

- Unit tests

- Data quality checks

- CI/CD

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project

- Create your Feature Branch (

git checkout -b feature/AmazingFeature) - Commit your Changes (

git commit -m 'Add some AmazingFeature') - Push to the Branch (

git push origin feature/AmazingFeature) - Open a Pull Request

Distributed under the MIT License. See LICENSE.txt for more information.

Use this space to list resources you find helpful and would like to give credit to. I've included a few of my favorites to kick things off!