The open-source tool for Long Tail Data Management


Join our Slack to talk to the devs.

CodeFlare + Clowder makes it easy to run ML inference and similar workloads over your files, no matter how unique your data is.

At their heart, extractors run a Python function over every file in a dataset. They can run at the click of a button in the Clowder web UI or, like an event listener, every time a new file is uploaded.

These are performant, parallel-by-default, web-native Clowder extractors built on CodeFlare & Ray.io.

Check out our 📜 blog post on the incredible speed and developer experience of building on Ray.

Need to process a lot of files? This is great for ML inference and data pre-processing. These examples work out of the box or you can swap in your own model!

Huggingface Transformers example

Have daily data dumps? Extractors are perfect for event-driven actions. They will run code every time a file is uploaded. Uploads themselves can be automated via PyClowder for a totally hands-free data pipeline.

Benefit from the rich featureset & full extensibility of Clowder:

- Instead of files on your laptop, use Clowder to add collaborators & share datasets via the browser.

- Scientists like that we work with every filetype, and have rich extensibility for any job you need to run.

- Clone this repo inside your Clowder directory (or install Clowder if you haven't yet):

cd your/path/to/clowder

git clone git@github.com:clowder-framework/CodeFlare-Extractors.git

- Install CodeFlare-CLI

brew tap project-codeflare/codeflare-cli https://github.com/project-codeflare/codeflare-cli

brew install codeflare

On Linux, please install from source, as described in the CodeFlare-CLI repo. Windows has not been tested.

Invoke from inside your Clowder directory, so that we may respect Clowder's existing Docker Compose files.

- Launch the codeflare CLI to try our default extractors. This will launch Clowder. Do not use sudo.

cd your/path/to/clowder

codeflare ./CodeFlare-Extractors

- Now, as an example, upload a few images to a Clowder Dataset so we can try to classify them (into one of 1000 ImageNet classes).

- Finally, run the extractor! In the web app, click “Submit for extraction” (shown below).

Start from our heavily documented & commented template in ./template_for_custom_parallel_batch_extractors. Just fill in a single Python function and add dependencies to the requirements.txt (or the Dockerfile for arbitrarily complex projects)!

Here we walk through exactly what to modify. It's really as easy as filling in this function, and we'll run it in parallel over all the files in your dataset.

There are just two parts: an __init__() that runs once per thread to set up your ML model or other class variables, and a process_file() that runs, you guessed it, once per file.

import time

import ray


@ray.remote
class AsyncActor:
    def __init__(self):
        """
        ⭐️ Here, define global values and set up your models.
        This code runs once per thread (if you're running in parallel) when your code first launches.

        For example:
        ```
        from tensorflow.keras.applications.resnet50 import ResNet50
        self.model = ResNet50(weights='imagenet')
        ```
        """
        self.model = 'load your model here'  # example placeholder

    def process_file(self, filepath: str):
        """
        ⭐️ Here you define the action taken for each of your files.
        This function will be called once for each file in your dataset, when you manually click
        "extract this dataset".

        :param filepath: a single input filepath
        :return: Any dictionary you'd like. It will be attached to this file as Clowder Metadata.
        """
        print("In process file \n")
        start_time = time.monotonic()

        # 👉 ADD YOUR PARALLEL CODE HERE
        # For example (uncomment once self.model is a real model):
        # prediction = self.model.run(filepath)

        # Return your results. This MUST be a Python dictionary.
        metadata = {
            "Predicted class": 'happy cats!',  # for example
            # Elapsed time is "now minus start", so the value is positive.
            "Extractor runtime": f"{time.monotonic() - start_time:.2f} seconds",
        }
        assert isinstance(metadata, dict), f"The returned metadata must be a dict, but was of type {type(metadata)}"
        return metadata

To see debug steps in the Clowder web UI, which is often helpful, simply use logger.debug(f"My custom message"). The logger is already available in your environment.
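The split between once-per-worker setup and once-per-file processing can be tried out without Ray or Clowder. The following is only a minimal sketch that swaps in the standard library's ThreadPoolExecutor as a stand-in for Ray actors; `init_worker`, `run_over_dataset`, and the fake model are hypothetical names for illustration, not part of this repository.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

_local = threading.local()  # per-thread storage, playing the role of `self` in the actor
init_calls = []             # one entry per worker setup, kept only to show how often setup runs


def init_worker():
    """Runs once per worker thread: the place for expensive setup such as model loading."""
    # Hypothetical stand-in for a real model.
    _local.model = lambda path: f"label-for-{path}"
    init_calls.append(threading.get_ident())


def process_file(filepath: str) -> dict:
    """Runs once per file and returns a metadata dictionary."""
    return {"file": filepath, "Predicted class": _local.model(filepath)}


def run_over_dataset(filepaths, num_workers=2):
    """Process every file in parallel; results come back in input order."""
    with ThreadPoolExecutor(max_workers=num_workers, initializer=init_worker) as pool:
        return list(pool.map(process_file, filepaths))


results = run_over_dataset(["a.jpg", "b.jpg", "c.jpg"])
```

Setup runs at most once per worker, no matter how many files are processed, which is why model loading belongs in the setup step rather than in process_file().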

(Coming soon) By default, the code will detect the number of available CPU threads and spawn one worker per thread. Likely the only time you'll want to use fewer is if you anticipate out-of-memory errors.
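Since this feature is marked as coming soon, the snippet below is only a sketch of how such a default could be computed, not the project's implementation. `choose_num_workers` and its `requested` parameter are hypothetical; `os.cpu_count()` is the standard-library call that reports logical CPUs.

```python
import os


def choose_num_workers(requested=None):
    """Default to one worker per logical CPU; allow a smaller, explicit override."""
    available = os.cpu_count() or 1  # cpu_count() may return None on some platforms
    if requested is None:
        return available
    # Clamp the override to the range [1, available].
    return max(1, min(requested, available))
```

Passing a smaller number, for example `choose_num_workers(2)`, is the knob you would reach for if each worker loads a large model and memory is tight.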

An extractor's trigger events are defined in its extractor_info.json file, which also defines how the extractor appears inside Clowder. You can also change the extractor's name and add authors there.

"dataset": [
  "file-added"
]

"dataset": [
  "*"
]

This won't run on datasets, it is for cases where you want to manually click on a file, then run just that one file through an extractor.

"file": [
  "*"
]

Here's a list of common filetypes (i.e. MIME types) that we support: https://developer.mozilla.org/en-US/docs/Web/HTTP/Basics_of_HTTP/MIME_types/Common_types.
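If you are unsure which MIME type string corresponds to your files, Python's standard mimetypes module can look it up from a filename. This is a small sketch; `mime_triggers_for` is a hypothetical helper, and the printed fragment only mirrors the "dataset" key shown above rather than a complete extractor_info.json.

```python
import json
import mimetypes


def mime_triggers_for(filenames):
    """Return the sorted, de-duplicated MIME types guessed from the given filenames."""
    types = set()
    for name in filenames:
        guessed, _encoding = mimetypes.guess_type(name)
        if guessed is not None:  # unknown extensions are skipped
            types.add(guessed)
    return sorted(types)


triggers = mime_triggers_for(["scan.jpg", "plot.png", "notes.unknownext"])
# Print a fragment in the same shape as the trigger lists in extractor_info.json.
print(json.dumps({"dataset": triggers}, indent=2))
```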

You can list these MIME type strings in an extractor's configuration, and it will then run only on files of those types. For example:

"dataset": [
  "image/jpeg",
  "image/png",
  "image/tiff"
]

This repository contains scripts to handle all regions and sites for Landsat data processing.

- Obtain the Google Cloud keys: Ensure you have the Google Cloud keys in the following format:

// landsattrend-pipeline/project-keys/uiuc-ncsa-permafrost-44d44c10c9c7.json
{
  "type": "service_account",
  "project_id": "",
  "private_key_id": "",
  "private_key": "-----BEGIN PRIVATE KEY----- ...",
  "client_email": "",
  "client_id": "",
  "auth_uri": "",
  "token_uri": "",
  "auth_provider_x509_cert_url": "",
  "client_x509_cert_url": ""
}

- Export to Google Cloud: Use the export_to_cloud.py script to export a specific region to Google Cloud. The region can be one of the following: ALASKA, CANADA, EURASIA1, EURASIA2, EURASIA3, TEST.

python export_to_cloud.py $region

Alternatively, use the export_all_to_cloud.py script to export all regions to Google Cloud.

python export_all_to_cloud.py

Note: Future improvements will include adding parameters for start and end year.

- Download from Google Cloud to DELTA or other HPC: Use the cloud_download_all_regions.py script to download all data from Google Cloud to a specified directory.

python cloud_download_all_regions.py $download_directory

Alternatively, use the cloud_download_region.py script to download data for a specific region.

python cloud_download_region.py $region $download_directory

- Generate SLURM Files: Use the generate_slurm_for_all_sites.py script to generate the SLURM file for all sites.

python generate_slurm_for_all_sites.py

- Upload Input and Output: Use the upload_data.py script to upload all the input data.

python upload_data.py $url $key $landsat_space_id $data_dir

Use the upload_process.py script to upload all the results. This script assumes a specific structure of the folders under process.

python upload_process.py $url $key $landsat_space_id $process_dir

Note: Future improvements will include making the script more generic to handle different folder structures.
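The steps above can be strung together for a single region. The following is a rough sketch under stated assumptions: `missing_key_fields` and `pipeline_commands` are hypothetical helpers, the script names and argument order are taken from the commands listed above, and submitting the generated SLURM jobs is left out because it is not described here.

```python
import sys

REGIONS = ("ALASKA", "CANADA", "EURASIA1", "EURASIA2", "EURASIA3", "TEST")

# Field names copied from the key format shown in the first step.
REQUIRED_KEY_FIELDS = (
    "type", "project_id", "private_key_id", "private_key", "client_email",
    "client_id", "auth_uri", "token_uri",
    "auth_provider_x509_cert_url", "client_x509_cert_url",
)


def missing_key_fields(key_data: dict) -> list:
    """Return the required key fields that are absent or empty."""
    return [field for field in REQUIRED_KEY_FIELDS if not key_data.get(field)]


def pipeline_commands(region, download_dir, url, key, space_id, data_dir, process_dir):
    """Return the per-region pipeline as an ordered list of argv lists."""
    if region not in REGIONS:
        raise ValueError(f"Unknown region {region!r}; expected one of {REGIONS}")
    py = sys.executable  # run each script with the current interpreter
    return [
        [py, "export_to_cloud.py", region],
        [py, "cloud_download_region.py", region, download_dir],
        [py, "generate_slurm_for_all_sites.py"],
        [py, "upload_data.py", url, key, space_id, data_dir],
        [py, "upload_process.py", url, key, space_id, process_dir],
    ]


if __name__ == "__main__":
    # Dry run with placeholder values: print the commands instead of executing them.
    plan = pipeline_commands(
        "TEST", "./downloads", "https://clowder.example.org",
        "YOUR_API_KEY", "YOUR_SPACE_ID", "./data", "./process",
    )
    for cmd in plan:
        print(" ".join(cmd))
```

To actually execute the plan, each entry could be passed to `subprocess.run(cmd, check=True)` so that a failing step stops the sequence.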

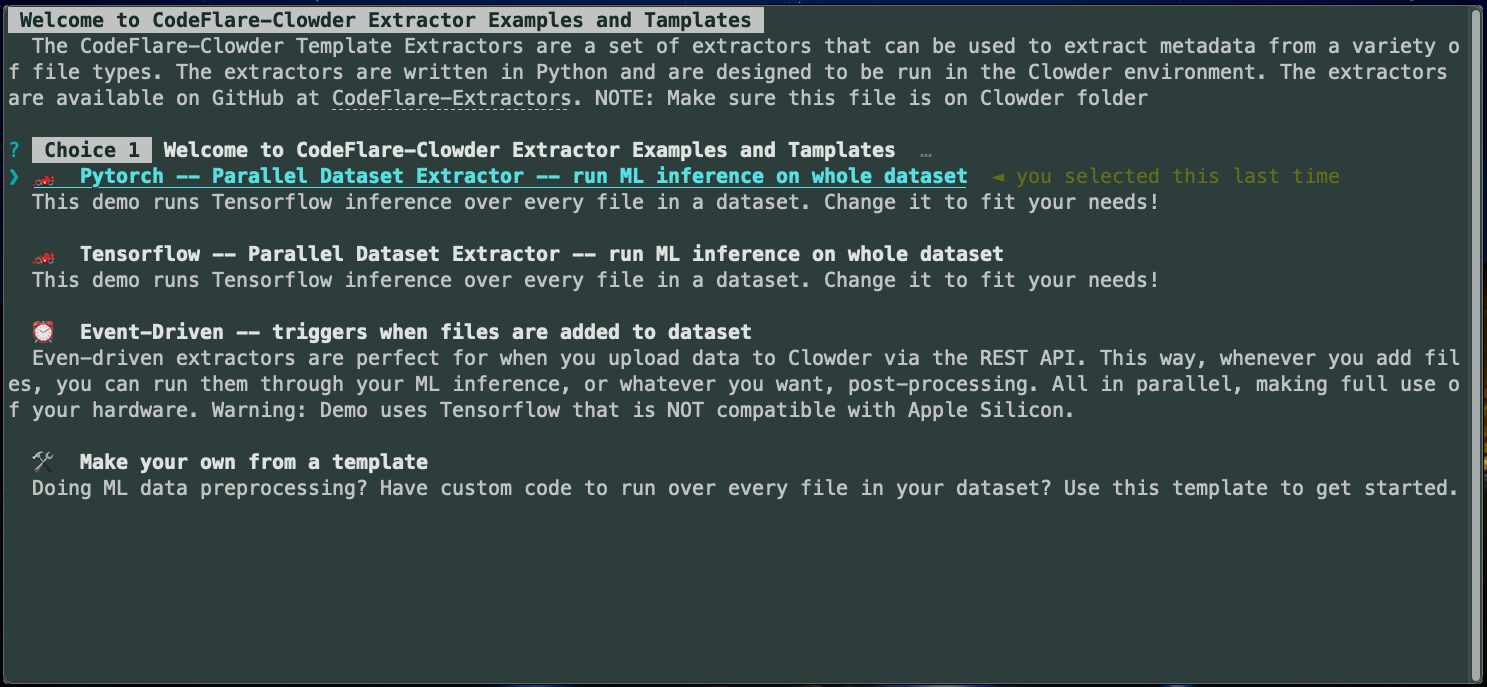

What: Running the CodeFlare-CLI builds the selected extractor's Dockerfile and adds it to Clowder’s docker-compose.extractors.yml file. Immediately after, we start Clowder as normal, e.g. docker-compose -f docker-compose.yml -f docker-compose.extractors.yml up -d. All CodeFlare code lives in index.md because index files are run by default, but any CodeFlare-CLI-compatible .md file can be run by calling codeflare my_file.md to launch new CLI programs.

How: It uses MDWizzard, an open source project from IBM that layers a fairly involved customization on top of normal markdown syntax to enable interactive CLIs.