Omega_Gomoku_AI is a Gomoku game AI based on Monte Carlo Tree Search. It's written in Python. The neural network part uses the Keras framework.

Omega_Gomoku_AI is not limited to Gomoku: you can also customize the board size and play any n-in-a-row game. Tic-tac-toe, for example, is a 3-in-a-row game played on a 3 * 3 board.
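Because the winning length is just a parameter, deciding the result only requires counting consecutive stones through the last move in four directions. Below is a minimal Python sketch, assuming a board stored as a list of rows with 0 for empty and 1/2 for the two players. `has_n_in_a_row` is a hypothetical helper, not necessarily how Board.py does it.

```python
DIRECTIONS = ((0, 1), (1, 0), (1, 1), (1, -1))  # row, column, two diagonals


def has_n_in_a_row(board, row, col, n):
    """Return True if the stone at (row, col) is part of a line of n or more."""
    player = board[row][col]
    if player == 0:  # empty cell: nothing to check
        return False
    rows, cols = len(board), len(board[0])
    for dr, dc in DIRECTIONS:
        count = 1  # the stone at (row, col) itself
        for sign in (1, -1):  # walk both ways along the direction
            r, c = row + sign * dr, col + sign * dc
            while 0 <= r < rows and 0 <= c < cols and board[r][c] == player:
                count += 1
                r += sign * dr
                c += sign * dc
        if count >= n:
            return True
    return False


# Tic-tac-toe (3-in-a-row on a 3 * 3 board): player 1 owns the main diagonal.
tic_tac_toe = [[1, 0, 0],
               [0, 1, 0],
               [0, 0, 1]]
print(has_n_in_a_row(tic_tac_toe, 1, 1, 3))  # True
```

The same function covers the default 5-in-a-row game on an 8 * 8 board by passing `n=5`. Checking only around the last move keeps the cost proportional to `n` rather than to the board size.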

This repo provides a visual game interface, an easy-to-use training process, and easy-to-understand code.

Enjoy yourself ~~~

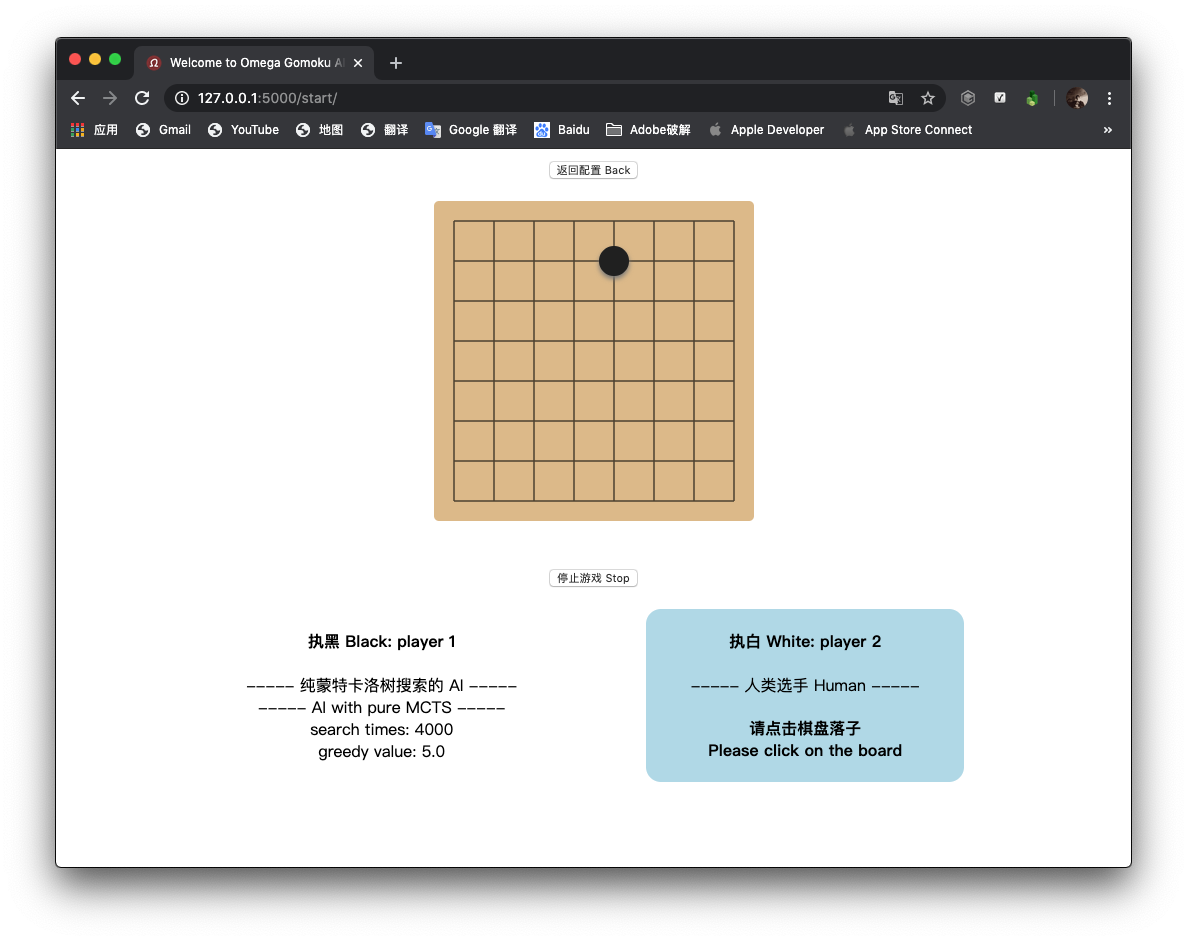

Running the visual game through a web server is now available!

Version 1.3 is available; it adds the visual game, among other features.

✅ Monte Carlo tree -> ✅ Train models -> ✅ Visual game interface -> Custom battle.

For the algorithm, Omega_Gomoku_AI follows the article "Monte Carlo Tree Search – beginners guide" by int8.
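MCTS repeats four phases: selection (descend the tree using UCT), expansion (add one untried move), simulation (a random playout to the end of the game), and backpropagation (update visit and win counts along the path). The following is a minimal, self-contained Python sketch of that loop, assuming a toy Nim game (take 1 to 3 stones; whoever takes the last stone wins) as a stand-in for the Gomoku board. `NimState`, `Node`, and `mcts` are hypothetical names, not the repo's MonteCarloTreeSearch or MonteCarloTreeNode classes.

```python
import math
import random


class NimState:
    """Hypothetical toy game: remove 1-3 stones; taking the last stone wins."""

    def __init__(self, stones, player=1):
        self.stones = stones
        self.player = player  # player to move: 1 or -1

    def legal_moves(self):
        return [n for n in (1, 2, 3) if n <= self.stones]

    def play(self, move):
        return NimState(self.stones - move, -self.player)

    def winner(self):
        # The player who just moved took the last stone.
        return -self.player if self.stones == 0 else None


class Node:
    def __init__(self, state, parent=None, move=None):
        self.state, self.parent, self.move = state, parent, move
        self.children = []
        self.untried = state.legal_moves()
        self.visits = 0
        self.wins = 0.0  # wins for the player who made the move into this node

    def uct_child(self, c):
        # UCT = win rate + c * sqrt(ln(parent visits) / child visits)
        return max(self.children, key=lambda ch: ch.wins / ch.visits
                   + c * math.sqrt(math.log(self.visits) / ch.visits))


def mcts(root_state, iterations=2000, c=1.4, rng=random):
    root = Node(root_state)
    for _ in range(iterations):
        node = root
        # 1. Selection: follow UCT while the node is fully expanded.
        while not node.untried and node.children:
            node = node.uct_child(c)
        # 2. Expansion: add one child for an untried move.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.state.play(move), parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout until someone wins.
        state = node.state
        while state.winner() is None:
            state = state.play(rng.choice(state.legal_moves()))
        winner = state.winner()
        # 4. Backpropagation: credit each node from its mover's viewpoint.
        while node is not None:
            node.visits += 1
            if winner == -node.state.player:
                node.wins += 1
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move  # most visited


print(mcts(NimState(5), rng=random.Random(0)))  # taking 1 leaves a lost position of 4
```

Swapping in a Gomoku board state with the same three methods (`legal_moves`, `play`, `winner`) would run the same loop, just with a far larger tree to search.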

Omega_Gomoku_AI is inspired by AlphaZero_Gomoku and tictactoe_mcts.

- start.py - Start the game: human vs AI, human vs human, or AI vs AI.

- start_from_web.py - Start the web server and the visual game.

- train.py - The training script, which can train with different networks and saved models.

- configure.py - Configure the game, including the board size, n-in-a-row, and the number of Monte Carlo tree searches.

- game.conf - Configuration file.

- Function.py - Miscellaneous helper functions.

- console_select.py - Console input helper functions.

- Game/
  - Game.py - A script to start the game.
  - Board.py - The game board, including board rendering, move execution, and result determination.
  - BoardRenderer.py - An abstract class named BoardRenderer, implemented by ConsoleRenderer and VisualRenderer.
  - ConsoleRenderer.py - Implements class BoardRenderer.
  - VisualRenderer.py - Implements class BoardRenderer.

- Player/
  - Player.py - An abstract class named Player, implemented by Human and the AIs.
  - Human.py - Human player, implements class Player.
  - AI_MCTS.py - AI player with pure MCTS, implements classes Player and MonteCarloTreeSearch.
  - AI_MCTS_Net.py - AI player with MCTS and a neural network, implements classes Player and MonteCarloTreeSearch.

- AI/ - AIs.
  - MonteCarloTreeSearch.py - An abstract class named MonteCarloTreeSearch, implemented by all AIs that use MCTS.
  - MonteCarloTreeNode.py - Base class for nodes in the Monte Carlo tree.

- Network/ - Networks.
  - Network.py - An abstract class named Network, implemented by all networks.
  - PolicyValueNet_from_junxiaosong.py - A policy-value network written by @junxiaosong.
  - PolicyValueNet_AlphaZero.py - A policy-value network from the AlphaZero paper.

- Train/
  - train_with_net_junxiaosong.py - Training script, called by 'train.py'.

- Web/
  - web_configure.py - The '/configure' Flask pages.
  - web_game_thread.py - The game script, using multithreading.
  - web_select.py - A class named web_select, used for configuration through the web.
  - web_start.py - The '/start' Flask pages and the WebSocket communication code.
  - static/
  - templates/ - HTML templates.

- Model/ - Models. Training data will be saved here.
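Several entries above follow one pattern: an abstract base class with interchangeable implementations (Player for Human and the AIs; BoardRenderer for ConsoleRenderer and VisualRenderer). Here is a minimal Python sketch of that pattern using the standard `abc` module. The method names `get_action` and `render`, and the classes `RandomAI` and `ScriptedHuman`, are hypothetical and not the repo's actual signatures.

```python
import random
from abc import ABC, abstractmethod


class Player(ABC):
    """Hypothetical abstract player: subclasses decide how a move is chosen."""

    def __init__(self, name):
        self.name = name

    @abstractmethod
    def get_action(self, legal_moves):
        """Return one move from legal_moves."""


class RandomAI(Player):
    """Stand-in for an AI player; a real one would run MCTS here."""

    def get_action(self, legal_moves):
        return random.choice(legal_moves)


class ScriptedHuman(Player):
    """Stand-in for Human; a real one would read console or web input."""

    def __init__(self, name, moves):
        super().__init__(name)
        self.moves = list(moves)

    def get_action(self, legal_moves):
        move = self.moves.pop(0)
        if move not in legal_moves:
            raise ValueError(f"illegal move: {move}")
        return move


class BoardRenderer(ABC):
    """Hypothetical abstract renderer, mirroring the role of BoardRenderer.py."""

    @abstractmethod
    def render(self, board):
        """Draw a board given as a list of rows (0 = empty, 1/2 = players)."""


class ConsoleRenderer(BoardRenderer):
    SYMBOLS = {0: ".", 1: "X", 2: "O"}

    def render(self, board):
        text = "\n".join(" ".join(self.SYMBOLS[cell] for cell in row) for row in board)
        print(text)
        return text
```

The game loop only talks to `Player` and `BoardRenderer`, so human vs AI, human vs human, and AI vs AI differ only in which subclasses are passed in. Instantiating `Player` directly raises `TypeError`, because `get_action` is abstract.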

There are two ways to try Omega_Gomoku_AI yourself: with Docker or with a local installation.

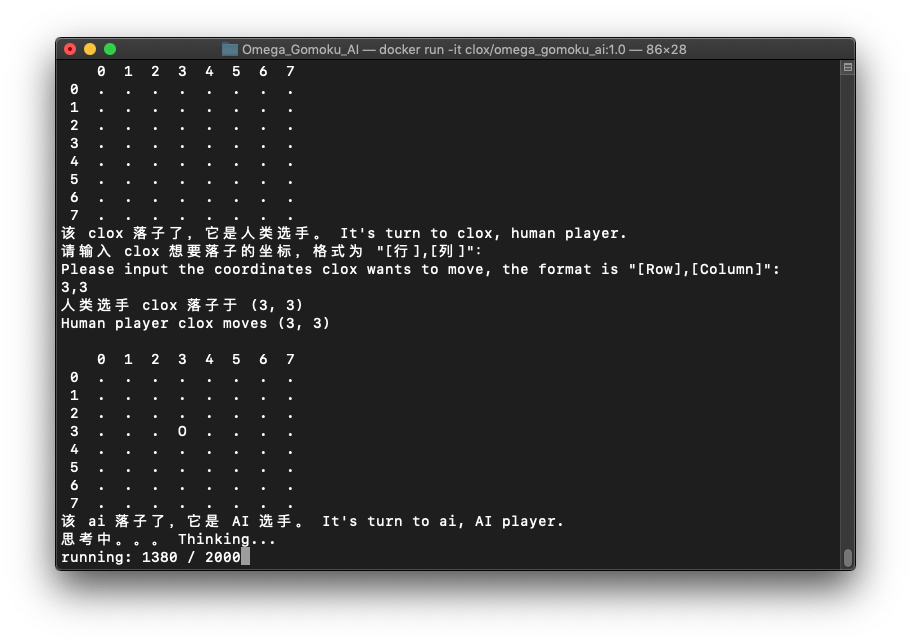

If you have Docker installed, run the following command:

$ docker pull clox/omega_gomoku_ai:latest

Then, just run this:

$ docker run -it clox/omega_gomoku_ai:latest

Adding --rm after -it automatically removes the container when it exits.

That's all! The above is the simplest usage.

To run the visual game through the web server inside Docker, remember to enable port mapping:

$ docker run -it -p 5000:5000 clox/omega_gomoku_ai:latest

The '5000' before the colon is the port on your machine; you can change it to another value instead of 5000.

Note that to save the training data on your own machine, you need to add -v to mount a directory:

$ docker run -it -v [Path]:/home/Model clox/omega_gomoku_ai:latest

Replace [Path] with the local directory where models should be saved or loaded. It must be an absolute path; relative paths cannot be used here.

That's all; it's really that simple!
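If you launch the container from a script, the port-mapping and mount rules above can be captured in a small helper. This is a hypothetical Python sketch (`docker_run_args` is not part of the repo). It uses only the flags shown above, and it resolves the model directory with `os.path.abspath` because relative paths cannot be used. It assumes the web server listens on port 5000 inside the container, as the `-p 5000:5000` example implies.

```python
import os
import shlex

IMAGE = "clox/omega_gomoku_ai:latest"


def docker_run_args(model_dir, host_port=None, remove=True):
    """Build a `docker run` argument list (hypothetical helper)."""
    args = ["docker", "run", "-it"]
    if remove:
        args.append("--rm")  # remove the container when it exits
    if host_port is not None:
        # -p HOST:CONTAINER; assumes the web server uses port 5000 in the container
        args += ["-p", f"{host_port}:5000"]
    # -v needs an absolute host path, so resolve relative paths first
    args += ["-v", f"{os.path.abspath(model_dir)}:/home/Model"]
    args.append(IMAGE)
    return args


# Print the full command for a local ./models directory and host port 8080.
print(shlex.join(docker_run_args("./models", host_port=8080)))
```

The returned list could be passed to `subprocess.run(args)` to start the container. Keeping it as a list avoids shell-quoting problems with paths that contain spaces. `shlex.join` requires Python 3.8 or later.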

PS:

- Homepage of this Docker image: clox/omega_gomoku_ai.

- The compressed size of the Docker image is about 428 MB.

- The Docker image is based on tensorflow/tensorflow:2.0.0-py3.

- If you use Docker, you do not need to clone this repo to your local machine.

- Docker Hub image cache in China.

Configuring...

Running...

Make sure you have a Keras backend (TensorFlow) environment installed on your computer. After cloning this repo, run the following command:

$ pip install -r requirements.txt

If necessary, the command 'pip' should be changed to 'pip3'.

On macOS/Linux, just run:

$ bash game.sh

That's enough. On a PC, execute the file game.sh.

Alternatively, run the scripts one at a time. To configure the game, run:

$ python configure.py

To start the game, run:

$ python start.py

To train a model, run:

$ python train.py

To start the visual game using the web server, run:

$ python start_from_web.py

Either installation method is fairly simple.

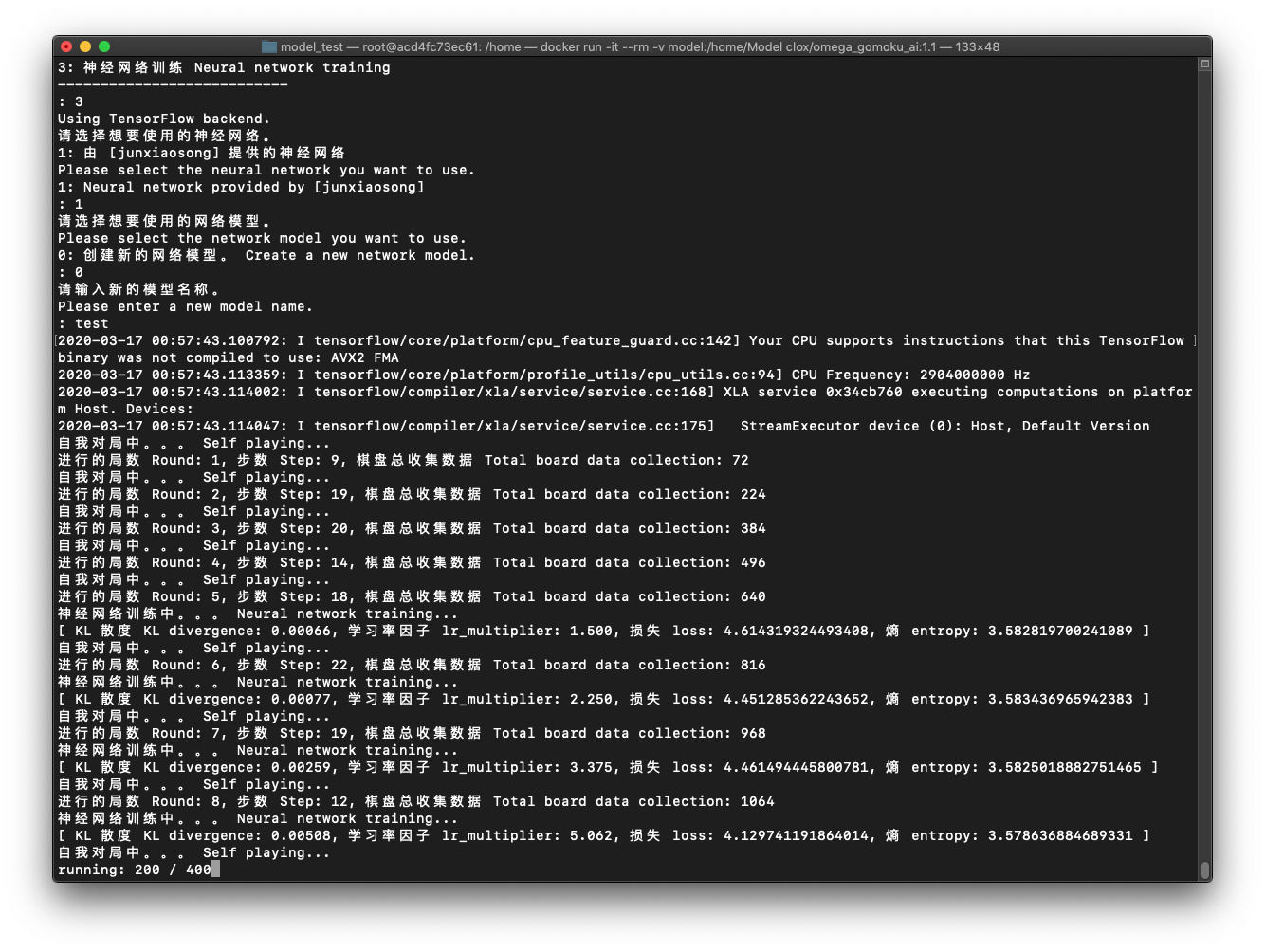

If you want to train on a cloud platform or any other environment that needs to be quick and convenient and does not allow user input, you can run train.py with parameters:

$ python train.py 1 my_model 1000

The first parameter, '1', selects the 1st neural network; it must be the serial number of a neural network.

The second parameter, 'my_model', is the name of the model to train. If the model exists, training automatically continues from its 'latest.h5' record; if it does not exist, it is created automatically.

The third parameter, '1000', is the number of self-play games. It must be an integer; a value less than or equal to 0 means training runs indefinitely.
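The three positional parameters follow simple rules, so they can be validated in a few lines. This hypothetical Python sketch (`parse_train_args` is not the actual code in train.py) enforces them: an integer network serial number, a model name, and an integer self-play count where a value less than or equal to 0 means unlimited.

```python
def parse_train_args(argv):
    """Parse NET_INDEX MODEL_NAME SELF_PLAY_GAMES (hypothetical sketch)."""
    if len(argv) != 3:
        raise ValueError("usage: python train.py NET_INDEX MODEL_NAME SELF_PLAY_GAMES")
    net_index = int(argv[0])  # serial number of the network, e.g. 1 for the 1st
    if net_index < 1:
        raise ValueError("the network serial number starts at 1")
    model_name = argv[1]      # e.g. "my_model"; resumed if it exists, else created
    games = int(argv[2])      # must be an integer; int() raises ValueError otherwise
    # <= 0 means "train indefinitely"; represent that as None
    return net_index, model_name, (games if games > 0 else None)


print(parse_train_args(["1", "my_model", "1000"]))  # (1, 'my_model', 1000)
```

In a real script, the list would come from `sys.argv[1:]`. Returning `None` for the unlimited case lets a training loop test `games is None or played < games`.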

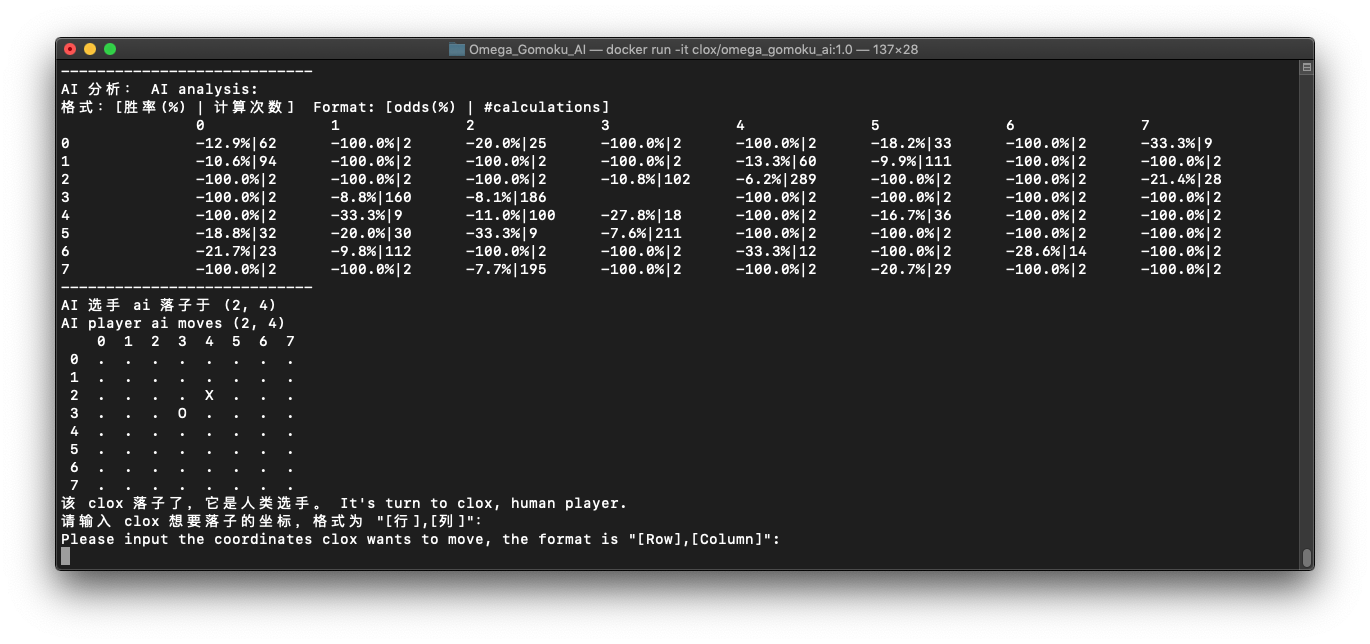

By default, the game is 5-in-a-row on an 8 * 8 board, and the AI with pure MCTS performs 2,000 searches per turn.

The AI with pure MCTS sometimes makes strange moves, because 2,000 searches are in fact not enough for a 5-in-a-row game. You can instead shrink the board to 3 * 3 or 6 * 6 and play a 3-in-a-row or 4-in-a-row game, such as tic-tac-toe.

Increasing the number of searches beyond 2,000 is also a good idea, but the AI will then take longer to think.
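The settings discussed above (board size, winning length, searches per turn) can be grouped and sanity-checked together. This is a hypothetical Python sketch: the field names are illustrative and do not reflect the actual format of game.conf. The defaults come from this README.

```python
from dataclasses import dataclass


@dataclass
class GameSettings:
    """Hypothetical container for the options that configure.py asks about."""
    board_size: int = 8        # default 8 * 8 board
    n_in_a_row: int = 5        # default 5-in-a-row
    search_times: int = 2000   # pure-MCTS searches per turn

    def __post_init__(self):
        # A line longer than the board could never be completed.
        if not 1 <= self.n_in_a_row <= self.board_size:
            raise ValueError("n_in_a_row must be between 1 and board_size")
        if self.search_times < 1:
            raise ValueError("search_times must be positive")


DEFAULT = GameSettings()
TIC_TAC_TOE = GameSettings(board_size=3, n_in_a_row=3)
SMALL_4_IN_A_ROW = GameSettings(board_size=6, n_in_a_row=4, search_times=10_000)
```

Smaller boards and shorter lines shrink the search space, which is why the same search budget plays noticeably better on them.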

You can now also choose the greedy value, which lets you adjust how much the Monte Carlo tree search explores.
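In UCT, an exploration constant c weighs a visit-count bonus against the observed win rate. Assuming the greedy value plays a role similar to c (this README does not say exactly how it enters the formula), the sketch below shows how the choice flips. With a small c, the search exploits the child with the better win rate. With a large c, it explores the rarely visited child. The statistics are made up for illustration.

```python
import math


def uct_score(wins, visits, parent_visits, c):
    # exploitation (win rate) + c * exploration bonus
    return wins / visits + c * math.sqrt(math.log(parent_visits) / visits)


# Hypothetical statistics for two children of a node visited 110 times:
# "a" won 60 of 100 playouts, "b" only 3 of 10.
CHILDREN = {"a": (60, 100), "b": (3, 10)}
PARENT_VISITS = 110


def pick(c):
    return max(CHILDREN, key=lambda move: uct_score(*CHILDREN[move], PARENT_VISITS, c))


print(pick(0.1))  # a: small c, mostly greedy
print(pick(2.0))  # b: large c, favors the less-explored child
```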

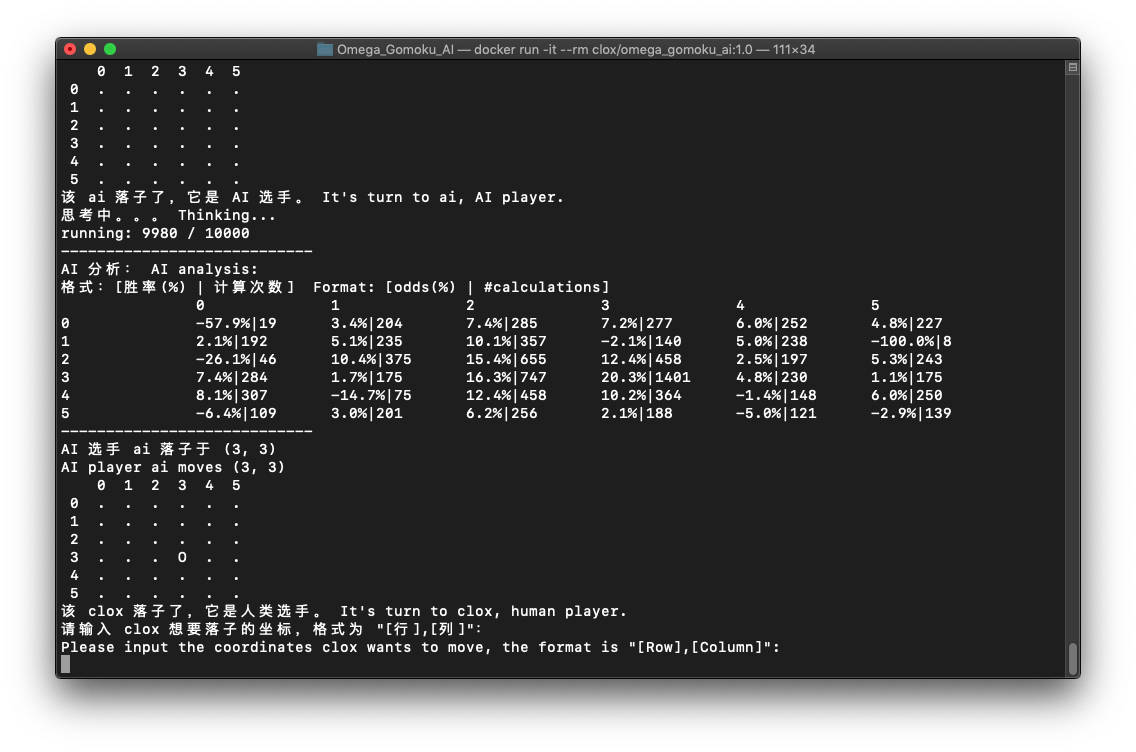

For example, I set the number of Monte Carlo tree searches to 10,000 and played a 4-in-a-row game on a 6 * 6 board. As the AI analysis shows, the Monte Carlo tree traversed almost the entire board.

Well, an AI with MCTS plus a neural network can solve this problem.
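AlphaZero, and AlphaZero_Gomoku (which inspired this repo), combine the two with PUCT selection. The policy network supplies a prior P for each move, so the search concentrates on promising moves instead of spreading over the whole board. Whether AI_MCTS_Net uses exactly this formula is an assumption. `puct_select`, the default `c_puct=5.0`, and the statistics below are hypothetical.

```python
import math


def puct_select(children, c_puct=5.0):
    """Pick a move by Q + U, where U = c_puct * P * sqrt(N_parent) / (1 + N).

    children maps move -> (prior P from the policy network,
                           visit count N, total value W).
    """
    parent_visits = sum(n for _, n, _ in children.values())

    def score(move):
        prior, visits, total_value = children[move]
        q = total_value / visits if visits > 0 else 0.0                # mean value so far
        u = c_puct * prior * math.sqrt(parent_visits) / (1 + visits)  # prior-weighted bonus
        return q + u

    return max(children, key=score)


stats = {"a": (0.8, 2, 1.0), "b": (0.1, 10, 6.0), "c": (0.1, 0, 0.0)}
print(puct_select(stats))              # a: the high prior dominates
print(puct_select(stats, c_puct=0.1))  # b: falls back to the best mean value
```

Because the bonus is scaled by the prior, moves the network considers weak receive few simulations. This is how a learned policy can replace the near-exhaustive traversal seen with pure MCTS.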

Training is available now.

After starting the web server, open your browser and go to http://127.0.0.1:5000 or http://0.0.0.0:5000 to enjoy the game.

The web interface has been tested on Chrome and Safari.

Omega_Gomoku_AI is licensed under the MIT License. See LICENSE for details.