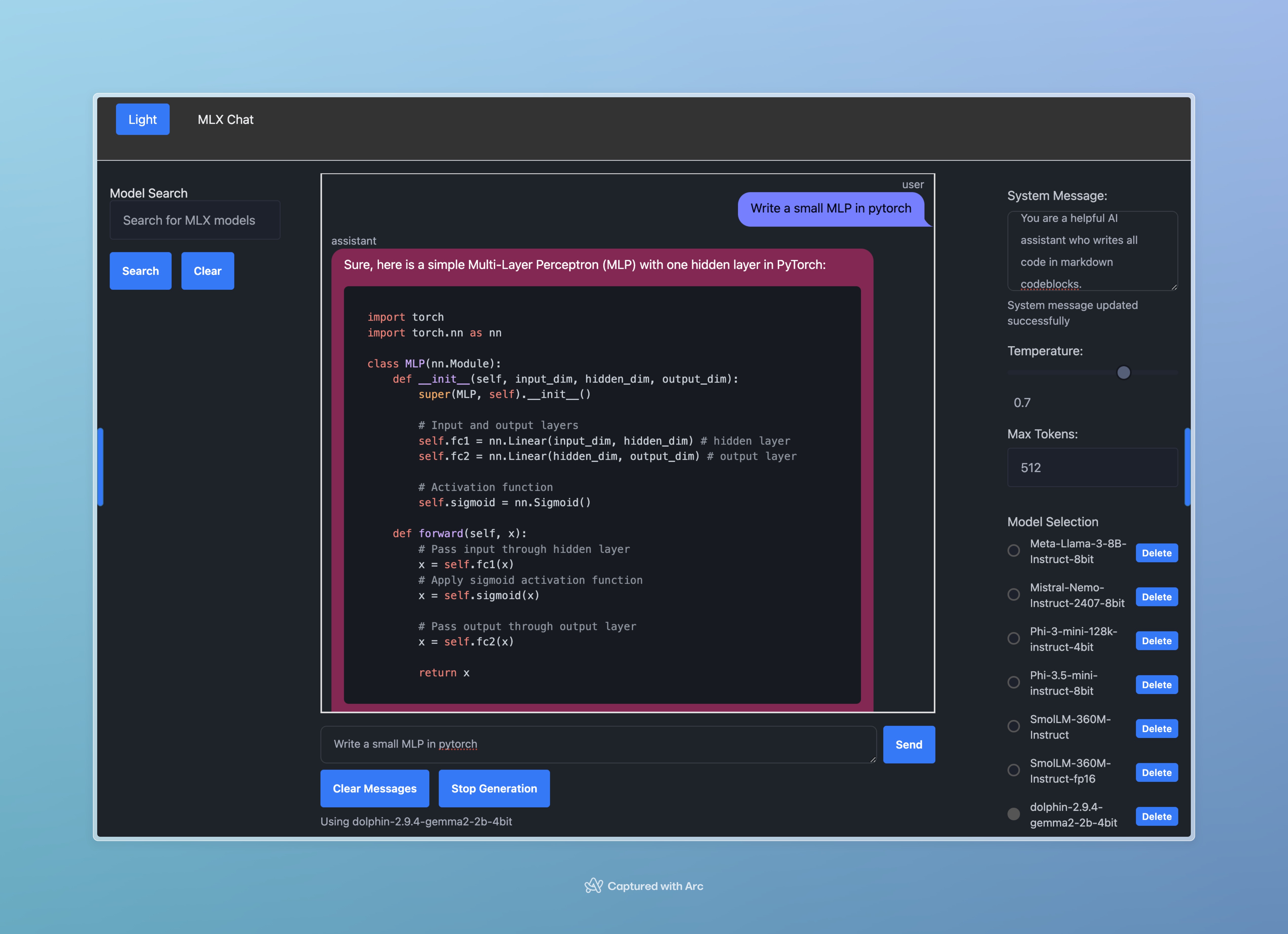

MLX Chat is an AI chatbot interface using MLX and FastHTML.

- MLX for LLM inference on Apple silicon

- FastHTML user interface

- Multiple AI model options

- Adjustable temperature and max tokens

- Conversation history

- Dark and light theme

Clone the repository:

    git clone https://github.com/yourusername/mlx-chat.git
    cd mlx-chat

Install the package and its dependencies:

    pip install -e .

To run MLX Chat, use the following command from the terminal:

    mlx_chat

- Select an AI model

- Set temperature and max tokens

- Type your message

- Send and view the AI's response
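For readers unsure what the temperature and max tokens settings in the steps above do, here is a minimal pure-Python sketch of the general idea. It is not MLX Chat's code: `sample_with_temperature`, `generate`, and `toy_logits` are hypothetical names, and the toy scores stand in for a real model. Temperature rescales the model's scores before sampling, and max tokens caps the length of the reply.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick an index from raw scores; lower temperature means less randomness."""
    if temperature <= 0:
        # Treat zero as greedy decoding: always take the highest score.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(x - peak) for x in scaled]
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate(next_logits, max_tokens, temperature, seed=0):
    """Sample up to max_tokens token ids from a hypothetical next_logits callback."""
    rng = random.Random(seed)
    tokens = []
    for _ in range(max_tokens):
        logits = next_logits(tokens)
        tokens.append(sample_with_temperature(logits, temperature, rng))
    return tokens


def toy_logits(tokens):
    """Toy stand-in for a model (an assumption): always favors token id 2."""
    return [0.1, 0.5, 3.0, 0.2]


print(generate(toy_logits, max_tokens=5, temperature=0.0))  # [2, 2, 2, 2, 2]
```

With a temperature of 0 the sketch always picks the highest-scoring token, so replies are deterministic. Higher values spread the choice across lower-scoring tokens and make replies more varied.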

MLX Chat uses MLX for on-device LLM inference and FastHTML for the user interface.

- Clear Messages

- Stop Generation

- Light/Dark Mode

- Model Search
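As a rough illustration of how controls like Clear Messages and Stop Generation can work in a chat app, here is a minimal sketch. It assumes a message list and a stop flag that is checked between streamed tokens. The `ChatSession` class and its methods are hypothetical and are not taken from MLX Chat's source.

```python
import threading


class ChatSession:
    """Hypothetical chat state: a message list plus a stop flag."""

    def __init__(self):
        self.messages = []
        self.stop_event = threading.Event()

    def add(self, role, content):
        self.messages.append({"role": role, "content": content})

    def clear(self):
        # "Clear Messages": drop the whole conversation history.
        self.messages.clear()

    def stream_reply(self, token_source):
        # Yield tokens until the source ends or "Stop Generation" is requested.
        self.stop_event.clear()
        parts = []
        for token in token_source:
            if self.stop_event.is_set():
                break
            parts.append(token)
            yield token
        # Keep whatever was generated before the stop in the history.
        self.add("assistant", "".join(parts))


session = ChatSession()
session.add("user", "Hello")
fake_tokens = iter(["Hi", " there", "!", " More text"])  # stand-in for model output
for i, token in enumerate(session.stream_reply(fake_tokens)):
    if i == 1:
        session.stop_event.set()  # simulate pressing the stop button

print(session.messages[-1])  # {'role': 'assistant', 'content': 'Hi there'}
```

Checking the flag between tokens lets the partial reply stay in the history, so the conversation remains consistent after a stop.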