This repository contains:

- snzts: source code for a time series data service
- snzscrape: a simple command-line utility for scraping the Stats NZ website
- docker: Dockerfiles for easy running and deployment of the service
- client: prototype client libraries (just an R package for now)

snzscrape used Selenium to fetch files from the Stats NZ website. A set of R scripts in snzscrape_r can be used to do the same thing using only R.

Note: I worked for Stats NZ at the time of creation, and it would not have been possible to reshape the data without arranging for the release of some internally-held metadata. However, this is not a Stats NZ product.

By far and away the easiest way to get everything up and running is to use the provided Docker Compose setup. All containers are configured to run as the current user, and to ensure this works correctly, the following environment variables need to be set:

    # In bash, UID is already set and read-only, so use plain `export UID` there.
    export UID=$(id -u)
    export GID=$(id -g)

The compose setup also makes use of two other environment variables: PG_PASS, which sets an admin password for the PostgreSQL back-end, and APPLICATION_SECRET, which is used by the front-end. APPLICATION_SECRET needs a particular format, and can be created manually as follows:

    export APPLICATION_SECRET="$(head -c 32 /dev/urandom | base64)"

The easiest option is probably to create a file called .env in the root directory with the required variables (and make sure to set its mode to 600):

    echo "GID=$(id -g)" > .env
    echo "UID=$(id -u)" >> .env
    echo "APPLICATION_SECRET=\"$(head -c 32 /dev/urandom | base64)\"" >> .env
    echo "PG_PASS=\"$(head -c 8 /dev/urandom | base64)\"" >> .env
    chmod 600 .env

snzts is an sbt project, and it must be built first. To do this, run:

    docker compose -f build_jar.yml up

To copy all the data from the Stats NZ website and create a zip file that can be loaded into the database, run:

    docker compose -f snzscrape.yml up

Then, to get everything up and running, run:

    docker compose -f snzts.yml up

This will take a while, since all of the CSV data has to be copied into a PostgreSQL database.
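The steps above can be chained in a small script. The following is only a sketch: the function names, the `REQUIRED_VARS` list, and the `DRY_RUN` switch are made up for illustration, and it assumes the first two compose files run to completion and exit on their own.

```shell
#!/usr/bin/env bash
# Sketch: check .env, then run the three compose steps in order.
# REQUIRED_VARS, check_env_file, run_steps and DRY_RUN are hypothetical names.

REQUIRED_VARS="UID GID APPLICATION_SECRET PG_PASS"

# Return 0 if the file exists and has a NAME= line for every required variable.
check_env_file() {
  cf_file="$1"
  cf_missing=0
  if [ ! -f "$cf_file" ]; then
    echo "missing file: $cf_file" >&2
    return 1
  fi
  for cf_name in $REQUIRED_VARS; do
    if ! grep -q "^${cf_name}=" "$cf_file"; then
      echo "missing variable: $cf_name" >&2
      cf_missing=1
    fi
  done
  return "$cf_missing"
}

# Run the compose steps in order, stopping if a compose command fails.
# With DRY_RUN=1 the commands are only printed.
run_steps() {
  for rs_yml in build_jar.yml snzscrape.yml snzts.yml; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "docker compose -f $rs_yml up"
    else
      docker compose -f "$rs_yml" up || return 1
    fi
  done
}

# Usage: check_env_file .env && run_steps
```

Running `DRY_RUN=1 run_steps` first prints the commands without starting any containers. The last step stays attached to the service, just as it does when run by hand.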

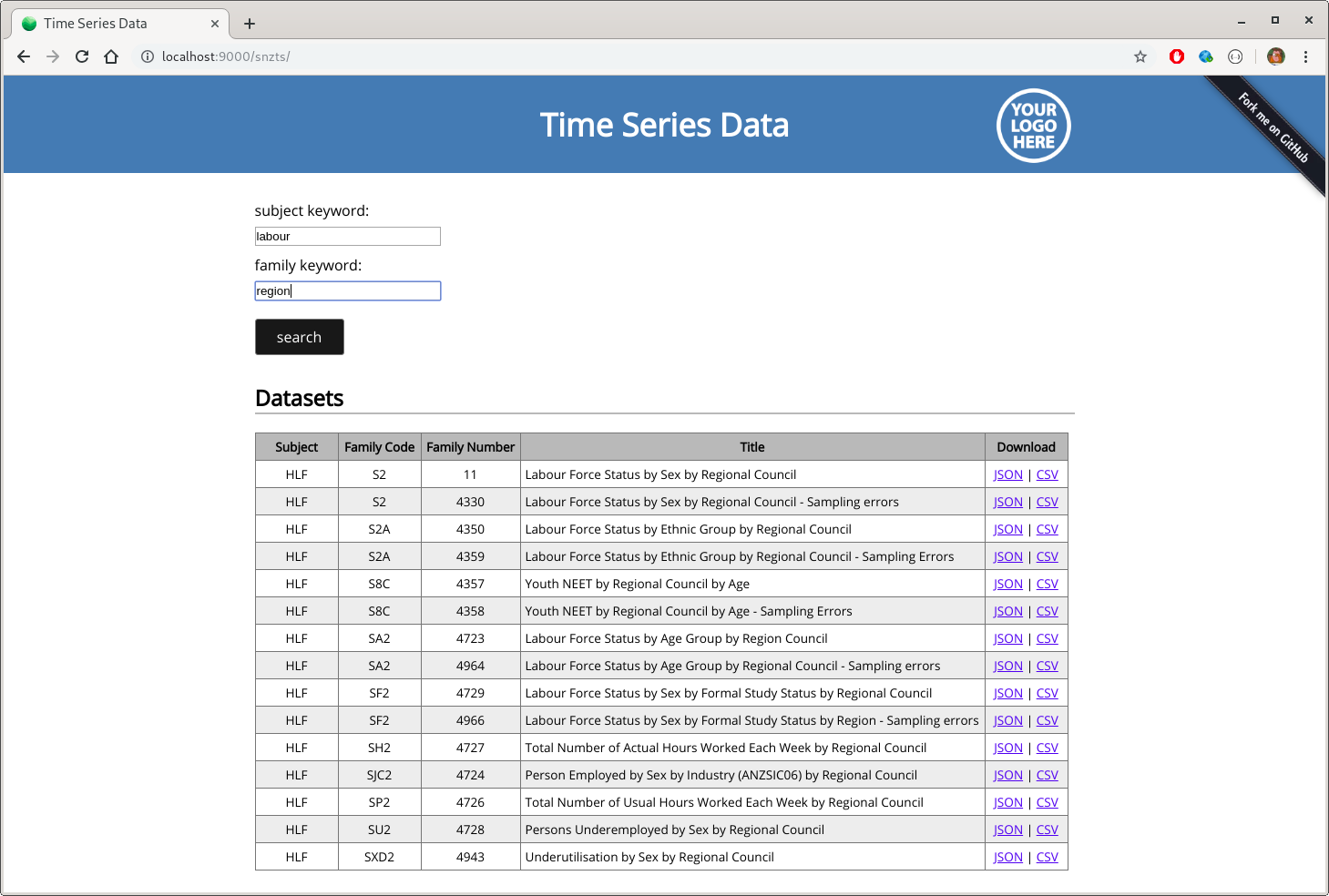

Once the database is loaded and everything is up and running, the service will be accessible at localhost:9000/snzts.
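Because the load can take a while, it may be handy to poll the address until the service answers. A minimal sketch, assuming `curl` is installed; `wait_for_url` and its default attempt count and delay are invented for this example:

```shell
# Poll a URL until it answers without an HTTP error, or give up.
# wait_for_url and its arguments (url, attempts, delay in seconds) are hypothetical.
wait_for_url() {
  wf_url="$1"
  wf_attempts="${2:-60}"
  wf_delay="${3:-10}"
  wf_i=1
  while [ "$wf_i" -le "$wf_attempts" ]; do
    # -f: treat HTTP errors (4xx/5xx) as failure, -s: quiet, -o /dev/null: discard the body
    if curl -fs -o /dev/null "$wf_url"; then
      echo "up after $wf_i attempt(s)"
      return 0
    fi
    sleep "$wf_delay"
    wf_i=$((wf_i + 1))
  done
  echo "gave up after $wf_attempts attempt(s)" >&2
  return 1
}

# Usage: wait_for_url http://localhost:9000/snzts 60 10
```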

We can stop the service at any time by running:

    docker compose -f snzts.yml down

If you want to test this with Nginx, a basic location directive that will work is as follows (I am not sure the CORS header is right):

    server {
        ...
        location /snzts/ {
            proxy_pass http://127.0.0.1:9000/snzts/;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            if ($request_method = 'GET') {
                add_header 'Access-Control-Allow-Origin' '*';
            }
        }
        ...
    }
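One way to see whether the CORS header is actually sent is to inspect the response headers of a GET request. This is a sketch only: the helper names are made up, and the URL in the usage line assumes Nginx is listening on port 80 of the local machine.

```shell
# Succeed if the response headers read from stdin include Access-Control-Allow-Origin.
# has_cors_header and get_headers are hypothetical helper names.
has_cors_header() {
  grep -qi '^access-control-allow-origin:'
}

# Send a real GET and print only the response headers.
# (curl -I would send HEAD, which the `if` in the location block does not match.)
get_headers() {
  curl -s -o /dev/null -D - "$1"
}

# Usage: get_headers http://localhost/snzts/ | has_cors_header && echo "CORS header present"
```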

Note that the backend database can also be used as the basis for a data service using tools such as PostGraphile and PostgREST. I have created a separate post about that here. Dockerfiles have been provided to demonstrate this, and they can be run at the same time as the snzts service by running:

    docker compose -f allservice.yml up -d

To create nice end-points using Nginx for PostGraphile, add something like the following to your config:

    server {
        ...
        location /snztsgql/ {
            proxy_pass http://127.0.0.1:5000/snztsgql/;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }
        ...
    }

Similarly, to create end-points for PostgREST (you'll still need to do more work to update the OpenAPI description for use with Swagger, etc.):

    http {
        ...
        upstream postgrest {
            server localhost:3000;
            keepalive 64;
        }
        ...
    }

    server {
        ...
        location /snztsrest/ {
            default_type application/json;
            proxy_hide_header Content-Location;
            add_header Content-Location /snztsrest/$upstream_http_content_location;
            proxy_set_header Connection "";
            proxy_http_version 1.1;
            proxy_pass http://postgrest/;
        }
        ...
    }