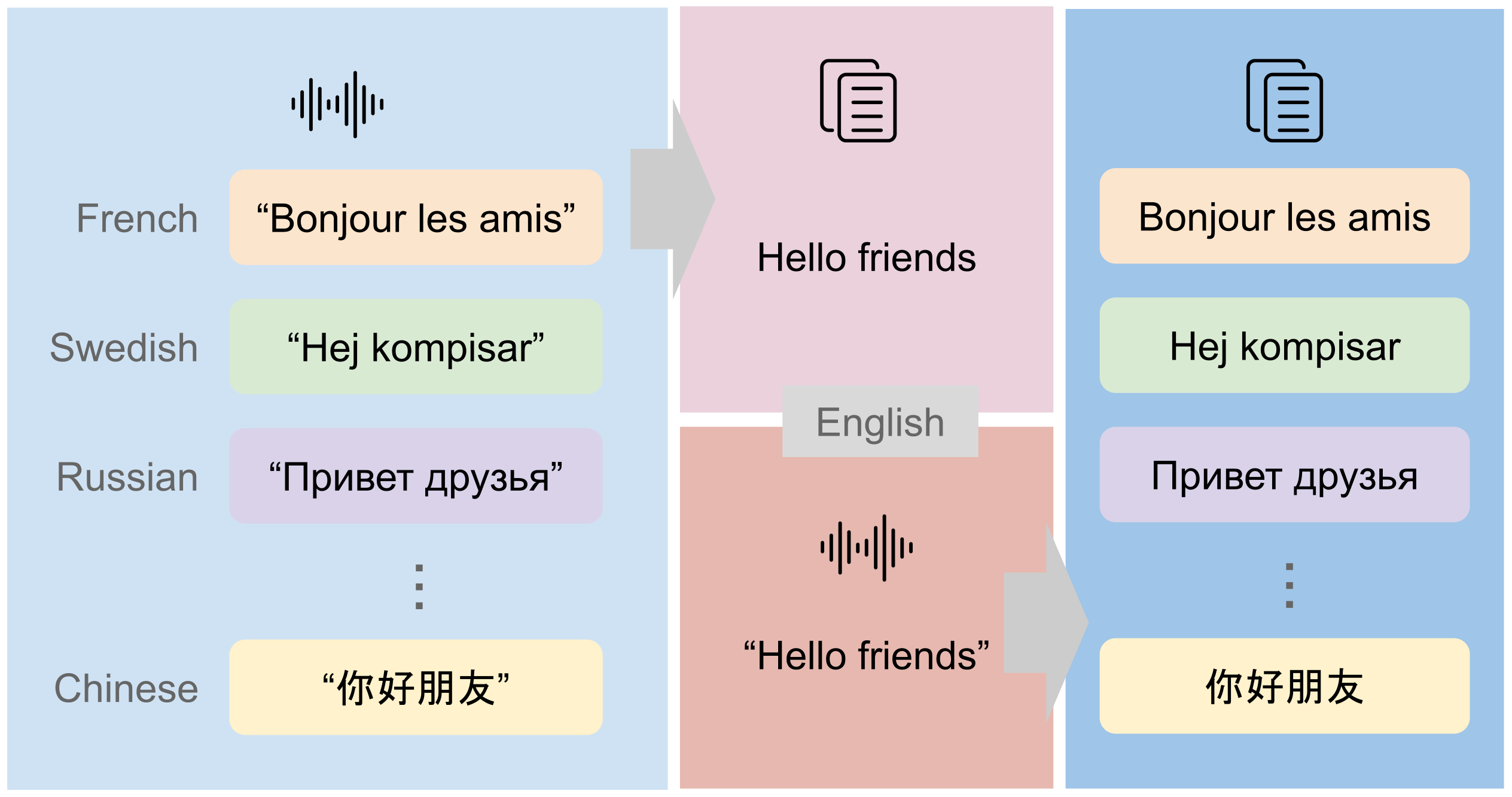

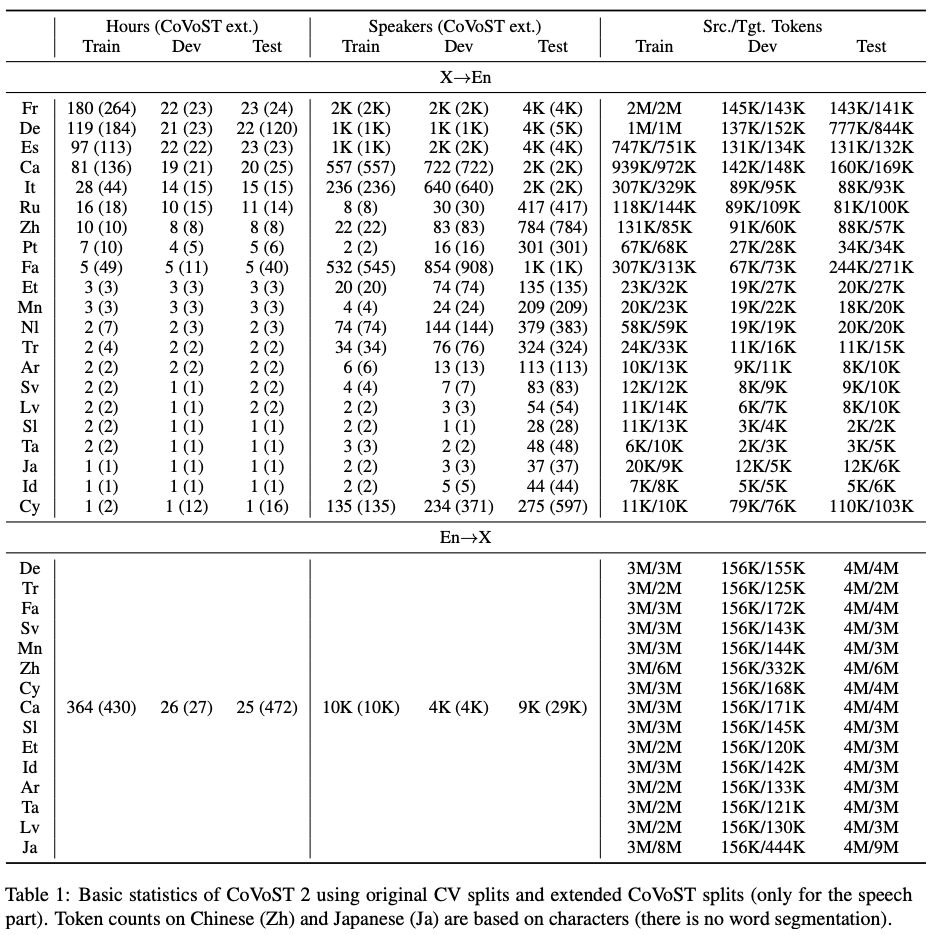

End-to-end speech-to-text translation (ST) has recently attracted increased interest because of its system simplicity, lower inference latency and fewer compounding errors compared to cascaded ST (i.e. speech recognition + machine translation). End-to-end ST model training, however, is often hampered by the lack of parallel data. Thus, we created CoVoST, a large-scale multilingual ST corpus based on Common Voice, to foster ST research with the largest ever open dataset. Its latest version covers translations from English into 15 languages (Arabic, Catalan, Welsh, German, Estonian, Persian, Indonesian, Japanese, Latvian, Mongolian, Slovenian, Swedish, Tamil, Turkish and Chinese) and from 21 languages into English, including the 15 target languages as well as Spanish, French, Italian, Dutch, Portuguese and Russian. It has a total of 2,880 hours of speech and is diversified with 78K speakers.

Please check out our papers (CoVoST 1, CoVoST 2) for more details and the VizSeq example for exploring CoVoST data.

We also provide an additional out-of-domain evaluation set from Tatoeba for 5 languages (French, German, Dutch, Russian and Spanish) into English.

- 2021-01-06: Data splitting script added. Fairseq S2T example added for model training.

- 2020-07-21: CoVoST 2 released (arXiv paper) with 25 new translation directions.

- 2020-02-27: Colab example added for exploring CoVoST data with VizSeq.

- 2020-02-13: Paper accepted to LREC 2020.

- 2020-02-07: CoVoST released.

Language code

| Lang | Code |
|---|---|
| English | en |
| French | fr |
| German | de |
| Spanish | es |
| Catalan | ca |
| Italian | it |
| Russian | ru |
| Chinese | zh-CN |
| Portuguese | pt |
| Persian | fa |
| Estonian | et |
| Mongolian | mn |
| Dutch | nl |
| Turkish | tr |
| Arabic | ar |
| Swedish | sv-SE |
| Latvian | lv |
| Slovenian | sl |
| Tamil | ta |
| Japanese | ja |
| Indonesian | id |
| Welsh | cy |
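The codes in this table are what the CoVoST 2 translation filenames are built from (`covost_v2.<src_lang_code>_<tgt_lang_code>.tsv`). As a minimal sketch, the helper below maps language names to codes and checks a pair against the translation directions listed in this README. The function name `covost_v2_tsv_name` is hypothetical and is not part of the CoVoST scripts.

```python
# Language codes copied from the table in this README.
LANG_CODES = {
    "English": "en", "French": "fr", "German": "de", "Spanish": "es",
    "Catalan": "ca", "Italian": "it", "Russian": "ru", "Chinese": "zh-CN",
    "Portuguese": "pt", "Persian": "fa", "Estonian": "et", "Mongolian": "mn",
    "Dutch": "nl", "Turkish": "tr", "Arabic": "ar", "Swedish": "sv-SE",
    "Latvian": "lv", "Slovenian": "sl", "Tamil": "ta", "Japanese": "ja",
    "Indonesian": "id", "Welsh": "cy",
}

# Languages that CoVoST 2 translates from English into (15 directions).
EN_TO_X = [
    "German", "Catalan", "Chinese", "Persian", "Estonian", "Mongolian",
    "Turkish", "Arabic", "Swedish", "Latvian", "Slovenian", "Tamil",
    "Japanese", "Indonesian", "Welsh",
]

# Languages that CoVoST 2 translates into English (21 directions).
X_TO_EN = EN_TO_X + [
    "French", "Spanish", "Italian", "Russian", "Portuguese", "Dutch",
]


def covost_v2_tsv_name(src: str, tgt: str) -> str:
    """Hypothetical helper: build the CoVoST 2 translation TSV filename
    for a language pair given by name, e.g. ("French", "English")."""
    supported = (tgt == "English" and src in X_TO_EN) or (
        src == "English" and tgt in EN_TO_X
    )
    if not supported:
        raise ValueError(f"{src} -> {tgt} is not a CoVoST 2 direction")
    return f"covost_v2.{LANG_CODES[src]}_{LANG_CODES[tgt]}.tsv"
```

For example, `covost_v2_tsv_name("French", "English")` gives `covost_v2.fr_en.tsv`, while an unlisted pair such as English into French raises `ValueError`.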

- Download Common Voice audio clips and transcripts (version 4).

- Download CoVoST 2 translations (`covost_v2.<src_lang_code>_<tgt_lang_code>.tsv`, which matches the rows in `validated.tsv` from Common Voice):

  - X into English: French, German, Spanish, Catalan, Italian, Russian, Chinese, Portuguese, Persian, Estonian, Mongolian, Dutch, Turkish, Arabic, Swedish, Latvian, Slovenian, Tamil, Japanese, Indonesian, Welsh

  - English into X: German, Catalan, Chinese, Persian, Estonian, Mongolian, Turkish, Arabic, Swedish, Latvian, Slovenian, Tamil, Japanese, Indonesian, Welsh

- Get data splits: we adopt the standard Common Voice development/test splits and an extended Common Voice train split to improve data utilization (see also Section 2.2 in our paper). Use the following script to generate the data splits:

      python get_covost_splits.py \
        --version 2 --src-lang <src_lang_code> --tgt-lang <tgt_lang_code> \
        --root <root path to the translation TSV and output TSVs> \
        --cv-tsv <path to validated.tsv>

  You should get 3 TSV files (`covost_v2.<src_lang_code>_<tgt_lang_code>.<split>.tsv`) for `train`, `dev` and `test` splits, respectively. Each of them has 4 columns: `path` (audio filename), `sentence` (transcript), `translation` and `client_id` (speaker ID).
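Once the splits are generated, each TSV can be loaded with the Python standard library. The sketch below assumes the files are tab-separated with a header row naming the four columns described above, and that fields are not quoted (hence `csv.QUOTE_NONE`); both are assumptions about the file layout, not guarantees from the script. The function names are hypothetical.

```python
import csv

# The four columns each split TSV is described as having.
EXPECTED_COLUMNS = ["path", "sentence", "translation", "client_id"]


def read_split(tsv_path):
    """Load one split TSV into a list of dicts keyed by column name."""
    with open(tsv_path, encoding="utf-8", newline="") as f:
        # Assumption: tab-separated, unquoted fields, header on the first line.
        reader = csv.DictReader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        header = reader.fieldnames or []
        missing = [c for c in EXPECTED_COLUMNS if c not in header]
        if missing:
            raise ValueError(f"{tsv_path} is missing columns: {missing}")
        return list(reader)


def count_speakers(rows):
    """Count distinct speakers (client_id values) in a loaded split."""
    return len({row["client_id"] for row in rows})
```

A call such as `read_split("covost_v2.fr_en.dev.tsv")` would then return one dict per utterance, with the audio filename under `path` and the reference translation under `translation`.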

- Download Common Voice audio clips and transcripts (version 3).

- Download CoVoST translations (`covost.<src_lang_code>_<tgt_lang_code>.tsv`, which matches the rows in `validated.tsv` from Common Voice):

- Get data splits: we use extended Common Voice splits to improve data utilization. Use the following script to generate the data splits:

      python get_covost_splits.py \
        --version 1 --src-lang <src_lang_code> --tgt-lang <tgt_lang_code> \
        --root <root path to the translation TSV and output TSVs> \
        --cv-tsv <path to validated.tsv>

  You should get 3 TSV files (`covost.<src_lang_code>_<tgt_lang_code>.<split>.tsv`) for `train`, `dev` and `test` splits, respectively. Each of them has 4 columns: `path` (audio filename), `sentence` (transcript), `translation` and `client_id` (speaker ID).
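When generating splits for several language pairs, it can help to assemble the command in code. The sketch below only builds the argument list for `get_covost_splits.py` using the flags shown above, together with the output filenames the README says to expect. It does not run the script, and the helper names and example paths are hypothetical.

```python
import shlex


def build_split_command(version, src_lang, tgt_lang, root, cv_tsv):
    """Build the argument list for get_covost_splits.py (version 1 or 2)."""
    if version not in (1, 2):
        raise ValueError("version must be 1 or 2")
    return [
        "python", "get_covost_splits.py",
        "--version", str(version),
        "--src-lang", src_lang,
        "--tgt-lang", tgt_lang,
        "--root", root,
        "--cv-tsv", cv_tsv,
    ]


def expected_split_files(version, src_lang, tgt_lang):
    """List the three split TSV filenames described in this README."""
    prefix = "covost_v2" if version == 2 else "covost"
    return [
        f"{prefix}.{src_lang}_{tgt_lang}.{split}.tsv"
        for split in ("train", "dev", "test")
    ]


if __name__ == "__main__":
    # Example paths are placeholders; substitute your own locations.
    cmd = build_split_command(2, "fr", "en", "data/covost", "data/cv/fr/validated.tsv")
    print(shlex.join(cmd))  # shell-quoted form, ready to copy into a terminal
    print(expected_split_files(2, "fr", "en"))
```

The list returned by `build_split_command` can be passed to `subprocess.run(cmd, check=True)` from the directory containing the script, which avoids shell-quoting problems with paths that contain spaces.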

- Download the Tatoeba transcripts and translations and extract the files to `data/tt/*`.

- Download speech data:

      python get_tt_speech.py \
        --root <mp3 download root (default to data/tt/mp3)>

We provide a fairseq S2T example for speech recognition/translation model training.

| Content | License |
|---|---|
| CoVoST data | CC0 |
| Tatoeba sentences | CC BY 2.0 FR |
| Tatoeba speeches | Various CC licenses (please check out the "audio_license" column in `data/tt/tatoeba20191004.s2t.<lang>_en.tsv`) |
| Anything else | CC BY-NC 4.0 |
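Because the Tatoeba speech clips carry different CC licenses, it may be useful to tally them before redistributing any audio. The sketch below counts the values in the `audio_license` column of one Tatoeba TSV. It assumes the file is tab-separated with a header row and unquoted fields, which is an assumption about the layout; the function name is hypothetical.

```python
import csv
from collections import Counter


def tally_audio_licenses(tsv_path):
    """Count how many rows carry each value of the audio_license column."""
    with open(tsv_path, encoding="utf-8", newline="") as f:
        # Assumption: tab-separated, unquoted fields, header on the first line.
        reader = csv.DictReader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        if "audio_license" not in (reader.fieldnames or []):
            raise ValueError(f"{tsv_path} has no audio_license column")
        return Counter(row["audio_license"] for row in reader)
```

Calling it on, say, `data/tt/tatoeba20191004.s2t.fr_en.tsv` and printing `most_common()` on the result lists the licenses from most to least frequent.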

Please cite as (CoVoST 2)

    @misc{wang2020covost,
        title={CoVoST 2: A Massively Multilingual Speech-to-Text Translation Corpus},
        author={Changhan Wang and Anne Wu and Juan Pino},
        year={2020},
        eprint={2007.10310},
        archivePrefix={arXiv},
        primaryClass={cs.CL}
    }

and (CoVoST 1)

    @inproceedings{wang-etal-2020-covost,
        title = "{C}o{V}o{ST}: A Diverse Multilingual Speech-To-Text Translation Corpus",
        author = "Wang, Changhan and
          Pino, Juan and
          Wu, Anne and
          Gu, Jiatao",
        booktitle = "Proceedings of The 12th Language Resources and Evaluation Conference",
        month = may,
        year = "2020",
        address = "Marseille, France",
        publisher = "European Language Resources Association",
        url = "https://www.aclweb.org/anthology/2020.lrec-1.517",
        pages = "4197--4203",
        abstract = "Spoken language translation has recently witnessed a resurgence in popularity, thanks to the development of end-to-end models and the creation of new corpora, such as Augmented LibriSpeech and MuST-C. Existing datasets involve language pairs with English as a source language, involve very specific domains or are low resource. We introduce CoVoST, a multilingual speech-to-text translation corpus from 11 languages into English, diversified with over 11,000 speakers and over 60 accents. We describe the dataset creation methodology and provide empirical evidence of the quality of the data. We also provide initial benchmarks, including, to our knowledge, the first end-to-end many-to-one multilingual models for spoken language translation. CoVoST is released under CC0 license and free to use. We also provide additional evaluation data derived from Tatoeba under CC licenses.",
        language = "English",
        ISBN = "979-10-95546-34-4",
    }

Changhan Wang (changhan@fb.com), Juan Miguel Pino (juancarabina@fb.com), Jiatao Gu (jgu@fb.com)