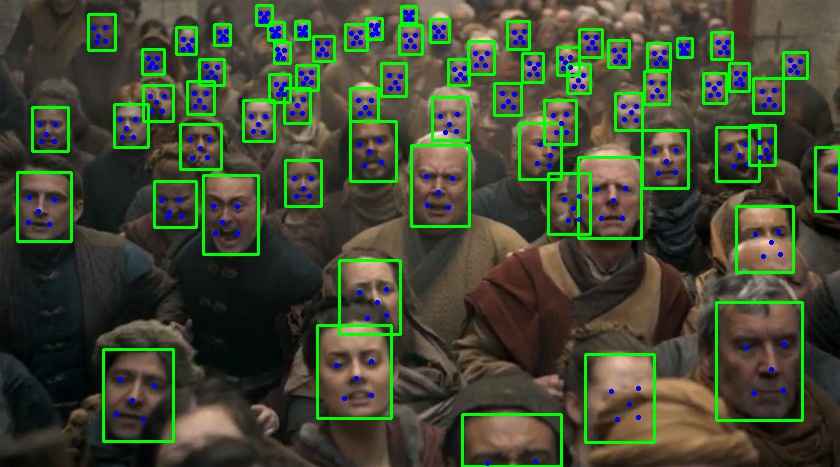

Demonstration of some pretty good facial rec tech using a famous selfie with a bunch of stars.

TL/DR - jump to results

At a high level, facial recognition consists of two steps: detection and embedding.

- detection - Takes a large image and produces a list of faces in the image. In our case this piece uses fully-convolutional approaches, which means they take an input image and produce an output 'image' where each pixel consists of a bounding box, a confidence measure, and a set of landmarks. A second step does non-maximum suppression (NMS) of the data, which is a fancy way of saying it looks at overlapping boxes and keeps the highest-confidence one. From here we get a list of faces and landmarks. We crop the faces out of the source image and align them using the landmarks to a set of 'standard' landmarks used during training.

- embedding - The next step is to produce an embedding, which is an N-dimensional vector. In our case that vector has 512 elements. These vectors have a special property: faces that look more similar are closer in Euclidean space. So, for example, if I have two images of Tom Hanks and I create two embeddings, I would expect the distance between them to be less than the distance between, say, an image of Tom Hanks and an image of Denzel Washington.
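The non-maximum suppression step in the detection stage can be sketched in a few lines of Python. This is a minimal greedy version for illustration, not the Cython implementation the detector ships with; the function names, the `(x1, y1, x2, y2)` box layout, and the `0.4` overlap threshold are all assumptions made for the example.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.4) -> List[int]:
    """Greedy NMS: return indices of the boxes to keep, best score first."""
    # Visit candidates from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Toy data: the first two boxes cover the same face, the third is separate.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.95]
kept = non_max_suppression(boxes, scores)
print(kept)
```

Of the two overlapping boxes, only the higher-confidence one survives, which is the "look at overlaps and take the highest confidence one" behavior described above.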

One of the most interesting aspects to me is that I can use a deep learning system to construct a metric space where distance corresponds to something very abstract, like how similar two people appear or how similar two outfits are. In this sense, the target of our learning algorithm is a good embedding in our new space.
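To make the "distance means similarity" idea concrete, here is a small Python sketch that compares embeddings by Euclidean distance. The vectors are made-up 4-element toys rather than real 512-element network outputs, and the variable names are purely illustrative.

```python
import math
from typing import Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two embeddings of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical toy embeddings (real ones would have 512 elements).
hanks_a = [0.9, 0.1, 0.0, 0.1]     # first image of Tom Hanks
hanks_b = [0.8, 0.2, 0.1, 0.1]     # second image of Tom Hanks
washington = [0.1, 0.9, 0.2, 0.0]  # image of Denzel Washington

same_person = euclidean_distance(hanks_a, hanks_b)
different_people = euclidean_distance(hanks_a, washington)

# With a good embedding we expect the first distance to be the smaller one.
print(same_person < different_people)
```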

That is it. That is how facial rec works at the base level. If you are more curious as to how this works from scratch please review the RetinaFace detector and the ArcFace loss function.

Notice that the ArcFace paper is specifically about a loss function only. In case that is unfamiliar: the loss function is the device you use to tell a deep learning system exactly what to target during training. The loss function, however, isn't specific to the network it is used to train, so one could use it to train other types of similarity measures, potentially with networks that aren't specific to image recognition.
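For the curious, here is a rough single-sample sketch of an ArcFace-style loss in plain Python. It follows the additive angular margin formulation from the paper (normalize the embedding and class weights, add a margin `m` to the angle of the true class, scale by `s`, then apply softmax cross-entropy). The function names are hypothetical, the defaults `scale=64.0` and `margin=0.5` are the values commonly quoted for ArcFace, and the sketch skips the special handling real implementations need when the angle plus margin exceeds pi.

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def arcface_loss(embedding: Sequence[float],
                 class_weights: Sequence[Sequence[float]],
                 target: int,
                 scale: float = 64.0,
                 margin: float = 0.5) -> float:
    """Additive-angular-margin softmax loss for one sample (sketch)."""
    e = normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        # Cosine of the angle between the embedding and class center j.
        cos_theta = sum(a * b for a, b in zip(e, normalize(w)))
        cos_theta = max(-1.0, min(1.0, cos_theta))  # guard acos domain
        if j == target:
            # Penalize the true class by widening its angle by the margin.
            cos_theta = math.cos(math.acos(cos_theta) + margin)
        logits.append(scale * cos_theta)
    # Numerically stable softmax cross-entropy.
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(l - top) for l in logits))
    return log_sum_exp - logits[target]


# Two toy classes whose centers lie along the axes.
weights = [[1.0, 0.0], [0.0, 1.0]]
loss_correct = arcface_loss([1.0, 0.1], weights, target=0)
loss_wrong = arcface_loss([1.0, 0.1], weights, target=1)
print(loss_correct < loss_wrong)
```

Nothing in the function refers to images, which is the point made above: the same loss could in principle be attached to any network that produces an embedding.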

If anyone asks you how facial rec works you can say:

Chris Nuernberger told me facial recognition uses, among other things, a deep learning system to embed an image of a face into a metric space where distance is related to how likely the two images represent the same physical person.

:-). Enjoy!

This system is built to show a realistic example of a cutting-edge system. As such it rests on four components:

- docker

- Conda

- Python

- Clojure

The most advanced piece of the demo is actually the facial detection component. Luckily, it was nicely wrapped. To get it working we needed cython working and there is some good information there if you want to use a system that is based partially on cython.

Installing docker is system-specific, but on all systems you want to install it so that you can run it without sudo.

This script mainly downloads the models used for detection and feature embedding.

    scripts/get-data

Then start the Conda Docker container:

    scripts/run-conda-docker

The port is printed out in a line like:

    nREPL server started on port 44507 on host localhost - nrepl://localhost:44507

Now in emacs, vim or somewhere connect to the exposed port on localhost.

    (require '[facial-rec.demo :as demo])
    ;;long pause as things compile

At this point, the system is dynamically compiling cython and upgrading the networks to the newest version of mxnet. This is a noisy process for a few reasons: we are loading a newer numpy, compiling files, and loading networks. You will see warnings in the repl, and the stdout of your docker container will display some errors regarding compiling gpu non-maximum suppression (nms) algorithms:

    In file included from /home/chrisn/.conda/envs/pyclj/lib/python3.6/site-packages/numpy/core/include/numpy/ndarraytypes.h:1832:0,
    from /home/chrisn/.conda/envs/pyclj/lib/python3.6/site-packages/numpy/core/include/numpy/ndarrayobject.h:12,
    from /home/chrisn/.conda/envs/pyclj/lib/python3.6/site-packages/numpy/core/include/numpy/arrayobject.h:4,
    from /home/chrisn/.pyxbld/temp.linux-x86_64-3.6/pyrex/rcnn/cython/gpu_nms.c:598:
    /home/chrisn/.conda/envs/pyclj/lib/python3.6/site-packages/numpy/core/include/numpy/npy_1_7_deprecated_api.h:17:2: warning: #warning "Using deprecated NumPy API, disable it with " "#define NPY_NO_DEPRECATED_API NPY_1_7_API_VERSION" [-Wcpp]
    #warning "Using deprecated NumPy API, disable it with " \
    ^~~~~~~
    /home/chrisn/.pyxbld/temp.linux-x86_64-3.6/pyrex/rcnn/cython/gpu_nms.c:600:10: fatal error: gpu_nms.hpp: No such file or directory
    #include "gpu_nms.hpp"
    ^~~~~~~~~~~~~

Interestingly enough, the system still works fine. The nms errors are around building the gpu version of the nms algorithms and we aren't using the gpu for this demo. Nothing to see here.

    (def faces (find-annotate-faces!))
    ;;...pause...
    #'faces

Now there are cutout faces in the faces subdir. You can do nearest searches in the demo namespace and see how well this network does.

    (output-face-results! faces)

This takes each face, finds the 5 nearest, and outputs the results to results.md.
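Conceptually, the "find the 5 nearest" step is a brute-force nearest-neighbor search over the embeddings. The Python sketch below illustrates the idea only; it is not the demo's Clojure implementation. The `nearest` function, the face ids, the 2-element toy vectors, and the choice to exclude the query face from its own results are all assumptions.

```python
import math
from typing import Dict, List, Sequence


def nearest(query_id: str,
            embeddings: Dict[str, Sequence[float]],
            k: int = 5) -> List[str]:
    """Return the ids of the k faces closest to the query face."""
    query = embeddings[query_id]
    distances = []
    for face_id, vec in embeddings.items():
        if face_id == query_id:
            continue  # assumption: a face is not its own neighbor
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(query, vec)))
        distances.append((dist, face_id))
    distances.sort()  # smallest distance first
    return [face_id for _, face_id in distances[:k]]


# Hypothetical toy embeddings keyed by face id.
toy_embeddings = {
    "a": [0.0, 0.0],
    "b": [0.1, 0.0],
    "c": [1.0, 1.0],
    "d": [0.2, 0.1],
}
closest_two = nearest("a", toy_embeddings, k=2)
print(closest_two)
```

A linear scan like this is fine for a handful of faces; a much larger dataset would usually call for an approximate nearest-neighbor index instead.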

We use grip to view the markdown files locally.

Going further, any non-directory files in the dataset directory will be scanned and added to the dataset so feel free to try it with your friends and family and see how good the results are.

Copyright © 2019 Chris Nuernberger

This program and the accompanying materials are made available under the terms of the Eclipse Public License 2.0 which is available at http://www.eclipse.org/legal/epl-2.0.