RepoFuse is a pioneering solution designed to enhance repository-level code completion without the latency trade-off. RepoFuse uniquely fuses two types of context: the analogy context, rooted in code analogies, and the rationale context, which encompasses in-depth semantic relationships. We propose a novel rank truncated generation (RTG) technique that efficiently condenses these contexts into prompts with restricted size. This enables RepoFuse to deliver precise code completions while maintaining inference efficiency. Our evaluations using the CrossCodeEval suite reveal that RepoFuse outperforms common open-source methods, achieving an average improvement of 3.97 in code exact match score for the Python dataset and 3.01 for the Java dataset compared to state-of-the-art baseline methods.

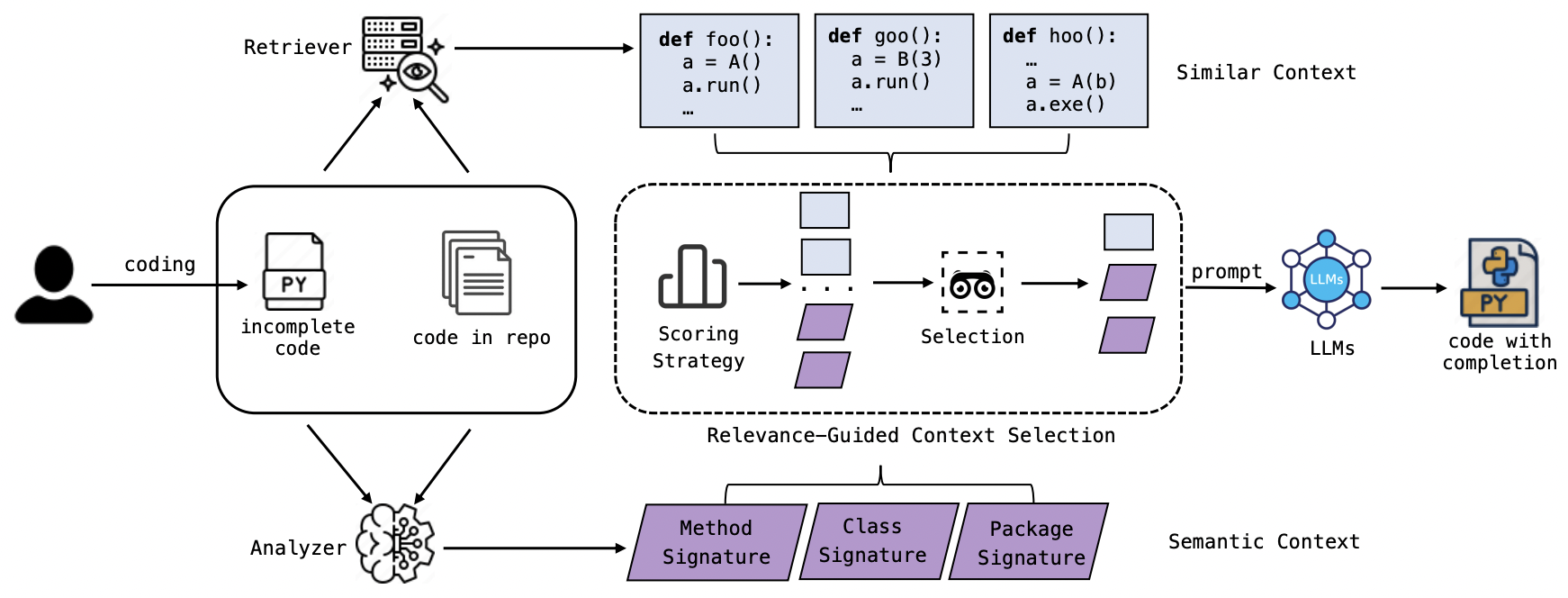

Figure: The workflow of RepoFuse
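
To make the idea of fitting ranked context into a size-restricted prompt concrete, here is a minimal sketch. It is not the RTG algorithm from the paper: the `Candidate` class, the relevance scores, and the whitespace-based `count_tokens` are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str     # a retrieved context snippet
    score: float  # hypothetical relevance score from any ranker
    kind: str     # "analogy" or "rationale"


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words stand in for a real tokenizer.
    return len(text.split())


def rank_and_truncate(candidates, budget):
    """Keep the highest-scoring candidates that fit within `budget` tokens."""
    kept, used = [], 0
    for cand in sorted(candidates, key=lambda c: c.score, reverse=True):
        cost = count_tokens(cand.text)
        if used + cost > budget:
            continue  # skip snippets that would overflow the prompt
        kept.append(cand)
        used += cost
    return kept


candidates = [
    Candidate("def add(a, b): return a + b", 0.9, "analogy"),
    Candidate("class Calculator: def add(self, a, b): ...", 0.7, "rationale"),
    Candidate("import math", 0.2, "rationale"),
]
selected = rank_and_truncate(candidates, budget=10)
print([c.text for c in selected])
```

With a budget of 10 tokens, the second snippet is skipped because it would overflow the prompt, while the shorter third snippet still fits.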

The Repo-specific semantic graph is a tool that extracts the dependency relationships between entities in the code and stores them as a directed multigraph (a directed graph that allows several edges between the same pair of nodes). We use this graph to construct the context for code completion.

See repo_specific_semantic_graph/README.md for details.
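
As a rough illustration of the data structure, the sketch below stores code entities as nodes and typed dependencies as parallel directed edges. It does not use the package's API: the `MultiDiGraph` class, the entity names, and the relation labels (`calls`, `imports`, `instantiates`) are hypothetical.

```python
from collections import defaultdict


class MultiDiGraph:
    """Minimal directed multigraph: parallel edges between two nodes are allowed."""

    def __init__(self):
        # Maps a source entity to a list of (target, relation) pairs.
        self._out = defaultdict(list)

    def add_edge(self, source, target, relation):
        self._out[source].append((target, relation))

    def successors(self, source, relation=None):
        """Return targets reachable from `source`, optionally filtered by relation."""
        return [t for t, r in self._out[source] if relation is None or r == relation]


graph = MultiDiGraph()
# Two different relations between the same pair of entities.
graph.add_edge("app.py::main", "utils.py::load", "calls")
graph.add_edge("app.py::main", "utils.py::load", "imports")
graph.add_edge("app.py::main", "models.py::User", "instantiates")

print(graph.successors("app.py::main", relation="calls"))
```

Keeping one edge per relation type lets a retriever ask for, say, only the entities a function calls when assembling context.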

- Follow the instructions in repo_specific_semantic_graph/README.md#install to install the Repo-specific semantic graph Python package.

- Install the remaining dependencies that the scripts depend on: `pip install -r retrieval/requirements.txt`

- Download the CrossCodeEval dataset and the raw data from https://github.com/amazon-science/cceval

- Run `retrieval/construct_cceval_data.py` to construct the Repo-Specific Semantic Graph context data. You can run `python retrieval/construct_cceval_data.py -h` for help on the arguments. For example: `python retrieval/construct_cceval_data.py -d <path/to/CrossCodeEval>/crosscodeeval_data/python/line_completion_oracle_bm25.jsonl -o <path/to/output_dir>/line_completion_dependency_graph.jsonl -r <path/to/CrossCodeEval>/crosscodeeval_rawdata -j 10 -l python`
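
After the script finishes, a quick sanity check of the generated `.jsonl` file can catch an empty or malformed output early. The helper below is a hypothetical sketch that is not part of the repository; it assumes only that the output is in JSON Lines format (one JSON object per line) and makes no assumption about field names. The demo file and its `id`/`text` keys are placeholders.

```python
import json
import tempfile
from pathlib import Path


def summarize_jsonl(path):
    """Return (record_count, sorted keys of the first record) for a JSON Lines file."""
    count, first_keys = 0, []
    with Path(path).open(encoding="utf-8") as handle:
        for line in handle:
            if not line.strip():
                continue  # tolerate blank lines
            record = json.loads(line)
            if count == 0:
                first_keys = sorted(record)
            count += 1
    return count, first_keys


# Demo on a throwaway file; point summarize_jsonl at your real output instead.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "demo.jsonl"
    demo_path.write_text(
        '{"id": 1, "text": "a"}\n{"id": 2, "text": "b"}\n', encoding="utf-8"
    )
    demo_summary = summarize_jsonl(demo_path)

print(demo_summary)
```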

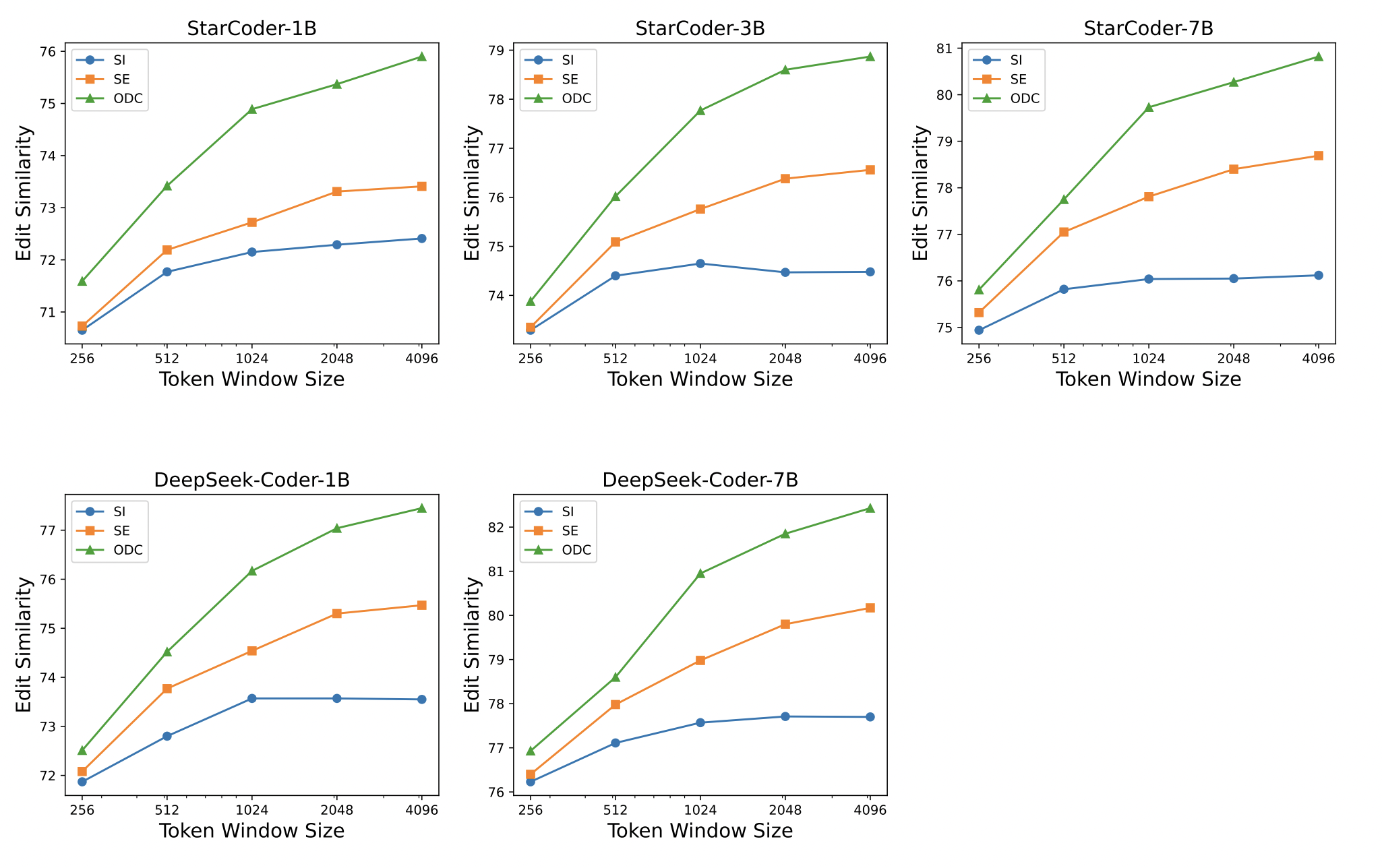

The following figure demonstrates the overall performance of RepoFuse:

We conducted experiments on DeepSeek and StarCoder models with varying parameter sizes, comparing the performance of using only Similar context, only Semantic context, and using Optimal Dual Context (ODC) under different Token Window sizes. The results show that ODC achieves the best performance across different models and Token Window sizes. To reproduce the results of this experiment, please follow these steps:

- Run `cd eval && pip install -r requirements.txt` to install the evaluation environment.
- Modify the configuration in `eval.sh`, specifically the following:
  - `model_name_or_path`: Replace {YOUR_MODEL_PATH} with the path to your model.
  - `prompt_file`: Replace {YOUR_PROMPT_FILE} with the path to your prompt file.
  - `cfc_seq_length_list`: Adjust the list of lengths for the crossfile content prompt as needed. You can pass in multiple values at once, separated by commas.
  - `crossfile_type`: The type of crossfile content to use. You can choose from Similar, Related and S_R. You can pass in multiple values at once, separated by commas.
  - `ranking_strategy_list`: Specify the ranking strategies to use. You can choose from UnixCoder, Random, CodeBert, Jaccard, Edit, BM25, InDegree, and Es_Orcal.
  - `lang`: Set the test language. Supported languages are python, java, csharp, and typescript.
- Run `bash eval.sh`
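
Because a mistyped option value can waste a long evaluation run, it may help to check the settings before launching. The sketch below is a hypothetical helper, not part of the repository: the `settings` dictionary stands in for the values you put in `eval.sh`, the allowed values are copied from the list above, and treating `ranking_strategy_list` as comma-separated is an assumption.

```python
# Allowed values as listed in this README.
SUPPORTED = {
    "crossfile_type": {"Similar", "Related", "S_R"},
    "ranking_strategy_list": {
        "UnixCoder", "Random", "CodeBert", "Jaccard",
        "Edit", "BM25", "InDegree", "Es_Orcal",
    },
    "lang": {"python", "java", "csharp", "typescript"},
}


def parse_list(value):
    """Split a comma-separated string into trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def validate(settings):
    """Return a list of problems; an empty list means the settings look valid."""
    problems = []
    for key, allowed in SUPPORTED.items():
        for item in parse_list(settings.get(key, "")):
            if item not in allowed:
                problems.append(f"{key}: unsupported value {item!r}")
    for item in parse_list(settings.get("cfc_seq_length_list", "")):
        if not item.isdigit() or int(item) <= 0:
            problems.append(f"cfc_seq_length_list: not a positive integer {item!r}")
    return problems


settings = {
    "cfc_seq_length_list": "512,1024,abc",
    "crossfile_type": "Similar,S_R",
    "ranking_strategy_list": "BM25,Jaccard",
    "lang": "python",
}
print(validate(settings))
```

Here only the malformed length `abc` is reported; the other values match the supported options.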

Contributions are welcome! If you have suggestions, ideas, bug reports, or requests for new model or feature support, please open an issue or submit a pull request.

If you find our work useful for your research and development, please cite our paper as below.

    @misc{liang2024repofuserepositorylevelcodecompletion,
      title={RepoFuse: Repository-Level Code Completion with Fused Dual Context},
      author={Ming Liang and Xiaoheng Xie and Gehao Zhang and Xunjin Zheng and Peng Di and wei jiang and Hongwei Chen and Chengpeng Wang and Gang Fan},
      year={2024},
      eprint={2402.14323},
      archivePrefix={arXiv},
      primaryClass={cs.SE},
      url={https://arxiv.org/abs/2402.14323},
    }