This repository contains the source code for the paper "Revisiting instance search: a new benchmark using Cycle Self-Training", including:

- the annotation description
- the evaluation protocol and leaderboard
- Train/Evaluate/Demo code

- Please download the original images from CUHK-SYSU [2] and PRW [3]. Other information, including the naming style and image size, can also be found on the original project pages.

- We provide txt annotation files in the `annotation` folder. The annotation protocol is similar to PRW: `gallery.txt` and `query.txt` contain annotations in the format [ID, left, top, right, bottom, image_name]. Only objects that appear in at least 2 cameras are taken into account.
  - The INS-PRW dataset has 7,834 bboxes in `INS_PRW_gallery.txt` and 1,537 bboxes in `INS_PRW_query.txt`.
  - The INS-CUHK-SYSU dataset has 16,780 bboxes in `INS_CUHK_SYSU_gallery.txt` and 6,972 bboxes in `INS-CUHK_SYSU_query.txt`. `INS_CUHK_SYSU_local_gallery.txt` lists the local distractors for each query, similar to the local gallery setting in the original CUHK-SYSU.
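As a rough illustration of the `[ID, left, top, right, bottom, image_name]` format, the Python sketch below parses an annotation file into records. It is not part of the repository: `Annotation`, `parse_annotation_line` and `load_annotations` are hypothetical names, and the field separator (commas and/or whitespace, optionally wrapped in square brackets) is an assumption, since the README does not specify it.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Annotation:
    """One line of an annotation file: [ID, left, top, right, bottom, image_name]."""
    obj_id: int
    left: float
    top: float
    right: float
    bottom: float
    image_name: str


def parse_annotation_line(line: str) -> Annotation:
    # Assumption: fields are separated by commas and/or whitespace and may be
    # wrapped in square brackets; adjust the split if the real files differ.
    fields = [f for f in re.split(r"[,\s]+", line.strip().strip("[]")) if f]
    if len(fields) != 6:
        raise ValueError(f"expected 6 fields, got {len(fields)}: {line!r}")
    obj_id, left, top, right, bottom, image_name = fields
    return Annotation(int(obj_id), float(left), float(top),
                      float(right), float(bottom), image_name)


def load_annotations(path: str) -> List[Annotation]:
    # Skip blank lines; every other line must follow the 6-field format.
    with open(path) as f:
        return [parse_annotation_line(line) for line in f if line.strip()]
```

Under these assumptions, and assuming the files sit directly in `annotation/`, `load_annotations("annotation/INS_PRW_query.txt")` would return one record per query box.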


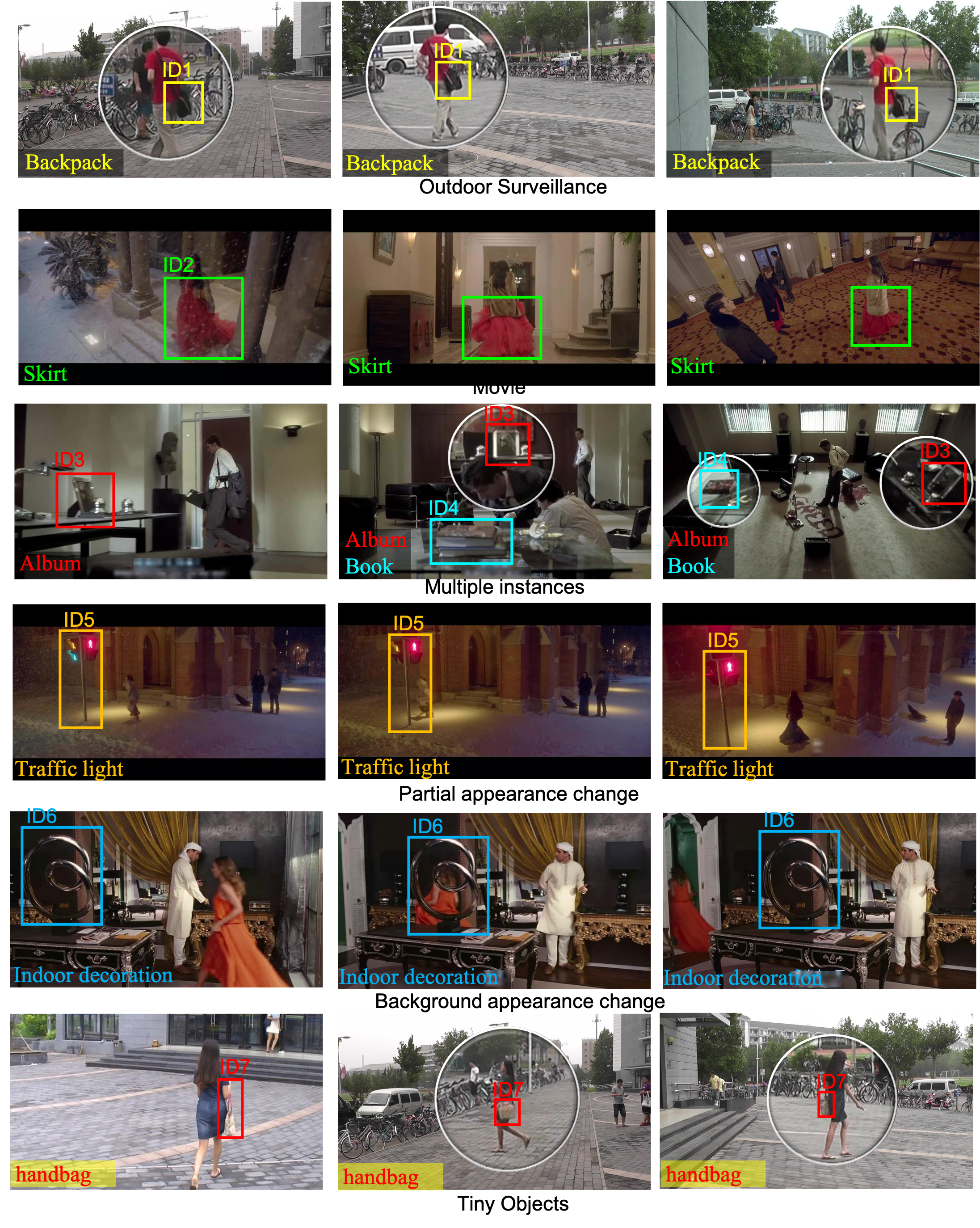

- Sample images from INS-PRW and INS-CUHK-SYSU are shown below. Note the

non saliency(great scale change),class-agnostic,great view changefeature of the datasets.

- The statistics of INS-PRW and INS-CUHK-SYSU are shown below.

| Name | IDs | Images | Bboxes | Queries |
|---|---|---|---|---|
| INS-PRW | 535 | 6,079 | 7,834 | 1,537 |
| INS-CUHK-SYSU | 6,972 | 9,648 | 16,780 | 6,972 |

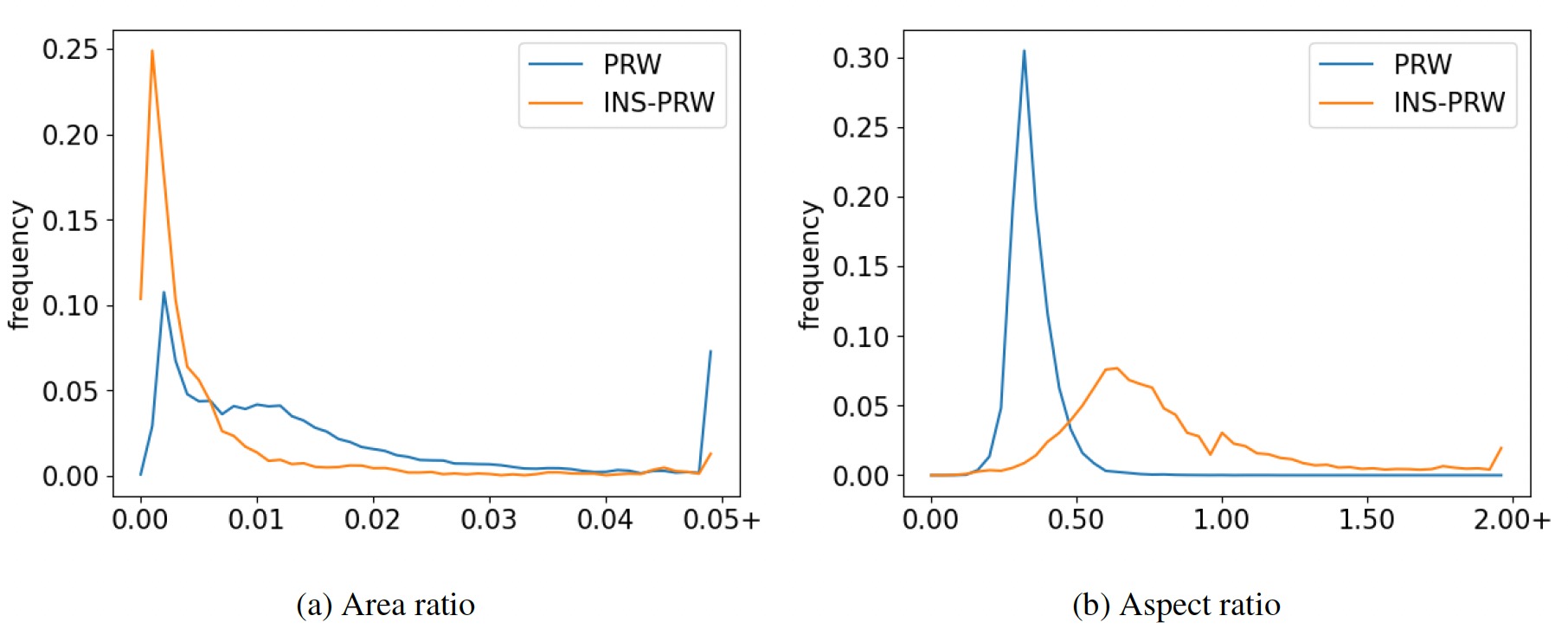

- Distribution of the normalized area and aspect ratio of the annotated boxes. From (a), the boxes occupy a much smaller area of the full image than person boxes do, which makes them much harder to localize. From (b), the aspect ratios of the newly annotated objects are more spread out than those of person boxes, meaning that our objects have wider variations.

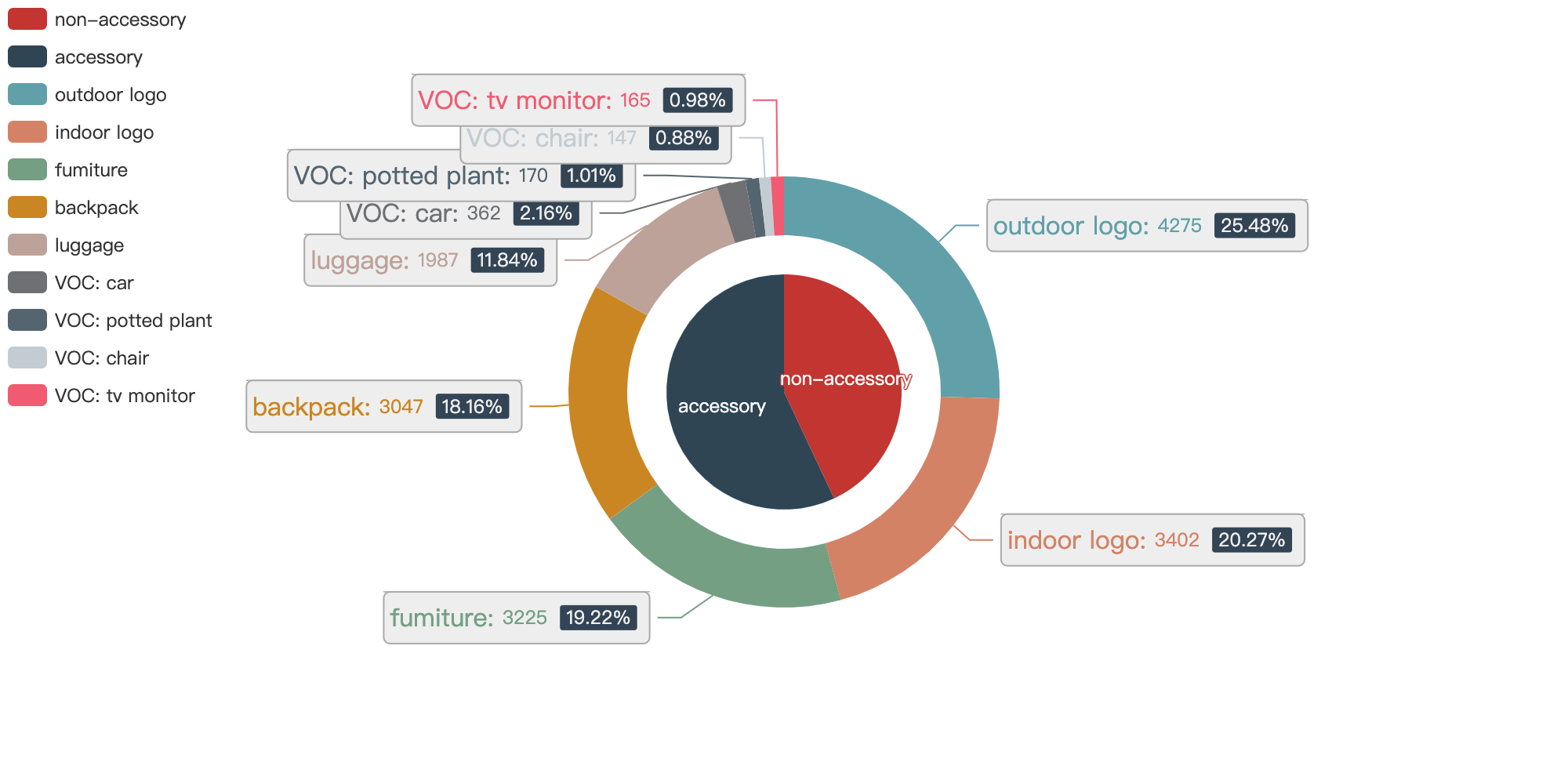

- Category statistics of the two annotated datasets are shown below. There is only a small portion of VOC predefined categories.
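The normalized area and aspect ratio discussed above can be computed per box roughly as follows. This is a sketch: `box_statistics` is a hypothetical helper, the image width and height must be obtained separately (e.g. from the image files), and taking the aspect ratio as width / height is an assumption, since the README does not state the convention behind the plots.

```python
from typing import Tuple


def box_statistics(left: float, top: float, right: float, bottom: float,
                   img_w: float, img_h: float) -> Tuple[float, float]:
    """Return (normalized area, aspect ratio) for one box.

    Assumptions: normalized area = box area / image area, and aspect ratio is
    width / height.
    """
    w, h = right - left, bottom - top
    if w <= 0 or h <= 0 or img_w <= 0 or img_h <= 0:
        raise ValueError("box and image must have positive width and height")
    return (w * h) / (img_w * img_h), w / h
```

Collecting these two values over all gallery boxes and plotting their histograms would give distributions of the kind compared against person boxes above.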

Given a query image with a bounding box, an instance search algorithm should produce multiple predicted boxes (with ranking scores) on the gallery frames. A prediction is regarded as a true positive only if it has sufficient overlap (IoU > 0.5) with a ground-truth bounding box that has the same ID as the query.
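A minimal sketch of this true-positive rule, assuming boxes in `(left, top, right, bottom)` form and continuous pixel coordinates (no +1 on widths and heights). `iou` and `is_true_positive` are hypothetical names, not the repository's evaluation code. The README does not say how several predictions hitting the same ground-truth box are treated; this sketch tests one prediction at a time, whereas a full evaluator would typically let each ground-truth box be matched at most once.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes in continuous coordinates."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box: Box, pred_image: str, query_id: int,
                     gt: Sequence[Tuple[int, Box, str]],
                     thresh: float = 0.5) -> bool:
    """True if the prediction overlaps (IoU > thresh) a ground-truth box that
    has the query's ID and lies in the same gallery image.

    gt holds (obj_id, box, image_name) tuples, e.g. built from gallery.txt.
    """
    return any(obj_id == query_id and image == pred_image
               and iou(pred_box, box) > thresh
               for obj_id, box, image in gt)
```

The comparison is strict (`>`), matching the "iou > 0.5" wording of the protocol.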

- Metrics
  - mAP: For each query, we calculate the area under the precision-recall curve, known as average precision (AP) [1]. The mean of the APs over all queries gives the mAP.
  - CMC: The Cumulative Matching Characteristic shows the probability that a query object appears in candidate lists of different sizes. The calculation process is described in [1].

- Global gallery for INS-PRW: All gallery scene images are used for each query. The setting is identical to [3].
- Local gallery for INS-CUHK-SYSU: The local gallery distractors are listed in `INS_CUHK_SYSU_local_gallery.txt`. Each query searches a local gallery of 100 scene images (ground truth + local distractors). See [2] for details.
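The two metrics can be sketched from per-query ranked results, assuming each prediction has already been labelled as a true or false positive under the IoU rule stated in the protocol and that the number of ground-truth boxes per query is known. The functions below are hypothetical. AP here is the common non-interpolated estimate (precision averaged over the ranks of the true positives, divided by the number of ground truths); reference implementations such as the one accompanying [1] may interpolate the precision-recall curve differently, so values can differ slightly from the official scripts.

```python
from typing import List, Sequence, Tuple

# One query = (TP flags of its predictions sorted by descending score,
#              number of ground-truth boxes for that query in the gallery)
Query = Tuple[Sequence[bool], int]


def average_precision(tp_flags: Sequence[bool], num_gt: int) -> float:
    """Non-interpolated AP: sum of precision@k over the ranks k of true
    positives, divided by num_gt so unretrieved ground truths count as misses."""
    if num_gt <= 0:
        return 0.0
    hits, total = 0, 0.0
    for rank, is_tp in enumerate(tp_flags, start=1):
        if is_tp:
            hits += 1
            total += hits / rank  # precision at this recall step
    return total / num_gt


def mean_average_precision(queries: Sequence[Query]) -> float:
    """Mean of the per-query APs."""
    if not queries:
        return 0.0
    return sum(average_precision(f, n) for f, n in queries) / len(queries)


def cmc_curve(queries: Sequence[Query], max_rank: int = 10) -> List[float]:
    """cmc[k - 1] = fraction of queries with a true positive in the top-k list."""
    if not queries:
        return [0.0] * max_rank
    counts = [0] * max_rank
    for flags, _ in queries:
        # index of the first correct match, or None if there is none
        first = next((i for i, f in enumerate(flags) if f), None)
        if first is not None and first < max_rank:
            for k in range(first, max_rank):
                counts[k] += 1
    return [c / len(queries) for c in counts]
```

For example, with queries `[([True, False, True], 2), ([False, True], 1)]` the per-query APs are 5/6 and 1/2, the mAP is 2/3, and `cmc_curve(..., max_rank=3)` returns `[0.5, 1.0, 1.0]`.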

We evaluate DELG [4], SiamRPN [5], GlobalTrack [6], and the proposed methods on the INS-CUHK-SYSU and INS-PRW datasets as follows:

| Method | INS-CUHK-SYSU | INS-PRW |
|---|---|---|
| DELG | 2.0(1.2) | 0.0(0.0) |
| SiamRPN | 16.0(14.2) | 0.0(0.0) |
| GlobalTrack | 28.4(27.8) | 0.2(0.2) |
| our baseline | 43.1(42.1) | 18.7(8.5) |
| our selftrain | 49.4(47.4) | 24.2(13.4) |

We use INS-CUHK-SYSU as an example.

Run `sh experiments/baseline_ins.sh` to evaluate on the datasets.

Run `sh experiments/demo.sh` to run instance search on several sample images.

For simplicity, we use only 3 images from `images/self_train_samples` as the self-training source.

Run `sh experiments/gen_query_seed.sh` to prepare the query seed files.

Run `sh experiments/self_train.sh` to start cycle self-training.

@article{zhang2022revisiting,

title={Revisiting instance search: a new benchmark using Cycle Self-Training},

author={Zhang, Yuqi and Liu, Chong and Chen, Weihua and Xu, Xianzhe and Wang, Fan and Li, Hao and Hu, Shiyu and Zhao, Xin},

journal={Neurocomputing},

year={2022},

publisher={Elsevier}

}

[1] Scalable Person Re-identification: A Benchmark

[2] Joint Detection and Identification Feature Learning for Person Search

[3] Person Re-identification in the Wild

[4] Unifying deep local and global features for image search

[5] High Performance Visual Tracking with Siamese Region Proposal Network

[6] GlobalTrack: A Simple and Strong Baseline for Long-term Tracking