Test with artificial user intents!

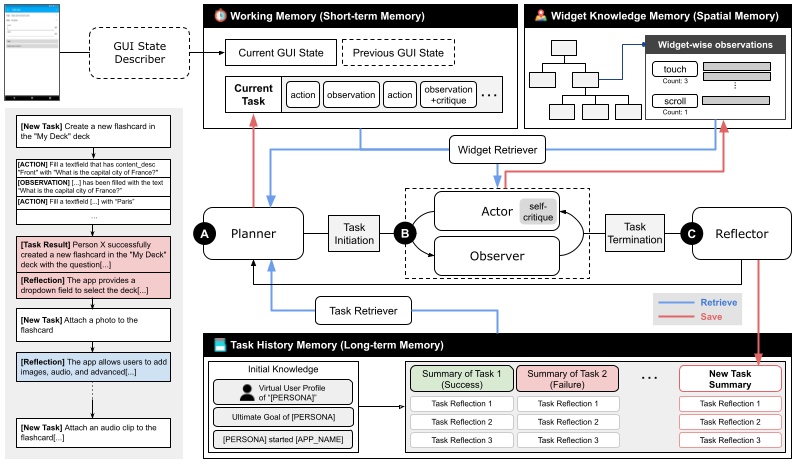

DroidAgent autonomously explores a given application under test (AUT) and produces high-level testing scenarios along with executable test scripts (currently UIAutomator2 scripts). It is built as a modular framework driven by multiple LLM instances.

DroidAgent requires ADB (Android Debug Bridge) and several Python libraries to be installed.

- At least 16 GB of RAM
- Python 3.10 or later
- Android SDK & ADB installed and configured
  - Install Android Studio
  - Install the command-line tools: Android Studio > Tools > SDK Manager > SDK Tools > Android SDK Command-line Tools (latest)
  - Set up the environment variables (see the official documentation):

        export ANDROID_HOME="[YOUR_HOME_DIRECTORY]/Android/sdk"
        export PATH="$PATH:$ANDROID_HOME/tools:$ANDROID_HOME/platform-tools:$ANDROID_HOME/cmdline-tools/latest/bin"

- An Android device connected or an emulator running
- DroidAgent uses a slightly modified version of DroidBot (included as a submodule)
- OpenAI key: rename `.env.example` to `.env` and add your own OpenAI API key
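
The requirements above can be checked with a small script before installing. This is a minimal sketch, not part of DroidAgent: `check_prerequisites` and `parse_adb_devices` are hypothetical helper names, and the script assumes it is run from the repository root where `.env` should live (it only checks that the file exists, not its contents). Device detection relies on the standard `adb devices` output, where ready devices are listed with the state `device`.

```python
import os
import shutil
import subprocess
import sys
from pathlib import Path


def parse_adb_devices(output: str) -> list[str]:
    """Return serials from `adb devices` output whose state is 'device' (ready)."""
    serials = []
    for line in output.splitlines():
        parts = line.split()
        # The "List of devices attached" header and daemon messages never have
        # "device" as their second field, so they are skipped naturally.
        if len(parts) >= 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def check_prerequisites(repo_root: Path = Path(".")) -> list[str]:
    """Collect human-readable problems; an empty list means the basics look fine."""
    problems = []
    if sys.version_info < (3, 10):
        problems.append(f"Python >= 3.10 required, found {sys.version.split()[0]}")
    if not os.environ.get("ANDROID_HOME"):
        problems.append("ANDROID_HOME is not set")
    if shutil.which("adb") is None:
        problems.append("adb not found on PATH")
    else:
        out = subprocess.run(["adb", "devices"], capture_output=True, text=True).stdout
        if not parse_adb_devices(out):
            problems.append("no Android device or emulator is connected")
    if not (repo_root / ".env").is_file():
        problems.append(".env not found (rename .env.example and add your OpenAI API key)")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("[missing]", problem)
```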

$ git clone --recurse-submodules https://github.com/coinse/droidagent.git

$ cd droidagent

$ cd droidbot

$ pip install -e . # install droidbot

$ cd ..

$ pip install -r requirements.txt

$ pip install -e . # install droidagent

- Make sure that your Android device is connected or an emulator is running.
- Place the APK file of the application under test (AUT) in the `target_apps` directory, named `[APP_NAME].apk`.
- Run the following command in the `script` directory (add the `--is_emulator` option if you are using an emulator):

$ cd script

$ python run_droidagent.py --app [APP_NAME] --output_dir [OUTPUT_DIR] --is_emulator

Example: `python run_droidagent.py --app AnkiDroid --output_dir ../evaluation/data_new/AnkiDroid --is_emulator`

Depending on your needs, you can guide the testing process with a persona (a set of user characteristics) and a set of intents (a set of user goals).
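
If you launch many runs, the same command can be assembled from Python. This is a minimal sketch: `build_run_command` and `launch_droidagent` are hypothetical names, not part of DroidAgent, and they use only the flags documented in this README, including the optional `--profile_id` persona option described in the list below.

```python
import subprocess
import sys
from pathlib import Path
from typing import Optional


def build_run_command(app: str, output_dir: str, is_emulator: bool = False,
                      profile_id: Optional[str] = None) -> list[str]:
    """Assemble the run_droidagent.py argument list from the documented flags."""
    cmd = [sys.executable, "run_droidagent.py", "--app", app, "--output_dir", output_dir]
    if profile_id is not None:
        # Name of a persona file placed in resources/persona.
        cmd += ["--profile_id", profile_id]
    if is_emulator:
        cmd.append("--is_emulator")
    return cmd


def launch_droidagent(script_dir: Path, **kwargs) -> int:
    """Run DroidAgent with `script_dir` as the working directory; return the exit code."""
    return subprocess.run(build_run_command(**kwargs), cwd=script_dir).returncode
```

For example, `launch_droidagent(Path("script"), app="AnkiDroid", output_dir="../evaluation/data_new/AnkiDroid", is_emulator=True)` mirrors the example command above; relative output paths are resolved from `script_dir`.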

- You can add a custom persona by adding a new `.txt` file to the `resources/persona` directory and passing the file name as the `--profile_id` argument to `run_droidagent.py`.
- You can adjust the goal by modifying the following lines in `run_droidagent.py` (we plan to make this easily configurable in the future):

      persona.update({
          'ultimate_goal': 'visit as many pages as possible while trying their core functionalities',
          # 'ultimate_goal': 'check whether the app supports interactions between multiple users',  # for QuickChat case study
          'initial_knowledge': initial_knowledge_map(args.app, persona_name, app_name),
      })

DroidAgent supports generating the corresponding UIAutomator2 scripts from the exploration history. In the `script` directory, run the following command:

$ python make_script.py --result_dir [RESULT_DIR] --project [PROJECT_NAME] --package_name [PACKAGE_NAME]

Example: `python make_script.py --project AnkiDroid --package_name com.ichi2.anki --result_dir ../evaluation/data/AnkiDroid`

`[RESULT_DIR]` should point to the `[OUTPUT_DIR]` you used in the previous step. Refer to `evaluation/package_name_map.json` to find the package names of the AUTs used in our evaluation. The replay script will be generated in the `gen_tests` directory; see the `gen_test_examples` directory for example outputs.
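
Because `[RESULT_DIR]` is simply the `[OUTPUT_DIR]` of the exploration run, both steps can share one variable. A sketch under stated assumptions: `lookup_package` and `build_make_script_command` are hypothetical helpers, and the lookup assumes `package_name_map.json` is a flat JSON object mapping app names (e.g. `AnkiDroid`) to package names (e.g. `com.ichi2.anki`); check the actual file before relying on this. The command uses the `--package_name` spelling from the example above.

```python
import json
import sys
from pathlib import Path


def lookup_package(app: str, map_path: Path = Path("../evaluation/package_name_map.json")) -> str:
    """Look up an AUT's package name, ASSUMING the JSON maps app name -> package name."""
    with open(map_path, encoding="utf-8") as f:
        return json.load(f)[app]


def build_make_script_command(output_dir: str, project: str, package: str) -> list[str]:
    """Build the make_script.py call; --result_dir reuses run_droidagent.py's --output_dir."""
    return [sys.executable, "make_script.py",
            "--result_dir", output_dir,
            "--project", project,
            "--package_name", package]
```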

Note that the scripts are not guaranteed to be fully reproducible because the AUT may be flaky, but they can be further processed (e.g., by adding exception handling manually) to build a robust regression test suite.
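
One way to harden a generated script against flakiness is to wrap each replayed step in a retry loop. This generic sketch is not produced by `make_script.py`; `retry_step` is a hypothetical helper, and the uiautomator2 usage in the comments assumes a button labelled "Add" exists in the AUT.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_step(action: Callable[[], T], attempts: int = 3, delay: float = 1.0,
               exceptions: Tuple[Type[BaseException], ...] = (Exception,)) -> T:
    """Run one replay step, retrying on the given exceptions before giving up."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except exceptions:
            if attempt == attempts:
                raise  # out of retries: surface the original error
            time.sleep(delay)  # give the UI time to settle before retrying
    raise AssertionError("unreachable")


# Example use inside a generated UIAutomator2 script (hypothetical step):
# import uiautomator2 as u2
# d = u2.connect()                            # connect to the device/emulator
# retry_step(lambda: d(text="Add").click())   # "Add" is a hypothetical button label
```

Retrying only smooths over transient timing issues; a step that fails consistently still raises, which keeps genuine regressions visible.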

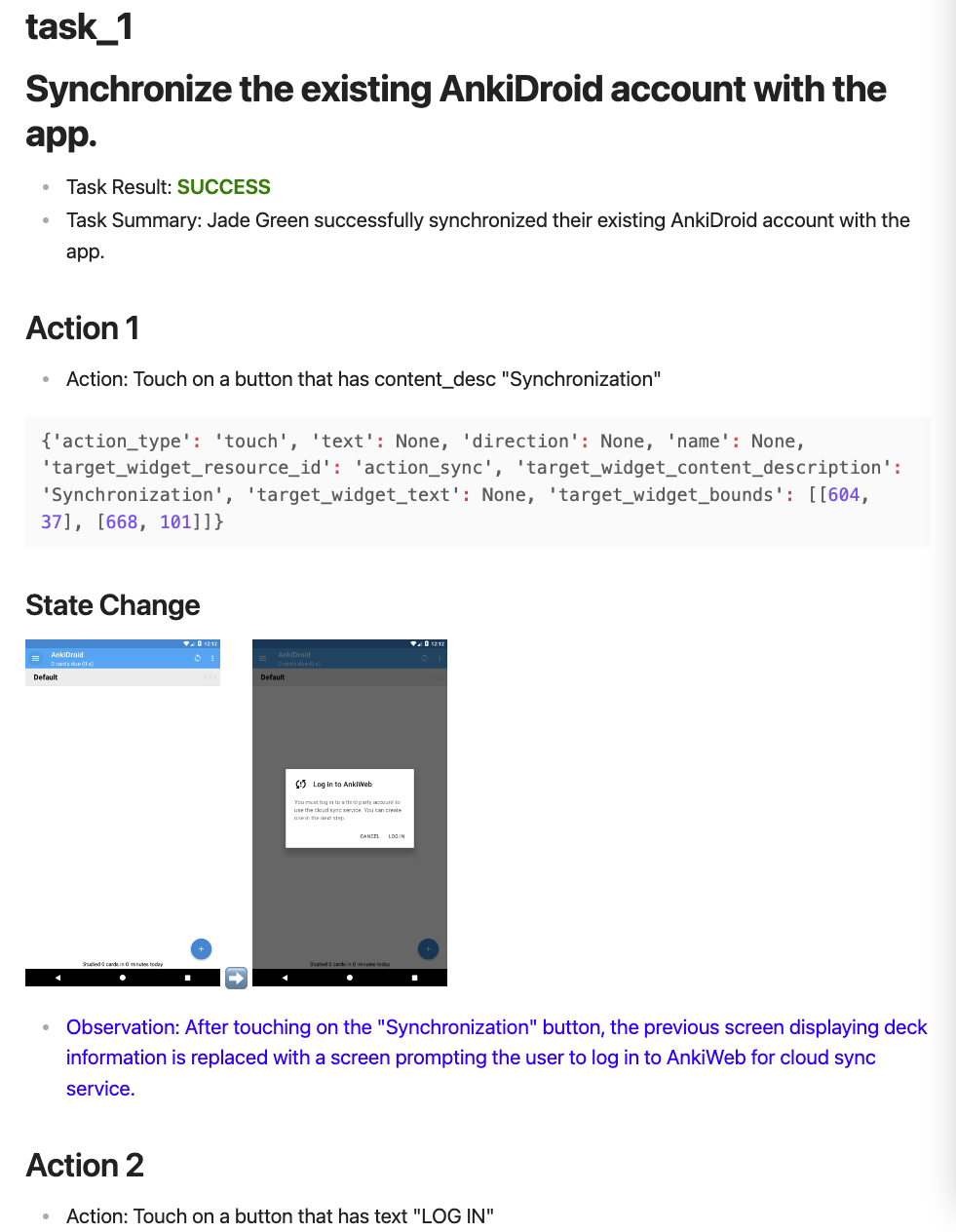

We provide a script that produces a Markdown report of the tasks DroidAgent generated during the testing process. Run the following command in the `script` directory:

$ python make_report.py --result_dir [RESULT_DIR] --project [PROJECT_NAME]

Example: `python make_report.py --project AnkiDroid --result_dir ../evaluation/data/AnkiDroid`

`[RESULT_DIR]` should point to the `[OUTPUT_DIR]` as well.

The task-by-task Markdown report will be generated in the `reports/[PROJECT_NAME]` directory. Each report contains the task description, the performed GUI actions, and the observed application state after the task is executed, with screenshots.
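
For illustration only, one task entry in such a report could be rendered as below. The `TaskRecord` fields are a hypothetical data shape mirroring the contents listed above (description, GUI actions, observation, screenshots); the actual layout produced by `make_report.py` may differ.

```python
from dataclasses import dataclass, field


@dataclass
class TaskRecord:
    """Hypothetical shape of one task; the real make_report.py data may differ."""
    description: str
    actions: list[str]
    observation: str
    screenshots: list[str] = field(default_factory=list)


def render_task_report(index: int, task: TaskRecord) -> str:
    """Render one task as a Markdown section: description, actions, observation, screenshots."""
    lines = [f"## Task {index}: {task.description}", "", "### Performed GUI actions", ""]
    lines += [f"{i}. {action}" for i, action in enumerate(task.actions, start=1)]
    lines += ["", "### Observation", "", task.observation]
    for path in task.screenshots:
        lines += ["", f"![screenshot]({path})"]
    return "\n".join(lines) + "\n"
```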

Reports on the evaluation data: to be published on a website (TBD).

To replicate the evaluation results for the paper "Intent-Driven Android GUI Testing with Autonomous Large Language Model Agents", follow these steps:

- Download the evaluation data from the links below (these point to Google Drive uploads from an anonymised account).
- Place the DroidAgent data in the `evaluation/data` directory.
- Place the baseline data in the `evaluation/baselines` directory.
- Place the ablation data in the `evaluation/ablation` directory.
- Run the notebooks in the `evaluation/notebooks/` directory to replicate the results for each of our research questions.
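
Before running the notebooks, it can help to confirm that the data landed in the expected places. A minimal sketch; `missing_evaluation_dirs` is a hypothetical helper that only checks that each directory named in the steps above exists and is non-empty, not that its contents are complete.

```python
from pathlib import Path

# Directories named in the replication steps, relative to the repository root.
EXPECTED_DIRS = ("data", "baselines", "ablation", "notebooks")


def missing_evaluation_dirs(repo_root: Path) -> list[str]:
    """Return the evaluation/ subdirectories that are absent or empty."""
    missing = []
    for name in EXPECTED_DIRS:
        path = repo_root / "evaluation" / name
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(f"evaluation/{name}")
    return missing
```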

- If you get an sqlite3 version-related error message from the ChromaDB dependency, follow the instructions on building pysqlite3 from source in the ChromaDB documentation.
- In short:
  - `pip install pysqlite3-binary`
  - Add the following lines at the top of `~/<your_python_directory>/lib/python3.10/site-packages/chromadb/__init__.py`:

            __import__('pysqlite3')
            import sys
            sys.modules['sqlite3'] = sys.modules.pop('pysqlite3')
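
As an alternative to editing the installed `chromadb` package, the same module swap can be done at the top of your own entry-point script. This is a sketch of that variant (the helper name `prefer_pysqlite3` is hypothetical); it only takes effect if it runs before `chromadb` is first imported in the process, and editing `__init__.py` as described above remains the route documented here.

```python
import importlib
import sys


def prefer_pysqlite3() -> bool:
    """Make later `import sqlite3` statements resolve to pysqlite3, if it is installed.

    Must run before chromadb (or anything else that uses sqlite3) is imported.
    Returns True if the swap happened, False if pysqlite3 is not available.
    """
    try:
        importlib.import_module("pysqlite3")
    except ImportError:
        return False
    # Same swap as the three lines above: register pysqlite3 under the name sqlite3.
    sys.modules["sqlite3"] = sys.modules.pop("pysqlite3")
    return True


if __name__ == "__main__":
    swapped = prefer_pysqlite3()
    import sqlite3  # resolves to pysqlite3 if the swap happened

    print("using pysqlite3:", swapped, "| SQLite version:", sqlite3.sqlite_version)
```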