Jitesh Jain, Jiachen Li†, MangTik Chiu†, Ali Hassani, Nikita Orlov, Humphrey Shi

† Equal Contribution

[Project Page] [arXiv] [pdf] [BibTeX]

This repo contains the code for our paper OneFormer: One Transformer to Rule Universal Image Segmentation.

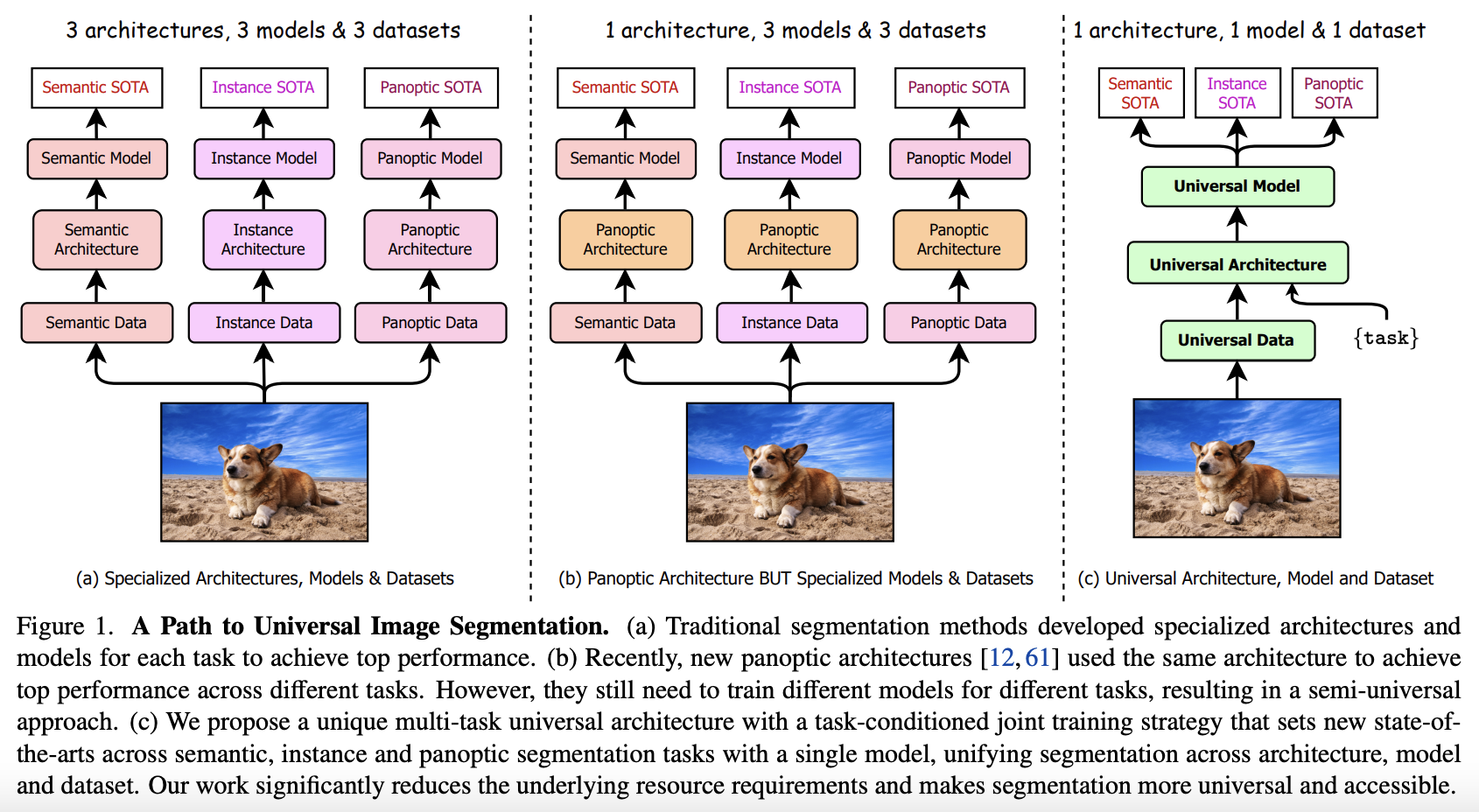

- OneFormer is the first multi-task universal image segmentation framework based on transformers.

- OneFormer needs to be trained only once, with a single universal architecture, a single model, and a single dataset, to outperform existing frameworks across semantic, instance, and panoptic segmentation tasks.

- OneFormer uses a task-conditioned joint training strategy: it uniformly samples different ground truth domains (semantic, instance, or panoptic) and derives all labels from panoptic annotations to train its multi-task model.

- OneFormer uses a task token to condition the model on the task in focus, making our architecture task-guided for training, and task-dynamic for inference, all with a single model.
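The joint training strategy and task token described above can be sketched in a few lines of Python. This is a minimal illustration, not the repository's data loader: the segment dictionary keys (`category`, `is_thing`, `pixels`), the function names, and the `"the task is {task}"` text template are assumptions made for the example.

```python
import random

TASKS = ("semantic", "instance", "panoptic")


def derive_targets(panoptic_segments, task):
    """Derive task-specific ground truth from panoptic segments.

    Each segment is a dict with hypothetical keys:
    "category" (str), "is_thing" (bool), "pixels" (set of pixel indices).
    """
    if task == "panoptic":
        # Keep every segment as annotated.
        return [(s["category"], set(s["pixels"])) for s in panoptic_segments]
    if task == "instance":
        # Keep only countable "thing" segments, one mask per instance.
        return [(s["category"], set(s["pixels"]))
                for s in panoptic_segments if s["is_thing"]]
    if task == "semantic":
        # Merge all segments of the same category into one mask per class.
        merged = {}
        for s in panoptic_segments:
            merged.setdefault(s["category"], set()).update(s["pixels"])
        return sorted(merged.items())
    raise ValueError(f"unknown task: {task!r}")


def make_training_sample(panoptic_segments, rng):
    """Sample a task uniformly and build the matching targets and task text."""
    task = rng.choice(TASKS)
    return {
        "task": task,
        # Assumed template for the text that conditions the model on the task.
        "task_text": f"the task is {task}",
        "targets": derive_targets(panoptic_segments, task),
    }


segments = [
    {"category": "car", "is_thing": True, "pixels": {0, 1}},
    {"category": "car", "is_thing": True, "pixels": {2}},
    {"category": "road", "is_thing": False, "pixels": {3, 4}},
]
sample = make_training_sample(segments, random.Random(0))
```

Because every label set comes from the same panoptic annotation, a single dataset is enough to supervise all three tasks; only the sampled task and its text change between training samples.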

- [February 27, 2023]: OneFormer is accepted to CVPR 2023!

- [January 26, 2023]: OneFormer sets new SOTA performance on the Mapillary Vistas val (both panoptic & semantic segmentation) and Cityscapes test (panoptic segmentation) sets. We’ve released the checkpoints too!

- [January 19, 2023]: OneFormer is now available as a part of the 🤗 Hugging Face transformers library and model hub! 🚀

- [December 26, 2022]: Checkpoints for Swin-L OneFormer and DiNAT-L OneFormer trained on ADE20K with 1280×1280 resolution released!

- [November 23, 2022]: Roboflow covers OneFormer on YouTube! Thanks to @SkalskiP for making the video!

- [November 18, 2022]: Our demo is available on 🤗 Hugging Face Spaces!

- [November 10, 2022]: Project Page, ArXiv Preprint and GitHub Repo are public!

- OneFormer sets new SOTA on Cityscapes val with single-scale inference on Panoptic Segmentation with 68.5 PQ score and Instance Segmentation with 46.7 AP score!

- OneFormer sets new SOTA on ADE20K val on Panoptic Segmentation with 51.5 PQ score and on Instance Segmentation with 37.8 AP!

- OneFormer sets new SOTA on COCO val on Panoptic Segmentation with 58.0 PQ score!

- We use Python 3.8, PyTorch 1.10.1 (CUDA 11.3 build).

- We use Detectron2-v0.6.

- For complete installation instructions, please see INSTALL.md.

- We experiment on three major benchmark datasets: ADE20K, Cityscapes and COCO 2017.

- Please see Preparing Datasets for OneFormer for complete instructions for preparing the datasets.

- We train all our models using 8 A6000 (48 GB each) GPUs.

- We use 8 A100 GPUs (80 GB each) for training Swin-L† OneFormer and DiNAT-L† OneFormer on COCO and all models with a ConvNeXt-XL† backbone. We also train the 896×896 models on ADE20K on 8 A100 GPUs.

- Please see Getting Started with OneFormer for training commands.

- Please see Getting Started with OneFormer for evaluation commands.

- We provide quick-to-run demos on Colab and Hugging Face Spaces.

- Please see OneFormer Demo for command line instructions on running the demo.
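Besides the hosted demos, the Hugging Face transformers integration mentioned in the news list can be used for a quick local test. The following is a minimal sketch, assuming `torch`, `transformers`, and `Pillow` are installed; the checkpoint name and the `segment` helper are assumptions for illustration, and any OneFormer checkpoint from the model hub should work in its place.

```python
# Assumed checkpoint name; swap in any OneFormer checkpoint from the model hub.
CHECKPOINT = "shi-labs/oneformer_ade20k_swin_tiny"

# Maps each task to the processor method that decodes the model output.
POST_PROCESS = {
    "semantic": "post_process_semantic_segmentation",
    "instance": "post_process_instance_segmentation",
    "panoptic": "post_process_panoptic_segmentation",
}


def segment(image, task="panoptic", checkpoint=CHECKPOINT):
    """Run one OneFormer checkpoint on a PIL image for the requested task."""
    if task not in POST_PROCESS:
        raise ValueError(f"unknown task: {task!r}")

    # Imported lazily so this module loads without the heavy dependencies.
    import torch
    from transformers import (
        OneFormerForUniversalSegmentation,
        OneFormerProcessor,
    )

    processor = OneFormerProcessor.from_pretrained(checkpoint)
    model = OneFormerForUniversalSegmentation.from_pretrained(checkpoint)
    model.eval()

    # The same weights serve all three tasks; only the task input changes.
    inputs = processor(images=image, task_inputs=[task], return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)

    # PIL reports (width, height); the post-processors expect (height, width).
    decode = getattr(processor, POST_PROCESS[task])
    return decode(outputs, target_sizes=[image.size[::-1]])[0]
```

Calling `segment(Image.open("example.jpg"), task="semantic")` (with a hypothetical image path) returns a per-pixel class map, while the `"instance"` and `"panoptic"` tasks return a dictionary holding a segmentation map and per-segment metadata.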

- † denotes the backbones were pretrained on ImageNet-22k.

- Pre-trained models can be downloaded following the instructions given under tools.

| Method | Backbone | Crop Size | PQ | AP | mIoU (s.s) | mIoU (ms+flip) | #params | config | Checkpoint |
|---|---|---|---|---|---|---|---|---|---|
| OneFormer | Swin-L† | 640×640 | 49.8 | 35.9 | 57.0 | 57.7 | 219M | config | model |
| OneFormer | Swin-L† | 896×896 | 51.1 | 37.6 | 57.4 | 58.3 | 219M | config | model |
| OneFormer | Swin-L† | 1280×1280 | 51.4 | 37.8 | 57.0 | 57.7 | 219M | config | model |
| OneFormer | ConvNeXt-L† | 640×640 | 50.0 | 36.2 | 56.6 | 57.4 | 220M | config | model |
| OneFormer | DiNAT-L† | 640×640 | 50.5 | 36.0 | 58.3 | 58.4 | 223M | config | model |
| OneFormer | DiNAT-L† | 896×896 | 51.2 | 36.8 | 58.1 | 58.6 | 223M | config | model |
| OneFormer | DiNAT-L† | 1280×1280 | 51.5 | 37.1 | 58.3 | 58.7 | 223M | config | model |
| OneFormer (COCO-Pretrained) | DiNAT-L† | 1280×1280 | 53.4 | 40.2 | 58.4 | 58.8 | 223M | config | model / pretrained |
| OneFormer | ConvNeXt-XL† | 640×640 | 50.1 | 36.3 | 57.4 | 58.8 | 372M | config | model |

| Method | Backbone | PQ | AP | mIoU (s.s) | mIoU (ms+flip) | #params | config | Checkpoint |
|---|---|---|---|---|---|---|---|---|
| OneFormer | Swin-L† | 67.2 | 45.6 | 83.0 | 84.4 | 219M | config | model |
| OneFormer | ConvNeXt-L† | 68.5 | 46.5 | 83.0 | 84.0 | 220M | config | model |
| OneFormer (Mapillary Vistas-Pretrained) | ConvNeXt-L† | 70.1 | 48.7 | 84.6 | 85.2 | 220M | config | model / pretrained |
| OneFormer | DiNAT-L† | 67.6 | 45.6 | 83.1 | 84.0 | 223M | config | model |
| OneFormer | ConvNeXt-XL† | 68.4 | 46.7 | 83.6 | 84.6 | 372M | config | model |
| OneFormer (Mapillary Vistas-Pretrained) | ConvNeXt-XL† | 69.7 | 48.9 | 84.5 | 85.8 | 372M | config | model / pretrained |

| Method | Backbone | PQ | PQ<sup>Th</sup> | PQ<sup>St</sup> | AP | mIoU | #params | config | Checkpoint |
|---|---|---|---|---|---|---|---|---|---|
| OneFormer | Swin-L† | 57.9 | 64.4 | 48.0 | 49.0 | 67.4 | 219M | config | model |
| OneFormer | DiNAT-L† | 58.0 | 64.3 | 48.4 | 49.2 | 68.1 | 223M | config | model |

| Method | Backbone | PQ | mIoU (s.s) | mIoU (ms+flip) | #params | config | Checkpoint |
|---|---|---|---|---|---|---|---|
| OneFormer | Swin-L† | 46.7 | 62.9 | 64.1 | 219M | config | model |
| OneFormer | ConvNeXt-L† | 47.9 | 63.2 | 63.8 | 220M | config | model |
| OneFormer | DiNAT-L† | 47.8 | 64.0 | 64.9 | 223M | config | model |

If you found OneFormer useful in your research, please consider starring ⭐ us on GitHub and citing 📚 us in your research!

@inproceedings{jain2023oneformer,

title={{OneFormer: One Transformer to Rule Universal Image Segmentation}},

author={Jitesh Jain and Jiachen Li and MangTik Chiu and Ali Hassani and Nikita Orlov and Humphrey Shi},

journal={CVPR},

year={2023}

}

We thank the authors of Mask2Former, GroupViT, and Neighborhood Attention Transformer for releasing their helpful codebases.