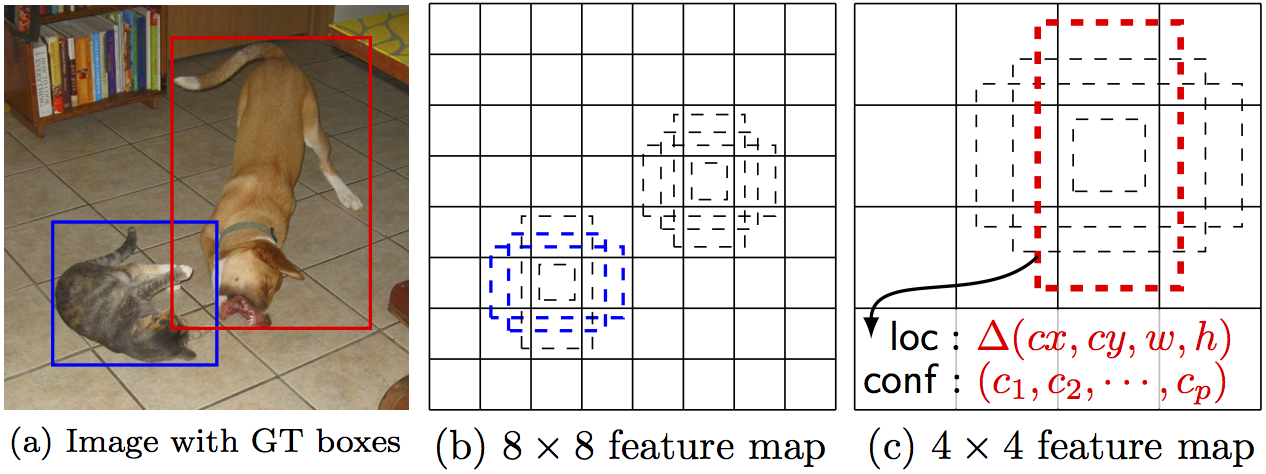

SSD: Single Shot MultiBox Detector

By Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, Alexander C. Berg.

Introduction

SSD is a unified framework for object detection with a single network. You can use the code to train and evaluate a network for object detection. For more details, please refer to our arXiv paper.

| System | VOC2007 test mAP | FPS (Titan X) | Number of Boxes |
|---|---|---|---|
| Faster R-CNN (VGG16) | 73.2 | 7 | 300 |
| Faster R-CNN (ZF) | 62.1 | 17 | 300 |
| YOLO | 63.4 | 45 | 98 |
| Fast YOLO | 52.7 | 155 | 98 |
| SSD300 (VGG16) | 72.1 | 58 | 7308 |
| SSD300 (VGG16, cuDNN v5) | 72.1 | 72 | 7308 |
| SSD500 (VGG16) | 75.1 | 23 | 20097 |
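The "Number of Boxes" column can be reproduced with simple arithmetic. The sketch below assumes a set of square feature-map sizes and a number of boxes per location for each prediction layer; these layer settings are an assumption for illustration and are not stated in this README. Under that assumption, the per-layer products sum to the figures in the table.

```python
# Assumed (feature_map_side, boxes_per_location) pairs for each prediction layer.
# These values are illustrative assumptions, not taken from this README.
SSD300_LAYERS = [(38, 3), (19, 6), (10, 6), (5, 6), (3, 6), (1, 6)]
SSD500_LAYERS = [(63, 3), (32, 6), (16, 6), (8, 6), (4, 6), (2, 6), (1, 6)]
YOLO_GRID = [(7, 2)]  # assumed 7x7 grid with 2 boxes per cell


def count_boxes(layers):
    """Sum side * side * boxes_per_location over all prediction layers."""
    return sum(side * side * boxes for side, boxes in layers)


if __name__ == "__main__":
    for name, layers in [("SSD300", SSD300_LAYERS),
                         ("SSD500", SSD500_LAYERS),
                         ("YOLO", YOLO_GRID)]:
        print("%s: %d boxes" % (name, count_boxes(layers)))
```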

Citing SSD

Please cite SSD in your publications if it helps your research:

    @article{liu15ssd,
      Title = {{SSD}: Single Shot MultiBox Detector},
      Author = {Liu, Wei and Anguelov, Dragomir and Erhan, Dumitru and Szegedy, Christian and Reed, Scott and Fu, Cheng-Yang and Berg, Alexander C.},
      Journal = {arXiv preprint arXiv:1512.02325},
      Year = {2015}
    }

Windows Setup

This branch of Caffe extends BVLC-led Caffe by adding Windows support and other functionality commonly used by Microsoft's researchers, such as a managed-code wrapper, Faster-RCNN, and R-FCN.

Contact: Kenneth Tran (ktran@microsoft.com)

Caffe


Requirements: Visual Studio 2013

Pre-Build Steps

Copy .\windows\CommonSettings.props.example to .\windows\CommonSettings.props

By default, the Windows build requires the CUDA and cuDNN libraries.

Both can be disabled by adjusting build variables in .\windows\CommonSettings.props.

Python support is disabled by default, but can be enabled via .\windows\CommonSettings.props as well.

3rd party dependencies required by Caffe are automatically resolved via NuGet.

CUDA

Download CUDA Toolkit 7.5 from the NVIDIA website.

If you don't have CUDA installed, you can experiment with a CPU_ONLY build.

In .\windows\CommonSettings.props set CpuOnlyBuild to true and set UseCuDNN to false.
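If you prefer to script this change rather than edit the file by hand, the following is a minimal sketch. It assumes that each setting appears in the property file as a simple `<Name>value</Name>` element; the `SAMPLE_PROPS` excerpt and the `set_props_flags` helper are hypothetical and only illustrate the idea.

```python
import re

# Hypothetical excerpt; the real CommonSettings.props may contain more elements.
SAMPLE_PROPS = """<PropertyGroup Label="UserMacros">
    <CpuOnlyBuild>false</CpuOnlyBuild>
    <UseCuDNN>true</UseCuDNN>
</PropertyGroup>"""


def set_props_flags(props_text, flags):
    """Return props_text with each <Name>...</Name> element set to true/false."""
    for name, enabled in flags.items():
        pattern = r"(<{0}>)[^<]*(</{0}>)".format(re.escape(name))
        value = "true" if enabled else "false"
        props_text, hits = re.subn(
            pattern,
            lambda m, v=value: m.group(1) + v + m.group(2),
            props_text)
        if hits == 0:
            # Fail loudly instead of silently leaving the build unchanged.
            raise KeyError("element <%s> not found" % name)
    return props_text


if __name__ == "__main__":
    # CPU-only build: enable CpuOnlyBuild and disable cuDNN.
    print(set_props_flags(SAMPLE_PROPS,
                          {"CpuOnlyBuild": True, "UseCuDNN": False}))
```

To apply it to a real file, read .\windows\CommonSettings.props into a string, pass it through the helper, and write the result back. Keep a backup of the original file.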

cuDNN

Download cuDNN v4 or cuDNN v5 from the NVIDIA website.

Unpack the downloaded zip to %CUDA_PATH% (an environment variable set by the CUDA installer).

Alternatively, you can unpack the zip to any location and set CuDnnPath, which is defined in .\windows\CommonSettings.props, to point to that location.

You can also disable cuDNN by setting UseCuDNN to false in the property file.

Python

To build the Caffe Python wrapper, set PythonSupport to true in .\windows\CommonSettings.props.

Download the Miniconda 2.7 64-bit Windows installer [from the Miniconda website](http://conda.pydata.org/miniconda.html).

Install for all users and add Python to PATH (through the installer).

Run the following commands from an elevated command prompt:

    conda install --yes numpy scipy matplotlib scikit-image pip
    pip install protobuf

Remark

After you have built the solution with Python support, in order to use it you have to either:

- set the PythonPath environment variable to point to <caffe_root>\Build\x64\Release\pycaffe, or
- copy the folder <caffe_root>\Build\x64\Release\pycaffe\caffe under <python_root>\lib\site-packages.
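For a quick experiment within a single script, the effect of the first option can be approximated by extending `sys.path` at runtime. This is only a sketch: the `add_pycaffe_to_path` helper and the `C:\caffe` location are hypothetical, and the import succeeds only after the solution has been built with Python support.

```python
import os
import sys


def add_pycaffe_to_path(caffe_root):
    """Prepend <caffe_root>\\Build\\x64\\Release\\pycaffe to sys.path and return it."""
    pycaffe_dir = os.path.join(caffe_root, "Build", "x64", "Release", "pycaffe")
    if pycaffe_dir not in sys.path:
        sys.path.insert(0, pycaffe_dir)
    return pycaffe_dir


if __name__ == "__main__":
    add_pycaffe_to_path(r"C:\caffe")  # hypothetical <caffe_root>
    try:
        import caffe
        print("pycaffe imported from %s" % caffe.__file__)
    except ImportError as err:
        print("pycaffe is not importable yet: %s" % err)
```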

Matlab

To build the Caffe Matlab wrapper, set MatlabSupport to true and MatlabDir to the root of your Matlab installation in .\windows\CommonSettings.props.

Remark

After you have built the solution with Matlab support, in order to use it you have to:

- add the generated matcaffe folder to the Matlab search path, and
- add <caffe_root>\Build\x64\Release to your system path.

Build

Now, you should be able to build .\windows\Caffe.sln

Installation

- Get the code. We will call the directory that you cloned Caffe into %CAFFE_ROOT%.

      git clone https://github.com/conner99/caffe.git
      cd caffe
      git checkout ssd-microsoft

- Build the code. Please follow the Caffe instructions to install all necessary packages and build it.

Preparation

- Download the fully convolutional reduced (atrous) VGGNet. By default, we assume the model is stored in %CAFFE_ROOT%\models\VGGNet\

- Download the VOC2007 and VOC2012 datasets. By default, we assume the data is stored in %CAFFE_ROOT%\data\VOC0712

      http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar
      http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar
      http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

      # Extract the data manually.
      %CAFFE_ROOT%\data\VOC0712\
        VOC2007\Annotations
        VOC2007\JPEGImages
        VOC2012\Annotations
        VOC2012\JPEGImages

- Create the LMDB file.

      cd %CAFFE_ROOT%
      # Create test_name_size.txt in data\VOC0712\
      .\data\VOC0712\get_image_size.bat
      # You can modify the parameters in create_data.bat if needed.
      # It will create lmdb files for trainval and test with encoded original image:
      #   - %CAFFE_ROOT%\data\VOC0712\trainval_lmdb
      #   - %CAFFE_ROOT%\data\VOC0712\test_lmdb
      .\data\VOC0712\create_data.bat

Train/Eval
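Before launching training, it can help to confirm that the directories described under Preparation exist. The following is a minimal pre-flight sketch; the `missing_voc_dirs` helper is hypothetical, and the directory names are taken from the layout and LMDB paths listed above.

```python
import os

# Sub-directories expected under %CAFFE_ROOT%\data\VOC0712 after Preparation.
EXPECTED_DIRS = [
    os.path.join("VOC2007", "Annotations"),
    os.path.join("VOC2007", "JPEGImages"),
    os.path.join("VOC2012", "Annotations"),
    os.path.join("VOC2012", "JPEGImages"),
    "trainval_lmdb",
    "test_lmdb",
]


def missing_voc_dirs(voc_root):
    """Return the expected sub-directories that do not exist under voc_root."""
    return [d for d in EXPECTED_DIRS
            if not os.path.isdir(os.path.join(voc_root, d))]


if __name__ == "__main__":
    # Falls back to the current directory if CAFFE_ROOT is not set.
    root = os.path.join(os.environ.get("CAFFE_ROOT", "."), "data", "VOC0712")
    missing = missing_voc_dirs(root)
    if missing:
        print("Missing under %s:" % root)
        for d in missing:
            print("  " + d)
    else:
        print("Dataset and LMDB directories look complete.")
```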

- Train your model and evaluate the model on the fly.

      # It will create model definition files and save snapshot models in:
      #   - %CAFFE_ROOT%\models\VGGNet\VOC0712\SSD_300x300\
      # and job file, log file, and the python script in:
      #   - %CAFFE_ROOT%\jobs\VGGNet\VOC0712\SSD_300x300\
      # and save temporary evaluation results in:
      #   - %CAFFE_ROOT%\data\VOC2007\results\SSD_300x300\
      # It should reach 72.* mAP at 60k iterations.
      python examples/ssd/ssd_pascal.py

  If you don't have time to train your model, you can download a pre-trained model instead.

- Evaluate the most recent snapshot.

      # If you would like to test a model you trained, you can do:
      python examples\ssd\score_ssd_pascal.py

- Test your model using a webcam. Note: press Esc to stop.

      # If you would like to attach a webcam to a model you trained, you can do:
      python examples\ssd\ssd_pascal_webcam.py

  Here is a demo video of running an SSD500 model trained on the MSCOCO dataset.

- Check out examples/ssd_detect.ipynb or examples/ssd/ssd_detect.cpp on how to detect objects using an SSD model.

- To train on other datasets, please refer to data/OTHERDATASET for more details. We currently support MSCOCO and ILSVRC2016.
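If you want to see which snapshot counts as "the most recent" before running the evaluation step above, the sketch below picks the file with the highest iteration number in a snapshot directory. It assumes Caffe's usual `<prefix>_iter_<N>.caffemodel` naming; the `latest_snapshot` helper is hypothetical and is not part of the scripts in this repository.

```python
import os
import re

# Matches names such as <prefix>_iter_60000.caffemodel (assumed naming scheme).
SNAPSHOT_RE = re.compile(r"_iter_(\d+)\.caffemodel$")


def latest_snapshot(snapshot_dir):
    """Return (iteration, path) of the newest snapshot, or None if there is none."""
    best = None
    for name in os.listdir(snapshot_dir):
        match = SNAPSHOT_RE.search(name)
        if not match:
            continue
        iteration = int(match.group(1))  # compare numerically, not as strings
        if best is None or iteration > best[0]:
            best = (iteration, os.path.join(snapshot_dir, name))
    return best


if __name__ == "__main__":
    # Snapshot directory from the training step; adjust to your setup.
    model_dir = os.path.join(os.environ.get("CAFFE_ROOT", "."),
                             "models", "VGGNet", "VOC0712", "SSD_300x300")
    if os.path.isdir(model_dir):
        print(latest_snapshot(model_dir))
    else:
        print("No snapshot directory at %s" % model_dir)
```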