Stubborn is an experiment in the field of multi-agent reinforcement learning. The goal of the experiment is to see whether reinforcement learning agents can learn to communicate important information to each other by fighting with each other, even though they are "on the same side". By running the experiment and generating plots with the commands documented below, you can replicate the results shown in our paper. By modifying the environment rules defined in the code, you can extend the experiment to investigate this scenario in different ways.

Stubborn was presented at the Workshop on Rebellion and Disobedience in AI at the International Conference on Autonomous Agents and Multiagent Systems (AAMAS). Read the full paper. Abstract:

Recent research in multi-agent reinforcement learning (MARL) has shown success in learning social behavior and cooperation. Social dilemmas between agents in mixed-sum settings have been studied extensively, but there is little research into social dilemmas in fully cooperative settings, where agents have no prospect of gaining reward at another agent’s expense.

While fully-aligned interests are conducive to cooperation between agents, they do not guarantee it. We propose a measure of "stubbornness" between agents that aims to capture the human social behavior from which it takes its name: a disagreement that is gradually escalating and potentially disastrous. We would like to promote research into the tendency of agents to be stubborn, the reactions of counterpart agents, and the resulting social dynamics.

In this paper we present Stubborn, an environment for evaluating stubbornness between agents with fully-aligned incentives. In our preliminary results, the agents learn to use their partner’s stubbornness as a signal for improving the choices that they make in the environment.
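To make "using a partner's stubbornness as a signal" concrete, one simple way to look at the relationship in logged data is to group turns by the partner's stubbornness and average how often the agent insisted in each group. The sketch below does this with fixed-width bins. It is illustrative only: the record format `(partner_stubbornness, agent_insisted)` and the function `insistence_by_stubbornness` are hypothetical, they are not part of the `stubborn` package, and the code does not compute the paper's stubbornness measure itself.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def insistence_by_stubbornness(
    records: Iterable[tuple[float, bool]], bin_width: float = 1.0
) -> dict[float, float]:
    """Average an agent's insistence rate, grouped by its partner's stubbornness.

    Hypothetical input: each record is a (partner_stubbornness, agent_insisted)
    pair. Stubbornness values are grouped into bins of width `bin_width`, and
    each bin is keyed by its lower edge. The result is sorted by bin.
    """
    if bin_width <= 0:
        raise ValueError("bin_width must be positive")
    totals: dict[float, int] = defaultdict(int)   # turns seen per bin
    insists: dict[float, int] = defaultdict(int)  # turns where the agent insisted
    for stubbornness, insisted in records:
        bin_start = (stubbornness // bin_width) * bin_width
        totals[bin_start] += 1
        insists[bin_start] += int(insisted)
    # Fraction of turns in each bin where the agent insisted.
    return {b: insists[b] / totals[b] for b in sorted(totals)}
```

For example, the records `[(0.0, True), (0.5, True), (1.2, False)]` give `{0.0: 1.0, 1.0: 0.0}`: both turns in the first bin were insistent, and the single turn in the second bin was not.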

Install Stubborn into a fresh virtual environment:

```bash
python3 -m venv "${HOME}/stubborn_env"
source "${HOME}/stubborn_env/bin/activate"
pip3 install stubborn
```

Show the list of commands:

```bash
python -m stubborn --help
```

Show arguments and options for a specific command:

```bash
python -m stubborn run --help
```

Run the Stubborn experiment, training agents and evaluating their performance:

```bash
python3 -m stubborn run
```

There are two plot commands. By default, each of them draws a plot for the most recent run.
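If you prefer to drive the experiment from Python, for example as part of a larger batch of jobs, a minimal sketch can shell out to the same commands with the standard `subprocess` module. It uses only the `run`, `plot-reward` and `plot-insistence` commands documented in this README; the helper names `stubborn_command` and `run_and_plot` are hypothetical and are not part of the `stubborn` package.

```python
import subprocess
import sys


def stubborn_command(*args: str) -> list[str]:
    """Build an argv list that invokes the stubborn CLI with the current interpreter."""
    return [sys.executable, "-m", "stubborn", *args]


def run_and_plot() -> None:
    """Run the experiment, then draw both plots for that run."""
    # Each plot command draws the most recent run by default, so running
    # them right after `run` plots the run that was just made.
    for args in (("run",), ("plot-reward",), ("plot-insistence",)):
        # check=True raises CalledProcessError and stops the sequence
        # if any step exits with a non-zero status.
        subprocess.run(stubborn_command(*args), check=True)
```

Calling `run_and_plot()` from within the activated virtual environment runs the three steps in order. Using `sys.executable` means the commands run under the same interpreter, and therefore the same environment, as the calling script.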

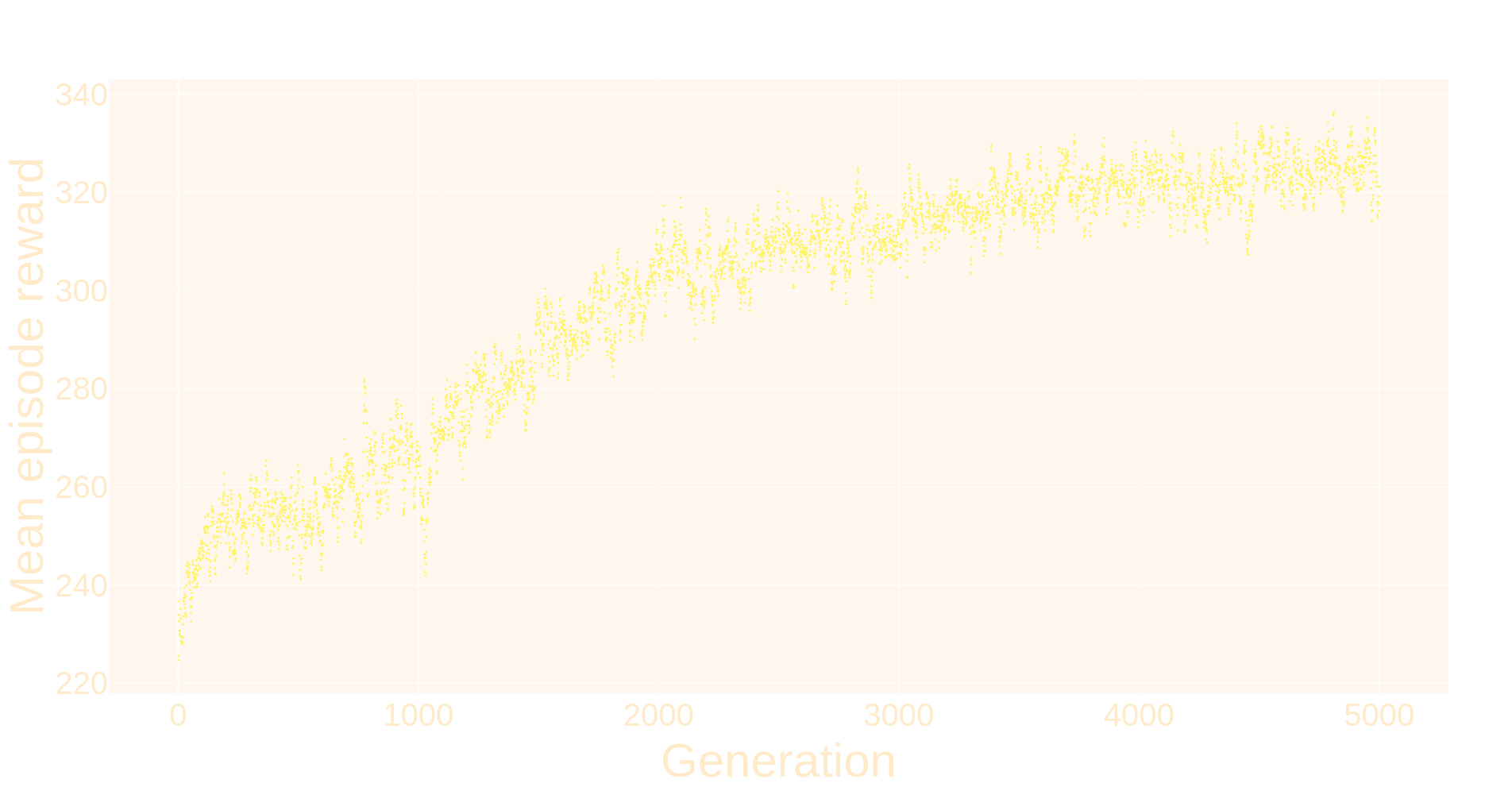

Draw a plot showing the rewards of both agents as they learn:

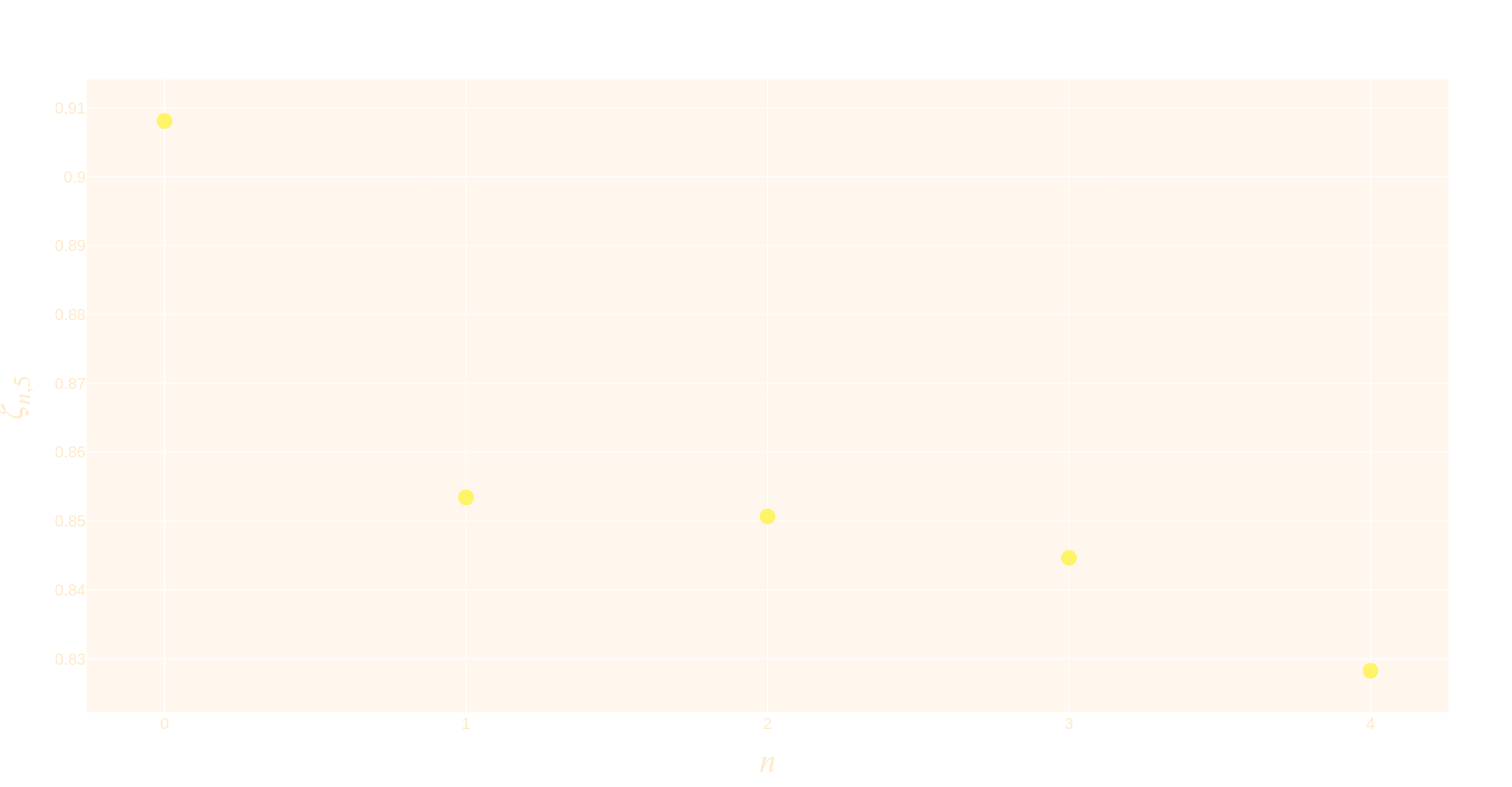

```bash
python3 -m stubborn plot-reward
```

Draw a plot showing the insistence of one agent as a function of the other agent's stubbornness, as defined in the paper:

```bash
python3 -m stubborn plot-insistence
```

If you use Stubborn in your research, please cite the accompanying paper:

```bibtex
@article{Rachum2023Stubborn,
  title={Stubborn: An Environment for Evaluating Stubbornness between Agents with Aligned Incentives},
  author={Rachum, Ram and Nakar, Yonatan and Mirsky, Reuth},
  year={2023},
  journal={Proceedings of the Workshop on Rebellion and Disobedience in AI at The International Conference on Autonomous Agents and Multiagent Systems}
}
```